
Trolley problem and if you'd agree to being run over

Get there early, find the one who tied them there, free them all, give the fat man some diet advice. Buy them all a ticket to the train.

Solved. -

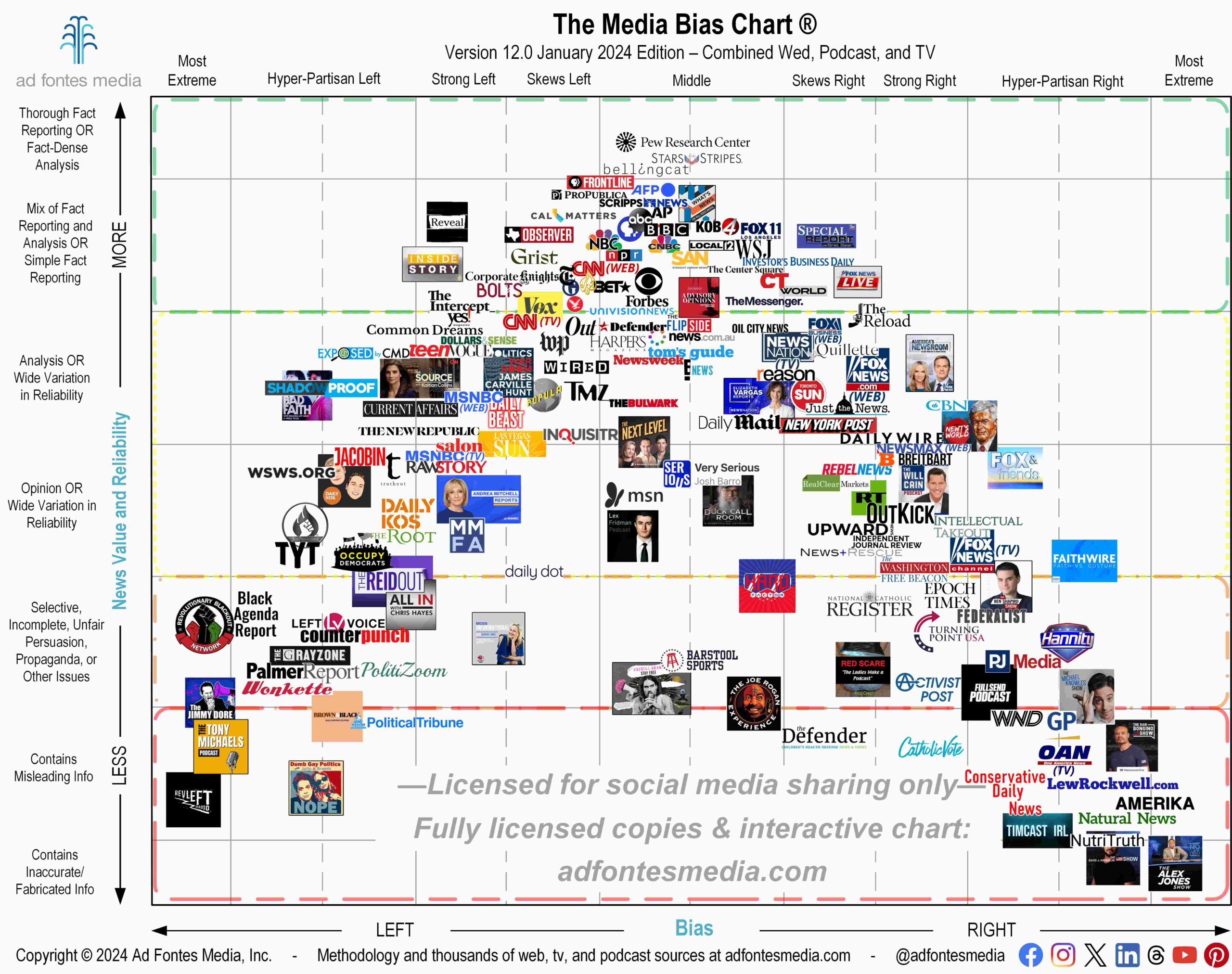

Objective News Viewership.

Begin to at least cast a wider net and be aware of biases and political leanings. This OP borders on, or downright is, propaganda in its low quality.

https://mediabiasfactcheck.com/fox-news-bias/

https://www.allsides.com/media-bias/media-bias-chart

We can draw from different sources for bias checking, but these are the most widespread for now. For scientific rigor around bias checking, machine learning techniques have been developed, but I'm not sure if any are in use at this time. https://www.researchgate.net/publication/362614797_Machine-learning_media_bias -

How Different Are Theism and Atheism as a Starting Point for Philosophy and Ethics?

Here I was thinking the same about you. — Pantagruel

In what way? You either have the broad definition of bias as something that gravitates towards something, or how bias is described in thinking and reasoning, which is what this is about.

The concept that we must put a man on the moon was a bias that flew in the face of current technology (so to speak). The resultant Saturn V project was a monument to the power of human creative thought resulting in countless technological innovations. — Pantagruel

How is that a bias? It was an ambition and goal, how is any of that a bias?

And the Saturn V wasn't enabled by creative thinking. Once again, creative thinking isn't a conclusion, it's an exploration. The conclusions that made Saturn V possible weren't abstractions mounted together into a functioning form; it was creative thinking that guided the journey towards rigid and factually based conclusions, i.e. the final form of Saturn V that functioned did so because of the unbiased end point of that exploration. Creative thinking didn't enable Saturn V to do anything; it was the unbiased science that made it function. Belief doesn't take you to the moon; it was the hard work of unbiased reasoning that enabled it, and that may start with creative thinking but must end without bias, or else that belief will blow the thing up.

So, what biases helped build the Saturn V and make it fly? Creative thinking and ambition or goals aren't cognitive biases. Exploration in itself isn't the knowledge or conclusion. My point is that the journey towards factual conclusions can be filled with creative thinking, but anyone who stops moving towards the conclusions that lie past their biases will find themselves in a blown-up rocket.

Who says that logic and rational reasoning are the sole measure of validity? Again, this is one of your own biases....

towards further and further rigid structures until a solid form of conclusion emerges.

— Christoffer

the exploration of ideas requires going from the abstract to the solid.

— Christoffer

exploratory journey from abstract chaos to solid order

— Christoffer

Again, these are all scientifically biased, with respect to the role that science plays in human existence. To claim that science provides (or can provide) an adequate framework for existence is, number one, not itself a scientific claim. For which reason such perspectives are usually criticized. Which was the original point, that your estimation is itself value-laden, hence typical of the very belief-structure that you reject. — Pantagruel

How are they biases? What are they biases towards?

Would you say that a conclusion that is formed on no solid grounds, that only relies on abstract random ideas, is on equal terms with a conclusion that has been formed by stripping away loose ends and beliefs and has been built on further and further verification and support in evidence?

If not, then the first quote is not a bias or biased in any way; it is an observation of how knowledge is actually acquired. Case in point is the Saturn V rocket. It can start with a creative thought and idea, but you can never have a biased conclusion as the foundation for the rocket's function. Belief has no place on its blueprint.

You conflate exploration with conclusions, that's the problem here. You say something is biased but don't provide any argument that uses the term properly. You use the term in a vague form. Human bias, which in the context of this thread means belief, is an end point that appears before a conclusion in truth. Having a bias towards a belief stops the journey from reaching actual rational conclusions. Belief didn't enable the Saturn V to fly; it was the engineering and math, the conclusions so rigid in their truth that they rhymed with the physical laws of the universe, far beyond any beliefs in our heads.

To claim that science provides (or can provide) an adequate framework for existence is, number one, not itself a scientific claim. — Pantagruel

That's not what this is about. This thread is about what limitations theistic or religious belief places on the process of philosophical thinking and on science.

For which reason such perspectives are usually criticized. Which was the original point, that your estimation is itself value-laden, hence typical of the very belief-structure that you reject. — Pantagruel

Did you understand what I meant by the journey from free thought to rigid and solid conclusions? That's the core of my argument. Because you are conflating the journey with its destination. Belief, ambition, creativity, abstraction or emotion may be the initial state of the Saturn V rocket, but the destination was a machine that could fly us to the moon. You cannot fly a rocket on belief alone, and you cannot design a rocket if you have a bias towards an engineering solution that is simply based on belief.

The journey is not the destination. And in the context of this thread, conflating the journey with the destination is exactly the problem with bias that theists and religious thinkers have: stopping their journey before the destination purely on the belief that they are already there. And when presented with evidence that they're not, they just move a little bit closer, but never arrive until they rid themselves of beliefs and biases. -

How Different Are Theism and Atheism as a Starting Point for Philosophy and Ethics?

I understand your approach. However, as I said, you are generalizing both with respect to belief and bias and, in the human world, knowledge is not exclusively of the scientific kind. — Pantagruel

Since I'm describing a gradual journey from free thought to unbiased conclusions, I don't think it is generalizing at all. Between the two points are numerous positions to hold; it's only the final conclusions that become universalized conclusions from which we build a human knowledge consensus. That end point cannot incorporate a belief treated as axiomatic truth. But the journey to that point consists of many layers of thought and reasoning as well as hypotheses and fiction.

It sounds more like you didn't contemplate what I wrote enough to see these nuances in my argument?

There are types of belief that cannot be reached through bias elimination; but which in fact function through bias-amplification (which could be described as the instantiation of value, which is one way that a bias could be described). — Pantagruel

I think you are applying too many vague definitions of bias within the context of this topic. A human cognitive bias is simply a gravitational pull towards interpretations without a knowledge-based foundation for them. It focuses on emotional influences rather than on logic and rational reasoning.

Any conclusion that produces a foundation for universalized truths cannot incorporate human biases, or at least demands mitigation of any that arise. A concept that does not have such an end point may incorporate biases, but concepts are rarely benefitted by them.

Do you have any examples of concepts that benefit from biases? Or are the biases within those concepts only there as temporary necessities because we've yet to answer the concepts fully? Either by lack of further information or lacking conceptual frameworks.

Any creative human enterprise, for example, goes beyond materialistic-reductive facts to assemble complex fact-value syntheses. It is these artefacts which form the basis of human civilization. And, in fact, science itself is one such construct. Science was discovered through pre-scientific thought, after all. — Pantagruel

But isn't that just part of the journey I described? You are conflating the end point, the final conclusions, with the journey to that point.

As I've described many times now, the journey from free thought, including creative thinking, abstract explorations etc., towards a point of universalized conclusions is a gradual one. The methods go from being free in thought, with no bounds or limitations, towards further and further rigid structures until a solid form of conclusion emerges. Without this journey we cannot form further knowledge. Just like Einstein didn't just straight up write his equations on the chalk board; he used his creative "thought lab" and explored concepts through very abstract thinking, but he manifested them as rational theories through math, and they were later verified through experiments. Almost no notable physicist in history has arrived at a theory by just pure math and cold reasoning based on previous evidence. The exploration of ideas requires going from the abstract to the solid.

And the history of science follows this journey as well. It started maybe as early as the first humans with human cognition looking up at the sun, wondering what that round element of warmth was, creatively forming explanations that, in their lack of unbiased methods, formed the foundation of religion. And through our history as a species we've moved closer and closer to better ways of defining knowledge and truths about the reality around us. The scientific methods of today are much more effective than even ten years ago. We journey further and further towards a scientific system that removes more and more of the influence of human bias on it.

So however we view knowledge and our ability to form it, it always shows that form of exploratory journey from abstract chaos to solid order, through all the gradual steps in between. But if a religious belief stands in the way of that journey, then the person holding those beliefs will have to move their goal posts further and further and eventually rid themselves of that bias towards their beliefs if they are ever to find themselves able to form that final state of knowledge. And even if we can't reach that final stage, it is the act itself of moving towards it that builds our consensus of knowledge, along with the act of mitigating anything in the way that clouds our ability to move forward. If someone stops their journey and settles down with a bias towards a certain belief, as an act of just deciding before the end what the end is, they effectively just choose to not look any further, and that can never lead to final or further knowledge. -

How Different Are Theism and Atheism as a Starting Point for Philosophy and Ethics?

Perspective is essentially a form of bias. — Pantagruel

Yes, but the difference within this topic has to do with the kind of bias that is bound to a belief without grounding in reality. We are biased in certain perspectives, like how we can only see a fraction of all light waves, but that is a bias that we know about and can work around when explaining the nature of light. A bias out of belief rejects accepting it as a bias for the purpose of mitigating it in search of truth, and instead makes that "belief" equal to an "axiomatic truth".

Acknowledging a bias in order to mitigate it in reasoning is not the same as religious belief bias. One is an acceptance of certain limitations while the other is a demand for that specific perspective to be the truth.

Belief systems are the fabric of our human reality. — Pantagruel

Yes, we are pattern recognizers. We only think in relations between objects and concepts. These relations are infused with emotional factors and produce an experience of reality that is utterly skewed towards our hallucinatory rendition of it.

And this is why we have methods to mitigate such biases in order to arrive at concepts that decode reality better than mere human interpretation.

The difference is when these biases are accepted as an axiomatic truth that is part of any unbiased research. And this is why I say that theists and religious thinkers reason with limitations as they do not accept that their religious belief is part of the biases to mitigate.

But as I also wrote, I am convinced that reasoning must have a component of free thought. That it's not about purely biased or unbiased thinking, but that it is a gradient of stepping stones from ignorance to knowledge. We need to start at the biased and abstract, play with concepts and ideas; we need to start in that chaos of free thought to be able to find pathways towards unbiased knowledge. It needs to be a gradual movement, slowly going from that chaos to stripping away bias after bias until we are able to universalize a concept or conclusion. In science, this is done with the methods of math, experiments, verifications, repeatability etc., methods that strip away our biases until we arrive at answers that live and exist outside our human minds.

In religious belief biases, however, it is equivalent to reaching the last gate before truth and being blocked by a guard who won't let you in. The guard will not accept any reasoning or explanations as to why he should open the gate because he has orders to never let you in. Regardless of how ridiculous his reasons are for not letting you in, he won't have it any other way. He is not interested in anything beyond his own emotional reasoning. You will never be able to enter the gate until the guard is gone, but he never leaves. So instead, you end your journey for truth at that point instead of getting rid of the guard. You produce an unfinished concept, a half-truth, in which you agree up to a certain point and then you just let the guard keep repeating his reasoning as the last step, because you can't be bothered to get rid of him. And it doesn't matter if something appears that fundamentally proves the guard wrong; he will just move the gate away from that evidence and say it's still not enough to open the gate, just like how theists and religious thinkers move their goal posts every time science has disproven something that was earlier an accepted truth within that belief.

The problem for theists and religious thinkers is that they cannot move past the last step towards a universalized concept. They are blocked by the guard, their religious belief bias. They won't accept it as something to be mitigated, as is done with spotting biases during scientific research or logical reasoning, but instead they hold onto it so strongly that all they can ever achieve is to produce half-truths and flawed reasoning. And they don't care about their limitations because they are content to just settle down outside the gate with that guard. -

How Different Are Theism and Atheism as a Starting Point for Philosophy and Ethics?

I didn't realize we had a choice in that? Oh wait, we do? Of course. That is the essence of belief.

Of course, if you are saying that we haven't any choice in it, then it can't be a problem or a solution, can it? — Pantagruel

Why can't a factual thing be a seed for a solution? What are you actually implying here? A factual property of our existence is a hint at our functionality in the face of nature. Anything else is an invention; something requiring an addition of an invented concept rather than being what it is. Adhering to what "is" negates belief, as what "is" exists outside of any beliefs. -

How Different Are Theism and Atheism as a Starting Point for Philosophy and Ethics?

Isn't this in fact also a belief, purporting guidance? — Pantagruel

What we deem facts of the world are what we can live by, as honestly as possible, as truth. Our biological nature has parameters that we can measure, and we have statistical data and knowledge about our human psychology that act as our guidance. That isn't belief; that is adhering to how we function as an entity, as an animal within this ecosystem of nature. Belief does not feature evidence; with evidence comes hypothesis, and therefore what I described is hypothesis, not belief. Compared to all else, a hypothesis has more ground than belief.

which is exactly comparable to the type of normative beliefs systems he says we can do without. — Pantagruel

With what I just said in mind, is it really so? Or is it that all attempts at proposing a framework get demoted to equal "belief" systems in order to level the playing field in favor of unsupported claims? How is adhering to our biology and human psychology equal to belief in a deity or God? One has a lot of evidence and logical rigor and one is a wish beyond any existing support.

I don't see how you can conclude it as equal? I'm not saying I have the philosophical or ethical answers beyond my conclusion in this, but I'm saying that we are more likely to find a common, universalizable truth about our human condition if we look at what we are and not at what we wish ourselves to be based on wishful thinking and how we want our existence to be.

So, am I arguing for another belief system? Or am I arguing for exploring deep in ourselves the ethical truth of our being based on what studies in psychology tell us about our species? Because what I'm seeing is not the nihilism of Dostoevsky; what I'm seeing is an optimism that seems to have gotten lost in the stigma of no faith. I do not think we need faith. I think we need to be honest about who we are as a species, without interpretations by those who want power over the conversation.

So, is what I'm talking about belief? Or is it closer to truth than how belief operates? -

How Different Are Theism and Atheism as a Starting Point for Philosophy and Ethics?

to what extent does the existence of 'God', or lack of existence have upon philosophical thinking. Inevitably, my question may involve what does the idea of 'God' signify in itself? The whole area of theism and atheism may hinge on the notion of what the idea of God may signify. Ideas for and against God, which involve philosophy and theology, are a starting point for thinking about the nature of 'reality' and as a basis for moral thinking. — Jack Cummins

I can definitely see a difference in how people of each persuasion approach philosophical concepts. I would also say that while many say that religious people can still keep that faith while working as, for instance, a theoretical physicist, I observe a difference in reasoning.

A belief system or lack thereof is basically linked to the definition of a strong bias. We know that bias is essential to human cognition, and that the deliberate action of reducing bias is essential to science and logical reasoning in philosophy. That leads to the conclusion that such a strong belief is in fact causing the problem of strong bias in reasoning, affecting the outcome of a philosophical argument or scientific conclusion/interpretation.

But there are values that need to be taken into account. Just like art plays an important function in opening up minds to new ways of thinking, so can a strong belief system focus reasoning. It's comparable to people who've taken psychedelics and experienced a profound connection with "all things in the universe". The emotional journey of that has sometimes changed how people think about different subjects without necessarily changing their belief system. Plenty of atheists have taken such substances and had a profound expansion of their perspectives, even though those experiences are "artificial" in nature.

The important part is that the problem lies in the conclusions made. Many theists and believers use their convictions as part of their premises in arguments, and such bias breaks any logic or scientific merit of their conclusions. Atheists are more inclined to naturally think in an unbiased fashion; it's a natural pathway of their reasoning. So they're better at producing unbiased arguments than believers and theists. However, if a theist or believer understands the inability to universalize their concepts due to their fundamental bias, they can view concepts from a certain perspective that an atheist may not easily access.

I'm convinced that any philosophical and scientific thinking requires a gradual movement from free thought to rigid logic. A large problem is that people view critical thinking either too abstract or too rigid in logic, but it should be treated as going from an abstract play with ideas, concepts and visions down to a sound grounded logic that can be universalized. It's not either end, it's the journey and progress from one side to the conclusion in the other.

Theists and believers have a harder problem reaching that end and atheists and the scientifically minded have a problem beginning in the abstract play. Both sides need to understand this more deeply about each other.

I'm a big advocate for keeping ideas close to the facts of reality that we have around us, and I'm under absolutely no belief or theistic notion whatsoever. But I find my play with the supernatural in the ideas and abstractions of art, which I hold to be an underrated component of our process towards expanded perspectives.

I wonder to what extent if God does not exist, if as Dostoevsky asks, whether everything is permitted? So, I am left wondering about the limits and freedoms arising from both theism and atheism. How do you see both perspectives in thinking? — Jack Cummins

Theists are too bound by arbitrary rules and principles, lost in scriptures and made-up concepts for what constitutes what is permitted. One can argue that the lack of belief means everything is permitted, but I hold that we can find scientific answers to what is permitted or not through our biology. Yes, everything is theoretically permitted, but only for psychopaths, and those are generally not considered the normality of a human being, as even evidenced by their reduced statistical existence compared to non-psychopaths. No, we have a biology that pushes us towards compassion, pushes us towards empathy. It's more the natural state than any other, regardless of what pessimists say. Our psychology makes us prone to outside influence on our behavior, but our natural state without heavy manipulation leans into empathy and compassion towards other people.

So, we don't need religion or belief to guide us; we just need to rid ourselves of the manipulators and psychopaths that play with our minds. We need to focus on the natural drives towards compassion and empathy and work aligned with that and not against it. We think that belief in a system that puts principles and rules on us to follow is the only way to limit us from doing violence upon one another, but it's not the lack of belief that leads to violence; it is the lack of acceptance of our empathically natural and biological interactions between people that leads to nihilism. -

A first cause is logically necessary

If you want to demonstrate how you've countered my argument, simply explain to me what my argument is Christopher. I'm telling you you don't understand it. — Philosophim

Your argument's conclusion is that there has to be a first cause, which is only one interpretation in physics. And through the explanations given, your logic of causality as a framework for beyond our reality does not function, or becomes inconclusive, since your reasoning is bound to this reality and does not compute with quantum mechanics. And seeing as causality itself is in question even in our reality and isn't a defined constant, other than on the scales in which determinism operates, you cannot reach your conclusions through the reasoning you provide.

that you're presenting a straw man. — Philosophim

You don't even seem to understand what a straw man is.

When you're over there beating an argument of your own imagination, there's really nothing else to discuss until you resolve the accusation. — Philosophim

You accusing others of fallacies does not resolve your own fallacies. That's deflection and projection. You ignore engaging with the criticism given and try to steer the argument in other directions by cherry picking out of context and accusing others of fallacies that don't even fit the definition. There's no point in engaging any further with someone so deeply in love with their own argument that they are totally incapable of even understanding the criticism given, even on a surface level.

I can take all the stuff you've already said and apply it to your summary. If you can't summarize the argument and tell me what I'm actually saying — Philosophim

I have made plenty of summaries, but you ignore them. You want people to engage in a way that makes it easy for you to counter-argue; if you don't understand the criticism, you deflect in this way. This kind of demand for others to engage in the way you want in order to control the discussion is downright childish.

after he confessed he didn't have to understand the argument. Such a person has nothing of value to add to the point. — Philosophim

I didn't confess to that, I said that your flawed reasoning is at odds with quantum physics and I rely on that for the context of this topic. Get off your high horse.

It's also not control freaky to guide a person back to the OP — Philosophim

I don't need your guidance.

This isn't a generic open ended discussion thread. — Philosophim

You don't own how other people engage with you and I've stayed on topic, but you simply don't seem to understand how.

then my accusation of you using a straw man fallacy is correct and none of your other points mean anything. — Philosophim

This is hilarious. You use a straw man wrong, and if others don't engage in the way you want them to, you use that deflection to ignore everything that's been said. Are you able to extrapolate any criticism from the huge amount of writing I've given your thread, or are you gonna continue to act like a 7-year-old king of your sand castle?

I'm done with this low level discussion; you're not equipped to handle a philosophical discussion and so the discussion is pointless. -

A first cause is logically necessary

This is a lot of effort to avoid addressing the summary I put forth. — Philosophim

It's a lot of effort for trying to explain how I actually argued against you since you ignore engaging with the actual counter arguments and keep rejecting in ways like this:

When the writer of the idea tells you that you're off, and tries to clarify it for you, listen. — Philosophim

That you are the writer of the idea is not a foundation for the idea being solid.

A straw man accusation is serious. — Philosophim

Yet you ignore your own faults on display while praising your own writing?

Trolling by going to chat GPT at this point is just silly. — Philosophim

Is it? Or is the point that I've already addressed your argument and that you are still just praising your own writing as your form of defense, dismissing engagement with what's actually been written? Using GPT like this for a breakdown is primarily because you seem to not understand the criticism you get, so you try to hide behind the same straw man that you falsely accuse others of using. Let's do that again with what you wrote right now and maybe you'll see once again how problematic your reasoning is, starting at the argument in which you accuse me of a straw man:

Misapplication of the Straw Man Fallacy: The argument accuses Christoffer of committing a straw man fallacy. "I don't have to, I understand the physics instead." This statement by Christoffer does not necessarily constitute a straw man fallacy. A straw man fallacy involves misrepresenting someone's argument to make it easier to attack. Christoffer's statement could be interpreted as an assertion that his understanding of physics negates the need to engage with the argument, rather than misrepresenting the original argument.

Lack of Context: The counter-argument lacks context about what the original discussion was and what Christoffer's statement was addressing. Without this context, it's hard to determine whether his response was indeed a straw man or a relevant counterpoint.

Presumption of Misunderstanding: The counter-argument assumes that Christoffer does not understand the original point (OP), without providing evidence of this misunderstanding. This assumption may not be fair or accurate.

Condescending Tone: The tone of the counter-argument is somewhat condescending, particularly in the lines "I can help you come to understand the OP's point if you want" and "When you show understanding, then critique." This approach can be counterproductive in a logical discussion, as it might provoke defensiveness rather than constructive dialogue.

Lack of Direct Engagement with Christoffer’s Point: The counter-argument does not directly address Christoffer's claim about understanding physics. Instead, it diverts to explaining the straw man fallacy and summarizing the original argument. A more effective counter-argument might have directly addressed how Christoffer's understanding of physics relates to the original point.

Oversimplification of Complex Topics: The summary of the original argument about first causes and chains of causality simplifies complex philosophical and scientific topics. While simplification can be helpful for understanding, it risks omitting nuances that are crucial for a thorough discussion of such topics.

In summary, while the counter-argument attempts to point out a logical fallacy and guide the discussion back to the original topic, it has its own issues including a potential misapplication of the straw man fallacy, lack of context, presumptions about understanding, condescending tone, lack of direct engagement with the opposing point, and oversimplification of complex topics.

And further analysis of what you wrote now:

Accusation of Avoidance Without Directly Addressing Counterpoints: Philosophim accuses Christoffer of avoiding the main points of the original post (OP) without directly addressing the specific critiques raised by Christoffer. This can be seen as a way to deflect the conversation away from the substantive issues raised in the counter-argument.

Overemphasis on Understanding as Perceived by the Original Writer: Philosophim places significant emphasis on Christoffer showing an understanding of the argument in Philosophim's terms. While it's important for parties in a debate to understand each other's points, insisting on understanding as defined solely by one party can be problematic, especially if it disregards the other party's perspective or understanding.

Continued Focus on Straw Man Accusation: Philosophim continues to assert that Christoffer is committing a straw man fallacy. However, without directly engaging with the specific points of Christoffer's argument, this accusation seems more like a general dismissal rather than a response to the substance of Christoffer's critique.

Dismissal of AI Analysis as Trolling: Philosophim dismisses the use of an AI-generated analysis in Christoffer's argument as "trolling." This dismissal could be seen as avoiding engagement with the points raised by the AI, which Christoffer used to support his argument.

Failure to Address Specific Philosophical and Logical Flaws Pointed Out: Philosophim does not directly address the specific philosophical and logical flaws that Christoffer and the AI analysis have pointed out, such as the potential false dichotomy, circular reasoning, and the speculative nature of the conclusion.

Insistence on Direct Engagement with the OP’s Points Without Acknowledging Counter-Argument’s Merit: Philosophim insists that Christoffer directly engage with the points of the original argument while seemingly not acknowledging the potential merit or relevance of Christoffer's counterpoints.

Implying a Lack of Worthwhile Engagement: Philosophim suggests that if Christoffer cannot address the OP in a manner Philosophim deems acceptable, there's no point in continuing the discussion. This stance can limit the scope of the debate and potentially dismiss valid criticisms.

In summary, Philosophim's response focuses heavily on procedural aspects of the debate (such as the perceived failure to understand the OP and the straw man accusation) rather than substantively engaging with the critiques raised by Christoffer. This approach can hinder constructive dialogue and the exploration of the philosophical issues at hand.

Analyzing in this way produces an objective analysis of your argument. Dismissing it for the sake of how the analysis is done rather than the points it brings up makes zero sense. You're just deflecting all criticism you get by cherry picking parts of a counter argument out of its context and making a straw man of it yourself, then calling out the other person for doing a straw man. I've countered your argument, I've engaged in further explanations for the objections you raised and yet you still act as if no one has countered your OP. It's dishonest. Your OP has flaws in its reasoning, explained multiple times now, including an AI analysis in an attempt to make it more objective, yet you still praise your own logic and fail to engage in the discussion on the merits of discourse. Rather, you demand that people counter-argue within the context that you want, not by the merits of your own reasoning, which has been clearly demonstrated to be flawed. There's no point in providing more arguments than I've already given because at this point you're just ignoring the counterpoints raised and trying to deflect through dishonest cherry picking.

Incorrect. I'm declaring a very real critique of his point. Look, throwing out a bunch of quantum physics references and going off on his own theories with a ton of paragraphs is not a good argument. — Philosophim

You falsely assume that your argument can solely rely on a logical argument and ignore the actual science which provides counters to it. The theories provided are there to show you how your logical reasoning isn't enough for the conclusions you made.

I'm not going to spend my time when I've already directed him to address particular points that he's ignoring — Philosophim

I've directed you to the problems of your reasoning, that's what's being ignored here. You're so biased towards your own argument that you value it like gold and any counter argument is straw manned by you. You're just projecting your own fallacies by dismissing and deflecting when calling out other people's straw men, especially when you don't even use the accusation of straw man properly.

He doesn't understand. He's in his own world. — Philosophim

Again, projecting by describing yourself. You fail to simply understand that your argument of a first cause is just empty dislocated logic in face of the science actually decoding reality into a complexity beyond that use of logic. So it's you who live in your own world of your own logic out of a limited understanding of the science, and demand that everyone acknowledge how brilliant you are or else they are beneath you.

I answer this directly with the summary I gave. He ignores this completely. — Philosophim

Just reiterating your argument again is not a valid counter argument to any of the criticism. It is being ignored because your OP has already been addressed. In summary:

The OP simplifies things too much by saying everything either has a prior cause or there's a first cause, ignoring other possibilities. It also talks about this first cause (Alpha) without really explaining it well. It sticks to a traditional idea of cause and effect that might not hold up in complex areas like quantum mechanics. The way it defines "Alpha" is circular; like it's saying it exists because it has to, which isn't a strong argument. It also quickly dismisses other ideas about never-ending or looping causes without much reasoning. The argument doesn't differentiate between different kinds of causes and ends up with a speculative conclusion that a first cause must exist. It doesn't consider other theories about the universe that don't need a first cause. It has notable gaps that have been thoroughly pointed out, which you totally ignore.

And to drive the point further, here's a summary of an AI analysis of your deduction alone, without the fluff:

Overall, while the argument lays out a structured approach to discussing causality, it has limitations. It depends on specific assumptions about how causality works and doesn't fully explore or address alternative models, such as causality as a concept that may not be universally applicable or may operate differently at different scales or in different contexts (like in quantum mechanics).

If none of this is enough to point out that your OP is flawed, including everything that I've written prior, then you are simply not equipped to handle criticism and will only keep deflecting through self-praise. -

A first cause is logically necessary

Have you ever heard of a logical fallacy called a "Straw man argument"? — Philosophim

Maybe understand the context I wrote that in before calling it a straw man.

I've listed an argument. If you say, "I don't have to understand it, I'm going to attack this thing instead," — Philosophim

No, I argue against the conclusion you make, as we already have physics telling us similar things that don't need you to re-invent the wheel, but also other interpretations that get ignored by the absolutism of your conclusion.

You get so hung up on forcing people to understand you that you use others' rejection of your argument as some evidence that you are right. But in doing so you ignore the objections being raised.

In order to maybe simplify things (as you seem to not really care about the counter argument you are answering to) I let an AI break down the flaws of your OP. Notice the highlighted parts in relation to what I've been writing. Also notice the irony in you calling out fallacies.

This argument, which aims to establish the necessity of a "first cause" in the context of causality, has several philosophical and logical flaws:

False Dichotomy: The argument begins by presenting a dichotomy: either everything has a prior cause, or there is a first cause. This framing may oversimplify the complex nature of causality and exclude other possibilities, such as causality not being applicable in all contexts (e.g., quantum mechanics), or the concept of causality itself being a limited human construct that may not apply universally.

Undefined Terms and Concepts: The argument uses terms like "Alpha" without adequately defining them or explaining how these concepts interact with established understandings of causality. The notion of an "Alpha" as an uncaused cause is a speculative philosophical concept, not an empirically established fact.

Assumption of Classical Causality: The argument assumes a classical, linear model of causality (A causes B, B causes C, etc.). However, in some areas of physics, especially quantum mechanics, the traditional concept of causality may not hold in the same way. This assumption limits the argument's applicability to all of existence.

Circular Reasoning in Alpha Logic: The argument about the "Alpha" is somewhat circular – it defines an Alpha as something that must exist because it cannot have a cause, and then uses this definition to argue for its existence. This is a form of begging the question, where the conclusion is assumed in the premise.

Overlooking Infinite Regression and Looped Causality: While the argument addresses infinite regression and looped causality, it dismisses these concepts without sufficient justification. It's a significant leap to conclude that because these concepts are difficult to comprehend or seem counterintuitive, they must lead to a first cause. Infinite or looped causality models are viable theoretical concepts in cosmology and philosophy and cannot be dismissed lightly.

Conflating Different Types of Causality: The argument does not distinguish between different types of causality (e.g., material, efficient, formal, final causes in Aristotelian terms). This lack of distinction can lead to confusion and misapplication of the concept of causality to different contexts.

Speculative Conclusion: The conclusion that a causal chain will always lead to a first cause (Alpha) is speculative and not empirically verifiable. It's a philosophical position that depends on the acceptance of certain premises and definitions, which are themselves debatable.

No Consideration of Alternative Models: The argument does not consider or address alternative models of the universe that do not require a first cause, such as certain models of an eternal or cyclic universe.

In summary, while the argument is an interesting philosophical exercise, it is not conclusive. It relies on certain assumptions about causality, does not adequately address alternative theories, and contains logical flaws such as false dichotomy and circular reasoning. — ChatGPT

Try to understand it first. When you show understanding, then critique. — Philosophim

I have critiqued, but you don't understand the critique you get, and instead you use an argument about people not understanding you as your go-to defense against others' critique. -

A first cause is logically necessary

Human minds invented math with our ability to create discrete identities or 'ones'. Just like the reason we have a Planck scale is because it is the limit of our current measurements. — Philosophim

Math is an invention of interpretation, it does not change the fact of 2 + 2 = 4, which is a function of reality only interpreted through math. Same as with the Planck scale, it's not bound to measurement, it is bound to fundamental quantum randomness, it is the scale edge-point at which our reality stops acting properly. It is not an invention.

Don't insinuate someone doesn't know something, explain why they don't know something. Otherwise it's a personal attack. Personal attacks are not about figuring out the solution to a discussion, they are ego for the self. You cannot reason with someone who cares only about their ego. — Philosophim

Don't posit to know something without demonstrating it. So far you haven't demonstrated an understanding of quantum mechanics, which is a problem in that you produce conclusions based on misunderstandings. Pointing out that you misunderstand something and use something wrong is not a personal attack, it is simply pointing at a flaw in reasoning. The irony here is that you lift up your logic and reasoning as rock solid and you dismiss criticism with the evidence of how good your logic is. But your argument mainly only points out that there must be a first cause, and that without it, there would be a cyclic loop. And maybe I misunderstand here, because that just sounds like stating something obvious, axioms of logic that are already logical in themselves, without a need for overcomplicated reasoning.

You were claiming it came from the Planck scale, so I asked you what caused the Planck scale. This is not me asserting how the Planck scale works. But again, this is silly. — Philosophim

The Planck scale is a scale in which reality breaks down; there's no property to this that had a cause, it is a singularity point. It's like saying "what caused this centimeter" and not meaning the invented measurement, but the centimeter in itself without relation. That is silly. Such a scale singularity point in which reality breaks down and dimensions cease to have any meaning is a state in which causality breaks down as well. Without causality there are no causes and all our reality in this scale means no prior cause. If random probabilities exist there, they exist without prior causes; they exist there out of pure randomness, causeless spontaneous existence. But since such existence forms properties, they expand. - This is me explaining why calling for a cause to the Planck scale makes no sense.

And what caused the big bang? Did something prior to the big bang cause the big bang? Or is the big bang a first cause with no prior cause for its existence? You keep dodging around the basic point while trying to introduce quantum mechanics. Citing quantum mechanics alone does not address the major point. — Philosophim

I'm not dodging, I'm countering the points you make. I've already explained the different solutions to the Big Bang theory. Penrose cycles, inflationary universe, loops or the one I described, which points out a first event without a prior cause.

But the key point is that the density of the universe right at the event of Big Bang would mean dimensions having no meaning, therefore no causality can occur in that state. It is fundamentally random and therefore you cannot apply a deterministic causality logic to it. And since you can't do that, how can you ask any of the questions in the way you do? It's either cyclic in some form, or it is an event that has no causality as its state is without the dimensions required for causality to happen.

If you want an answer to a first cause, that's the answer I've given many times now. The first cause in that scenario is the first causal event to form out of the state in which causality has no meaning, which is a state that has mathematical and theoretical support in physics. But if your point is that "aha! see there's a first cause!" then you are just stating the obvious here and I don't know what your point really is. Because you jump between pointing out the obvious, entangled in a web of unnecessary reasoning, and asking irrational questions about physics.

Yes, it is an invention by us. It's the limitation of our measuring tools before the observations using the tools begin affecting the outcome. "At the Planck scale, the predictions of the Standard Model, quantum field theory and general relativity are not expected to apply, and quantum effects of gravity are expected to dominate." — Philosophim

No, it is the theoretical scale supported by math in which general relativity and quantum physics break down. You even quoted exactly that part, and yet you don't seem to understand what it means.

At the Planck scale, the predictions of the Standard Model, quantum field theory and general relativity are not expected to apply, and quantum effects of gravity are expected to dominate.

You don't seem to understand the difference between an invention of interpretation and the thing that's being interpreted. You argue as if the number 2 is an invention. The invention is the interpretation of reality that correlates to the real thing of 2 something.

A Planck unit is a mathematical invention of interpretation. The Planck scale is not the invention.

And it's not "the place in which our tools begin affecting the outcome"; read the bold line in that quote you posted and really think about what it actually means.

So either way, you're proving my point, not going against it. You're seeking very hard to disprove what I'm saying, but perhaps you should make sure you understand what I'm saying first. I don't think you get it. — Philosophim

But it isn't conclusive. You still have the Penrose theories, and other cyclic interpretations that do not have a first cause as they are circular. There's no need for a first cause as the cycle, the loop, causes itself. It's only paradoxical because we aren't equipped to understand such things intuitively, because we are bound to thinking within the parameters of this reality. But the math supports such interpretations as well. And as I've pointed out, math is not an invention of reality, it is an invention of interpretation and our interpretations have yet to formulate a defined answer as to if our universe appeared out of nothing, or if it is a form of cyclic looping event causing itself.

What I have been saying is that your logic isn't enough to point out a first cause, since such a conclusion is bound to the parameters of this reality. You can point out a first cause within our reality, beginning at the start of our dimensions; but you cannot conclude anything past that with it since there's no evidence for our reality functioning the same beyond the formation of its foundation. Therefore, we can conclude there being a first cause at the point of the Big Bang, for this reality (as it operates on entropic causality) and it could be that it IS the first cause out of nothing, based on what I've been describing above. But a cyclic, and from our point of view, paradoxical looping universe is still a functioning hypothesis, and since such is beyond the logic of this reality, it breaks the logic in your argument.

Then you agree 100% with my OP. There's nothing else to discuss if you state this. — Philosophim

As said, it is one of the interpretations that exist. I don't adhere to the absolutism of any single interpretation just because I find its logic sound, because there are too many possible interpretations that include mathematical projections beyond our reality. Regardless, your reasoning is a totally unnecessary confusing web when we already have the math that points towards this outcome. It's all already there in the physics, but you seem to just want to lift up your argument and reasoning as something beyond it, which it's not. So I question the reason for this argument, as physics already provides one with more actual physics-based math behind it, and I question the singular conclusion of a first cause as it doesn't counter the other interpretations that exist.

Just try to go into future threads with the intent to understand first before you critique. — Philosophim

I don't have to, I understand the physics instead. The conflict is in that you try to re-invent the wheel and demand others to accept your wheel when they already have perfect ones mounted. I critique the need for your argument. And since you demonstrate a shallow understanding of the physics at play, it all just looks like you are forcibly trying to mount your wheel on top of our already functioning wheels, not understanding that those wheels already work. -

A first cause is logically necessary

What caused the Planck scale to exist? — Philosophim

Nothing caused it to exist, it's like asking why 2 + 2 = 4. The Planck scale is the scale at which measurements stop making sense as reality becomes fundamentally probabilistic. It's not a "thing", it's the fundamental smallest scale possible for reality, and my point was that such a scale and such a function rhyme with the theoretical physics of how the universe began. So there's no "cause" to the Planck scale; you've entangled yourself in a web of your own thinking here with no regard for what these things that you address actually mean.

demonstrate why. — Philosophim

What should I demonstrate?

You have no idea how versed I am in quantum mechanics. If I'm wrong, show why, do not make it personal please. — Philosophim

For one, your incorrect use of concepts like the Planck scale shows how versed you are. The lack of understanding of the uncertainty principle is another, especially since you claim that we "just don't have the tools to decode it". I'd just answer like Feynman did: "If you think you know quantum mechanics, you don't". I've given a run-through of how causality can appear out of nothing at the point of Big Bang, something that's much closer to what scientists actually theorize. This is far from making it personal, I'm just pointing out that you mostly use bad reasoning here and back it up with "you don't know how versed I am in quantum mechanics", which isn't saying anything, especially when you don't seem to show it.

What do you mean by need? A first cause doesn't care about our needs. It's not something we invent. It either exists, or it doesn't. Logically, it must exist. Until you can counter the logic I've put forward, you aren't making any headway. — Philosophim

Again, you don't understand what the Planck scale is. It is not an invention by us and I don't know why you keep implying that.

No, it cannot. A first cause is by definition, uncaused. You are stating that a first cause is caused by the quantum fluctuations before the big bang. That's something prior. Meaning your claim of a first cause, is not a first cause. — Philosophim

Do you know what these quantum fluctuations imply? It's a fundamental randomness of probabilities that do not act according to general relativity. The concept of spacetime, in essence, causality, breaks down and has no meaning at that point. Regardless of how we view the Big Bang, all projections start the universe at such a dense point that it fundamentally becomes zero dimensional and there can be no such thing as a first cause before this since there's no spacetime in this state. Without dimensions, there's no causality and no cause. If we take the fact that quantum randomness and rogue probabilities increase in likelihood the smaller in scale you go, then at a scale so small it basically becomes dimensionless, there would be a singularity of probabilities. A probable event occurring, a fluctuation, in a state without spacetime, would instantly become. Without dimensions the only way to fit that fluctuation would be for it to expand "somewhere", producing the necessity for dimensions, and that causes a basically infinite density to expand into those dimensions.

You can't have spacetime at such a dense point, and without spacetime you have no causality, therefore you cannot find a first cause before it that aligns with how we view deterministic causality in our reality. You can only find a first cause after spacetime appears, after our dimensions formed.

What caused it to be a fundamental absolute probability? — Philosophim

How does anything without spacetime act as a causal event? A quantum probability doesn't need spacetime as it can exist in all states at once. If that state was because of a big crunch, a higher dimensional looping state that we leak out of or if it was a fundamental paradox of dimensionless nothingness that has no beginning, it still places our reality at a point in which no causality existed before it existed. So if you're looking for a first cause, I've already pointed at it; the first event of time and causality at the point of the big bang. That is the first cause and it has no prior cause due to no causality existing before it. It's the logical conclusion of the state of the universe at that point and the cosmological models support it.

Did you read the actual OP? I clearly go over this. Please note if my point about this in the OP is incorrect and why. — Philosophim

No, you clearly misunderstand everything into your own logic and you have become so obsessed with that logic that you believe the Planck scale is an invention and disregard how general relativity breaks down at a singularity point. If a star of defined mass produces a black hole where spacetime breaks down, then imagine a singularity or a Planck scale dense form of our entire universe. Then explain how causality would work in that state. You seem to forget that our laws of physics break down at that point and that our dimensions stop making sense. If causality breaks down, then you can have no causes before this event as there's no spacetime there to produce it. That's where logic takes you based on the current understanding of physics and quantum physics. -

A first cause is logically necessary

Yes it is. Let me explain what probability is. When you roll a six sided die, you know there are only six sides that can come up. Any side has a 1 out of 6 chance of occurring. What is chance? Chance is where we reach the limits of accountability in measurement or prediction. It's not actual randomness. The die will roll in a cup with a particular set of forces and will come out on its side in a perfectly predictable fashion if we could measure them perfectly. We can't. So we invented probability as a tool to compensate within a system that cannot be fully measured or known in other particular ways.

So yes, causality still exists in probability. The physics of the cup, the force of the shake, the bounce of the die off the table. All of this causes the outcome. Our inability to measure this ahead of time does not change this fact. — Philosophim

You didn't read what I actually wrote. I'm talking about the idea of a first cause, as in the cause that kickstarted all we see of determinism. And how there's no need for one if the universe expanded from the Planck scale. That determinism is underlying our reality is not what I was talking about.

False. Quantum physics is not magic. It is a series of very cleverly designed computations that handle outcomes where we do not have the tools or means to precisely manage or measure extremely tiny particles. That's it. — Philosophim

No it's not. Maybe you should read up more on quantum mechanics. "Cleverly designed computations" is a nonsense description of it, and sounds more like religious talk. Quantum mechanics isn't magic, but it's not how you describe it here. We can absolutely measure it, but we run into the uncertainty principle and the reason may be located outside of reality or our ability to measure it due to limitations in our dimensional perception.

Don't state something as false before leaving a description that isn't even close to how quantum physics is described. That's bias talking.

A first cause is something which exists which has no prior reason for its existence. It simply is. — Philosophim

A first cause is merely the first causal event and as I described it can simply be the first causal event out of the quantum fluctuations before the big bang. A dimensionless infinite probabilistic fluctuation would generate a something and still not be a first cause as it is a fundamental absolute probability. And even if it weren't it can also be explained by a loop system, infinitely cyclic like Penrose's theory. -

A first cause is logically necessary

A first cause isn't necessary within a probabilistic function.

Causality, as in deterministic events, follows entropy only on scales above the Planck scale. Virtual particles, as understood right now, do not have a first cause, they are probabilistic random existences.

If the universe extended out from this Planck scale, in which determinism exists as an irrational system due to the absolute probabilistic chaos that exists there, then the first cause is basically defined as the first entity that acted upon another entity, causing a chain reaction of events rather than randomness. In essence, once there was an absolute probabilistic chaos and then one such instance acted upon another causing a chain reaction of events forming our universe.

So, through quantum physics, a first cause isn't a necessity. It's only a necessity for that which solidifies out of an absolute probabilistic system when that system's random probabilities reach such high certainty as to fundamentally make any other probable outcome impossible due to not being able to affect neighboring events. At a certain scale, the governing constants of the universe (which themselves may be part of the initial probabilistic random outcome during the Big Bang), form an alignment for probabilistic outcomes and as such all other probable outcomes collapse into what is most deterministically probable.

Like drops on a surface, whose surface tension conforms them into large actions and systems, the merging of two drops is a highly probable event based on the laws of physics, regardless of the chaotic nature of the substance and its elementary quantum randomness. All other probable outcomes become probable only on such small scales as to be overridden by the emerging properties of the whole set.

Therefore, the universe is fundamentally probabilistic, but the likelihood of an event that overrides determinism in the universe is so low that it cannot happen during the entire timescale that the universe exists through. Like, for example, a drop of water splitting up into two parts by a reversal of the laws of physics governing that drop is on its large scale such an improbable event that it would take billions of times the timescale of our current universe lifetime for that to happen as a random event, and the deterministic outcomes would even then be insignificant to the universe as a whole.

Furthermore, spacetime singularities may be places in which matter and objects compress to such scales where they start to behave like pure probabilistic randomness, and since that produces a paradox in entropy, reality itself collapses into a feedback loop that becomes a "hole" in our reality as it cannot function together with the deterministic system outside of it.

Then there's the superposition of causal events that have the same outcomes regardless of which came first, and thus an indefinite causal order. Although it doesn't change larger causal links.

https://www.quantamagazine.org/quantum-mischief-rewrites-the-laws-of-cause-and-effect-20210311/ -

Who's Entertained by Infant and Toddler ‘Actors’ Potentially Being Traumatized?

So what? They're not that much fun to look at in real life. Use a Cabbage Patch Kid and it won't bother me — Vera Mont

That's not the point though? A realistic infant is needed to be able to tell a story. I don't understand this argument? It sounds more like you don't like seeing infants in movies? If so, no one's forcing you to watch movies.

in which the child's image will be recorded for some foreseeable future — Vera Mont

An infant's likeness to their adult self or even child self is barely if at all recognizable. So why does this matter? The subject was about trauma due to on-set practices.

and available for commercial use to who knows what entities. — Vera Mont

For the specific movie or TV-series, yes, so I'm not sure what you are talking about? A production company doesn't own anything other than the filmed shots for the purpose of the movie, except for any trailers, posters and photos from that movie for marketing it. It barely even stretches into using shots from previous movies as "flashbacks" in sequels since it sometimes spawns lawsuits from the actor not getting paid for re-using those shots in a new project. Contracts are for the specific movie or TV-series. A production company doesn't own any other type of use out of the images they shot. If some shots were edited out, they can edit them in with a director's cut without affecting anything, but that's about it. So I'm not sure what other "entities" you refer to it becoming available to?

Yes. That's where the kid is looking - not at the actor who is supposed to be their parent. That's why they're unconvincing in the scene. — Vera Mont

Depends on the shot and competence of how it's framed. Sometimes it's also possible to just digitally replace the head of a parent holding a child, to that of the actor. But most of the time, the parent, child and actor get to know each other before rolling (obviously also so that the infant feels comfortable in the arms of someone else), so that there's a more likely connection between the actor and the infant in the scene. I see no difference between that and a parent letting their friend hold their baby. And a lot of times it is actually a friend of the actor who's the parent so there are even fewer issues. Of course, there are a lot of bad examples but nowadays I rarely see it. Mostly also because it's so easy to digitally change where someone is looking in a scene so productions do that if the child starts looking in another direction.

If the kids' lives get ruined, well, that happens a lot more without any cinematic intervention, just through unfortunate circumstances. — Vera Mont

I see the number of incompetent parents as a bigger reason for traumatic childhoods. Domestic fights between parents, misbehavior due to alcohol etc. The common culprits of abuse are one thing, but even parents who aren't crossing such thresholds can cause traumas just by not being educated enough on child psychology. Like, in attachment theory, if a parent just leaves the apartment without signaling this to a child, even though the other parent is home, it can cause a traumatic feeling in the child as if the parent disappeared from their life. A continued behavior of this can cause long term trauma that could even cause problems with social skills as an adult.

Generally, modern life isn't well adapted to taking care of children. Parents today are forced away from the time that should be given to the child, and with the rise of neoliberal individualism as a societal ideal, parents are ill-equipped to move away from the self-centered lifestyle they have and consequently, unintentionally, harm their children psychologically.

As long as we have the forces of capitalism pressuring parents into living paycheck to paycheck, they will not ever find the time to parent for the sake of the child.

And that's ironic, since many modern parents are so overprotective of their children that they want to shield them from all the world's harm to the point of wrapping them in bubble wrap, all while the harm might come from the parents themselves, without them even understanding or noticing it. -

Who's Entertained by Infant and Toddler ‘Actors’ Potentially Being Traumatized?

I don't see that infants are necessary — Vera Mont

CGI isn't cheap. A digital baby that's supposed to be viewed in close-ups can cost many millions of dollars to make, without any guarantee of it actually looking more convincing than a real infant. Children of Men is an example of it being very convincing, but that's a huge budget.

lack of informed consent — Vera Mont

But that's true for all situations an infant is in, and that's why it's the responsibility of the parent or caregiver. You can bring an infant out into a public place and that is also without consent. You can't get consent from an individual who has neither the communication capability nor enough ability to understand the situation they are in. Usually, a film set is quieter and less stressful than a public street.

their caregiver is obviously somewhere off-stage. — Vera Mont

Parents are usually right next to the camera. They're right up close because that's part of the regulations, but even so, I've never heard of any crew who would even think of separating the parent from the infant during a shoot, and keeping them close is also crucial in order to even get a shot. When it comes to crying, there have been occasions with twins in which one of the infants usually cries a lot and the other doesn't. So the crew simply stands by with the camera and quietly waits until the child who cries a lot starts to cry naturally. Usually it only takes a few seconds of crying to get the shot needed, and then the rest is just sound effects and a dummy. And when there's a need for calm, the other twin is filmed instead. Sets aren't brutal mechanical machines; they're usually boring and slow, with a lot of waiting. It's often more of a problem that there's too much waiting rather than stress, and when infants and children are involved, crews are generally super focused on being ready, quietly and quickly, before the infant is even brought on set. It's the same with animals, especially if they're not trained for filmmaking.

But there could definitely be shoots where malpractice occurs. There's a lot of filmmaking taking place outside of regulated productions, so those should be criticized if they fail to comply. However, real productions are very careful, both because of all the regulations and simply out of respect from the crew. -

Who's Entertained by Infant and Toddler ‘Actors’ Potentially Being Traumatized?

the lighting, the noise, the presence of strangers, the incomprehensibility of the situation and the irregularity of schedules has to be stressful. — Vera Mont

Not accurate (and I've been on many film sets). Whenever infants are present there's a deep respect among the team members not to cause any stress, and there are regulations and rules to follow for shoots involving children and animals. The experience for an infant is barely different from being with their parent's friends outside in public among people. So should we ban people in cities from walking outside with their children? That's far more stressful than a film set. And the time children are on set is usually very short, only for the shot, and then they go home. If a child is in many scenes, it usually involves twins in order to comply with the time rules and regulations for children and animals. And dummies are often used for any shot that isn't a close-up.

Those things happen one time, for a few hours, not long days of shooting. You don't know how many rehearsals, how many 'takes' and how much waiting around in between is behind a two-minute scene in which the audience actually sees the baby. — Vera Mont

Not accurate, as I mentioned. There are no long days of shoots for infants, ever. Twins or triplets are used if the time exceeds the regulations, which are strict. If someone breaks those rules, that's not an industry problem, that's a problem with those specific people, just like if some asshole treats a child badly in public you don't blame everyone on the street.

Small children - depending on how small - may enjoy the limelight, but they do tend to become damaged over time. — Vera Mont

If success creates mental health issues (in adults, too, incidentally) imagine what failure does to a little kid who was promised stardom. — Vera Mont

The reason why many child actors in Hollywood have gone down that route is because many parents and caretakers of these children are forcing their children into fame as an extension for themselves becoming famous. There's a culture around fame in Hollywood that is downright destructive to anyone's soul.

But that has nothing to do with film sets and set practices. And anecdotal cases from a small number of shoots with infants and children do not reflect the entire world of filmmaking. The problem has to do with the culture of fame, not film shoots.

Another problem as I see it is that there's an extreme obsession with protecting children today in a way that becomes destructive. Amateur psychologists or people who think they know child psychology draw wild conclusions about what children can or can't handle. What we've seen over the last couple of years are Millennial parents who are so overprotective that their children, when they enter their teenage years and later, are unequipped to handle the complexity of adult life. The overprotection of children systematically makes them, once grown up, more susceptible to anxiety and depression because they've never been in enough situations to process the difficulties of life. So facing challenges in childhood is not the problem; it's the lack of guidance. And if the "guides" around children just push them into fame and that culture without any guidance on how to navigate such a sphere, that's what's causing these problems.

As someone who's been on many sets and actually knows that world, it's frustrating to see people from the outside speak of something they clearly know very little about. Especially since the problems with child actors in Hollywood aren't seen in other film industries to the same extent, and especially since the root cause of the problems often lies outside of the film sets. When it comes to children working as actors (not infants), more often than not it's the familiarity and "family" of the crew and cast that becomes their comfort, and it's the cult culture around fame, handled by their agents, parents and other people in Hollywood, that's the destructive part, not sets or crews.

Pointing fingers at film crews like this is extremely skewed and isn't fair to the people who actually function as the best people around famous child actors. Going by anecdotal and situational accounts, bad practices on sets are no more common than bad experiences when children are out in public or anywhere else. So please stop pointing fingers at film crews like this; it's not accurate. -

Donald Trump (All General Trump Conversations Here)

Straight out of a right-winger's playbook. I can turn on our local right-wing tv station or listen to the right-winger opposition in our parliament, and it's the same kind of talk, the same arguments, just the names are different. — baker

What are you talking about? How is any of that right-wing? How is caring for democracy against the right-wing manipulation and power plays of demagogues even remotely similar to a right-wing playbook? I would say the same thing about Democrats, but since they've not displayed the same level of total ignorance of facts, knowledge and stability that the Republican party has displayed in recent years, the criticism needs to be aimed where it's currently needed. Where's the competency on display in the Republican party? Like, just look at how they treated the House speaker situation last year. They act like spoiled children, constantly doing whatever they can to keep fucking things up until they become the center of attention. The behavioral rot has spread from Trump and infected the entire party. I could make a long argument about the problems among the Democrats, because they're acting incompetent as well, but the level at which the Republican party operates today is just a laughing stock for all of us in more functioning democracies in the world.

Whenever there's embarrassing turmoil in the Swedish, Nordic or even the EU government and I turn to read up on what's going on in US politics, I'm stunned at the differences in competence. We view our politicians as incompetent, but in comparison to the US political scene we're like a utopia compared to a Mad Max film.

Caring for democracy is to get rid of the demagogues, and the entire US system is built upon the actions of demagogues. Elections in the US are about appearances, not policies. It's about abstract values like "family" and "God", not philosophically sound moral principles. It's a theatre aimed at fooling the people into believing they have a good father or mother caring for them from their White House throne. It's an autocratic system in which an economic elite makes Shakespearean power plays for the throne and the servants in Congress play manipulation games, while laws are controlled by a Supreme Court where enough deaths on one side can make the entire foundation of law fundamentally unbalanced.

Anyone who looks at the US system as some pinnacle of democracy needs to get their heads checked. -

The Thomas Riker argument for body-soul dualism

The problem is this. What happens to your consciousness when you get transported to a planet? — Walter

How do any of the crew know that you, the one who goes into the transporter, actually is the one ending up on the planet surface? Since a copy can be made, does that mean that the one going into the transporter essentially dies and a copy is being materialized on the planet surface?

With all memories intact due to the brain structure being intact, the one ending up on the planet surface will always have the experience of being "sent there", but they will never be able to know if their individual experience and life ends when being transported.

Without a proper wormhole portal that you "go through", it's more likely that everyone who's transported essentially just dies every time they are transported. It's impossible for them to be able to know the difference. -

Donald Trump (All General Trump Conversations Here)

How is it even conceivable? — Wayfarer

Because the proper protections aren't in place. Maybe the US has never been in a situation like this, in which someone who may implement anti-democratic policies gains power. I think the US and its people have been living in a fantasy in which they believe that such a thing will never happen in their own nation. That's something that happens in those other "backwards" countries, but not in the US because that's a nation of God-blessed goddamn freedom! If the US survives this overgrown manchild and finds some stability, I think that both the Democrats and Republicans need to implement a more rigid defense against this bullshit. Maybe look to more functioning democracies and stop believing they're the best and greatest nation in the world. Time to try out some humility and introspection, clean the power leeches and lobbyists out of the halls of power, and install safeguards that make it impossible for people who value themselves over their own people and the world to gain power.

I don't accept that Biden is feeble or senile or incapable. I do accept that he projects very poorly on the podium but considering the stuff he's having to deal with, and magnitude of the problems he and the world are dealing with, any number of which could literally be world-ending, I think he's doing a quite exceptional job. — Wayfarer

I'm not arguing against that. As I said, he's steering the half-sinking ship through a storm of global turmoil and economic hurricanes. But the risk is in his health: what if he suddenly dies, or suddenly suffers a serious decline in mental capacity? There have to be some actions taken right now on both sides to find some younger candidates to build up for the 2028 election. It takes time to build up trust in candidates among the voters, so get some stable, non-bullshit, young people up there. And Republicans need to ditch trying to capture the MAGA people's votes, even if they risk losing. Many other candidates feel like pseudo-Trumps, as if they just want to reach those voters. But that's not gonna hold; they need to focus on voters outside of that cult, and for that they need a proper candidate instead of pushing forward other clowns. -

Donald Trump (All General Trump Conversations Here)

That their cruel and imprudent behaviour to their brothers is now having undesirable consequences should have been predictable and should have been avoided, so perhaps they are not quite as clever and sensible as they think. — unenlightened

But was there such behavior, though? Weren't there enough good-hearted people who cared for all people and wanted to help, just to get a shotgun to the face and be screamed at to get off their property? Weren't there enough people who tried to make things better for all, especially low-income, low-educated people?