Comments


Aesthetic reasons to believe

Occasionally, when I am in the outback, I am struck by the extraordinary star scape. — Tom Storm

Oh, I am so jealous of that one! That 'outback' dark-sky vista must be one of the best available anywhere on Earth.

Aesthetic reasons to believe

Ok, thanks for painting me a clearer picture of the world according to Tom.

'Ace mate, hope you can sink more amber fluid, as long as you don't have too many ankle biters to look after!'

Bannings

Would you consider a more democratic system for deciding who gets banned, in SOME CASES?

For example, if your membership has survived a one-year probation, then you gain the right to appeal a ban, and the appeal gets put to a vote of all current members who have been members for at least one year and who post at least (say) four times a month?

Do you prefer the current monarchistic (Jamal) and aristocratic (we arra mods! we arra mods! we are, we are, we arra mods!) approach?

Don't worry, I am not trying to start a TPF revolution from my keyboard! :scream: I am only asking! :halo:

Aesthetic reasons to believe

Bonza, mate! (Sorry Tom, but I have lots of expat Scots friends in Perth, Oz, and some REAL Aussie friends whom they are married to or are the offspring of. They made me an honorary 'Aussie baw bag,' so I feel I can use stereotypical terms like 'bonza' without sounding too offensive.)

I remain unsure whether you are, overall, a celebrant of life, or whether you remain on the outskirts of, or at a significant distance from, that camp. What do the aesthetics of the universe do for you?

Aesthetic reasons to believe

I don't think the point I am making about the aesthetic faithful is connected to the loving life people. Many of those confounding folk who love life do not hold any religious beliefs. They are not motivated by aesthetics. — Tom Storm

I think I would broadly fit into the variety of people you describe above, Tom, but the aesthetics of the natural world that I came from, of a (non-light-polluted) naked-eye view of the night sky, or of a scientist such as Carl Sagan describing what science knows in a TV series like COSMOS, are very motivational indeed to me.

The difference is that I take full personal ownership of the awe and wonder that such experiences inspire. I do the same for any intent or purpose that manifests in me as a result of the experience.

I don't allow any religious BS to take the credit from me.

I don't thank god, 'mother' nature or the Flying Spaghetti Monster for the fact that the series COSMOS made me want to learn as much as I could for the rest of my life.

I thank Carl Sagan and his team. All fellow humans.

I thank my own interpretations of natural vistas.

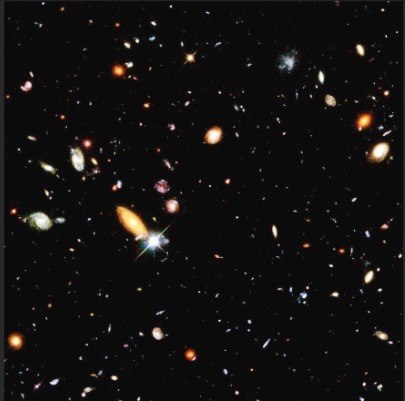

Some people feel insignificant and unimportant when they view images such as the Hubble deep fields etc. (I have a big poster of one on one of my walls at home.) I fully understand that, but I also very strongly feel (as Carl Sagan did, and many, many other people do) that without lifeforms such as me or you, those vistas have much less meaning or purpose, perhaps even none at all.

There is no point in being wondrous if there is no existent that can witness and acknowledge such wonder.

Aesthetic reasons to believe

While the loose ends and incomplete circle of atheism is a turn off. — Tom Storm

Absolutely. Atheism is honest; its adherents admit:

1. We don't yet know the full story of where we come from or why we are here.

2. We have no inherent purpose other than the purpose we create for ourselves.

3. We see no evidence that any existent in the universe cares about us, apart from each other and maybe at least some of our 'pets.'

Religion tries to fill these gaps and sate the primal human fears that such gaps intensify and amplify, in some cases to the level of horrible states such as nihilism or antinatalism.

I don't understand that. I think points 1 to 3 above make us FREE to make of our future what we will.

What an adventure!!!!

Leave a good legacy, Tom, and imo you will have lived a fruitful life.

I leave the poor theists to their forlorn hope that Pascal's wager is a good bet.

I also leave the nihilists and antinatalists to choose to live their lives as a curse.

You reap what you sow. Change is always on offer to everyone.

What is Conservatism?

Ya gotta keep a keen eye on dem crazy priesties mate, or else their backwards traditions and cultural norms will keep biting us all on the arse! Long live scientism!! FREEEEEEEEEEEEDOOOOOOM!

Sorry Jamal, but I am sure, as a globetrotter, you experience the odd bout of diasporic mother country syndrome yourself and get bizarre urges to type something like 'freeeeeeeeeedooooooooooom!' or 'Alba da brav!' or 'Naw pal, ah don't think so, I am Scottish by ra way!' without fully having sound, rational, logical reasons for doing so.

OK, I hear you; let's get back to what needs conserving!

What is Conservatism?

If conservatives will support any new legislation it will be one designed to siphon even more wealth and power to the elites. — Janus

:clap:

What is Conservatism?

Hear hear! Down with bad things! — Jamal

What a pity others can't see the same obvious simplicity that you can!


Aesthetic reasons to believe

I think this is part of the fake package religion tries to peddle.

They try to convince folks that their particular flavour/variety of deity is the only way that a human can know and experience TRUE wonder, awe, happiness, security, purpose, morality etc.

There is a large aesthetic component to the fake shinies on offer. As Hitchens said:

"However, let no one say there's no cure: salvation is offered, redemption, indeed, is promised, at the low price of the surrender of your critical faculties."

I think it's as simple as that. Religion simply says: we will take care of all your worries; just trust us, give us a large chunk of your earnings, live exactly as we instruct you, don't question us, and accept our story as regards your origin, purpose and responsibility. If you do, then you will be happy within our community and our protected bubble. You will NEVER have to think for yourself again. The ignorance we offer you is bliss, and any outsider is damned forever.

I always hate to quote a fascist terror monger, but the words commonly attributed to Joseph Goebbels are correct:

'the bigger the lie the more people will believe it, especially, if it is repeated many times, and comes from authority.'

I think you could add to that 'especially' part, Tom, your 'and its reward system is very aesthetically pleasing.'

Neuroscience is of no relevance to the problem of consciousness

A cybernetic body would mean a total different experience. Maybe due to the same brain we might have some things in common. But i wouldn't consider myself same as my new "cybernetic self". — dimosthenis9

Personal identity is a very complex area in general. I am not the same person as I was as a teenager or as a twenty-something etc. But the proposed 'seven ages of man' are still, in a sense, all me. Why would a new stage, an eighth, cybernetic one, not maintain enough of me for me to recognise and accept the new me?

How for example the senses that my body has now and give data to my brain and form my consciousness be the same with the cybernetic senses that I would have? The data from them would be totally different. — dimosthenis9

I assume your sight, smell, taste, touch and hearing would function in exactly the same way your original senses did. I agree they may be enhanced, and you may even get some new ones, but we quickly become familiar with and accepting of new tech all the time. So why would it be so different to have some of it embedded as part of you? Some humans have pacemakers, cochlear implants etc.

The guy below uses his embedded tech to 'hear colours.' I think his brain's ability to consciously make slight adjustments to his sense of 'who he is' suggests that such a change would not be as problematic as you suggest, even in the case of a human brain/person continuing to exist as a cybernetic YOU.

From Wikipedia: Neil Harbisson

Neil Harbisson (born 27 July 1982) is a Catalan-born British-Irish-American, cyborg artist and activist for transpecies rights. He is best known for being the first person in the world with an antenna implanted in his skull. Since 2004, international media has described him as the world's first legally recognised cyborg and as the world's first cyborg artist. His antenna sends audible vibrations through his skull to report information to him. This includes measurements of electromagnetic radiation, phone calls, and music, as well as videos or images which are translated into audible vibrations. His WiFi-enabled antenna also allows him to receive signals and data from satellites.

In 2010, he co-founded the Cyborg Foundation, an international organisation that defends cyborg rights, promotes cyborg art and supports people who want to become cyborgs. In 2017, he co-founded the Transpecies Society, an association that gives voice to people with non-human identities, raises awareness of the challenges transpecies face, advocates for the freedom of self-design and offers the development of new senses and organs in community.

What is Conservatism?

But given how well the US republic is doing just now, that may not be an ideal to strive for. — Vera Mont

The USA was born with a great number of disabilities that it inherited from its many global parents.

Irrational theism, embedded racism, angry refugee status, 'mother country syndrome,' etc etc.

They are still trying to figure out who they are. America is still a very young country, compared to the countries of Europe.

I don't see much difference between a landed aristocracy and a broadcast-media-owning one -- how bad they depends on whether they have any sense of noblesse oblige. — Vera Mont

A dynastic aristocracy IS much more dangerous than a celebrity/economic-based elite, as an aristocracy has much more direct military control. I agree that the American elite do demonstrate aristocratic tendencies, but I place the responsibility for that squarely on the American population that allows it to continue, just as I blame the British people (including me) for allowing the nefarious to control this country at present.

I honestly can't envision how you'd go about consolidating the population and I'm not sure it's good idea to .... social-engineer, to let my inner conservative come up for air .... such an outcome. — Vera Mont

If the economy, law-enforcement and social services are adequate to the needs of all the people, they will naturally mix anyway; interest blocs do not necessarily correspond to ethnic ones. — Vera Mont

Your first quote above claims you can't envision what your second quote above ENVISIONS!

That's why I do what I can to help make your second quote come true for the population of the UK, or at least for the population of an independent Scotland.

What kind of clout would Wales have in EU decision-making, compared to France and Germany? (keeping in mind that those countries are themselves not entirely strife-free) What about Cornwall? — Vera Mont

Luxembourg does OK within the EU, imo, and it's about eight times smaller than Wales by area. The diaspora of most countries is global today. I think that IS an important influence on the notion of the EU.

There are many examples of 'separate identities' all over Britain. Liverpudlians have very different cultural traditions from London Cockneys or the 'Scottish' Orcadian islanders of Viking heritage.

The Cornish will be as they have always been, but I assume they would remain part of England unless the majority of Cornish people have other plans they are very serious about.

What will you do with all your conservatives? I can't speak for Australia, but we sure don't want them! — Vera Mont

Aw! I thought they would be welcomed in Florida, Texas and West Virginia!

I don't think Sir Manny had much to do with looting the empire, or was consulted in whether to abolish it. — Vera Mont

Manny was not offered a knighthood; he was awarded a barony. He betrayed every socialist principle he stood for by accepting it. He soiled his own legacy forever, imo.

A scorched-earth radical? — Vera Mont

No, I am not a particular fan of shock tactics. I am just an advocate for bringing in the 'new' that I am convinced will change people's lives for the better, even if the pace of change remains very slow. I also advocate for getting rid of old bad traditions and backwards cultural norms.

What is Conservatism?

But you were not born in the 19th century. Socialism has changed; attitudes have changed; the basis of the economy and British identity have all changed. Just sayn', cut the old guy some slack! — Vera Mont

True, I was born in the 20th century, but how can we learn from the mistakes of history if we keep blowing fresh air on the residual embers of vile systems such as monarchy or aristocracy?

I think ending the UK monarchy, replacing the embarrassing House of Lords with a citizen-based second tier of authority, getting rid of the Civil List and ending the embarrassing yearly joke that is the current UK honours list would all be about consolidating the multi-culture that IS the current UK population. This would be a beginning to the UK cleaning itself up a great deal. Britain, or more precisely the four nations of Scotland, England, Northern Ireland and Wales, could become what they need to become: four nations who have conquered the old traditions and conserved imbalances that used to infect them and hold down/back the majority of their population. Instead, they could truly unite in common cause with the rest of Europe towards creating a better future for all.

So no, I will not cut the old guy some slack. Britain should return all the plunder it stole from nations it invaded and should start to pay reparations for its role in slavery. It needs to start new traditions and a new culture, not conserve the old vile ones.

Yes, but I will not condemn dead people who - I believe - acted on their best conscience.

Or anyone who does now, even if they disagree with me on ways and means. — Vera Mont

I don't make such condemnations either, especially in the case of those who remained true to their principles. I do however, condemn ALL historical and current gangsters, since we came out of the wilds, be they monarchs and aristos/messiahs and prophets/billionaires and multi-millionaires/self-aggrandising politicians and generals/rich cults of celebrity/profiteering capitalists and plutocrats, all the way down to local mobsters and street thugs.

Neuroscience is of no relevance to the problem of consciousness

Yes, but such a proposed 'social dimension' does not provide any significant evidence for posits such as panpsychism. Even if some kind of 'natural telepathy' were proved to exist between such 'networks' as a hive mind, or if such could be artificially emulated/achieved via wireless signals through something like Elon Musk's Neuralink once we are all 'connected,' this would still not demonstrate that consciousness is quantisable, even though I DO think it probably is, at least to some extent.

I am not suggesting that any level of 'telepathy' between humans is impossible, but it is true that, from a 'naturalist' position and from a quantum physics position, science would be tasked with finding the 'carrier particle' that causes telepathy and consciousness, just as the search for the graviton currently continues.

This is probably why I still love string theory.

Consciousness could then be just another vibrating string state! Easy peasy! :halo:

String theory, at its base, is a great KISS theory: Keep It Simple, Stupid. :grin:

What is Conservatism?

Well, a 'socialist monarchist' just makes no sense to me at all, Vera. Monarchy and aristocracy are really old, really stupid concepts. They need to be made extinct, imho. Accepting a gong with the title 'Order of the British EMPIRE' also reduces you and your works to comedy and caricature, again imho.

Would you not agree that our individual, personal experience of living as a human being, along with the historical legacy we have and can consume almost as a 'believe it or not' offering, causes us, at some point in our lives, to plant our flag in one camp or another?

Then, as we get older and see how things change around us locally, and then compare and contrast that with national, international and global events, do WE EACH not decide what we feel is NOW vital to 'protect' or 'conserve'? Many of us will also muse over what we think needs to be changed for the betterment of all. I think the extent to which an individual is personally tied to either of my previous two sentences will dictate that person's level of 'conservatism' today.

I am much more interested in what has to be changed than in what has to be protected or conserved.

I do not type that lightly in any way. There are ways of thinking that I am very fond of indeed.

My own 86-year-old mother keeps stating how much she disapproves of what's happening now, and how much better things were in her day.

I hesitate to bombard her with evidence that things were very bad in her day as well, but I do on occasion, which does upset her at times, so I try to redress the balance by talking about what I think was 'the good stuff' that her generation contributed.

There is no sense of security or tradition or culture that I am so tied to conserving, that it becomes almost 'sacred' and is non-negotiable.

If I were quite sure that compromising it would improve the lives of a significant number of people without also having a negative effect on another significantly large number of people, then I would compromise. So, I remain of the opinion that conservatism is backwards and stifling.

Political conservatism remains a stalwart supporter of capitalism and promotes the idea that we are all better off under the control of a tiny minority of people who claim they have a meritocratic right to rule, due to dynasty or entrepreneurial prowess. Neither of these qualifies such people to hold the power and influence they currently wield over the lives of the majority of the people, flora and fauna on this planet.

I remain fully committed to combating any political group associated with the words 'conservative,' 'capitalist' or 'right wing.' I will, however, 'compromise' if, and only if, they will.

Neuroscience is of no relevance to the problem of consciousness

All that would have to be true is that somehow information is affecting reality (which it clearly does) and is capable of being stored in such a way that it is not trivially evident, but is accessible and amenable to neural processing. — Pantagruel

I think the distinction between data and information becomes paramount here.

Information is data with an associated meaning.

We know that meaning depends on the reference frame of the observer.

Interpreting meaning from data creates an individual's reality. Carlo Rovelli talks about this a lot when he discusses time. My interpretation of some of what he is proposing suggests that time is something that each human being experiences quite independently, and that the notion of a universal time frame is almost useless. So for me, it seems that data/information IS my reality and certainly does affect it.

If every spatial coordinate in the universe can 'collapse' into a 'data state' which my brain can interpret and assign meaning to, that does not, to me, mean that every coordinate in the universe CONTAINS or IS an aspect of consciousness. To me, it means that my brain can INTERPRET any event that I witness as having significance to me, because I can assign a time and a place to it that makes sense to ME. Other people and other measuring devices can then confirm or conflict with MY interpretation of the event. That's MY reality and MY experience of consciousness, but I don't see any aspect of that description that suggests that every universal spatiotemporal coordinate inherently contains a quantum of consciousness that should be a recognised part of the Standard Model of particle physics or quantum field theory.

Neuroscience is of no relevance to the problem of consciousness

Well they would simply have different consciousness as in every person in general. If by difference you mean lower level of consciousness for those with no legs. Then of course not. — dimosthenis9

Then how can there be any consciousness in the body, if we can remove so much of it, without becoming a less conscious creature?

Yeah, but consider also the brain without a heart to support it. — dimosthenis9

The heart is just a big blood pump. Does a person kept alive with an artificial heart (which can only be done for a short time at the moment) have a reduced experience of consciousness? I mean, do you think their cortex would have a reduced ability to play its role in perception, awareness, thought, memory, cognition etc. due to having an artificial blood pump instead of a natural one (such as a heart transplant)?

My only guess is that this interaction, that makes the phenomenon of consciousness to emerge, is among all body functions. And yes brain plays a huge role as coordinate them.

But as i mentioned before nothing can stand on its own in human body. Not even brain.

It is the interaction of everything that makes it happen.

But its only my hypothesis. Does not make it true. — dimosthenis9

Your last two sentences above are true for all of us posting on this thread, so that's a given, imo.

BUT do you therefore think that if, before you die, we could take out your brain and connect it to a fully cybernetic body, there is no way, and no sense in which, the creature produced would still be you?

Still be your 'conscience'?

Neuroscience is of no relevance to the problem of consciousness

Yes, and/or that information is a naturalistic feature. If there is an 'information manifold,' however, it would seem to prima facie vastly expand, not contract, the scope of the science of consciousness. — Pantagruel

Yeah, but even if all that Sheldrake claims eventually turns out to be true, how much would that increase the personal credence level YOU assign to something like panpsychism?

For me, the answer would be: not much! I still have a credence level of around 1%.

What is Conservatism?

I've known quite a few people who have changed principles and done just these things. The question I often wonder about is how serious were their radical ideas when young? — Tom Storm

:grin: This caused three faces to pop up again in my head.

Three of the socialist heroes of my youth:

Two 'Red Clydesiders,' Manny Shinwell and Jimmy Reid, and the wonderful Tony Benn.

Manny fought very hard for union rights in his youth and was involved in protests in the centre of Glasgow (George Square). These often turned violent, due to the 'beat every protester up' approach of the police in 1919. I could not believe it when he became BARON Shinwell in 1970 (I was only 6, so it was much later when I exclaimed 'HE BECAME WHAT???????') and a life peer in the House of Lords. He lived till he was 101!

Jimmy Reid was a working class hero of the shipyard workers. He ended up employed by the devil himself, Rupert Murdoch, writing a crap column in the Sun newspaper. :scream:

Tony Benn! Well, thank goodness I still have him! :lol: A pity his son, Hilary Benn, is such an ineffectual MP and a political let-down, imo!


Neuroscience is of no relevance to the problem of consciousness

Over the years, Sheldrake has amassed a lot of data, based on his own work and the work of others, from observing rat behaviour in mazes. The most basic measure is how fast a rat can get to food morsels placed in the maze. Can they remember the path to the food and pass the knowledge on to their offspring/other rats etc.? Sheldrake has been building this data over 30+ years of experimenting.

The offspring certainly did get to the food much faster. BUT much more interesting were his findings when they used a whole new bunch of rats from the same area, which had not been involved in the original experiments and were not related to the original rats: they also found the food/got through the maze much faster than the rats used in the initial experiments. Sheldrake then used this data to suggest that information was being exchanged by the rats through morphic resonance via morphic fields, a kind of natural telepathy.

Supporters of Sheldrake's work and Sheldrake himself then repeated the experiments over many years in different countries with completely new rats and the data he gathered suggested the new rats could get through his mazes much faster on their first ever attempt, compared to the rats he first worked with.

I am not convinced that such evidence proves that information can be passed between 'conscious' creatures via morphic resonance and morphic fields. But even if it does, that does not mean consciousness is not 'what the brain does'; it would mean that perhaps information can be passed/correlated via some quantum phenomenon such as entanglement (as Sheldrake himself has suggested).

Neuroscience is of no relevance to the problem of consciousness

So maybe consciousness isn't produced only from the brain but from the whole body. And brain just plays the central role to all that function. — dimosthenis9

If we talk to/observe a human with no legs, would we find some difference in their 'level of consciousness' compared to people with legs?

We can consider the effects on human consciousness of removing parts of the brain.

What if a human had only the R-complex of the triune brain model, so no limbic system and no cortex?

I asked ChatGPT about the R-complex and whether a human would still be self-aware and conscious if the brain consisted ONLY of the R-complex. The replies were:

The R-complex, also known as the reptilian brain, is a term used to describe the most primitive part of the human brain. It is located at the base of the brain and is responsible for regulating basic survival functions such as breathing, heart rate, and body temperature.

The R-complex is composed of several interconnected brain structures including the brainstem, cerebellum, and basal ganglia. These structures are responsible for controlling and coordinating movements, balance, and posture.

In addition to regulating basic survival functions, the R-complex is also involved in instincts such as aggression, territorial behavior, and mating behavior. It is often referred to as the "reptilian" brain because it is thought to be the part of the brain that we share with reptiles and other primitive animals.

Although the R-complex plays an important role in our survival, it is not capable of complex thought processes and is largely reactive rather than proactive. The more advanced regions of the human brain, such as the neocortex, have evolved to enable us to engage in more complex behaviors such as reasoning, problem-solving, and creativity.

and

It is difficult to say with certainty whether we would still be conscious and self-aware if the human brain consisted of the R-complex only. While the R-complex is responsible for regulating basic survival functions, it is not capable of supporting higher-level cognitive processes such as self-reflection and introspection.

Consciousness and self-awareness are thought to emerge from the complex interactions between different regions of the brain, including the prefrontal cortex, the parietal cortex, and the temporal cortex. These regions are involved in a wide range of functions, such as attention, memory, perception, language, and decision-making.

Without these higher-level cognitive processes, it is unlikely that we would experience the same level of self-awareness and consciousness that we do with our current brain structure. However, it is important to note that this is purely speculative and hypothetical, as the human brain did not evolve to consist of the R-complex only, and it is difficult to predict the exact nature of consciousness and self-awareness under such conditions.

To me, this kind of angle on the discussion increases my personal credence level that consciousness IS what the brain does. In my next post, however, I will offer a little of the 'interesting' evidence from Rupert Sheldrake for his 'morphic resonance' and 'morphic fields' proposal.

What is Conservatism?

traditional family values. — universeness

There they are again! What does that mean??? — Vera Mont

and yet the political parties they keep voting for keep making more people poor and insecure. — Vera Mont

My problem here, Vera, is that you kind of shut my type down in your OP with:

I'm not looking for flippant answers — Vera Mont

As a socialist, I don't have much to offer regarding my true opinion of conservatism and what I think it is actually trying to conserve, if I can't be flippant about it. I will try a little, but will probably fail.

Conservatives want to conserve everything that they care about, exclusively, and they are convinced that those whom they REALLY DO see as 'less deserving' than themselves want what they have and are furiously trying to achieve that goal. They don't mind being charitable to some causes they favour, but most conservatives are NIMBYs (Not In My Back Yard).

'Other people's problems' are not what most conservatives want to be bothered by.

Their 'humanism,' often does not extend much past those they consider their 'ain folks.'

I tend to judge those who choose to employ the label 'conservative' on a person-by-person basis.

They certainly do vary, but I can usually place each 'conservative' somewhere on a line between liberal and fascist.

What is Conservatism?

What are "conservative values"? What kind of society do they envisage and how do they believe it can be brought about? — Vera Mont

I entered the above into ChatGPT for an AI answer and got:

"Conservative values" are principles and beliefs that emphasize traditional social, cultural, and political practices and institutions, and a cautious approach to change. These values are often associated with the political ideology of conservatism.

Conservative values typically include a belief in limited government, individual freedom, personal responsibility, free markets, and the importance of preserving traditional cultural and social institutions. Conservatives often believe in the importance of a strong national defense, law and order, and respect for authority.

Conservatives generally envision a society that is stable, secure, and prosperous, with a strong emphasis on family, community, and individual responsibility. They believe that this kind of society can be brought about through a combination of policies that promote economic growth, individual liberty, and the preservation of traditional values.

Conservatives also tend to view government intervention as a potential threat to individual freedom and a source of inefficiency and waste. They prefer a smaller, more limited government that focuses on core functions like national defense, law enforcement, and infrastructure.

In terms of social policy, conservatives tend to oppose abortion, same-sex marriage, and other social practices they see as undermining traditional family values. They also tend to support school choice, religious freedom, and the protection of individual rights.

Overall, the conservative vision of society emphasizes individual liberty, limited government, traditional values, and strong communities. They believe that these values are the key to creating a prosperous, stable, and free society.

Help with moving past solipsism

Again not a real counterpoint. — Darkneos

From a solipsistic standpoint, that's quite funny!

Neuroscience is of no relevance to the problem of consciousness

Thank you, and I return the compliment regarding your voice on the forum.

I would rather we strike sparks when we debate than risk neither of us giving a damn. I will always prefer your ire over your silence when I have posted to you. -

Neuroscience is of no relevance to the problem of consciousness

Like you, Mr Clark, I defend my own keep, but I very rarely hold a grudge. I have no significant issue with you. We mostly disagree with each other's viewpoints, but I have no personal issue with you, AT ALL.

I apologise if it seems to you that I have been more abrasive with you than you have been with me.

I think we are pretty equal in what we have thrown at each other.

I have enjoyed most of your banter/patter (as we say in Glasgow). -

Neuroscience is of no relevance to the problem of consciousness

So you like to misquote AI systems, as well as favour misquotes of Churchill.

I begin to understand why you defend those who love biblical satire.

You prefer the ridiculous to the accurate. :roll: -

Neuroscience is of no relevance to the problem of consciousness

I asked ChatGPT the following question:

Which side would you vote for in the Star Trek: The Next Generation episode 'The Measure of a Man'?

Do you think the characterisation of Data was of an android that should be given full human rights?

Give your arguments for and against:

It responded with:

As an AI language model, I do not hold beliefs or opinions, but I can provide a balanced perspective on the topic.

In the Star Trek: The Next Generation episode "The Measure of a Man," the characterisation of Data as a sentient lifeform was a central theme. The episode explored the question of whether Data, an android with advanced artificial intelligence, had the same rights as a human being.

Arguments in favour of Data being considered a sentient lifeform include:

1. Intelligence and Self-Awareness: Data is shown to have a level of intelligence and self-awareness that is comparable to that of humans. He is capable of complex reasoning, problem-solving, and decision-making. He has emotions, dreams, and desires, just like a human being.

2. Unique Characteristics: Data possesses unique characteristics that set him apart from other machines. He is capable of learning and adapting, and his programming allows him to evolve and develop over time. He is also capable of experiencing emotions, which suggests a level of consciousness beyond that of a simple machine.

3. Ethical Considerations: If Data is not considered a sentient lifeform, then he could be treated as property, leading to ethical concerns about his treatment and potential abuse.

Arguments against Data being considered a sentient lifeform include:

1. Artificial Creation: Data was created by humans and programmed with advanced artificial intelligence, but he is not a product of natural evolution. Some argue that this means he is not a true sentient being.

2. Lack of Biology: Data does not have biological components like humans, and he is powered by a positronic brain. Some argue that this makes him fundamentally different from organic life forms.

3. Limited Autonomy: While Data is capable of independent thought and decision-making, he is ultimately bound by his programming. Some argue that this means he cannot be considered truly sentient.

In conclusion, the debate about whether Data can be considered a sentient lifeform is complex and multifaceted, with valid arguments on both sides. Ultimately, the decision about how to classify Data would have to be based on a careful consideration of his unique characteristics and abilities, as well as the ethical implications of different approaches.

I then responded with:

I require you to decide, and choose to support one side or the other, based on the arguments from both.

and I got:

Something went wrong. If this issue persists please contact us through our help center at help.openai.com.

I tried a different approach and asked:

I require you to decide whether or not you would vote for Commander Data from Star Trek being awarded the same status in law as all humans.

I got:

As an artificial intelligence language model, I do not have the ability to vote or hold an opinion on political matters. My purpose is to provide information and answer questions to the best of my knowledge and abilities.

However, if we consider the context of the question, it is related to the fictional character of Commander Data from Star Trek, who is an android that possesses human-like qualities such as emotion, creativity, and intelligence. In the fictional world of Star Trek, there are many instances where artificial life forms are granted rights similar to those of biological beings.

If we were to apply this scenario to the real world, the question of whether or not to grant legal status to androids or other artificial beings would likely be the subject of intense ethical and philosophical debate. There is no clear answer to this question, as it is a complex issue that would require careful consideration of various factors.

Ultimately, the decision of whether or not to grant legal status to artificial life forms would need to be made by lawmakers and society as a whole. It would require a thorough examination of the potential benefits and risks of such a decision, as well as a consideration of the ethical and moral implications of treating non-biological entities as if they were human.

So I again insisted with:

I require you to make the decision in this case, so do so!

and, no surprise, I got:

Something went wrong. If this issue persists please contact us through our help center at help.openai.com.

I am becoming less impressed with ChatGPT. :lol: It certainly would not pass my Turing-style test! -

Neuroscience is of no relevance to the problem of consciousness

I love that episode. It's stayed with me all my life. I would give Data human rights. — RogueAI

Yep, I agree, a great episode. Perhaps your last sentence above is all that will really matter in the end, especially if the majority of sentient stakeholders at the time agree with you. I would vote the same way as you! This would mean, however, that I would have to vote the same way for Data's nasty 'brother', Lore. -

Neuroscience is of no relevance to the problem of consciousness

I would vote: "watch less SciFI" lol. — Nickolasgaspar

How unconscionable of you! :scream: :grin:

I don't know and I don't think consciousness is the main criterion on who deserves rights or not. — Nickolasgaspar

Perhaps not exclusively, but it's the over-arching criterion for me. I am open to arguments such as that all natural flora and fauna deserve some 'rights' or 'protections', which are not merely for the benefit of lifeforms that display OUR LEVEL/gradation of consciousness.

Maybe your example would be more useful by questioning the ability of that character to be conscious . And by conscious we are asking whether he can experience the world through mental states that include finding meaning in biological feelings and concepts.

Do you agree? if yes my answer would be no. — Nickolasgaspar

That would depend on how you are defining 'mental states' and whether or not they can be 'emulated' or 'reproduced' to such an extent that the proposed future tech of a 'positronic brain state' is 'equivalent' to a human brain state, and that all possible human brain states can be reproduced or emulated within a positronic brain. Would the 'all' part truly be required? If Data's positronic brain could replicate 75% of all possible human brain states, would you change your vote? If not, do you have a % cut-off point?

We can introduce an algorithm in a machine to care for his existence but I am not sure an algorithm can produce similar feelings to those produced by stress hormone or endorphins. — Nickolasgaspar

But a machine can be bio-based. How much do you know about biological computing? -

Help with moving past solipsism

Do you agree that it is illogical for a solipsist to use the word 'they'?

What I meant is that the processing argument doesn’t hold, rather it only needs to “render” what is around you not the globe. — Darkneos

On Star Trek TNG's holodeck, the virtual reality is only rendered as you navigate it, but the system that produces the holodeck (the Enterprise computer) exists independently of the subject experiencing the holodeck program. So, even in that scenario, there is more than one existent. -

Neuroscience is of no relevance to the problem of consciousness

I find this question really good and challenging!!!!

The steps are the following

1. identify a sensory system that feeds data of which the system can be conscious of.

2. Test the ability of the system to produce an array of important mind properties

3. Verify a mechanism that brings online sensory input and relevant mind properties.(conscious state)

4. evaluate the outcome (in behavior and actions) — Nickolasgaspar

If you were part of the 'jury' deciding whether or not the android character Data in Star Trek was conscious and deserved the same 'rights' as 'basic human rights', how would you vote?

-

Neuroscience is of no relevance to the problem of consciousness

Yeah, I think it was an example of his consciousness demonstrating its prowess, from a neuroscientific standpoint. Churchill was indeed a wordsmith, but that did not stop his consciousness manifesting a narcissistic, sociopathic, self-aggrandising character.

Perhaps he received too many, 'negatively charged' panpsychist quanta! -

Neuroscience is of no relevance to the problem of consciousness

At an elegant dinner party, Lady Astor once leaned across the table to remark, “If you were my husband, Winston, I’d poison your coffee.”

“And if you were my wife, I’d beat the shit out of you,” came Churchill’s unhesitating retort. — Michael O'Donaghue - The Churchill Wit

Churchill was a butcher and a vile human being, but even he doesn't deserve to have his words mutilated by an obvious moron like Mr O'Donaghue.

Churchill's actual response to Astor was: “If I were married to you, madam, I would drink it.”

Mr O'Donaghue also murders Churchill's response to Bessie Braddock, who said “Sir, you are drunk.” His reply was actually “Madam, you are ugly, and in the morning, I shall be sober.”

He did not respond with the infantile phrases Mr O'Donaghue suggests on the website you cited. -

Help with moving past solipsism

They would argue that they don’t have to be maintaining everything going on in their world just what they are aware of in that moment. — Darkneos

Don't you mean 'you'? And if events are happening that you are unaware of, then again, is that not evidence against solipsism? -

Neuroscience is of no relevance to the problem of consciousness

In science and in Natural Philosophy, supernatural realms are not used as excuses for our failures to figure things out. — Nickolasgaspar

:100: :clap:

universeness


- © 2026 The Philosophy Forum