-

Is 'information' physical?
What I’m tempted to believe, is that those ‘formal constraints’ can be conceived as being latent possibilities that become actualised by evolutionary processes. — Wayfarer

Yep. That is structuralism. It is why maths of that ilk - the maths of symmetry - has proved so unreasonably effective in physical theory.

So plenty of different traditions of thought get it. -

Is 'information' physical?
there is a world of difference between hylomorphic dualism... — Wayfarer

Yep. But then also, hylomorphism is essentially triadic. The actuality of substantial being arises out of formal constraint on material uncertainty.

So what Pattee argues is this triadic story. Life and mind have substantial being as a result of the biological interaction between information and dynamics. -

Is 'information' physical?
Killer argument for dualism, in my view. — Wayfarer

Whatever affects the physical is, at least in part, also physical. DNA, for instance, is physical, no? — 180 Proof

Hah. I just spent the morning on this point.

DNA is necessarily physical, but also as little involved in the physics of the world as possible. A typical human cell carries about 2 m of genetic sequence - several billion nucleotide pairs - packed into a nucleus about 6 microns across. That is a compression ratio of roughly 333,333 to 1.
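Taking the two figures above at face value, the ratio is simply DNA length over the width of the space it is packed into:

```latex
\frac{2\ \mathrm{m}}{6\ \mu\mathrm{m}} = \frac{2}{6 \times 10^{-6}} \approx 3.3 \times 10^{5}
```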

So from the dynamical perspective of physics - real-time interactions in 4D - DNA barely registers as a dot of material. It has basically no dimensions to speak of. It is physical - but in a way that negates what we normally mean by physical.

However, is that a killer argument for a dualism that is in any way unphysical, in the manner this is normally understood by idealists and others?

Well, the whole point of Pattee is about the epistemic cut, the modelling relation. Genetic information is meaningful only insofar as it regulates entropy dissipation. It is information doing work by running down environmental gradients. It is thus a response to the constraints of the laws of thermodynamics. It is local creativity harnessed to the same old general cosmic project of achieving the equilibrium of a heat death. -

Why Was There A Big Bang
the real world has both good & bad properties, from the human perspective, — Gnomon

Math includes both positive and negative values. — Gnomon

This doesn't stack up for me. An organic and immanent metaphysics instead emphasises the complementary nature of symmetry breakings and the reality of chance.

So if there is "good and bad" in the world, this is only a well-formed dichotomy if both sides are part of the one deal.

And if there is "positive and negative" in the world, this is just a simple mirror-level symmetry. It is easily reversed, as it is a symmetry breaking on a single scale of being. So it is not the complete asymmetry of a dichotomy, a symmetry-breaking that itself produces hierarchical scale.

So symmetry-breakings that work - at the cultural level you seem concerned with - would be ones like competition~cooperation. A functional society is one that balances local or individual differentiation against global or general integration. There has to be the creative energy of selfish striving, and there has to also be a general social project that is the context and meaning for that individual freedom.

To talk of "good and bad" is way too simplistic - a dualism that wants to be reduced to a monism.

If you can't see the need for both sides of the equation - as you can with the matched and yet asymmetric social qualities of competition and cooperation, differentiation and integration - then you aren't thinking in sufficiently fundamental terms.

In maths, positive and negative add up to nothing. If you go left, you can go right, and end up back where you started. Unless your symmetry-breaking produces scale, every difference becomes just a self-annihilating fluctuation.

This is why the Big Bang needs some hidden asymmetry in its particle production. It has to make a difference whether a particle is left-handed or right-handed; otherwise particles are produced and just as fast annihilated.

So we know from good argument that reality can only arise via proper dichotomies - ones that result in scale asymmetry. Each side of a pair is defined by it being as far away or unlike its "other" as possible. And yet also, that makes both equally necessary as each is the ground to its other.

Your argument falls apart before it gets started if it is couched in merely anti-symmetric terms like positive-negative and good-bad.

Since Reason, Character, and Emotion are characteristics of our world, specifically the Cultural aspects instead of the Natural properties, the First Cause must have possessed the Aristotelian Potential for those same qualities. — Gnomon

Biology is characterised primarily by the functional dichotomy of competition~cooperation. It is "reasonable" - in the Peircean sense - that life has hierarchical organisation. It is divided by the asymmetry of being locally spontaneous or individualistic, and globally cohesive or interdependent.

Even bacteria form biofilms. The planet's climate is regulated by a balance of photosynthesis and respiration that maintains a liveable atmosphere. So from top to bottom, over all scales, life is based on the "goodness" and "reasonableness" of being balanced by its two opposing tendencies.

Aristotle got it to the degree he stressed hierarchical order and the unity of opposites.

But you are taking things back to a simplistic religious framing that just accepts there is a problem of evil, or a problem if a creator isn't the determiner of every detail.

These are just problems if your metaphysics is stuck at the level of symmetry-breaking or dialectics which only thinks in terms of a single scale of being - a world where every left is matched by its right.

A more complete symmetry-breaking produces asymmetric scale or hierarchical order. You arrive at a local~global story where two opposites anchor the two extremes of scale. It then becomes clear that both extremes are necessary for there to be anything at all. As conflicting impulses, both are equally necessary.

Once you have developed your metaphysics to that point, all the causality is within the model. You have arrived at an argument with self-organising immanence.

As a result of programming a Singularity with design parameters (laws & initial conditions), a prolonged process of Evolution began, and will have an end. The End will be the output of the program. And, due to the inherent randomizing uncertainties, presumably even the Programmer does not know exactly what the Final Answer will be — Gnomon

But talking of a programmer immediately makes chance a big metaphysical problem. Computers are deterministic devices. Chance doesn't even enter the story. And to claim some "swerve" to introduce uncertainty is a patent act of desperation.

So it is much better to argue like Peirce and other organicists. Chance and necessity become the complementary extremes of Being - the two poles that unite to arrive at the balance that is actuality.

And this is what physics argues about the Big Bang. Chance is real in nature as quantum indeterminism. Necessity is also real as the constraints of a decohering thermal structure. At the Planckscale, these two contrarieties have exactly the same scale. The universe is as curved as it is hot. The container is indistinguishable from its contents. But an instant later, the two are already being divided in their opposing directions by the dichotomy of cooling~spreading. The curvature flattens enough that there is some measurable degree of spacetime. The heat spreads enough that there is some measurable degree of localised energy density.
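A standard textbook relation, added here as an illustration rather than taken from the argument above, shows cooling and spreading as two faces of one process. In a radiation-dominated universe the radiation energy density scales as the fourth power of temperature, while expansion dilutes it as the inverse fourth power of the cosmic scale factor $a$ (ignoring changes in the number of relativistic particle species):

```latex
\rho_{\mathrm{rad}} \propto T^{4}, \qquad \rho_{\mathrm{rad}} \propto a^{-4} \quad\Longrightarrow\quad T \propto a^{-1}
```

Doubling the linear size of the Universe halves its temperature.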

So your story predicts neither what physics has figured out about the start of the Universe, nor what sociology has figured out about the organisation of biological collectives.

So, my story can only be judged by its philosophical explanatory power, not by its empirical evidence. — Gnomon

Good metaphysics grounds good science. But even most scientists don't understand why they wind up where they do. That is why quantum indeterminism, or human altruism, become the scientific version of the old religious puzzles like the problem of evil, or the problem of God's omnipotence.

Everyone's metaphysics must divide the world somehow. Symmetries must be broken to get any kind of reasoning started.

But what we see is that most folk get stuck at the first step - a symmetry breaking that only speaks of two directions at the one scale of being. Go left, or go right. Add more, or subtract to get less. Say yes, or say no.

Productive metaphysics instead continues on from this kind of "dualism yearning to be monism" to a fully-broken dichotomy - one with the asymmetry of a hierarchical or triadically-developed scale. The division has to be complementary - mutually exclusive/jointly exhaustive - so that all its causes are to be found within it. No need for transcendence. -

Why Was There A Big Bang
So, Transcendence wins by a mile. Yet, it is still Structuralism. :nerd: — Gnomon

Why would the Big Daddy in the Sky go to all the trouble of pre-arranging an anthropically structured Big Bang that takes 13 billion years to eventually deliver the fleeting blip of a biofilm on some random chunk of real estate?

Creating it in a week, complete as a Garden of Eden, makes more sense if you want to talk probabilities.

And the Las Vegas odds, of such a cosmic-coincidence-of-initial-conditions occurring in finite time (in eternity anything possible must happen), are a bad bet. — Gnomon

Remember that the claim of the Standard Model is about there being a very limited variety of mathematical symmetries for nature to pick from. And indeed, ultimately, just the one final one that is the simplest.

If you want to make an argument here, you need to argue against the odds of wheels being circular. And I note, you carefully avoided trying to argue against that. -

Why Was There A Big Bang
Many things we once considered brute facts have turned out to be explained by even more fundamental forces and particles. The onion keeps being peeled back. A lack of ability to progress in explanation does not mean there is no deeper explanation. — Count Timothy von Icarus

But if you study particle physics, you will find this isn't how it works.

We happen to now live in an era where the Cosmos is ruled by its mathematically simplest possible symmetry - the U(1) of electromagnetism.

And when we wind back to recover earlier higher symmetry states, like the SU(3) of the Big Bang's quark-gluon plasma, we see how everything we love and hold dear - all that "matter" and all that "void" - dissolves into a hot confusion of nothing very definite at all.

Keep winding the clock back to the Planckscale and all useful particle structure or spacetime geometry goes out the window. The maths of symmetry itself dissolves. There is a lack of constraint of any kind once you get out beyond 24-dimensional SU(5), or 248-dimensional E8, symmetry stories.
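The dimension counts quoted here can be checked in a few lines. This is a sketch using the standard formula dim SU(n) = n² − 1 and the usual explicit construction of the E8 root system (dimension = number of roots + rank); the function names are made up for illustration. It only counts generators and says nothing about which group nature actually uses.

```python
from itertools import combinations, product

def dim_su(n: int) -> int:
    """Dimension of SU(n): the number of independent generators, n*n - 1."""
    return n * n - 1

def e8_roots():
    """Build the 240 roots of E8 in the standard 8-dimensional coordinates."""
    roots = []
    # 112 roots: two entries equal to +/-1, the other six zero
    for i, j in combinations(range(8), 2):
        for si, sj in product((1, -1), repeat=2):
            v = [0.0] * 8
            v[i], v[j] = float(si), float(sj)
            roots.append(tuple(v))
    # 128 roots: every entry +/-1/2, with an even number of minus signs
    for signs in product((0.5, -0.5), repeat=8):
        if sum(1 for s in signs if s < 0) % 2 == 0:
            roots.append(signs)
    return roots

def dim_e8() -> int:
    """Dimension of E8 = number of roots + rank (which is 8)."""
    return len(e8_roots()) + 8

# Standard Model gauge group SU(3) x SU(2) x U(1); U(1) contributes one generator
standard_model = dim_su(3) + dim_su(2) + 1

print("SU(3):", dim_su(3))
print("SU(5):", dim_su(5))
print("E8:", dim_e8())
print("SU(3)xSU(2)xU(1):", standard_model)
```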

So modern physics rests on concrete knowledge of Lie algebra. The brute facts here are Platonic. :smile:

The onion is tightly structured when its dimensionality is as strongly limited as it is possible to imagine - when all dimensional possibility has been crunched down to just a 3D realm in which the U(1) of EM is present as the concrete limit. But then wind back from that final destination by adding back dimensionality and all that tight structure begins quickly to come undone.

Like leaving town for the country on a dark night, you soon pass by the bright-lit city limits with all its neat regularity of 24 and even edge-of-town 248 dimensional maths. Occasional flashes of sporadic simple groups light up the darkness like truckstops, but even those become increasingly rare.

Travel forever and you may even reach the Monster group.

But the point is that the onion fast runs out of layers to be peeled as its dimensionality expands so fast that it becomes an entirely different kind of thing - a beetroot perhaps. :grin:

And likewise, talk about the Big Bang has to give up on overly concrete notions like spacetime and matter. A 4D vacuum filled with a quantum foam is about where things begin to start.

Beyond that, there ain't even enough dimensionality to constrain anything in a useful fashion. Everything is too curved or disconnected to be part of any larger coherent sense of structure.

So every philosophical debate about the creation of the Cosmos starts by taking stuff for granted that science and maths already tell us we shouldn't be taking for granted. Beyond the Big Bang, concrete dimensionality and materiality have already left the room. -

Why Was There A Big Bang
But he seems to be in favor of “transcendental framing” of the FreeWill question, — Gnomon

He means that life and mind transcend their worlds by being organisms with an intentional point of view. They are in a semiotic modelling relation which puts them "outside" the material world they have a need to control.

So no. He doesn't argue for a spooky dualist transcendence. He is just talking about how organisms transcend their environments by being in a modelling relation with them.

An organism has choices due to genes and neurons. Humans have even more choices due to linguistic and numeric habits of thought.

So, you agree that the ultimate source of “habitual” [regular, reliable] behaviors, rather than acquired in the process of evolution, could be inferred as laws of nature [necessities] that predate the Bang. By that I mean, if-then instructions for system operation that were programmed into the seed (Singularity) of the Big Bang? — Gnomon

I wouldn’t use that computer jargon. My argument is structural. Probabilistic systems go towards their equilibrium states.
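A standard toy illustration of a probabilistic system relaxing to equilibrium is the Ehrenfest urn model, swapped in here as an assumption rather than anything from the discussion. The sketch uses a "lazy" variant (each step, with probability 1/2 nothing happens, otherwise a randomly chosen ball switches urns) and evolves the exact probability distribution instead of sampling, so it is deterministic. Starting with every ball in one urn, the distribution settles onto the binomial equilibrium; no instruction in the update rule mentions that end state.

```python
from math import comb

def lazy_ehrenfest_step(p):
    """Advance p[k] = P(k balls in the left urn) by one step of the lazy chain."""
    n = len(p) - 1
    q = [0.0] * (n + 1)
    for k, pk in enumerate(p):
        q[k] += 0.5 * pk                        # lazy step: nothing moves
        if k > 0:
            q[k - 1] += 0.5 * pk * k / n        # a left-urn ball is picked, moves right
        if k < n:
            q[k + 1] += 0.5 * pk * (n - k) / n  # a right-urn ball is picked, moves left
    return q

def relax(n_balls, steps):
    """Start with every ball in the left urn and run the chain for `steps` steps."""
    p = [0.0] * n_balls + [1.0]
    for _ in range(steps):
        p = lazy_ehrenfest_step(p)
    return p

N = 20
p = relax(N, 500)
equilibrium = [comb(N, k) / 2**N for k in range(N + 1)]
gap = max(abs(a - b) for a, b in zip(p, equilibrium))
mean = sum(k * pk for k, pk in enumerate(p))
print(f"max deviation from binomial equilibrium: {gap:.2e}")
print(f"mean number of balls in left urn: {mean:.4f}")
```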

That “duh, everybody knows about heat death” conclusion came as a surprise to Einstein, who assumed a stable and eternal universe in his calculations. And only when faced with contrary evidence, was forced to rename his Cosmological Constant as what we now know as Dark Energy. — Gnomon

Interesting version of the history.

To me, that “explanation” is what he is arguing against -- saying “they come to look less like explanations than descriptions". In other words, describing the effect is not the same as explaining the cause. — Gnomon

I don't believe you followed what he says. But then, I don't think Tallis is that hot a writer either.

Those who prefer to call those dependable regularities “habits” are implying that they could have been otherwise. — Gnomon

Nope. The structuralist view is just arguing that the regularities of nature are immanent rather than transcendent. They emerge from the chaos of possibility as structural inevitabilities, rather than being God-given laws that animate matter.

But how would they know that, except by re-running the program of evolution several times to see if each execution followed the same basic path. — Gnomon

It's like when Og invented the wheel. Re-run history as often as you like. Let the whole tribe test every geometric possibility. The story always comes out the same in the end. Wheels wind up being circular. Folk wind up getting into the habit of thinking of circles when they want stuff to roll, regardless of whether they have "freewill" or not.

All we know for sure is that Nature seems to be constrained by built-in limitations. So, if you imagine a reality with different constraints you will be dealing with imaginary “woo”, rather than with Reality as we know it. — Gnomon

I'm not arguing that different realities are possible. As a structuralist, I am instead saying our own Big Bang universe is very likely to be the only one of its kind as a consequence of the "strong structuralism" principle.

Maybe you might want to argue for worlds based on other symmetry breakings than the SU(3)xSU(2)xU(1) of the Standard Model. But maybe also, these just are the only series of phase transitions by which a material existence could emerge - one that, Og-like, had to fiddle around with triangular wheels, and square wheels, before arriving at the greatest simplicity of a round wheel.

Can you suggest a simpler baseline gauge symmetry than the U(1) of electromagnetism? The symmetry of a single rotation/translation, or sine wave?
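The symmetry being pointed to fits on one line. U(1) is the group of unit complex numbers, the rotations of a circle by a single angle θ, and composing two rotations just adds their angles:

```latex
U(1) = \{\, e^{i\theta} : \theta \in [0, 2\pi) \,\}, \qquad e^{i\alpha}\, e^{i\beta} = e^{i(\alpha+\beta)}, \qquad \dim U(1) = 1
```

A single real parameter is the fewest a continuous symmetry can have. In electromagnetism it appears as the freedom to shift the phase of a charged field, ψ → e^{iθ}ψ.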

Once you arrive at the universal simplicity of a circle, beyond that you can only aim at the greater simplicity of a circle that is even more circular. Or in other words, there is no beyond. You have arrived at the limit of that kind of "endless" possibility.

The contest here is between two ways of looking at the metaphysics of Being - the transcendent and the immanent. And it is not even a contest. -

Why Was There A Big Bang
Having noted that [natural] "laws somehow act upon the 'stuff' of nature from outside it", and that [natural] "laws are a 'quasi-agency'", he seems to be poking his nose into fundamental mysteries. — Gnomon

He was pointing out how this way of speaking retains a transcendental framing that doesn’t make causal sense.

The error of thought is in thinking that the material world is essentially passive stuff that needs to be made to move. That then raises the question of how particles get moved by “laws”.

But if you switch to a constraints-based perspective - a Peircean metaphysics of habits - then the presumption is that nature starts “in motion” and becomes organised by globally emergent patterns. Temperature falls and “laws”, or the constraints of symmetry breaking, get locked in.

Speaking of "outside nature", how could the Big Bang -- the first stage of an ongoing series -- be labelled a "habit"? Are you implying that it was just another routine step in an eternal cycle of repetitions? — Gnomon

The start would be the least habitual possible state of being. It would thus be the most chaotic, the most vague, and the most symmetric state of being.

This we can know just by rewinding the way things are. The Planckscale gives us this answer. At the Planck temperature and energy density, fluctuations are the same size as the world that is meant to contain them. And talking of a time “before” such a state is like asking about a state more circular than a circle. Time only begins once energy fluctuations become smaller than the spacetime that contains them. It is only with cooling-expanding that the possibility of change, difference and history becomes a reality worth mentioning.

If the universe is prevented, by Entropy, from "ever returning" to its initial state, that means it's a one-way trip. — Gnomon

You mean, the future is the Heat Death? Well, duh.

But, since the BB was indeed a "big deal" for those of us who ask "why" questions, trying to de-mystify the provenance of the BB is an act of Wisdom, not necessarily a slippery-slope to Woo. — Gnomon

But you can only argue this way by rejecting the alternative that Tallis writes about. As I say, if you presume matter is passive and at rest, then a transcendent hand is needed to get it moving. But if instead you presume matter starts free and restless - just a fluctuation - then organisation will emerge simply because fluctuations will all start to interact and collectively fall into constrained patterns. A history of accidents will accumulate in the same way randomly falling raindrops will start to carve the habit of a river in a landscape.

Have you simply misunderstood Tallis here? You are taking the view he critiques.

But, by reflexively labeling all such "before the beginning" questions as Woo or Weirdness, would tar many serious scientists and philosophers with the same brush as the "religious nuts" and "wacko weirdos". — Gnomon

You are quite right that many physicists just talk about the laws of nature as if they were written in the mind of God. Many are indeed believers in creators. Many believe in a time before the Big Bang. Many believe in all sorts of things consistent with transcendental causality.

I agree they are dealing in woo to the extent they remain mired in such an ontology. -

Why Was There A Big Bang
By imposing its will, human nature gains the freedom from natural laws, that allow it to become a guiding agency astride the horse. Thus a Metaphysical Principle rules over the Physical Habits of Nature. Which raises the "dubious" question of who or what was the Lawmaker, Regulator, Selector, Agent, Rider for the powerful Big Bang horse. Is that too woo to be true? :smile: — Gnomon

Aren’t you just re-mystifying the view that Tallis wants to de-mystify?

The Big Bang falls within his description of Natural Habits. Regularity is emergent as symmetries are broken and the general cooling-expansion of the Universe prevents its ever returning to its less organised past.

The quark-gluon soup was a moment of featureless hot generality. It was followed by symmetry breakings that created a world organised by the strong, weak and EM force, that has Newtonian masses moving at slower than c, and so on. The laws of particle physics, then eventually elemental chemistry and code-regulated biology, eventually emerged.

The Universe kept cooling-expanding and further ever-more specified levels of “law” emerged like a rocky shoreline with the tide going out.

So everything is unified as a tale of dissipative structure. Chance becomes increasingly constrained in its forms.

But at the same time, chance is becoming increasingly specific in its form.

The quark-gluon soup becomes a collection of broken-out different forces and particles. You get the possibility of protons and electrons, thus atoms, and thus chemistry as a higher level of dissipative structure.

So from the generality of vanilla chaos, we get the specific randomness of chemistry on the surface of the earth. We have dissipative structures like geothermal ocean floor vents where life can get its own metabolic start.

Thus a planet like Earth is already both severely constrained by an accumulation of cosmic constraints, and yet also left with matchingly definite local degrees of freedom. It is already a highly complex system just with its plate tectonics and atmospheric weather systems.

And then life and mind arise as another level of code-based causality - one both constrained and enabled by that accumulation of physics, chemistry and planetary geology. We have to obey the second law of thermodynamics, but we can also accumulate free energy to spend how we like.

Science simply becomes a way of looking at that situation through the eyes of a culture that wants to understand its reality in terms of the causal levers it can pull, the buttons it can push, to control the material possibilities we find in the world.

So where is the woo? The Universe is organised by thermodynamics. That results in pockets of complexity like a planet. Code-based dissipative structure like life arises within entropy gradients like a thermal vent, and then a photosynthetic flux. Eventually that life becomes organised by higher levels of code such as neurons, words and numbers. It develops a “selfish” point of view that imagines itself as the technological lord of creation.

Big deal. :razz: -

In the Beginning.....
I didn’t say it was discovered there.

But looking forward to your book! Good luck. -

In the Beginning.....
But the ideas, the imagination is what truly counts. Math hides this. — Prishon

Surely maths is what converts the intuitions into actual counting? It reveals the degree to which an idea works …. in terms of numbers to be read off instruments and dials.

I see irony here. Kant says we can’t access the noumenal. The pragmatist nods and says, yes, that is why we have to turn our descriptions of reality into a mathematical theory that takes as its evidence … tallies of marks that some meaning can be read into.

The constraints of phenomenology can’t be broken. But they can be better organised by a shift from everyday language to a rational structure that accepts, in the end, we are only assigning interpretations to numbers on a dial we claim to have accurately read.

I enjoy the confounding fact that science arrives at its realism by way of stringent Copenhagenism. In the end - to speak of the thing in itself - we just have to convince each other in our little circles of rational enquiry that we shared the exact same idea (some equation), and we observed the exact same numerals appear on a dial just as we were led to expect.

Talk about humble bragging!

No one can give a satisfactory account of mass creation (in the sense of saying what actually the math describes; — Prishon

But folk are always trying to provide those kinds of intuitive stories. Like a famous celebrity, a particle would cross a crowded room at light speed if it could. But its celebrity causes it to become entangled in the cloud of interactions with these well-wishers. It has a mass and so its progress is proportionately slowed.

Goldstone bosons eaten up by the gauge field for the weak interaction? No, no. I do not buy that. They could be wrong you know. — Prishon

But how else to explain why the weak force is massive and yet the EM photon flies free … at a massless speed of light?

It could be wrong, as indeed any conception of the noumenal could be wrong. But again, that is another advantage of numbers over words. With the logical structure of mathematical claims, the restriction of all claimed evidence to numbers publicly displayed on the dials of instruments, the mathematically-expressed proposition can just be flatly wrong. Everyone present can point at the dial and laugh at the great embarrassment of the failure of a prediction.

But let folk mess around with words and they can come up with any number of confusions that claim to be “theories”, yet fall short of the dignity of even being able to be wrong.

Words are of course very good at telling truths, or falsehoods, at an everyday social level. As theory and evidence, that is the language game they were designed for.

But science is mathematically-definite claims married to numerically-precise acts of measurement. Agreeing that the appearance of a number on a dial proves a theory and ain’t just a lucky fluke requires another level of statistical super-structure. However, that supports the general contention here.
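As one concrete instance of that statistical super-structure: particle physics conventionally asks for a "5 sigma" excess before announcing a discovery. The sketch below (standard library only; the function name is made up for illustration) converts a significance in standard deviations into the one-sided tail probability of a normal distribution, the chance of a background-only fluke at least that large. At 5 sigma this comes to about 1 in 3.5 million.

```python
import math

def one_sided_p_value(sigma: float) -> float:
    """Upper-tail probability of a standard normal variable beyond `sigma`."""
    return 0.5 * math.erfc(sigma / math.sqrt(2.0))

for s in (1, 2, 3, 5):
    print(f"{s} sigma -> p = {one_sided_p_value(s):.3g}")
```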

So if you think the Higgs mechanism could be wrong - that there was something shonky about the dial reading at CERN - your doubt doesn’t mean much until it is elevated to a level that is itself framed with a technical precision.

You think I have colleagues? I only studied there. Particle physics is not my daily work. And luckily so! I'm not bound and fixed to the standard model. — Prishon

You can’t both want to go public with your private theories and reject the rationale for that public approach.

Again, science is about making rash counterfactual claims in a completely public fashion - one framed with mathematical definiteness and so with as little wiggle room as possible. Then the evidence is also public. We can all read off the numbers for ourselves.

Obviously you keep mentioning your pet theory that speaks of the Cosmos as a 4D torus in a 5D space that spits out 3D rishons. It sounds a bit mathematical. But is one a Euclidean manifold, the next a material field, the final step a spray of particles? What kind of “not even wrong” confusion of words are you throwing together here - even if it is very easy to see the labelled diagram of three kinds of shapes you likely have “in mind”.

Sure, I can visualise a drawing of a flat manifold with a hovering torus and jets of “rishons” and “anti-rishons” spurting out from both sides of its Janus-arse. Your word picture is constructible. But that ain’t sufficient proof it is true, let alone that it has the necessary logical structure to be making any grand claim about the Universe. -

In the Beginning.....
Talk about primordiality to an analytic philosopher and you will get only blank stares. — Constance

How are you defining “primordial” exactly? Is it an abstract term with some concrete meaning, or just a ritualistic and impressive noise one might make - a group identifying chant? -

Why Was There A Big Bang
Which raises the question for both materialist physicists and non-materialist meta-physicists, "what caused that sudden symmetry break . . . that instant imbalance?" — Gnomon

The Universe was also expanding and cooling at an exponential rate while in that vanilla unbroken state - according to the simplest extrapolations. So the Universe just had to cross a threshold where the unified conditions finally broke in the usual phase transition way. Or not so usual if this breaking also released an inflationary spurt.

Admittedly, the latter is not an empirical scientific theory, but then neither is the imaginary Quantum Fluctuation scenario. So, why not give due consideration to both propositions? :cool: — Gnomon

But GR and QM are empirical. Phase transitions are empirical. Everything back to the Planck event horizon has at least an evidenced basis that constrains its speculations.

Even versions of teleology are empirical to the degree that quantum nonlocality and retrocausality are accepted as a thing - Jack Sarfatti offering an example of such a line of thought here.

So the reason to take one side seriously is that there is good evidence for its starting assumptions.

Which raises the question for both materialist physicists and non-materialist meta-physicists, "what caused that sudden symmetry break . . . that instant imbalance?" — Gnomon

An expanding and cooling space of fluctuations will at some point cross a threshold where the fluctuations cease to rule as correlated actions start to take over. The simple example is steam condensing into water. So order emerges as all the hot particles become regulated by some larger collective state. Lawfulness appears. It is almost as if a divine hand intervened … not. :razz:
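Steam condensing is a liquid-gas transition. A simpler standard toy for the same idea, collective order switching on as a control parameter crosses a threshold, is the mean-field Ising model, used here as an illustrative stand-in and not as a model of water. In units where the critical temperature is 1, the collective order parameter m must satisfy m = tanh(m / T). The sketch finds it by fixed-point iteration: above T = 1 the only solution is m = 0 (fluctuations rule), while below it a nonzero m appears (a correlated collective state takes over).

```python
import math

def mean_field_magnetisation(T: float, iterations: int = 5000) -> float:
    """Solve m = tanh(m / T) by fixed-point iteration, with critical temperature T_c = 1."""
    m = 1.0  # start from a fully ordered guess
    for _ in range(iterations):
        m = math.tanh(m / T)
    return m

for T in (2.0, 1.5, 0.9, 0.5, 0.1):
    print(f"T = {T:>3}: m = {mean_field_magnetisation(T):.4f}")
```

Iterating from m = 1 picks out the ordered branch when one exists; right at T = 1 convergence becomes very slow, which is why that point is left out of the loop.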

So in general, once you have a Planckian world with the ingredients of spacetime and energy density, the future is baked into that material package. The puzzle - the need for new physics - lies in how to account for that starting point.

My own point here is about taking the emergence of spacetime and energy density seriously and looking for some kind of naked symmetry breaking story which produces that initial division itself. Don’t just keep shoving that basic step further back in “time” to some other “place” that has “infinite” energy to expend. The next step for cosmology has to be the one that breaks down the very notion of dimensionality and gives it an emergent explanation.

This is why loop quantum gravity was a promising approach. Many different versions were at least suggesting that naked 1D fluctuations - an action without a space to give it direction - could still knit together a web of correlations. A quantum foam would find its own emergent order that cooled its chaos. There was evidence for the speculation in terms of running computer simulations of the maths being proposed.

Materialist approaches can also claim to know what the known unknowns are. The key to unlocking progress is figuring out the grand unified symmetry that describes the Planckscale initial conditions - the one that unifies the Standard Model’s hierarchy of known symmetry breakings.

So the materialists have a pretty well defined project ahead of them. The issue of what came “before” the Big Bang is interesting. But there are big gaps to fill in the story of what the initial symmetry state looked like first.

Scientists tend to prefer a physical scenario, such as the Quantum Fluctuation hypothesis (due to random Chance). And some Philosophers prefer to consider a non-random lawful scenario, such as Aristotle's First Cause/Prime Mover (a deity of "pure form"). Which acts via teleological Intention. — Gnomon

My own approach is influenced by Peircean systems logic. And that would argue that the initial conditions were a vagueness - a “realm” where the principle of non-contradiction had yet to even apply.

So law and fluctuation would have been indistinguishable to the degree that both were present. They would have “existed” as just the latent possibility of such a division.

And this is what the reciprocal structure of the Planck constants tells us. At the beginning of the Big Bang, fluctuations had the Planck temperature and so were as big as the spacetime world they were happening in. The buckling effect of the hot contents was equal to the confining impact of its would-be container. There would thus be both law and chance in balanced existence, but right on top of each other in sharing the same scale, and so not yet actually distinguishable as two divided aspects of the one larger reality.

The Big Bang is the birth of the division and growth in scale that increasingly locates chance to the local scale of being, and law to the global scale of being.

This is why the Universe seems so perfectly divided in its era of Newtonian classicality. There is a rule by global law. And that allows the writing of prescriptive equations into which any "chance" measurement can be inserted as a local variable.

Chance is so constrained that you can count it as entropy, or distinguishable microstates. The only real fluctuation is quantum, and that has been tamed by decoherence now. Just as law has been pushed so far towards its global limit that it appears to transcend our Universe (becoming written in the mind of God so far as many materialists are concerned :smirk: ), so too chance has been pushed to the edge of the cosmological picture - and thus led to pathologies of extrapolation such as the many worlds interpretation of quantum theory.

So yes. We can boil it down to metaphysical first principles like the dialectical opposition of law and chance. But then we want to avoid the chicken-and-egg debates about which came first, or which is the ground of the other. That is the kind of causal logic that sets up the two sides of the one story as disjunct monisms. Both good old-fashioned materialism and good old-fashioned theist woo (or idealism) are logically in error because of their shared reductionism.

It is written into the equations that describe spacetime and energy density in terms of the Planck constants that the logic is dialectical or reciprocal. Local chance and global law are themselves the two sides of reality that had to co-arise as a unity of opposites, a symmetry breaking that was self-organising. You have a triad: h, which scales pure fluctuation or energetic curvature; G, which scales any deviations from global flatness; and c, the scale factor for their ever-expanding and cooling trajectory towards their respective asymptotic limits.

This kind of logic ought to be very familiar for anyone who has studied ancient Greek metaphysics, or even Eastern approaches like Taoism and Pratītyasamutpāda. In more recent Western tradition, we have Hegel and Peirce.

But as it happens, even a central loops thinker like Rovelli can write an enthusiastic book about Anaximander as the first scientist ... and miss the essential metaphysical point ... of what Anaximander meant by ... apokrisis, or "separating out". -

Why Was There A Big Bang

I don't really have any objection to any of this — Seppo

If you're going to be so damn reasonable then I have to rescind that dogmatic comment. Bugger. :smile:

If the current projects/paradigms (string theory, supersymmetry, etc) were going to bear fruit, you would have hoped it would have happened by now... and that just hasn't happened, we've been spinning our wheels for decades. — Seppo

Yep.

I tend to be a bit more conservative in sticking to what is the widely held view of people with actual formal expertise on the subject, hence my comments here sticking to what I guess is sort of the party line on the topic RE quantum gravity and early Big Bang cosmology. — Seppo

Again, that's fair.

It is just that the party line too often feels like the party members papering over their own divisions and confusions so the general public/taxpayer funders don't catch on to what a mess they might be in.

In fact I parked particle physics and cosmology a decade ago to give them time to catch up with themselves and see if some actual new consensus might emerge. Loop and condensed matter approaches were encouraging at the time, but also starting to fall apart like strings did.

I think what did it for me was the loop guys suddenly promoting bounce cosmology as the kind of "theory" that a new multi-billion euro collider might just be able to test. Suddenly there was a new party line to be built around a ginormous funding application ... and let's not look too closely at its scientific merits.

But in case you are interested in where I am coming from, there was this really good blog post by the "Hammock Physicist", Johannes Koelman, in 2010. He was so on the money for me that it was no surprise he appeared to give up his academic ambitions and turn to making a living in industry soon after.

http://www.science20.com/hammock_physicist/physical_reality_less_more

I wrote up a precis at the time which I can simply paste here just in case it has value.

Preamble: Most modern metaphysics presumes the laws of reality, the structure of the cosmos, to be contingent. The laws are just whatever they are, with no real explanation other than some kind of anthropic accident. This is a view that drives Tegmark and his multiverse speculation and other expressions of modal realism.

But physics itself appears to be closing in on a tale of mathematical necessity, a tale of symmetries and symmetry breaking, which now in metaphysics is also inspiring new schools of thought like Ladyman and Ross’s ontic structural realism - http://www.amazon.com/Every-Thing-Must-Metaphysics-Naturalized/dp/0199573093

So this is a new “emergent Platonism”. It is not that there is a realm of infinite forms – a Platonic ideal for every possible particular entity from triangles to jam jars – but rather that there is a general mathematical inevitability to the structure of nature. Given a starting point of unlimited material freedoms, some kind of prime matter, apeiron or entropic gradient to shape, a world must then self-organise according to certain intelligible principles. And this is what fundamental physics has quietly been doing from Newtonian Mechanics right up to string theory and loop quantum gravity today – systematically following the path leading back to the deep mathematics, the ur-pattern shaping nature.

So this is a post about the unification of physics project. And this excellent blog post by Johannes Koelman gives the guts of the argument - http://www.science20.com/hammock_physicist/physical_reality_less_more

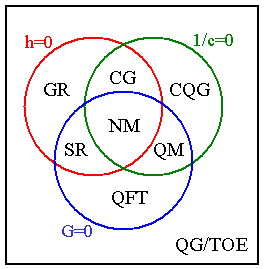

I will use it as a jumping off point, particularly this Venn diagram of how the theories form a three-cornered hierarchy of generalisation....

Planck constant triad: Where does it all start? With the idea of symmetry and symmetry-breaking. Or the birth of scale, the birth of difference within what was “the same as itself”. And so it is about a special kind of reciprocal dualism or asymmetric dichotomisation where the same becomes different by moving away from itself across local~global scale.

Now this is an unfamiliar idea to most even if it is very ancient – the basis of Anaximander’s cosmology, the very first true metaphysical system. But briefly, it is about inverse relationships. If you take a classical metaphysical dichotomy like flux~stasis, chance~necessity, discrete~continuous, etc, you can see how each pole defines itself as the reciprocal of the other. Stasis is the state where there is no flux, or the least possible flux. So stasis = 1/flux. That is, the larger you imagine flux to be, the smaller or more fractional the quantity of it you will find within stasis. And the converse applies. Flux = 1/stasis. The larger the amount of “no change” imagined, the less of that there is to be found in flux, and so the more “changeable” flux becomes. All regardless of any actual measurement or quantification.

So this is a special mathematical relationship that emerged in Ancient Greek metaphysics – the dialectic manoeuvre that drove its speculative twists and turns. And it has re-emerged centre stage in modern physics as symmetry-breaking and the various dualities or complementary relationships that are a feature of high-level theories.

Now on to those theories. As Koelman makes clear, it starts with Newtonian Mechanics (NM) where the local~global relationship, the primal dichotomisation of physical scale, was first properly quantified – but in an actually broken apart way.

NM presumed a fixed space and time backdrop and then defined the rules for quantifying local events within that absolute reference frame. That “broken apart” classical view of nature certainly worked at the human scale of observation, where we are so far from the bounding limits of the cosmos. But as science developed particle accelerators and radio telescopes, physics had to expand its view too. It had to develop post-classical theories that included an account of the global container as well as the local contents.

And in brief, that has turned out to mean bringing a triad of fundamental constants inside the picture we have of reality – the three Planck constants of h, G and c. (h = Planck’s constant that scales the quantum uncertainty of things, c = the “speed of light” or the constant that scales causal interaction, and G = Newton’s gravitational constant that scales mass/spacetime curvature.)
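To make the triad concrete: h, G and c are the standard ingredients of Planck units. The sketch below is a minimal illustration, assuming CODATA 2018 SI values and the usual convention of using the reduced constant ħ = h/2π; Boltzmann’s k_B is brought in only to express a temperature. The function and key names are illustrative.

```python
import math

# CODATA 2018 SI values
h = 6.62607015e-34        # Planck constant, J s (exact)
hbar = h / (2 * math.pi)  # reduced Planck constant
G = 6.67430e-11           # Newtonian constant of gravitation, m^3 kg^-1 s^-2
c = 299_792_458.0         # speed of light, m/s (exact)
k_B = 1.380649e-23        # Boltzmann constant, J/K (exact), Planck's "fourth" constant

def planck_units():
    """Combine hbar, G and c (plus k_B for temperature) into Planck units."""
    length = math.sqrt(hbar * G / c**3)              # metres
    time = math.sqrt(hbar * G / c**5)                # seconds
    mass = math.sqrt(hbar * c / G)                   # kilograms
    temperature = math.sqrt(hbar * c**5 / G) / k_B   # kelvin
    return {"length_m": length, "time_s": time, "mass_kg": mass, "temperature_K": temperature}

for name, value in planck_units().items():
    print(f"{name:>14}: {value:.3e}")
```

Note that the Planck length divided by the Planck time is exactly c: the constants fix a scale and a ratio between scales at the same time.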

There are only these three critical “numbers”, all somehow tied to the most fundamental level of symmetry-breaking. And with NM, we start with them all outside the physical theory as values that have to be empirically measured – which is an immensely tricky and approximate story in itself. Then the story of modern physics has been about pulling the constants inside the theories, first in ones, then in twos, and finally, hopefully, with the ultimate Theory of Everything (ToE), getting all three inside the picture of nature together at once. At which point, physical theory would become completely rational, drawing up the ladder on the need for empirical measurement as the mathematical structure would be able to account for itself entirely.

This is because the constants will have been defined in the same self-explanatory way as the old metaphysical dichotomies like flux~stasis. The constants represent the action that breaks a symmetry, but now in both its “directions”. Asymmetrically or reciprocally across actual hierarchically-organised scale. What this means should become clearer as we see how physics has developed since Newton.

Newtonian Mechanics: As Koelman points out, NM was based on reducing the empirical measurement of reality to four quantities – distance, duration, force and inertia. The brilliant idea at the heart of the scheme was to disconnect the local scale from the global scale by imagining the global scale to be a fixed, flat and eternal backdrop. Space and time were made a static symmetry – you could go backwards or forwards in space’s three global dimensions or time’s single global dimension and they “didn’t care”. It was all the same, and so symmetrical.

This was of course a view of nature directly inspired by Ancient Greek atomism and its notion of an a-causal void, where similarly, all causality, all action or symmetry-breaking, involved local parts. Only material/efficient cause was real, making formal and final cause a fiction projected onto the emergent regularity of atoms contingently at play. And given that space and time were defined in this absolute sense – a matter of brute and immutable fact – this legitimated the use of clocks and rulers as universal measuring yardsticks. You could create a standard unit of distance or duration because such a human construct was underpinned by the concreteness of space and time themselves. At any place, in any era, and at any scale, these clocks and rulers would continue to function reliably because they were measuring something unimpeachably real.

So Newton – as a metaphysical premise – created an unchanging backdrop against which the kind of change we are most interested in, the middle-scale realm of lumpy objects, could be crisply measured. Now he only had to model a localised symmetry-breaking – the one between mass and force, or between the material property intrinsic to a body and the web of interactions between such bodies. This led to his three laws of motion.

Newton's first law defines the inertia of bodies. Massive objects can have a "resistance to change" in their motion if that motion does not break a global symmetry. So a ball can roll forever in a straight line due to translational symmetry, and it can spin forever due to rotational symmetry. The combination of mass and velocity gives a body a momentum value. A force - as then defined by the second law, F=ma - is a change in momentum imposed from without. Like by getting smashed into by another object. With absolute space and time as a fixed reference frame, these "hidden" local quantities of force and inertia could be read off the world in terms of localised symmetry breakings - a curving path or a change in velocity.

Then Newton’s third law of action~reaction restored the broken symmetry by creating a global conservation principle, the conservation of momentum. Everything that got pushed, pushed back equally, leading to a net zero force at the global scale. Nothing happened to disturb the static stage upon which the mechanics played itself out.
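A minimal numerical sketch of the third law’s bookkeeping, using made-up masses and velocities: whatever equal-and-opposite impulse two bodies exchange, their total momentum is unchanged, whereas kinetic energy survives only in the perfectly elastic case. The helper names are illustrative.

```python
def collide(m1, v1, m2, v2, impulse):
    """Exchange an equal-and-opposite impulse between two bodies (Newton's third law).

    Body 1 receives +impulse and body 2 receives -impulse (kg m/s).
    Returns the two velocities after the interaction.
    """
    return v1 + impulse / m1, v2 - impulse / m2

def momentum(m1, v1, m2, v2):
    return m1 * v1 + m2 * v2

def kinetic_energy(m1, v1, m2, v2):
    return 0.5 * m1 * v1**2 + 0.5 * m2 * v2**2

m1, v1 = 2.0, 3.0    # kg, m/s
m2, v2 = 1.0, -1.0   # kg, m/s

# Impulse for a perfectly elastic head-on collision, then half of it
elastic = 2 * m1 * m2 * (v2 - v1) / (m1 + m2)
for impulse in (elastic, 0.5 * elastic):
    u1, u2 = collide(m1, v1, m2, v2, impulse)
    print(f"impulse {impulse:+.3f}: "
          f"momentum {momentum(m1, v1, m2, v2):.3f} -> {momentum(m1, u1, m2, u2):.3f}, "
          f"kinetic energy {kinetic_energy(m1, v1, m2, v2):.3f} -> {kinetic_energy(m1, u1, m2, u2):.3f}")
```

Half the elastic impulse happens to leave both bodies moving with the same velocity, a perfectly inelastic collision: momentum is still conserved, but some kinetic energy has gone into other forms.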

Newtonian gravity: It was a neat system. But of course it only dealt with objects banging into each other. And Newton had to make another big leap of the imagination to deal with gravity – a global force that “acted at a distance”. Descartes and his followers had tried to make sense of gravity’s pull as a jostling of spatial atoms. The sun and planets were swirled around in circular orbits because they were caught in the flow of super-fine corpuscles. But Newton boldly posited gravity as an intrinsic property of mass. And one that scaled inversely with the square of distance. The greater the separation, the more weakly the gravity of a body was felt.
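Written out, Newton’s law gives an acceleration g = GM/r² towards a mass M. A quick check, assuming rounded textbook values for Earth’s mass and mean radius, recovers the familiar surface value and shows that doubling the distance quarters the pull.

```python
G = 6.67430e-11        # Newtonian constant of gravitation, m^3 kg^-1 s^-2
M_earth = 5.972e24     # kg (rounded)
R_earth = 6.371e6      # mean radius, m (rounded)

def gravitational_acceleration(mass, r):
    """Newton's law of gravitation: acceleration g = G * M / r**2 at distance r."""
    return G * mass / r**2

g_surface = gravitational_acceleration(M_earth, R_earth)
g_double = gravitational_acceleration(M_earth, 2 * R_earth)
print(f"{g_surface:.2f} m/s^2 at the surface, {g_double:.2f} m/s^2 at twice the radius")
```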

So Newton again used an absolute backdrop as a way to localise the notion of an action – the material cause of change, the thing that breaks a physical symmetry. And as the other forces like electro-magnetism became recognised, the same general mechanics could be used with them as well. They could be quantified as vectors – a small push or pull in a direction acting to disturb the inertial motion of a body.

Post-Newton theory: The Newtonian model worked so well because it homed in on symmetry-breaking on the human scale - where we are 33 orders of magnitude distant from the smallness/hotness of the quantum scale and 28 orders of magnitude away from the bigness/coldness of the visible Universe, the relativistic scale. Action or change might be taking place at the extremes of scale, but the Universe would still look a flat and unchanging backdrop because either the change up at the relativistic limit was so large and slow that it was beyond our field of view, or equally, down at the quantum limit, so small and rapid that it became an unbroken-looking blur.
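As a rough check on those figures, here is a sketch that assumes a “human scale” of about one centimetre, takes the Planck length as the quantum limit, and uses roughly 4.4 × 10^26 m for the radius of the observable Universe. All three reference sizes are assumptions; a different choice shifts both counts.

```python
import math

PLANCK_LENGTH_M = 1.616255e-35   # m
OBSERVABLE_RADIUS_M = 4.4e26     # m, approximate comoving radius of the observable Universe
HUMAN_SCALE_M = 1e-2             # m, an assumed "human scale" of about 1 cm

down = math.log10(HUMAN_SCALE_M / PLANCK_LENGTH_M)
up = math.log10(OBSERVABLE_RADIUS_M / HUMAN_SCALE_M)
print(f"{down:.1f} orders down to the Planck length, {up:.1f} up to the cosmic horizon")
```

This gives roughly 33 orders downwards and between 28 and 29 upwards, broadly in line with the figures above.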

But eventually it was realised that the Universe was dynamical over all its scales. For one thing, it was born in a Big Bang and is spreading/cooling towards an entropic Heat Death. So the extremes of scale had to be brought inside the general model and made subject to the same laws ruling change.

In prescient fashion, it was Planck in 1899 who saw that all mechanics could be boiled down to three constants – three dimensions quantifying the actions that break even the most global symmetries. Well, Planck thought it would be four, as he included Boltzmann’s constant, k. But by the 1930s, Matvei Bronstein had clarified that physics was looking for the magic trio of cGh. Indeed, as a sign that physics has understood the deep logic of its own progress only in retrospect, the much more famous names of Gamow, Ivanenko and Landau first cooked up this little insight as a joke, allegedly to impress a girl. Then some 50 years later, Okun, another Russian, rediscovered it and finally popularised the way cGh anchors all physical theory as “Okun’s cube”. Here are a couple of blog posts on this history.

http://backreaction.blogspot.com/2011/05/cube-of-physical-theories.html

http://blogs.scientificamerican.com/guest-blog/2011/07/14/why-is-quantum-gravity-so-hard-and-why-did-stalin-execute-the-man-who-pioneered-the-subje

So OK, it was not so obvious at the time. But it does explain why physics ended up organised like Koelman’s Venn diagram, a systematic attempt to turn three empirical and apparently arbitrary measurements into three reciprocally self-defining and so mathematically necessary global symmetry-breakings.

Special Relativity: Ticking through these quickly, first came Einstein’s Special Relativity (SR) which incorporated c into mechanics as a general yo-yo factor.

First space and time (representing stasis and flux in terms of locatedness and change) were glued together into the one thing, a global scale symmetry balance that Koelman dubs “spacetime-extent”. Then c scales any breaking of this balance: extent multiplied by c gives distance^2, and extent divided by c gives duration^2. So in this way spacetime is changed from being a static backdrop to a dynamic dimensionality where the baseline of “no action” is effectively redefined as the expanding sphere of an event horizon. Events are physically separated by a distance and a duration in the way that the sun may have vanished four minutes ago, but it will take another four minutes before we can know about it. So to break spacetime in such a way as only to see “a distance”, you have to multiply by c to allow “enough time” for the distance to “happen”. And conversely, to recover “a duration”, locate it within a purely temporal dimension, you have to divide by c to remove the space over which it has spread.
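The two products can be checked by simple dimensional bookkeeping, assuming (as the products themselves imply) that “spacetime-extent” carries units of distance × duration. The sketch tracks units as exponent pairs of metres and seconds, a hypothetical helper rather than any unit library, and adds the Sun–Earth light delay behind the “four minutes plus four minutes” example.

```python
# Units as (metre exponent, second exponent): a hypothetical minimal scheme
def mul(a, b):
    return (a[0] + b[0], a[1] + b[1])

def div(a, b):
    return (a[0] - b[0], a[1] - b[1])

EXTENT = (1, 1)    # assumed: spacetime-extent ~ metre * second
SPEED = (1, -1)    # units of c: metre / second

print("extent * c ->", mul(EXTENT, SPEED))   # (2, 0) means m^2, a squared distance
print("extent / c ->", div(EXTENT, SPEED))   # (0, 2) means s^2, a squared duration

AU = 1.495978707e11   # m, astronomical unit (mean Earth-Sun distance)
c = 299_792_458.0     # m/s
delay_s = AU / c
print(f"sunlight delay: {delay_s:.0f} s, about {delay_s / 60:.1f} minutes")
```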

A simpler way to understand this is considering spacetime from the points of view of a massive particle and a light ray. Even if it is stationary, not moving in space, the particle is now moving in time. It is “travelling” into a future where the distance to any event horizon is getting constantly c-times more expanded. And conversely, the light ray may be moving at c through space, but now – being already “at” the event horizon – it is “stationary” in respect of the global dimension of time. So there are two ways to be standing still and two ways to be moving. And which way round you read off the symmetry breaking is scaled by c or 1/c.
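The “two ways to be standing still” can be put in numbers with the special-relativistic proper time τ = t·√(1 − v²/c²): a clock at rest ticks through the full coordinate time, while the proper time along a light ray is zero. A minimal sketch for constant speeds only (the function name is illustrative):

```python
import math

c = 299_792_458.0  # speed of light, m/s

def proper_time(coordinate_time, speed):
    """Time elapsed on a clock moving at constant `speed` (m/s) while
    `coordinate_time` seconds pass in the rest frame: tau = t * sqrt(1 - v^2/c^2)."""
    if not 0 <= speed <= c:
        raise ValueError("speed must lie between 0 and c")
    return coordinate_time * math.sqrt(1 - (speed / c) ** 2)

for fraction in (0.0, 0.6, 0.99, 1.0):
    tau = proper_time(1.0, fraction * c)
    print(f"v = {fraction:.2f}c -> {tau:.3f} s of proper time per second")
```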

Thus globally, spacetime was scaled by a reciprocal action. Then locally, the same was done to the Newtonian version of the action. Energy and momentum became glued together as a “general stuff” – spacetime-content – and this symmetry again broken by a yo-yo inverse relation. By E=mc^2, mass could be converted into a “times-c^2” amount of energy, while energy could be converted into a “times-1/c^2” amount of mass. The material contents of the Universe could be viewed either in terms of an energy density located to a spatial point, or smeared across a temporal sphere. The two were dichotomous ways of looking at the same thing. So “where you were” as an observer within the system had to be specified by an inertial reference frame if you wanted your Newtonian rulers and clocks to read off the same distances and durations. Nothing was absolute, but in a dimensionless way, you could still distinguish c from 1/c as the generalised limits on reality.
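A minimal numerical illustration of the two directions of the conversion (the function names are illustrative): multiplying by c² turns a small mass into a very large energy, and dividing by c² turns an everyday energy into a minute mass.

```python
c = 299_792_458.0  # speed of light, m/s

def rest_energy(mass_kg):
    """E = m c^2, in joules."""
    return mass_kg * c**2

def mass_equivalent(energy_j):
    """m = E / c^2, in kilograms."""
    return energy_j / c**2

print(f"1 g of mass  ~ {rest_energy(0.001):.3e} J")
print(f"1 J of energy ~ {mass_equivalent(1.0):.3e} kg")
```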

General Relativity: SR incorporated one of the three Planck constants, c, but left out h and G. So the quantum uncertainty and gravitational curvature of the Universe remained “measurements from outside” the system being measured. This didn’t matter outside the middleground scale of classical objects – lumpy masses bumping about in a cool/large void. But it did matter if SR was going to continue to measure the world accurately as it approached these two other limits of nature.

Einstein of course took the next step of extending SR by incorporating G alongside c to give General Relativity (GR). Spacetime was now made bendy, defined in local fashion by its energy density. Instead of distance and duration being flat and even dimensions – Euclidean as presumed by Newton – they were free to adjust their geometry according to the density differences in their material contents. Or being a bit more technical, spacetime and its contents became unified as flipsides of the same thing – the Einstein-Hilbert action. The reciprocal nature of the deal was again made explicit in the maths, spacetime being scaled by G/c^4 while the mass/energy contents were scaled by c^4/G.
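For reference, here are the standard textbook forms in which those two factors appear: the field equations and, in one common convention, the Einstein–Hilbert action (the cosmological-constant term is omitted). Numerically, c^4/G ≈ 1.2 × 10^44 N, which is why appreciable curvature takes enormous energy densities.

$$
G_{\mu\nu} = \frac{8\pi G}{c^{4}}\,T_{\mu\nu},
\qquad
S_{\mathrm{EH}} = \frac{c^{4}}{16\pi G}\int R\,\sqrt{-g}\;\mathrm{d}^{4}x
$$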

Quantum Mechanics and Quantum Field Theory: The other big revolution going on was Quantum Mechanics (QM). It had been discovered that reality is scaled by an uncertainty relation – a yo-yo deal centred on h as its “physical” value. In QM they called this complementarity, making the connection with Eastern metaphysical thinking like Yin-Yang (which of course is another version of the same thing the Ancient Greeks were talking about with dichotomies). But anyway, what QM said was reality is fundamentally vague or indeterminate.

Measurements need to be made from a fixed point of view to have definiteness. And when you get down to nature’s essential symmetries (and the asymmetries that break them in local~global scale fashion) then trying to pin down one end to a crisp value sends the other off to unknowable indefiniteness. So ask about a particle or event’s position and its momentum goes off the other end of the scale. Zero in on it in terms of time, and its energy could have any value.

By including this yo-yo measurement issue in the physics, QM made explicit the way classical mechanics had been coarse-graining reality. When things are cold and large – far from h as a limit – then the Newtonian picture works well as reality is near as dammit determinate in its behaviour. But approach the complementary limits of the hot or the small, then the classical crispness breaks down in a well modelled exponential fashion.
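The coarse-graining point can be made numerical with the position–momentum relation Δx·Δp ≥ ħ/2. The sketch below uses illustrative confinement scales, chosen as assumptions: it compares the smallest velocity spread allowed for an electron held to about an ångström with that for a one-gram object located to a micron.

```python
hbar = 1.054571817e-34  # reduced Planck constant, J s

def min_velocity_spread(mass_kg, position_spread_m):
    """Smallest velocity uncertainty allowed by dx * dp >= hbar / 2."""
    dp = hbar / (2 * position_spread_m)
    return dp / mass_kg

electron = min_velocity_spread(9.1093837e-31, 1e-10)  # electron confined to ~1 angstrom
pebble = min_velocity_spread(1e-3, 1e-6)               # 1 g object located to 1 micron
print(f"electron: {electron:.2e} m/s, 1 g pebble: {pebble:.2e} m/s")
```

The electron’s spread is hundreds of kilometres per second; the pebble’s is some twenty orders of magnitude below anything measurable, which is why the Newtonian picture looks crisp at our scale.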

So QM pulled h inside the mothership of Platonic physics, but like SR, it left the two other constants dangling – c and G. This was fixed by Quantum Field Theory (QFT) which repeated GR’s trick, this time combining h and c.

QFT is a relativised version of QM and it did this by treating particles as excitations in a field. So there was the jump from a Newtonian strict location of a symmetry and its breaking (a particle, its properties, the forces that might impinge on it) to a field view where everything becomes global and contextual. The key calculational breakthrough was Feynman’s concept of path integrals, or sum-over-histories. Uncertainty could be quantified as a sum over all the paths that a particle might have taken quantum mechanically, with the path “actually” taken emerging as the one of stationary (classically, least) action, where the contributions of neighbouring paths reinforce rather than cancel. So all the energy values the particle might have had (given the scale of the action) could be averaged across. And relativistic effects, like what speed does to mass and time, could be included as contributions to the final result as well, giving a picture of an action zeroed to some definite reference frame.
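A full path integral is beyond a short example, but the classical limit it rests on can be shown directly. This sketch computes the discretised free-particle action S = ∫ ½mv² dt along paths with fixed endpoints that bow out by an amplitude a; the path family and parameter names are illustrative assumptions. The straight path, a = 0, is the one of stationary (here minimal) action, and it is near such paths that the phases e^{iS/ħ} add up rather than cancel.

```python
import math

def action(a, m=1.0, T=1.0, steps=2000):
    """Discretised action S = sum (m/2) v^2 dt for a free particle on the path
    x(t) = t/T + a*sin(pi t/T), which always runs from x=0 at t=0 to x=1 at t=T."""
    dt = T / steps
    S = 0.0
    for i in range(steps):
        t0, t1 = i * dt, (i + 1) * dt
        x0 = t0 / T + a * math.sin(math.pi * t0 / T)
        x1 = t1 / T + a * math.sin(math.pi * t1 / T)
        v = (x1 - x0) / dt                 # finite-difference velocity on this step
        S += 0.5 * m * v * v * dt
    return S

amplitudes = [k / 10 for k in range(-5, 6)]
best = min(amplitudes, key=action)
print("action-minimising amplitude:", best)
```

For this family the action works out analytically to S(a) = m/(2T) + m a²π²/(4T), so every bowed path costs more action than the straight one.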

Cartan Gravity: SR, GR, QM and QFT are the familiar fab four. But far less well known is that there is a third leg to this story of the grand consolidation of the theories. As Koelman points out, logic demands there was also Cartan Gravity – an effort to match SR/c and QM/h with a generalisation of Newtonian mechanics that dealt solely with G. And then following that, even a Cartan Quantum Gravity that unified G and h.

Now the Cartan notion of space is based on torsion (as opposed to, say, curvature). But I confess I am not clear why it is not a big deal like quantum theory and relativity. Perhaps combining G and NM has little technological value (QM especially has been the basis of valuable everyday application). Certainly Newtonian gravity deals with the classical scale of interaction perfectly adequately given that massive gravitational fields are not the kind of thing we can bring to bear on nature in the same way we can with c or h scaled phenomena.

Anyway it is said Cartan theory may yet come into its own as the basis for loop quantum gravity or other ultimate theories where forces have to be modelled as twists in space. And certainly it is a necessary third leg of the theory unification process. It is logical that this way of climbing the same mountain also is possible.

Theory of Everything: So that then just leaves one final step – a ToE or Quantum Gravity (QG) theory that hoovers up all three Planck constants, cGh, into the mothership of reciprocal dimensional maths.

As Koelman says, this seems to require a further extension of the sum-over-histories approach where QFT is enlarged to include G or spacetime curvature. As well as averaging across the uncertain energy levels of a particle and any global relativistic contributions, the calculation would have to average across any local uncertainty in the spacetime the particle is meant to be travelling through (or the excitation and the field it is “happening” in). QFT can in fact give approximate answers of that kind by imposing a cut-off on local gravitational contributions, but a properly elegant way of doing this – one which shows how the three dichotomies can be both internalised and also connected to each other as some kind of fundamental geometric relationship – is still a work in progress.

-

Why Was There A Big Bang

Planck probably thought that by calculating the smallest possible measurable time or length, that fades into asymptosis or ellipsis, would put an end to such "before the beginning" nonsense. — Gnomon

Planck was actually just thinking about the problem of why the heat radiated by an object didn’t add up to infinity, as a simple extrapolation of known physics said it should. He introduced the notion of a quantum cut-off point. That ushered in physics’ next big paradigmatic revolution.
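The cut-off can be seen by comparing Planck’s law with the classical Rayleigh–Jeans formula for black-body radiance, as the contrast is usually drawn in textbooks. A sketch assuming a 5000 K body (an arbitrary choice): the two agree at low frequencies, but the classical curve keeps climbing as ν², so its integral over all frequencies diverges, while Planck’s exponential factor shuts the spectrum down.

```python
import math

h = 6.62607015e-34    # Planck constant, J s
c = 299_792_458.0     # speed of light, m/s
k_B = 1.380649e-23    # Boltzmann constant, J/K

def planck(nu, T):
    """Planck's law: spectral radiance B_nu(T) in W sr^-1 m^-2 Hz^-1."""
    return (2 * h * nu**3 / c**2) / math.expm1(h * nu / (k_B * T))

def rayleigh_jeans(nu, T):
    """Classical Rayleigh-Jeans law, which grows as nu^2 without bound."""
    return 2 * nu**2 * k_B * T / c**2

T = 5000.0  # K
for nu in (1e11, 1e14, 1e15, 1e16):
    print(f"{nu:.0e} Hz: Planck {planck(nu, T):.3e}, Rayleigh-Jeans {rayleigh_jeans(nu, T):.3e}")
```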

So that should tell us something about the need to avoid infinities if we are to have the bounded finitude we actually observe as our natural habitat. Existence is a limitation on too much everythingness. Creation is not about getting something out of nothing but about developing constraints on unbounded fluctuations.

I believe Anaximander said something along those lines 2500 years ago. Indeed, all pre-modern cosmologies seem to be the same creation story of order arising out of chaos.

Put together Planck’s h, Newton’s G and Einstein’s c to make Okun’s cube of physical theories and you have a model of a mechanism where the positive curvature of quantum fluctuations is balanced by the negative curvature of gravity at a rate that is scaled by the speed of light. We have a set of fundamental constants that are cast in a dialectical or reciprocal relation with each other.

So we discover there is this broken symmetry at the root of things. It is not unreasonable to wind that back to the symmetry state that marks its beginning.

Metaphysics has always reasoned this way. But modern thought - on both sides of the realist-idealist divide - has gotten into the habit of simple reductionism and its cause-and-effect monism. Creations can’t be self-organising, say the reductionists. They demand a creator that stands outside the creation.

It would be nice, if for a change, we could just freely speculate on such pre-columbian "what's out there over the horizon?" scenarios, without coming to blows over which party is the biggest idiot : the short-cut-to-India optimists, or the sail-over-the-edge-pessimists. — Gnomon

That is true. But also, there is ample science to constrain the free speculation. Yet cosmology is like quantum theory in that scientists themselves go crazy with their speculation, as they have invested too little effort in questioning the reductionist habits of thought that have generally made science so successful.

That makes it an interesting situation - like consciousness studies too - where those who are very well informed about the material facts are also blinded by the paradigm within which those facts were developed. Both the well informed and the uninformed can make dogmatic assertions about the nature of the Cosmos and the nature of the Mind that are ill thought out for their different reasons. -

Why Was There A Big Bang

The prevailing view (the dogma) is that space can't be embedded in a higher dimensiolal one. But thats questionable, although the dogma forbids asking this. But 3d space can be immersed in 4d space. Causing expansion to be an illusion. — Prishon

You have clashing brane theories that make use of string theory’s higher dimensionality. So this is another example of reductionist desperation in my eyes. But mathematical physics is certainly not dogmatic about these kinds of things.

The Ekpyrotic Model of the Universe proposes that our current universe arose from a collision of two three-dimensional worlds (branes) in a space with an extra (fourth) spatial dimension.

But there are good reasons for just 3D, like the fact that gravity and other forces aren’t leaking away into this embedding space. They weaken with the square of distance, not the cube.
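The square-versus-cube point follows from a simple spreading argument (the idea behind Gauss’s law). If a force’s influence spreads evenly over a sphere around its source, its strength falls off as one over that sphere’s surface area, which grows as r^(n−1) in n spatial dimensions. A sketch assuming the force spreads freely into every spatial dimension; the function names are illustrative.

```python
import math

def sphere_surface_area(n_dims, r):
    """Surface 'area' of a sphere of radius r in n-dimensional space:
    2 * pi^(n/2) / Gamma(n/2) * r^(n-1)."""
    return 2 * math.pi ** (n_dims / 2) / math.gamma(n_dims / 2) * r ** (n_dims - 1)

def field_strength(n_dims, r, source=1.0):
    """A conserved flux spread evenly over that sphere falls off as 1/r^(n-1)."""
    return source / sphere_surface_area(n_dims, r)

for n in (3, 4):
    ratio = field_strength(n, 1.0) / field_strength(n, 2.0)
    print(f"{n} spatial dimensions: doubling the distance weakens the field {ratio:.0f}x")
```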

And more to the point, cosmology noticed that distant galaxies are increasingly redshifted the further away they are. So unless the Earth is the still centre of an exploding creation, you have to accept the conventional Big Bang cosmology. -
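The observation referred to here is usually summarised as the Hubble–Lemaître law, v = H₀d, with redshift z ≈ v/c for nearby galaxies. A sketch assuming a round H₀ of 70 km/s per megaparsec (measured values cluster roughly between 67 and 73); the function names are illustrative.

```python
C_KM_S = 299_792.458   # speed of light, km/s
H0 = 70.0              # km/s per megaparsec, an assumed round value

def recession_velocity(distance_mpc):
    """Hubble-Lemaitre law v = H0 * d, valid for relatively nearby galaxies."""
    return H0 * distance_mpc

def low_z_redshift(distance_mpc):
    """For v much smaller than c, redshift z is approximately v / c."""
    return recession_velocity(distance_mpc) / C_KM_S

for d in (10, 100, 200):
    print(f"{d:>4} Mpc: v = {recession_velocity(d):7.0f} km/s, z = {low_z_redshift(d):.4f}")
```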

Why Was There A Big Bang

No, it's flat and Euclidean. — frank

You might be thinking just of space and not spacetime. Inertial expansion is flat but accelerating expansion is curved.

As I said, the prevailing view now is that there was no singularity of any kind. Big bangs happen from time to time in a greater universe that could be without limits. — frank

But even Linde’s eternal inflation is a story about a fractally branching multiverse, so it all still branches from one initial starting point. There is a singularity in the need to explain why there was the first Planckian shoot that became the vast tree.

But if I had to pick a pre-Bang cosmology, an inflating multiverse seems the best candidate. It at least provides an anthropic reason why we live in a branch that happens to have the “right” randomly chosen physical constants - I mean all the constants besides the three key Planck ones the multiverse must also presume. :grin: -

Why Was There A Big Bang

But I do object to the suggestion that there's anything "dogmatic" about pointing out that the parts of the BBT which are widely-accepted and observationally-corroborated don't include any beginning or origin of the universe. — Seppo

The dogmatism relates to the assumption of a beginning/origin having to exist at some smaller scale/hotter temperature beyond the Planckscale event horizon of the Big Bang.

Spacetime and energy density are so yoked together that going "smaller" and getting "hotter" really doesn't make sense if curvature reaches its maximum at that scale. We arrive at a "just before" that is all blackholes and wormholes – a quantum foam at best.

That might be still a "something" we can model as a pre-Bang state. It just wouldn't be any kind of time, place, or state of materiality, as we claim to know it from this side of the cosmological event horizon.

So the Big Bang would in that view be the start of the Universe in the sense that the term has any useful meaning.

After all, I'm sure you would agree with the conventional reply when folk ask what is the Universe expanding into. Very quickly you will say it is just the expansion of the metric itself. The Universe is not embedded in some larger space.

But why doesn't the same logic apply to the origin of the Universe? Why does it have to be developing out of something? Why can't the development itself be what produces a developed something?

My only purpose is to counter the familiar and misleading talking point (found mostly in popular-level content on cosmology/BBT) that this is a generally accepted or observationally well-established part of the standard cosmological model accepted by most cosmologists, or that the BBT is primarily a theory of the origin of the universe (rather than of its development). It just isn't. — Seppo

I'm trying to highlight the problem with what you say is the generally accepted metaphysics.

It could be the case that the Universe didn't start at the "point in time" that is its Planckscale event horizon. It could be true that there is a lengthy pre-bang story along the lines of Linde’s eternal inflation or Big Bounce cosmology. It may well be that QG is a theory that sees beyond the Planckscale and finds some kind of spacetime/energy density story that pushes the origin of that spacetime/energy density story into realms that are simply just smaller and hotter.

But these ideas are speculative, simplistic, and don't even tackle the essential questions about why there are these things of spacetime and energy density. Again, we pull folk up who ask what space our Universe is growing into, yet seem untroubled by bouncing cosmologies or branching inflation fields that presume a familiar notion of passing time as the place in which our Big Bang universe appears as just another material development.

Nothing useful is added by these kinds of linear extrapolations. The question is why spacetime and energy density are even a thing that came into being. Telling people not to bother so much with the Big Bang, and to wait for the full story of the "universe" beyond the Planckscale, is buying into the bad metaphysics that simply makes good careers for mathematical physicists looking to stay relevant at a time when there is little actual progress to report.

My inclination is instead to turn things around, take the Big Bang seriously as its own origin point, and see how that fits what we already know in terms of GR, QFT, the Planck triad of constants, and general symmetry breaking and condensed matter physics principles.

The Planckscale describes the first moment when spacetime as the backdrop, and energy density as its contents, could be told apart. It's a tale of co-dependent arising. And that is already the story the Big Bang theory tells in its talk of a beginning that was a relativistic realm of vanilla GUT force fluctuations. In what sense did either distinct particles or a background vacuum exist when the world was still so hot that the void was completely filled by its own wild fluctuations? Big Bang theory then says that was the initial lack of proper separation that became fairly quickly an expanding~cooling process of increasing separateness.

You don't have to like the scenario. But again, the point is that we agree not much progress has been made these past 20 years or so. Conventional thinking might be that we just need to be able to punch through the Planckscale event horizon to discover what further cosmological structure lies beyond its veil. I say the event horizon most likely simply marks an actual limit on counterfactual being. And that is at least an alternative worth being discussed - as has indeed happened with some of the loop and condensed matter models. -

Why Was There A Big Bang

They've actually looked to see if the universe shows overall curvature, and it doesn't. — frank

But they looked and found it has dark energy and so an "internal pressure" that means spacetime isn't contracting, nor even coasting to a gravitational halt, but is undergoing open-ended acceleration.

So there are three generalised curvatures the Universe could have had: closed and hyperspherical, flat and Euclidean, or open and hyperbolic. The surprise is that it looks to be hyperbolic, and so the event horizon of the Big Bang can be matched by its inverse, the event horizon of a de Sitter space Heat Death.
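In the standard FLRW models these three options correspond to the sign of the spatial curvature constant k, which is fixed by whether the total density parameter Ω sits above, at, or below 1 (via the Friedmann relation Ω − 1 = kc²/(a²H²)). A minimal Python sketch of that mapping, assuming the function name and the tolerance `tol` (a stand-in for observational error bars) as hypothetical illustration choices:

```python
def classify_curvature(omega_total: float, tol: float = 1e-3) -> tuple[int, str]:
    """Map the total density parameter Omega to the FLRW spatial curvature sign k.

    Hypothetical helper: values within `tol` of 1 are treated as flat,
    standing in for measurement uncertainty.
    """
    if omega_total < 0:
        raise ValueError("density parameter must be non-negative")
    if abs(omega_total - 1.0) <= tol:
        return 0, "flat, Euclidean"          # Omega = 1  -> k = 0
    if omega_total > 1.0:
        return +1, "closed, hyperspherical"  # Omega > 1  -> k = +1
    return -1, "open, hyperbolic"            # Omega < 1  -> k = -1


if __name__ == "__main__":
    for omega in (1.2, 1.0005, 0.3):
        k, label = classify_curvature(omega)
        print(f"Omega = {omega}: k = {k:+d} ({label})")
```

This classifies the spatial geometry only; the positive curvature of a dark-energy-dominated de Sitter future is a spacetime notion and is not captured by k.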

The end of the Universe is also a problem. It was hard to believe it could actually be so finely balanced between its gravitational contraction and thermal expansion that it might indeed just coast towards a stop over infinite time - another singularity! And if it had too much matter, and so too much gravity, then it is almost as improbable that it would have lasted the 14 billion years until now without collapsing.

So a faint positive curvature allows the Universe to stay open and yet come to an eventual Heat Death halt in terms of its cosmological event horizon – the size of the region that counts as the observable Universe.

The source of that dark energy or cosmological constant still has to be explained. It would be nice if the simple theory - that it is a curvature contribution from the quantum fluctuations of the vacuum itself - pans out. That would make the force something internal to the fabric of spacetime itself - all part of the Big Bang deal.

That's the prevailing view in physics right now. No singularity. — frank

Well, singularity is a technical term in maths for some kind of radical break or discontinuity in an otherwise smooth function. So it can take many shapes.

Folk who were rewinding the GR-regulated evolution of the Cosmos past the Planckscale were aiming at a singularity shaped like a zero-D point. They wanted to shrink things to where spacetime was infinitely small. But QM said that meant it also had to be infinitely hot - as such complete certainty about location was a matchingly complete uncertainty about momentum.
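The trade-off being described is Heisenberg's position-momentum relation. As a rough sketch, assuming the confined contents are ultra-relativistic (E ≈ pc) and reading the momentum spread as a characteristic thermal energy k_B T (an order-of-magnitude identification, not a derivation of a temperature):

```latex
\begin{aligned}
\Delta x\,\Delta p \;&\ge\; \frac{\hbar}{2}
  \;\Longrightarrow\; \Delta p \;\ge\; \frac{\hbar}{2\,\Delta x} \;\to\; \infty
  \quad \text{as } \Delta x \to 0 \\
E \approx p c \;&\Longrightarrow\; k_B T \sim E \sim \frac{\hbar c}{2\,\Delta x}
\end{aligned}
```

So shrinking the spatial extent towards zero drives the characteristic energy, and hence the temperature, towards infinity.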

But actually tie the two curves together by inserting all three Planck constants into your cosmological equations - as QG would have to do - and you get instead (hopefully) a smooth transition in terms of a singularity-masking event horizon. -

Why Was There A Big Bang

we need a quantum theory of gravity to describe what is happening... which we don't have. So we can't rewind any further, as we have no description of how physics works in those extreme conditions. — Seppo

Do you see how you just confused the expectation of being able to rewind (in linear fashion) beyond the Planckscale event horizon with the acceptance that it is the actual limit of such rewinding?

You have been very dogmatic about the Big Bang not counting as the actual beginning of the Universe, but that is just a failure to rid your metaphysics of this assumption about linearity - the ability to extend any straight line to infinity.

What QM tells us about GR is that its straight lines become eventually so completely curved that they turn into little circles. You wind up with a description of a spacetime foam that is populated by blackholes and wormholes. A mess of naked and disconnected fluctuations, in other words.

So the Universe - as we understand it to be, via our models - is this classical realm dominated by its Euclidean flatness. We then look a little closer and have to come up with further models like GR and QFT that introduce some curvature and uncertainty. Then we track those speed wobbles in our initial Newtonian determinism and find eventually it all goes completely out of control. All the straight lines turn into curves so tight they are a foam of circles. All the uncertainty becomes so great that all that exists is naked fluctuation with no grounding context.

QG might be regarded as a project that restores linearity to the physics in a way that will let us punch right on through the Planckscale event horizon and see what lies "beyond" ... as some extrapolatable continuation of a spacetime extent and its energy density content. But as with the Hartle–Hawking imaginary time proposal, everything we know and love as the metaphysically taken-for-granted might just curve into each other and thus vanish up its own collective arse. :razz:

So the problem lies with projecting linear expectations onto GR and QM, which are already themselves frameworks for dealing with the gathering curvature and uncertainty of reality, and then expecting QG to be the triumphant return of Newtonian straight-line metaphysics.

GR works because it uncouples the connection between spacetime extent and local energy density. So in a Universe that is generally large, cold and empty - which means what, about at least 10^-10 seconds old and down to barely 10^15 degrees? - the two halves of the one reciprocal deal can seem clearly separated. You have a backdrop of flattish spacetime in which reasonably well located events are taking place. The electroweak symmetry has cracked. The Higgs field is on. Particles now have a mass that means they can go much slower than light, even if they are still a long way from being at any kind of rest.
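The time and temperature figures can be sanity-checked against the textbook radiation-era rule of thumb T ≈ 10¹⁰ K × (t / 1 s)^(−1/2). This is an order-of-magnitude sketch only: it ignores the changing number of relativistic particle species, which shifts the prefactor by a factor of a few, and the function name is hypothetical:

```python
import math

# Rough radiation-era normalisation: T is about 1e10 K when the Universe is 1 s old.
# Order of magnitude only; the true prefactor depends on how many
# relativistic particle species are present at the time.
T_AT_ONE_SECOND_K = 1.0e10


def radiation_era_temperature(t_seconds: float) -> float:
    """Approximate temperature (K) at cosmic time t, assuming T scales as t**-0.5."""
    if t_seconds <= 0:
        raise ValueError("cosmic time must be positive")
    return T_AT_ONE_SECOND_K / math.sqrt(t_seconds)


if __name__ == "__main__":
    for t in (1e-10, 1e-6, 1.0):
        print(f"t = {t:.0e} s  ->  T ~ {radiation_era_temperature(t):.1e} K")
```

At t = 10⁻¹⁰ s this gives roughly 10¹⁵ K, the same ballpark as the post's figures for the era when the electroweak symmetry has cracked.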

But as we wind the clock back towards the Big Bang, we see all that familiar asymmetry being swallowed up into the anonymity of an increasingly more general set of symmetries, until we arrive at a vanilla GUT force and a matchingly vanilla notion of a relativistic quark-gluon soup, just before everything merges into the one cosmic vagueness of an event horizon, beyond which lurks only our notions about a quantum foam of fluctuations that could also be described in GR terms as a host of tiniest possible circularities - a hot mess of spatial blackholes and temporal wormholes.

So quit holding out hope that a QG theory will restore linearity and so discover a time and a place (and a higher heat or energy density) that lies over the well-demarcated Planckscale event horizon. That will enable you to be less dogmatic in your proclamations about the Big Bang not marking the beginnings of metaphysical linearity as we know and love it: the Universe that is generally large, cold and just about empty. :smile: -

Why Was There A Big Bang

What bothers me is why did cosmologists stop the extrapolation at, to quote Wikipedia, "...hot dense state..." They could've simply drawn the trajectories of all the galaxies back to a point just as William Lane Craig and I thought. It's not that there was a law against it, right? — TheMadFool

But it makes a big difference whether you are imagining extrapolating a line - a linear relation - or instead an asymptotic curve.

Do the two parts of the cosmic equation - spacetime extent and energy density content - go to infinite value because they trace back all the way through the Planckscale event horizon and meet at a point, the confounding singularity, at some further distance beyond?

Or are the two parts of the equation yoked to each other in reciprocal fashion - as the Planck triad of c, G and h suggest - so that instead they converge asymptotically to create that event horizon that marks the beginning of both spacetime extent and its entropic spreading.
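To make the Planck triad concrete: combining c, G and ħ (the reduced constant h/2π, the conventional choice) yields the Planck length, time and mass. Boltzmann's constant k_B is not part of the triad and is used here only to express the Planck energy as a temperature. A minimal Python sketch using CODATA 2018 values; the function name is illustrative:

```python
import math

# CODATA 2018 values in SI units
C = 299_792_458.0          # speed of light, m/s (exact by definition)
G = 6.674_30e-11           # Newtonian gravitational constant, m^3 kg^-1 s^-2
H = 6.626_070_15e-34       # Planck constant h, J s (exact by definition)
HBAR = H / (2 * math.pi)   # reduced Planck constant, J s
K_B = 1.380_649e-23        # Boltzmann constant, J/K (exact); converts energy to temperature


def planck_units() -> dict[str, float]:
    """Combine c, G and hbar into the Planck length, time, mass and temperature."""
    length = math.sqrt(HBAR * G / C**3)   # metres
    time = math.sqrt(HBAR * G / C**5)     # seconds (equals length / c)
    mass = math.sqrt(HBAR * C / G)        # kilograms
    temperature = mass * C**2 / K_B       # kelvin (Planck energy divided by k_B)
    return {"length_m": length, "time_s": time, "mass_kg": mass, "temperature_K": temperature}


if __name__ == "__main__":
    for name, value in planck_units().items():
        print(f"{name:>14}: {value:.3e}")
```

The results come out at roughly 1.6 × 10⁻³⁵ m, 5.4 × 10⁻⁴⁴ s, 2.2 × 10⁻⁸ kg and 1.4 × 10³² K, the scale the post treats as the Big Bang's event horizon.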

-

What is Information?

After having now read a number of papers discussing Peircean semiotics in the context of a range of approaches within philosophy and psychology here are my tentative thoughts: — Joshs

It’s a good post. You set out the positions clearly. :up:

The second school is the pragmatism of Dewey, James and Mead, which, while sympathetic to Peirce’s approach, avoids the strict logic of his code-based semiotics in favor of an intersubjectively mediated empiricism. — Joshs

Yes. But my reason for championing Peirce here is his tight focus on the semiotic modelling relation and the mechanics of codes. This is key because what is generally missing from causal metaphysics is an account of how the two realms of mind and matter interact. Semiosis plugs that explanatory gap.

Peirce himself is pretty weak on how semiosis in fact applies to biology, neurology, psychology and sociology. His own phenomenology, or phaneroscopy, just tries to shoehorn things into a trite trichotomy of faculties - feeling, volition and cognition.

Similarly, his agapism is toe-curling. He was mired in the theism and transcendentalism that was the norm for his cultural milieu.

But on the central issue - the generality of semiosis as a mechanism to connect the two divided aspects of nature - he is sound.

Their notion of semiotics is not code or logic based but instead compatible with Wittgenstein’s language games as forms of life. They reject the concept of language as ‘meaning’, of truth as propositional belief, and critique empiricism and the myth of the given. — Joshs

That’s fine. But my reply is that this is the view from just the level of semiosis that is language use, and so just the aspect of human psychology that is socially constructed.

My interest is in countering scientific reductionism and its idealistic counter-response, romanticism, with a systems or natural philosophy metaphysics - the tradition that traces itself back to the four causes of Aristotle.

This view is well developed especially in theoretical biology. As I have described, I was focused first on the socially constructed nature of the human mind (so Vygotsky was my man there), then on neuroscience and philosophy of mind, then on complexity theory, then eventually - as the best unifying perspective I found - the circle of theoretical biologists led by Pattee, Salthe and Rosen. And these guys in turn were moving from a general hierarchy theory and modelling relations perspective to one that acknowledged Peirce as offering a unifying logical story.

But also, biosemiotics now goes far past Peirce in grounding itself not just on a process and probabilistic ontology, but the more specific one of the thermodynamics of dissipative structures. So there is a general theory where the material aspect of being is all about entropy dissipation (with the Big Bang being the most general example). And then semiosis and code explains how informational mechanism can evolve to accelerate entropy production. So the argument becomes that everything - from the Cosmos to Mind - is just a thermodynamic drive. And complexity arises out of that as grades of organismic semiosis.

This is a scientific claim more than a metaphysical one now. It stands or falls on the evidence.

Enactivism is generally thought as shorthand for 4E: enactive, embodied, embedded, extended and affective. The system is not simply embodied in its biology, it is equally embedded in its physical-social environment and extended into that ecology via tools outside the strictly determined end of the body that are nonetheless part of its functioning. — Joshs