-

Shakespeare Comes to America

Whatever mark of inferiority is stamped upon a segment of the population: race, gender, sexual persuasion etc., that segment needs to see one of its own up in power before it begins trusting in the national system as a whole. — ucarr

But Trump? Sure he was poor white folk passing – women and men, immigrants and rural. And rich Christian conservatives enabling.

So he was very much one of their own to both these constituencies, while being neither poor nor Christian.

The question is can the US look at Harris and see what it gets? I mean, if I was being asked, I'd vote anyone but Trump, so she qualifies already.

But why were so many folk dismissing her for being shallow and brittle before the fickle finger of fate had to make its hasty choice? Can't fate do a better job of picking out a worthy choice to thrust the power on? Does being in possession of Biden's campaign war chest when the music stopped count as a sufficiently Shakespearean-level script? -

Is the real world fair and just?

Where I disagree with Popper is that something needs to be falsifiable in order to be valuable on pain that history is not falsifiable, and that this is the only way we understand how science works — Moliere

That is why I like the modern systems approaches to history. Fukuyama for example offers the counter-examples that would inform this debate about how hierarchical social order should pan out.

There are horses for courses. But then also the deeper balancing principles show through.

So paraphrasing Fukuyama, he says the growing agrarian inequality within a Europe moving towards a continent of nation states and organised capital/property rights – a phase transition in terms of societies organised by the polarity of taxed masses and paid armies – sees a triadic balancing act with the king as its fulcrum.

The king is meant to do the double job of being the chief decider of the day to day but also God's representative of the long-term good here on Earth. There was not yet a proper institutional division of these powers that suited the new sovereign nation political formula – taxing agriculture for the surplus to feed the king's now centralised military. So kings struggled with this legitimacy problem and had to play off the aristocracy against the common folk.

Simply put, the dialectic balance from the king's point of view was deciding whether to side with the downtrodden peasants or the entrenched aristocracy. Sweden and Denmark saw kings side with peasants when aristocrats were weak in the 18th C. They pushed through land reform. But monarchs went the other way in Russia, Prussia and other parts east of the Elbe from the 17th C. This saw the rise of serfdom with the collusion of the moneyed state.

Or giving a finer grain – more hierarchically fleshed out account – he says that during the general period of European state formation, the peasantry didn’t really count as it wasn't organised. The monarch just faced the three levels of social power in the nobility, gentry and the third estate. That is, the lords, small landowners and the city rich. So a four legged balancing act was emerging as industry joined agriculture as the new entropic driver of a nation and its taxable surpluses.

Fukuyama then details the four outcomes of this more complex industrial phase transition happening even as the agricultural one was still playing out.

First was weak absolutism like the French and Spanish kings. Their nobility kept them in check. Russia managed successful absolutism as the nobility and gentry were tied into the monarchy in a way that allowed the upper class to ruthlessly enslave and tax the peasant class.

Hungary and Poland he classes as failed oligarchies where the aristocracy kept the king so weak he couldn’t protect the peasants, who again got exploited to the hilt.

Then England and Denmark developed strong accountable systems with effective balances. Managed to organise militarily while preserving civilian liberty and property rights.

Beyond that, the Dutch republic, Swiss confederation and Prussian monarchy all add to the variety of outcomes seen.

So history does offer its retrospective test in that we can apply a dialectical (or rather triadic systems) frame on what occurred and find that it is all the same general dilemma getting solved in a natural variety of ways.

As the systems view emphasises, developmental histories are not deterministic. They are just constrained by the requirement to stabilise their entropy flows through optimising balances. Accidents of place and time just get either smoothly absorbed into the general outcome, or that particular historical offshoot goes extinct – absorbed into someone else's general entropic flow.

As to Prussia more particularly, Fukuyama says it makes the point that it matters which comes first, the state building or the democracy. Prussia was an example of a strong autocratic state arising before then opening up to modern democracy.

The UK and US were instead democratic ahead of becoming statist, so in fact had a lot of corrupt patronage until they both found social coalitions that could enforce reform. The US heard business complaining about the state of public administration, farmers complaining about the railways, urban reformers complaining about public infrastructure. All coalesced to force institutional change.

Fukuyama says Italy and Greece are soft states that got democracy without a matching internal competition to drive out corrupt public service tendencies.

Or for the fun of it, I will tack on a larger chunk of my notes from his masterful trilogy. The point is that dialectics might be a start. But where we want to end up is being able to see the dialectical difference at the hierarchical or systems level of what is truly general and what is properly accidental in a logic of evolutionary development. And a close study of history does reveal that in terms of the evidence of a set of counterfactuals.

Fukuyama on Hegel’s Prussian bureaucratic state….

The unification under Bismarck led to a stronger model of French state control. No democratic accountability but the Kaiser was only in charge through the filter of national law. Property rights and execution of justice were impartially enforced. This set conditions for rapid German industrialisation. Business could flourish in a stable regulatory setting.

Germany emerged out of a long struggle of fiefdoms, like China’s original Qin dynasty story. War was the organising need that led to meritocratic state order. Business is local in nature, but war is a global driver.

Germany had become a fragmented landscape of “stationary bandits” - junkers - living off local peasants and fighting with hired mercenaries. In Prussia, a series of kings gradually centralised control after they started to maintain a standing army.

So control over money and command was needed, and that caused the development of an efficient bureaucracy. Prussia became known as the army with a country. The king’s Calvinist puritan creed was also a big influence as it led to state education and poor houses. Austerity imposed from the top.

But as Prussia tamed its neighbourhood, favouritism began to replace merit. Prussia lost its 1806 Battle of Jena-Auerstedt to the more modern Napoleonic army. Hegel saw Napoleon ride through Jena and said it was the arrival of the fully modern rational state. Human reason become manifest, according to Phenomenology of Spirit and The Philosophy of Right.

In 1807, Prussia’s Stein-Hardenberg reforms saw noble privilege abolished and commoners allowed to compete for posts via public exams. The new aristocracy was based on education and ability over birth. Universities became the pathway to office.

So reforms like France, and like Japan’s Meiji Restoration. Commoners could now buy protected noble property. Social mobility created. The German notion of Bildung, or moral cultivation and education of the self, distilled Kant and other German rationalism.

Prussia was an autocratic and technocratic society, like Singapore. While it had a king, the new legal distinction between public and private meant that the sovereign ruled in the name of the global state - the wish of the people - in an abstracted sense that was enshrined in a scalefree national bureaucracy.

The bureaucracy became its own institutional statehood, with Royal line coming to be marginal. Hegel saw this as the rise of a universal class that represented the whole community.

Prussia’s unification of a Germany achieved in 1871 under chancellor Bismarck. His rule resisted both control by the Kaiser and also the calls for actual democracy. A technocracy in charge.

This became a problem after WW1 as the change to a federal democracy was weakened by the entrenched bureaucracy with its right wing and conservative character. This paved the way for Hitler to take the reins. The military had become an isolated caste and stayed outside democratic control, used to being accountable to a Kaiser alone.

In the end, the bureaucratic service survived even the Nazis. Most Prussian civil servants were party members and got purged by the allies. But then had to be rehired to get post-war Germany going - p78.

So Germany - like Japan - got rational state ahead of democracy. Fukuyama says that works better and leads to sturdy, low corruption, tradition. Their industrial base also seems crucial though. -

Shakespeare Comes to America

Conservation and access are the two tectonic plates of politics. — ucarr

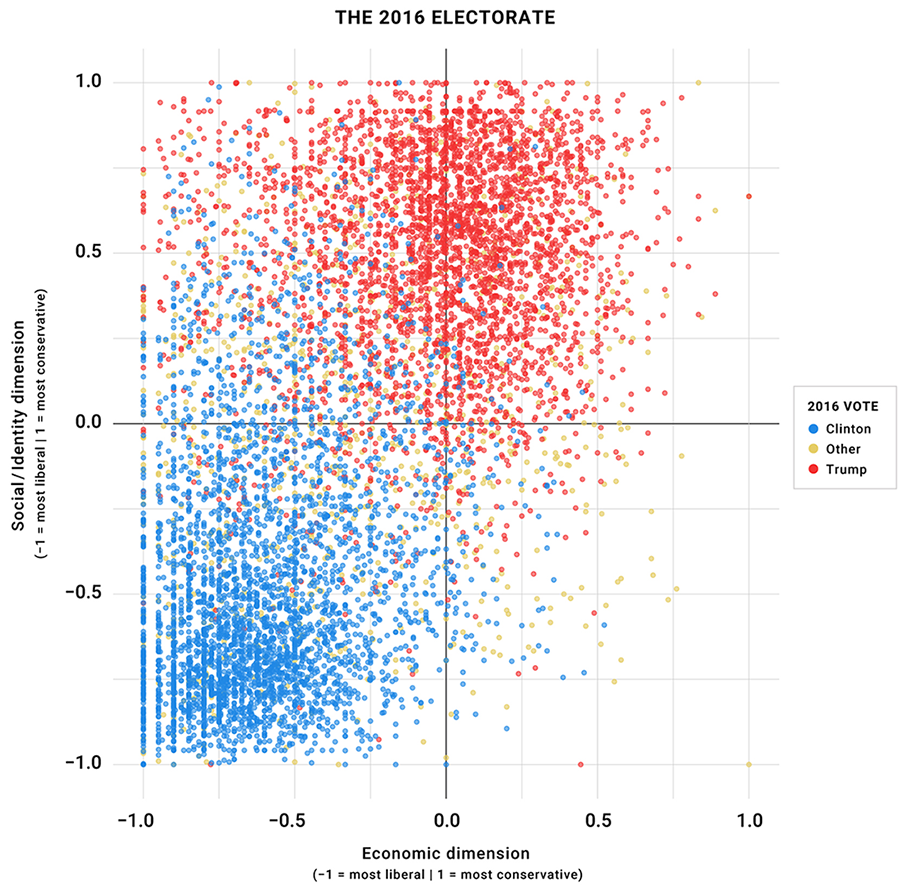

Who says this? Is this your framing or that of some source? The more usual polarities would be the four quadrants formed by the divides of liberal~conservative and economic~social. So for instance this snapshot of the US in 2016.

This data could be interpreted as a general opposition between those who prioritised personal free expression (as they ain't got the wealth) and those who prioritise the other thing of protecting their wealth (as they already can pay to do what they want).

That seems to lean into your phrasing? Capital is power and debt is slavery. Except all capital is now futurised debt in the current era.

the country is now experiencing a square dance do si do, with Democrats momentarily in the role of conservators and Republicans momentarily in the role of radicals. — ucarr

Or maybe there is a story in old wealth having the money to radicalise their own poor, and the young poor forcing big business over to their side, as it is just so obvious that 30 years of financially engineering a profit on the back of a depleting planet is not a good long-term plan?

Values aren't shifting so much as hardening. Both sides are beginning to show their desperation. The happy middleclass middle ground of debt-free boomer memory has vanished from underneath everyone's feet.

She possesses the depth of experience and brilliance of mind to advance the will of the people. — ucarr

Err ... evidence of this? Both sides are choosing bad leaders as they become opposing populist camps and no longer a rational system of balancing the collective tensions of the social~economic system.

So, per Shakespeare, Harris, being the one in position, stands ready to have greatness thrust upon her. — ucarr

You are saying that despite the fact no one seemed to think she was any good when she was VP, maybe she will rise to the occasion as president? One would certainly hope so. And the US state apparatus may still not be broken to the degree it won't steer her right. The bureaucrats and techno-elite might be the ones who actually respond to having the greatness of the responsibility thrust on them? This will be the real test of the US as a political architecture.

Harris is a woman of color. She will magnetize the female vote across party lines, likewise, the youth vote across party lines. It’s time to listen to a woman as she roars. — ucarr

That is not a powerful argument in my book. It may indeed play out in the popular vote. But what the US needs more is something sustainable done about its wealth inequalities and environmental unsustainabilities. The deeply technical issues.

How much does a president actually run all that these days? But being able to articulate that path would be the inspiring start. Not gender or race.

If you just vote for those who look like you – white bread or suitably diverse – then that is how you continue to get what you already got. A country divided by populism rather than agenda. -

Is the real world fair and just?

The reason for my references to Buddhism is that I look to it for a normative framework, one that is separate from the cultural mainstream (hence, counter-cultural). — Wayfarer

I should remind you of Joanna Macy who drew the parallels between systems theory and dependent co-arising.

https://www.amazon.com.au/Mutual-Causality-Buddhism-General-Systems/dp/0791406377 -

Is the real world fair and just?

In Hegel the first moment, of "understanding", gives way to the instability of the second moment, the "negatively rational", and thence to the third moment, the "speculative" or "positively rational". — Banno

A good job Peirce fixed Hegel’s stab at a systems story of logical development then. The dialectic became the semiotic. The tale of how dichotomies start from a position of mutualising advantage. It is then reasonable that they would continue to develop in being properly balanced.

Other Enlightenment historians and political philosophers of course saw the necessity of exactly this self-organising logic. Social democracy emerged as a balancing of the “thesis and antithesis” which is the win-win story of a system able to equilibrate its contrasting tendencies towards competition and cooperation.

Good politics is systems thinking in action. It just doesn’t get called that as such reasonableness seems simply commonsense. -

Is the real world fair and just?

My approach is like that of phenomenology - the world and mind are co-arising. — Wayfarer

And the better way to carve up this territory is semiotic. The enactive or embodied self that is sometimes the cognitive science approach you cite. Hence why you persist in a way that causes confusion.

In the semiotic story, the co-arising thing is the Umwelt. The view that has a self at the centre of its understood world.

The mind of a babe starts out vague. Self and world are not yet strongly formed as suitably dichotomised poles of its being. But learning quickly follows. A strong or crisp self/world duality becomes the regularising habit. That is the way mindfulness as a generality arises. In a nervous system set up to learn from experience that there can be both a world and its intentional master.

The conscious self is a construction that arises in the dialectical process that is a world-making.

But this is the remaking of the world as the thing in itself into our felt psychological reality.

And we know there is then also that world as a material reality as it stands in hard causal opposition to our intentions rather too often. Like when we kick a stone in fact.

To deal with this recalcitrant aspect of the world, we then seek to tame it via further semiosis. We eventually get to a scientific point of view where we become the technological gods whose every intention becomes exceptionless law.

We become not just selves but superbeings. Or just some kind of rebirth as supercharged and ill formed toddlers. Can’t decide which. :razz: -

Is the real world fair and just?

Both the is/ought and the hard problem are to do with intentionality, — Banno

I.e.: Finality. And the degree it can be both an epistemic assumption and an ontic commitment in our metaphysical schemes. -

Is the real world fair and just?

Peirce criticized Berkeley’s nominalism, the idea that universals are merely names without any real existence. Peirce, a classical realist, believed that general concepts and laws have a real existence independent of individual instances. He thought that Berkeley’s nominalism undermined the reality of general concepts, which Peirce saw as essential for a coherent theory of knowledge and science. — Wayfarer

I can't tell the difference between Wayfarer and ChatGPT anymore. :chin:

But this is another way of talking about that holism vs atomism division which a logic of vagueness hoped to resolve. The generality of form must be matched by the vagueness of matter. In this sense, matter can be seen as effete mind. Or rather less tendentiously put, the least structured form of Being. -

Is the real world fair and just?

However I don't see the theoretical bridge in either case. — bert1

In general I argue for a metaphysical holism in opposition to a metaphysical atomism. But complexly, it is a holism that must recover an atomism within it as its own contrary or dialectical limit. It is thus a holism as understood in modern hierarchy theory, systems science and Peirce’s triadic semiotic logic. A holism bounded in terms of its modal dimensions of the vague-crisp and the local-global. A holism where substantial actuality emerges from the hylomorphic pincer movement of structural necessity acting on material possibility.

So that is always the connecting thread. One finds every argument being polarised into two rival camps, two rival claims to truth. That is just how debates go. One must find a side and join it. Collectively the two sides use each other to drive themselves ever further apart. Those involved seem powerless to resist the logic of this dynamic. To arrive at agreement seems impossible because one must always pass first through some absolutising division. You joined one team and not its “other”. Intellectual peace becomes impossible. Indeed no one wants the game to end even though the disputes are long past being productive.

But a larger view can see that this is a pattern that has its resolution. The dialectic is not resolved by its dissipation in some third thing of a synthesis exactly. It is instead resolved by becoming a division - a dynamic - that now can be seen to be basic and foundational over all scales of being. The division is how the system develops in general rather than being something which simply exists in a problem creating way. At any point in a world, you should be able to see that it is based on the fact that it is balanced between two complementary tendencies. What is actual is emergent from the mutuality of a reciprocal opposition, or the logic of a dichotomy.

So an example would be our best model of a society or an ecology as a holistic state of order. At every point, at every level, we should find that the system is in that state of tension - of criticality - that is a balance between the actions of competition and cooperation. The society or ecology must always apparently be torn between these two opposing tendencies, these two teams they want to follow. But in being a polarity framed within a hierarchy, there is now a global equilibrium balance that can be struck. The system can have its optimising goal of balancing that fundamental tension, that fundamental dynamic, over all its scales.

This is why the natural theory of well balanced societies is to see them as hierarchies of interest groups. They can collectively optimise as they can become as differentiated as they are integrated. If you want an association of pickleball players, then go for it. If that impinges on the paddleball fraternity, then constraints will be exerted. Competition forces cooperation and cooperation permits competition.

So long as this is a fluid and emergent causal story, a system can seek its holistic equilibrium while also being composed of its atomistic interest groups that range from the most obscure to the most globally applicable.

The problem thus is one finds stale debates that have folk trapped. They are locked into a dilemma and feel they must simply now assert one pole or its other as the only acceptable monistic choice. I can point the way out of this bind. But their own atomistic reasoning is what won’t let them free themselves. -

Is the real world fair and just?

So the "leap" is : things are individuated, and they can only be so if they were an undifferentiated potential. — Moliere

It is not merely that they are individuated. Any particular thing would require some generalised dichotomy that allows it to be measurably this thing and not that thing.

So where is this particular thing on the scale spanning the gamut from chance to necessity, discrete to continuous, part to whole, matter to form, local to global, integrated to differentiated, construction to constraint, and so forth. All the grounding dichotomies that made metaphysics the generalised reality-modelling logic that it is.

In my discussions with fellow semioticians, a dichotomy of dichotomies emerged from this murk. The local~global and the vague~crisp.

The local~global encapsulates the idea of hierarchical structure in a pretty strong mathematical way. But it is the synchronic view. The view of everything as it is organised right now. You then need the developmental trajectory point from the vague to the crisp to capture the diachronic view. How things started and where they end as their reciprocal limit.

You can imagine the opposite also happening – the crisp dissolving back into the vague. That was Anaximander's model. But the Big Bang does argue for a one way symmetry-breaking arrow. Which makes sense if the vague~crisp is indeed the most fundamental level as existence is really a self-organising process of coming into being by becoming more locally~globally divided.

It is what cosmology tells us. The Universe exists because it does just this. It begins in the radical vagueness of the Planck scale – what could loosely be called a quantum foam. And then it expands and cools, becoming more definitely divided against itself with each tick of the cosmic clock. It doubles and halves, doubles and halves as its global expanse keeps growing while its local contents keep thinning.

So sure, the PNC can be applied to the particular individuated thing in the way classical logic likes to imagine. We can have one cow and then another cow, and indeed a modal infinity of cows. They seem to come ready-made as individuated. And each can be further individuated as substantial beings with any number of differentiated properties.

This cow could be black and this other cow white. But a cow couldn't be black and white all over. Or this cow could be in my paddock or in your paddock. Just not – barring quantum superposition – in both at once.

However I am talking at the level of universals. And what is a cow universalised? There we start moving into utterances that seem to have some vagueness about them. Or are generalised enough that we can see our working concept is suitably encompassing.

Is a cow still a cow if it has no legs? Is a cow still a cow if it is a hot air balloon flown over a rock concert?

What would it mean to have pinned down this vague~crisp axis of developmental or evolutionary ontology – metaphysics' diachronic vantage point – so as to make it a robust logical relation? The reciprocal or inverse relation that dialectics would suggest. A symmetry breaking taken to its opposing limits so that it indeed comes to be a fundamental asymmetry.

Can one see the cow in the larval sponge? Well we can think we find the first hazy vestiges of a creature having a segmented backbone. A vague potential now sufficiently differentiated to begin producing a whole host of further differentiations – variations of the same new gene program – to produce complex vertebrate bodies like a cow.

And can we see the crisp end outcome that factory farming puts on that continued differentiation – the further individuating of cow-substance into non-contradicting types? The kind of cow which one might start to order up in engineered fashion.

"I want an A2 milker and not an A1 as those give people leaky guts. And you risk getting sued if you can't deliver exactly the type that was asked."

Specificity becomes an open-ended possibility once a dialectical spectrum of choices has been set out in a suitably logical fashion. One that is indeed already able to support counterfactuality.

Vagueness is then that which swallows all counterfactuality like a black hole. Creating the famous black hole information paradox. -

Is the real world fair and just?

The result of contradiction in classical logic is not just vague - it's quite literally anything. — Banno

You mean, any thing. Anything would be the generalising leap from the differentiated particular. And let’s not make the modalist mistake of believing in infinite sets except as a pragmatic tool for taking limits. -

Is the real world fair and just?

The part here that I'd like to better understand -- and is part of why I find Hegel frustrating -- is the "leap" from prior-to-PNC to PNC, or some variation thereof. — Moliere

There is a retrojective argument. For things to be crisply divided then they would have had to have been previously just an undifferentiated potential. A vagueness being the useful term.

Our imaginations do find it hard to picture a vagueness. It is so abstract. It is beyond a nothingness and even beyond the pluripotential that we would call an everythingness. It is more ungraspable as a concept than infinity.

Even Peirce only started to sketch out his logic of vagueness. That is why it excited me as an unfinished project I guess. One very relevant to anyone with an evolutionary and holist perspective on existence and being as open metaphysical questions. -

Is the real world fair and just?

Maybe it's because the only aims in your philosophy are instrumental and pragmatic. No 'beyond'. — Wayfarer

Jeez, you’re right. Urging folk to wake up and smell the oil - understand what now drives our political and economic settings - is really pretty small beer compared to your uplifting moralising and karmic acceptance.

You win the big prize for your heart being “in the right place”.

The reason you're critical of reductionism is not philosophical, but technical - semiotics provides a better metaphor for living processes than machines. And yet your descriptions are still illustrated with 'switches' and 'mechanisms' and energy dissipation — Wayfarer

Twist away but I’ve made it clear enough that biosemiosis is talking literally about the interaction between mechanical forces - the way an enzyme can close pincers around two molecules it wants welded together - and quantum forces like entanglement and tunneling, or how clamping two molecules allows them to quantum jump the chemical potential threshold and weld them “for free”. Or even for profit.

Sure, technology is now human ingenuity repeating the same biosemiotic trick. But without actual switches and ratchets and motors at the nanoscale level where biology meets chemistry, you wouldn’t even be here to dispute this as an essential fact about your being.

It’s a recent discovery of course. Barely 15 years old as science. Philosophers may catch up in their own sweet time. If they have nothing better to do. -

Is the real world fair and just?

First, and most obviously, in classical logic asserting something and its negation leads to contradiction, not to some third option. — Banno

If the PNC said it all, why does it lead on to the LEM as the third law of thought?

Peirce offered his answer. Vagueness is thus defined by that to which the PNC fails to apply, and generality is defined as that to which the LEM fails to apply.

Dichotomies arise out of monisms and cash out in trichotomous structure. The particular so beloved of logical atomism is thus always found betwixt the larger Peircean holism of the vague and the general. The logic of hierarchical order in its proper form. Not your levels of corporate management tale meant to conform to the brokenness of the is/ought divide.

And secondly, even if we suppose that dialectic does not breach non-contradiction, the result is not clear. — Banno

The result is mathematically clear. Reciprocals and inverses are pretty easy to understand as approaches to mutually complementary limits of being. A dialectical “othering”.

The dialectic doesn’t have to worry about breaching the PNC. It is how the PNC is itself formed. It is the division of the vague on its way to becoming the holism that is the general - the synthesis following the symmetry-breaking. -

Is the real world fair and just?

I can see why you would say that, but your perspective is predicated on the physicalist notion that mind is 'the product of' the brain. — Wayfarer

In what sense is that a notion? And don't forget that I treat mindfulness as a general term that would span the biosemiotic gamut from genes to numbers as levels of encoding. So it ain't all about the neurons says the true anti-reductionist here. :wink:

The brain doesn't generate consciousness. That is the kind of statement that betrays an idealist who wants to treat the mind as another kind of essential substance to stand alongside the substance that is matter.

What a biosemiotician would say is that the nervous system is the mechanical interface between the organism's informational model of the world and the resulting self-interested metabolic and behavioural changes made to occur in that world.

It is the production of a living process rather than a material stuff or even informational state.

I find his metaphysics hard to fathom, but he does say that 'matter is effete mind'. — Wayfarer

Here we go. Just repeat the same old contextless quote. As I've said in the past, read Peirce and you can see he was absorbed by the way dissipative states of matter could look remarkably lively and self-organising. The line between the animate and inanimate looked rather small when you considered how matter could shape itself into orderly forms under the Second Law.

Peirce, who was Harvard's top chemistry student for his first degree, was up to date with materials science. But working quite a while before the genetic code was cracked. So he did not have the advantage of this epistemic cut to draw the proper line. His semiotics – as a general triadic logic of nature as well as of speech as a triadic sign system – simply anticipated what biology and neurology were shortly to discover within their own scientific domains.

That is central to his idea of agapē-ism, that love, understood as a creative and unifying force, plays a crucial role in the development and evolution of the universe and is a fundamental principle that guides the growth of complexity and order in the cosmos. — Wayfarer

Again we can forgive Peirce for being of his time and place. He was under great social pressure to sound and behave suitably Christian – it was a requirement even of his Harvard position, and his only sponsor after being bounced out of academia for the scandal of living unmarried with a woman was also wanting publishable writings leaning in the same theological direction.

Replace love with cooperation or synergy and you can perhaps see why he might have focused on this as a missing aspect of the Darwinian evolutionary theory that was sweeping over his world at that time.

He believed that the creative and purposive aspects of evolution could not be fully explained by natural selection alone. In that he was a lot more like Henri Bergson than Richard Dawkins. — Wayfarer

Precisely. In the late 1800s, a good Episcopalian would frame a response to Darwinian competition that way. In the mid-1900s, the socially accepted frame became Marxian biology. And capitalists remain in favour of the original "red in tooth and claw".

Are your own views expressed here free from that kind of cultural entrapment? Isn't Eastern religious philosophy something that has swept through Western culture in a number of counter-culture waves over the past few hundred years?

From an idealist standpoint, it is equally plausible to see the emergence of organic life as the first stirrings of intentionality in physical form. — Wayfarer

But then you would have to explain how exactly. What changes? What creates this epistemic cut?

Otherwise just hand-waving – hand waving away the much better formed arguments that exist.

Of course primitive and simple organic forms have practically zero self-awareness or consciousness in any complex sense, but already there the self-other distinction is operative .... Alan Watts' cosmic hide-and-seek, in which the Universe appears to itself in any number of guises. — Wayfarer

How easily you slide from the germane to the ridiculous. This is the hallmark of your style as folk keep noting. One minute we seem to have some kind of foothold on a sensible story, the next we are whisked away into spiritualist noodlings. And you seem always baffled when this is pointed out to you as an illegitimate chain of argument. -

Is the real world fair and just?I suspect that we both envision a middle-ground or transition between the poles of Mind and Matter. — Gnomon

Matter and form make the better metaphysical ground zero. Aristotle's hylomorphism. Or what might be called ontic structural realism in modern metaphysics.

A world of enmattered form or substantial being must exist as a starting point. And then life and mind evolve out of that as something further.

So now a new higher level dichotomy becomes required. We must make a distinction between the inanimate and the animate. We have to have something that separates the tornado – which can seem pretty lively in being a self-organising dissipative structure – from an actual organism. Even if the organism is also still a self-organising dissipative structure, just now self-organising in a self-interested fashion by virtue of being able to encode information.

So an organism is just as substantial as inanimate matter. All matter being in fact dissipative structure with its potential for topological order (the ontic structural realism thesis).

But an organism then adds the new thing of a modelling relation. It can encode informational states that mechanically regulate entropy flows.

A river just throws its snaking bends across the plains to optimise its long-run entropy production. A farmer can throw a whole system of drainage ditches and sluice gates across that landscape so as to harness the water flow for a useful purpose.

So the mistake in terms of idealism is to treat "mind" as something as foundational as substantial being. Substantial being has to evolve first – as enmattered form – to then become a material potential that itself can be harnessed by the mind of an organism. -

Semiotics and Information TheoryBits don't really work well as "fundamental building blocks," because they have to be defined in terms of some sort of relation, some potential for measurement to vary. — Count Timothy von Icarus

Information theory really is anti-semiotic in its impact. Interpretance is left hanging as messages are reduced to the statistical properties of bit strings. They are a way to count differences rather than Bateson’s differences that make a difference.

It becomes a theory of syntactical efficiency rather than semantic effectiveness. An optimisation of redundancy so signal can be maximised and noise minimised.

Which is fine as efficient syntax is a necessary basis for a machinery of semiosis. But the bigger question of how bit strings get their semantics is left swinging.
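The point about counting differences rather than meanings can be made concrete with a small sketch. It assumes the standard Shannon measure of average bits per symbol; the function name and the two example strings are hypothetical, chosen only for illustration. Two messages that a cell or a reader might interpret in opposite ways come out statistically identical.

```python
from collections import Counter
from math import log2


def shannon_entropy_bits(message: str) -> float:
    """Average information per symbol, in bits, from symbol frequencies alone."""
    counts = Counter(message)
    total = len(message)
    return -sum((n / total) * log2(n / total) for n in counts.values())


# Two hypothetical bit strings. An interpreter might read one as
# "start making this" and the other as "stop making that".
start_signal = "1100"
stop_signal = "0011"

h_start = shannon_entropy_bits(start_signal)
h_stop = shannon_entropy_bits(stop_signal)

# The measure sees only the statistics of the symbols, so both strings
# score the same. Whatever distinguishes them lies with the interpreter.
print(h_start, h_stop)
```

Nothing in the calculation refers to what either string is for, which is the sense in which the semantics is left swinging.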

Computation seems to confuse the issue even more. The program runs to its deterministic conclusion. One bit string is transformed into some other bit string. And the only way this carries any sense is in the human minds that wrote the programs, selected the data and understood the results as serving some function or purpose.

In the 1990s, biosemiosis took the view that the missing ingredient is that an information bit is really an entropy regulating switch. The logic of yes and no, or 1 and 0, is also the flicking on and off of some entropically useful state. Information is negentropy. Every time a ribosome adds another amino acid to a growing protein chain, it is turning genetic information into metabolic negentropy. It is making an enzyme that is a molecule with a message to impart. Start making this or stop making that.

So information theory does sharpen things by highlighting the syntactical machinery - the bank of switches - that must be in place to be able to construct messages that have interpretations. There is the thing of a crisp mechanical interface between an organism’s informational models and the world of entropy flows it seeks to predict and regulate.

But in science more generally, information theory serves as a way to count entropy as missing information or uncertainty. As degrees of freedom or atoms of form. It is part of a pincer movement that allows order to be measured in a fundamental way as dichotomous to disorder.

Biology is biosemiotic as it is about messages with dissipative meanings. Thoughts about actions and their useful consequences.

But physics is pansemiotic as it becomes just about the patterns of dissipation. Measuring order in terms of disorder and disorder in terms of order. A way to describe statistical equilibrium states and so departures from those states. -

Immanent Realism and Ideasif there is such a thing as an idea that has a definable ontology, then it in some way would need to be instantiated. However how does an idea find its first instantiation? — Mark Sparks

If we take an idea to mean a form or structure of organisation, then it would be instantiated only upon its stable and persisting realisation.

That would be the concrete view. A form is what binds material possibility to some enduring state of order. So that is what is found by the end, in the limit, because change has effectively ceased. Instability is what has been eradicated.

This is, I would say, the ontology of quantum field theory and other physical theories based on the emergence of topological order. A hot iron bar has jiggling iron atoms with their magnetic dipoles pointing randomly in all directions. But as it cools, all the jiggling slows and the dipoles have to line up in some mutually agreed fashion. The iron bar itself now has the new emergent and topological state that we call a magnetic field.

So it is the same general logic. Platonic ideas were an early way to talk about what we would now think of as an ontology of structuralism. The world is bound by global mathematical forms – the constraint of symmetries – which it cannot in the end ignore.

These constraints "always exist" in some real sense. It is just that they exist as the final cause or goal state. They are concretely realised by the end when the forces of constraining order have put an end to the hot jiggle of the material possibilities. -

Is the real world fair and just?You are probably aware already of my disregard for the Kantian notion of the thing in itself. — Banno

It is apparent that there is a distinction between what we believe and how things are. This distinction explains both how it is possible that we are sometimes wrong about how things are, and how we sometimes find novelties. In both cases there must be a difference between what we believe is the case, and what is the case. We modify what we believe so as to remove error and account for novelty; which is again to seek a consistent and complete account. — Banno

This would make more sense if we paid attention to the dichotomistic manoeuvre involved.

How do we know we are wrong? That is measured by the degree to which we are not-right.

And how do we know then what is right? Well that is reciprocally measured in terms of the degree to which we are not-wrong.

That is how the mind works. It course-corrects from both directions as it seeks to zoom in on reality's true nature. It increases its resolution by minimising its errors of prediction. And what is believed to be true always harbours some doubt, what is doubted always can hold some germ of truth.

Pragmatism then says inquiry only has to reach the threshold of belief being predictive enough for the purpose in mind. We become right enough not to care about the wrong, and yet also still wrong in never quite reaching what would be some absolute and ideal state of truthiness.
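As a toy illustration only, the pragmatic stopping rule can be sketched as a loop that corrects a belief by its prediction error and halts once the error falls below a threshold set by the purpose in hand. The function name, learning rate, tolerance and the stream of observations are all hypothetical assumptions, not a model of any actual cognitive mechanism.

```python
def refine_belief(observations, belief=0.0, rate=0.5, tolerance=0.1):
    """Nudge a belief toward each observation until the error is small enough."""
    steps = 0
    for observed in observations:
        error = observed - belief    # positive: guessed too low; negative: too high
        if abs(error) <= tolerance:  # right enough for the purpose in mind
            break
        belief += rate * error       # course-correct from whichever side we erred
        steps += 1
    return belief, steps


# A hypothetical world that keeps reporting the value 10.0.
belief, steps = refine_belief([10.0] * 20)
print(belief, steps)
```

The loop stops while the belief is still slightly off: inquiry ends at "predictive enough", not at an exact match.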

Semiotics adds on top of that the fact our modelling relation with reality is never actually charged with capturing its full ontic truth. As biological creatures, we only need to insert ourselves into our worlds in a semiotically constructed fashion. The task is to build ourselves as beings with the agency to be able to hang together in an organismic fashion.

Which is where we get back to the "idealism" of embodied or enactive models of cognition that have finally come into vogue after so many years. Philosophy of mind catching up with the psychological science in its own sweet time. -

Wittgenstein, Cognitive Relativism, and "Nested Forms of Life"Of course we can say: "but this is no issue. It would be a total violation of common sense to say we can't follow public rules. When someone violates a rule in chess we can see it and point it out to them. — Count Timothy von Icarus

A lot of sensible stuff has been said in this thread. I would argue that the central issue is with the idea of "following rules" which should be replaced by that of "acting freely within constraints".

Rule following is what you get when the constraints on free action become so restrictive as to completely eliminate an actor's degrees of freedom – which can include accidents and even just vagueness as well as other possible choices.

From a semiotic point of view, humans are shaped by constraints at the level of our various informational coding mechanisms – genes, neurons, words, numbers. Each is some kind of system of syntax and semantics that can be used to construct states of constraint. Nature just comes with its physical constraints on the possibilities of actions. Life and mind then arise through the added semiotic machinery by which further constraints can be constructed on that physics and chemistry.

So when semiosis has evolved to the level of mathematical logic or computation, the constraints that can be imposed on a person's behaviour can seem so fixed and syntactic – lacking in semantic freedom and not in practical need of any personal interpretation – that they indeed become rule following. Arithmetic, chess games, rules of etiquette at a formal banquet, a computer program, are examples where the constraint on behaviour or action is intended to approach this mechanical extreme.

But down at a lower level, like the social sphere of human language, the constraints are deliberately more permissive. Rules are there to be interpreted by the person. An intelligent response is expected when applying a general limit to a particular occasion. It is this semantic relation which shapes humans as self-aware actors within a community of language users, all jostling to constrain each other's actions in various pragmatic ways.

Go further down the scale to neurology and biology and you see constraints being even less restrictive, more permissive. Our genetic programs indeed depend on the accidents of random recombination to try out a variety of rule settings and so let the constraints of the environment do their natural selecting, increasing the species' collective fitness.

So what confounds is the idea of black and white rule making which then treats all forms of freedom – other choices, accidents and chance, simple vagueness or ambiguity as to whether the rule is being broken or not – as a problem for the proper operation of a rules based order.

But hierarchy theory as applied by biologists is quite used to the idea of levels of constraint. You have general constraints and then more particularised constraints nested within them. This is how bodies develop.

Every mammal has a femur as a general rule. Then it can grow longer or shorter, thicker or thinner. For a species it will have some average size but also a genetic variety. Or it may be stunted by accidents like a break or period of starvation, over-grown due to some disease. The outcome is always subject to a hierarchy of constraints – constraints that are informational in being syntactically-encoded semantics. But eventually the constraints peter out as either the resulting differences in outcome become too vague, too unpredictable, or in fact part of the requisite variety that needs to be maintained just so that the lifeform as a whole remains selectively evolvable.

And we can look at humans – living as biological organisms at their language-organised sociocultural level – continuing the same causal story. Constraints on behaviour can be constructed by utterances. But utterances need to be interpreted. Some social constraints can in fact be quite soft and permissive, others rigorously enforced. And then the free or unconstrained actions of the interpreting individual could be due to the assertion of some personal agency, or just some accident of understanding, or even just a vagueness as to whether the demands made by a constraint are being met or not.

It is not a cut and dried business when language is the code. Maths/logic arose as an evolutionary next step to tighten up the construction of constraints so as to remove ambiguity, accident and agency from the semiotic equation.

The problem is that making behaviour actually mechanical is not necessarily a good thing in life. It rather eliminates the basis of a self-balancing or adaptive hierarchical order. -

Is the real world fair and just?we are also agents who make choices and hold ourselves responsible for our actions, and need to sense that we are participants in a meaningful cosmos, not just ‘heat sinks’ doing our own little bit towards maximising entropy. — Wayfarer

Sure, we can construct our moral economies, but they are founded on entropic economies. We can't actually become detached from the world in practice – which is kind of the Buddhist vision you are preaching? A monk must still be fed to muster the strength to sit absolutely still and attempt not to even think but just open up.

What I preach is then this connection that runs through life and mind all the way up through human social organisation and arrives at the technological state that is our mindsets today.

You don't have to like this outcome to accept that it just is a continuation of the basic thermodynamic imperative. But the moral debates have to recognise the reality upon which they are founded to have any real traction on our social worlds as they are.

We are hooked on burning fossil fuels like a drug addict on heroin. India and China – homes to Buddhism – are not notable as nations resisting the current moral order which is mainlining the stuff.

If the atmosphere was an actual heat sink rather than an insulating blanket blocking the exit route to outer space, then this explosive leap into a technological age perhaps wouldn't even matter. To connect to the OP, it was just bad luck that reality had this practical limit.

So no one is saying that we must exist to entropify, even though we must entropify to exist. What I am saying is the second part of that relation must be grounding to any discussion of what we might actually want to do with the agency that entropification grants us.

We have lucked into technology and the fossil fuels that can rocket power us somewhere. But there are also these pesky limits on burning it all in as short a time as possible. It seems we have locked ourselves into the most mindless track because we haven't focused enough on the science that can see the two sides to the story. Instead, folk just want to dream about peace and love, truth and justice – all civilisation's luxuries without regard for any of civilisation's costs. -

Is the real world fair and just?It’s not a question of whether the ‘wave function’ is or isn’t mind-dependent. The equation describes the distribution of probabilities. When the measurement is taken the possibilities all reduce to a specific outcome. That is the ‘collapse’. Measurement is what does that, but measurement itself is not specified by the equation, and besides it leaves open the question of in what sense the particle exists prior to measurement. — Wayfarer

What might help is to consider that "the measurement" involves both preparing the coherent state and then thermally interacting with the system so as to decohere it.

A deflationary understanding of the collapse issue is that first up, we accept our experiments demonstrate there is something to be explained. Quantum physics shows that entanglement, superposition, contextuality, retrocausality (as time entanglement) are all things that a larger view of the real world, of Nature in itself, has to take into account. A classical level of description only emerges in the limit of a grounding quantum one.

But then what is going on to decohere the quantum?

To demonstrate the quantum nature of reality in the lab, we have to first prepare some particle system in a state of coherence. So the scientist makes what is then broken. Nature is set up for its fall: first that fall is prevented from happening in its own natural way – which it otherwise would, since there is usually dust, heat, and other sources of environmental noise about at our human scale of physics – and then it is allowed to happen at a moment and in a way of the scientist's choosing.

So a pair of electrons are entangled in sterile conditions that quite artificially create a state of quantum coherence. The scientist's artfully arranged machinery – built with scientific know-how, but still a material device designed to probe the world in a certain controlled fashion – manufactures a physical state that can be described by a probabilistic wave function.

Now this wavefunction already includes a lot of decohered world description. It assumes a baseline of classical time already. In quantum field theory, the Lorentz invariance that enforces a global relativistic classicality is simply plugged in as a constraint. So a lot of classical certainty is assumed to have already emerged via decoherence starting at the Big Bang scale that now allows the scientist to claim to have the two electrons that are about to perform their marvellous conjuring trick.

A state of coherence is prepared by the larger decohering world being held at bay. A wavefunction is calculated to give its probabilities of what happens next. The wavefunction builds in the assumption about the lack of dust, vibrations, heat in the experimental array. Those are real world probabilities that have been eliminated for all practical purposes from the wavefunction as it stands.

Instead the experiment is run and the only decohering constraints that the electron states run into are the specific ones that the scientist has in advance prepared. Some kind of mechanical switch that detects the particle by interacting thermally with it and then – because the switch can flip from open to closed – report to the scientist what just happened in the language that the scientist understands. A simple yes or no. Left or right. Up or down. The coherent state was thermally punctured and this is the decoherent result expressed in the counterfactual lingo of a classicality-presuming metaphysics.

Out in the real world, decoherence is going on all the time. Nature is self-constraining. That is how it can magic itself into a well formed existence. It is a tale of topological order or the emergence of complex structure. Everything interacts with everything and shakes itself down into some kind of equilibrium balance. Spacetime emerges along with its material contents due to the constraints of symmetrical order. Fundamental particles are local excitations forced into a collective thermal system by the gauge symmetries of the Standard Model – SU(3)×SU(2)×U(1).

The quantum is already tightly constrained by the Big Bang going through a rapid fire set of phase transitions in its first billionth of a second. The wave function at the level of the universe itself becomes massively restricted very quickly.

So in our usual binary or counterfactual fashion, we want to know, which is it? Is the cosmos fundamentally quantum or classical? But as an actuality, it is always an emergent mix. The realms we might imagine as the quantum and the classical are instead the dichotomous limits of that topological order.

There never is a pure state of quantum coherence (and so indeterminacy) just as there never is a pure state of classical determinacy. The Big Bang quickly bakes in a whole bunch of constraints that limit the open possibilities of the Universe forever. Thermal decoherence reigns. The Universe must expand and cool until the end of time.

As humans doing experiments, we step into this world when it is barely a couple of degrees away from absolute zero. We can manipulate conditions in a lab to demonstrate something that illuminates what a pure quantum metaphysics might look like in contrast to a pure classical metaphysics. We can filter out the dust, heat and vibration that might interfere with the coherent beam of a laser or whatever, and so start to see contrary properties like entanglement and contextuality. Then we can erase that view by imposing the click of a mechanical relay at the other end of a maze of diffraction gratings and half-silvered mirrors that we have set up on a table in a cool and darkened air-tight room.

But the Cosmos was already well down its thermal gradient and decohered when we created this little set-up. And even our "collapse of the wavefunction" was bought at the expense of adding to that thermal decoherence by the physical cost of some mechanical switch that got flipped, causing it to heat up a tiny bit. Even for the dial to get read, some scientist's retina has to be warmed fractionally by photopigments absorbing the quanta of its glowing numerals. The scientist's brain also ran a tiny bit hotter to turn those decoded digits into some pattern of interpreting thought.

"Aha, it happened! I see the evidence." But what happened apart from the fair exchange of the scientist's doing a little entropy production in return for a small negentropic or informational gain?
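To put a rough number on how small that fair exchange can be, here is a back-of-envelope sketch. It assumes Landauer's principle, which the post does not name: erasing one bit of information dissipates at least k_B·T·ln 2 of heat. The function name and the 300 K room temperature are illustrative assumptions, and a real relay or retina dissipates vastly more than this floor.

```python
from math import log

BOLTZMANN_J_PER_K = 1.380649e-23  # Boltzmann constant, exact in SI units


def landauer_limit_joules(temperature_kelvin: float) -> float:
    """Theoretical minimum heat released when one bit is erased."""
    return BOLTZMANN_J_PER_K * temperature_kelvin * log(2)


# Assumed lab temperature of 300 K, for illustration only.
room_temperature_cost = landauer_limit_joules(300.0)
print(room_temperature_cost)  # a few zeptojoules per bit
```

The figure is tiny but never zero, which fits the claim that the informational gain is always paid for in entropy production.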

What collapses the wavefunction? Well, who prepared it in the first place? The Universe expended unimaginable entropy in its Big Bang fireball to bake in a whole lot of decoherent constraints into the actuality of this world. The needle on any quantum purity was shoved way over to the other side of the dial even in the first billionth of a second.

A complication in the story is that it is in fact right about now, 13 billion years down the line, that we are nearest a classical realm with its rich topological order. Matter has become arranged into gas clouds, stars, planets, and scientists with their instruments and theories. But in the long run, all that matter gets returned towards an inverted version of its original near-quantumly coherent state. The Heat Death de Sitter void where all that exists is the black body radiation of the cosmic event horizon.

But anyway, right now there is enough negentropy about in terms of stars and habitable planets to feed and equip the scientist who wants to know how it all works. The inquiring mind can construct a delicate state of coherence on a laboratory bench and run it through a maze that represents some counterfactual choice. Does the wave go through one or other slit, or both slits at the same time? A classical metaphysics seems to say one thing, a quantum metaphysics demands the other.

Which reality we then see depends on at which point we thermally perturb the set-up with our measuring instrument. If reading the dial and becoming conscious of the result mattered so much, then we would likely have to take greater precautions about keeping them well clear of the equipment too, along with the dust, vibration and other environmental disruptions.

The scientist of course finds the result interesting because it says both understandings of reality seem true. Particles are waves and waves are particles. Reality is quantum in some grounding way – the Big Bang and Heat Death look to confirm that's where everything comes from and then eventually returns as some kind of grand dimensional inversion. Hot point to cold void. And then what we call classicality is the topological order that arises and reaches its passing height somewhere around the middle. Like right about now. You get electrons and protons making atoms, which make stars, which make planets, which get colonised by biofilms that earn their keep by keeping planetary surfaces about 40 degrees C cooler than they would otherwise be if they were left bare.

And so there you have it. Mindfulness is life doing its thing of accelerating cosmic entropification – creating states of coherence and then decohering them down at the level of enzymes and other molecular machines. A scientist can play the same game on a bench top. Spend a little energy to construct a state of poised coherence. Report what happens when a little more energy is spent on decohering it within the contextuality of different maze configurations.

Well designed, a contrast between a quantum metaphysics and a classical physics can be demonstrated. We can take that demonstration and apply it to the entirety of existence as if that existence were entirely hung up on the question of which kind of thing is it really – pure quantum or pure classical?

Or we can instead look a little closer and see that the quantum and the classical are our abstracted extremes and what is really going on is an act of cosmic decoherence within which we can roll the decoherence back a little bit towards a dust-free and isolated coherence and then let it catch up again rather suddenly at the click of a mechanical relay. The almost costless informational transaction that still nevertheless has its thermal cost, as would be measured by a thermometer attached to the mechanical relay. -

Is the real world fair and just?If you looked at the Mind Created World piece, I explicitly state that I am not arguing for any such thing. — Wayfarer

I think a few folk are frustrated that this is something you dance around. The implication always seems to be there in what you post.

The only salient point for my argument is the sense in which the measurement problem undermines the presumptively mind-independent nature of sub-atomic particles - that at some fundamental level, the separation of observer and observed no longer holds. And that’s because in the final analysis, reality is not objective but participatory. We’re not outside of or apart from reality - one of the fundamental insights both of phenomenology and non-dualism. It’s easy to say, but hard to see. — Wayfarer

So here we go again.

Sure, the Copenhagen interpretation in the reasonable form I hear from its defenders is that if we can’t draw a line between observers and observables then we do have to say the only certainty is that the outcome of the probabilistic prediction can only be found by somebody actually checking the reading on an instrument. That might suck, but it is where things stand.

Yet we also know that we are built of biology where our god damn enzymes, respiratory chains, and every bit of basic molecular machinery couldn’t function unless they could “collapse the wavefunction” to get the biochemistry done. Nothing would happen without our genetics being able to regulate thermal decoherence at that level of cellular metabolism.

You could call this participatory, but it is only that in the physicalistic semiotic sense, the modelling relation sense - a sense far more subtle than the hoary old subjective/objective or ideal/real sense.

The deep question, to refer you to Pattee again, is how can a molecule be a message? How does genetic information regulate a metabolic flow?

Or at the level of the neural code, how does the firing of neurons regulate a metabolic flow at the level of intelligent organisms navigating a complex material environment?

Figure this out as a scientific story at the biophysical level and see how it then provides a physicalist account - that is “participatory” if you insist - at all levels of organismic structure. -

Is the real world fair and just?But how does that detract from what I’m saying? — Wayfarer

First job was to wind you back from confusing cognition as epistemic method with cognition as some kind of ontological mind stuff that grounds mind-independent reality.

Then second I offered the expanded view of how the scientific method is just more of the same. All cognition follows the same rational principles. -

Is the real world fair and just?So we will all agree on north and south and many other facts. But all of those agreements still rely on perspective, we're part of a community of minds agreeing and disagreeing. — Wayfarer

The sense that the world exists entirely outside and separately to us is part of the condition of modernity, in particular, summarized by the expression 'cartesian anxiety': — Wayfarer

You've neglected the epistemic fact that an organism's reality modelling demands this twin move of generalising and particularising, abstracting and individuating, integrating and differentiating, just to produce the symmetry-breaking contrast that renders the world intelligible in the first place.

You are trying to paint this modelling dynamic as a move from a subjective pole to an objective pole. Somehow big bad reductionist science – with its epistemic system of laws and measurements – is abandoning the personal for the impersonal. The particular is being sacrificed on the altar of the general.

But – as Gestalt psychology and indeed neurobiology in general tell us – organisms understand their world in terms of the construction of contrasts.

If we couldn't generalise, your landscapes would just be a blooming, buzzing confusion of specks of light. We would not parse into shapes and objects of some more generalised type. We couldn't imagine the land held stories that might connect it as a more general historical flow.

And equally, in generalising the notion of say a mountain or river, that allows us to be more particular about this or that mountain or river. This or that mountain/river in terms of its material potentials, or its tribal significance, or indeed its corporate significance.

So this all sits on the modelling side of the equation. It is part of the epistemology. Generalising and particularising is simply the crisp division that a mind would have to impose on its world to get the game of modelling going.

You say see that butterfly. Do I need to ask what a "butterfly" generally is? Can I now have my attention quickly drawn to some particular butterfly you have singled out for some reason?

But if you exclaim, just look at that quoll, well then – without being equipped with that general concept – I might be equally lost to understand the particular experience being referred to.

So science arises in the same fashion as it is just another level of semiotic world modelling. Its encoding language is just mathematical rather than linguistic, neural or genetic.

Science takes abstraction and individuation to their practical limits at the communal level of human modelling. Science combines Platonic strength mathematical form with the specificity of marching around the world equipped with calibrating instruments such as a clock, ruler, compass and – a thermodynamicist might add – thermometer.

So nothing beats science for abstracting because nothing beats science for individuating. This is why, as a way of modelling reality, it certainly transcends our evolved neurobiological limits, and our socially-constructed linguistic limits. It arrives at its own physical limits – those set by the current state of development of the mathematical generalisations and the sensitivity available to our measuring instruments.

But again, this is all on the epistemic side of the equation. We are getting to know the real world better by transcending our previous epistemic limitations, not by actually stepping outside them.

Nor are we abandoning the particular for the general. We are refining our senses as much as our concepts.

Quantum theory draws attention to how much more sharply we now see. Look, a counter just ticked! We must have made a measurement and "collapsed a wavefunction". Whatever that now means in terms of a mathematical theory that folk feel must be transcribed back into ordinary language with its ordinary cultural preconceptions and ordinary sensory impressions of "the real world".

In sum, epistemology is organised by the dichotomy of the general and the particular. It is how brains make sense of the world in the first place. The cognitive contrast of habits and attention. The gestalt of figure and ground.

Science then just continues this useful construction of a world – the phenomenology that is a semiotic Umwelt, a model of the world as it would be with an "us" projected into it – at a higher level of generality and particularity.

Sure, after that you can start asking about what is then lost or gained for all us common folk just going about our daily lives. The difference between just being animalistically in the world in a languageless and selfless neurobiological sense vs being in the world in a linguistically-based and self-monitoring social agent sense vs being in the world in the third, rationalising and quantifying "techno-scientific" sense – well, this may itself feel like a well-integrated state, or you might be rather focused on its jarring transitions and disjointed demands.

So of course, there is something further to discuss about scientism and the kind of society it might seem to promote.

But the same applies to romanticism which wants to fix our world model at the level of the everyday idealism and even frank animism familiar in cultures dependent on foraging or agriculture as the everyday basis of their entropy dissipating.

And I don't think anyone really advocates dropping right down the epistemic scale of existence to becoming wordless creatures once more – just animals, and whatever that level of reality modelling is truly like from "the inside".

So yes, modernity might create Cartesian anxiety. But that arises from a dichotomising logic being allowed to make an ontic claim – mind and matter as two incommensurate substances, two general forms of causality – and failing to see that the ontic position is that the cosmos just happens to have these epistemising organisms evolving within it as a further expression of the Second Law.

We are modellers that exist by modelling. There are naturally progressive levels to this modelling. Words and then numbers have lifted humans to a certain rather vertiginous point. Numbers as the ultimate abstractions – variables in equations matched to squiggles on dials – take the basic epistemic duality of generalisation and particularisation to its most rarefied extreme. I don't really see what comes next, particularly once we get into the adventures of algebraic geometry and its ability to give an account of the world in terms of its fundamental symmetries, or the emerging maths of topological order that speaks to the breaking of those symmetries. -

Is the real world fair and just?Epistemic idealism is sufficient in my view. — Wayfarer

Then there is no ontic case to answer.

I'm not agreeing that 'the world would still exist in the absence of the observer' - what is, in the absence of any mind, is by definition unknowable and meaningless, neither existent nor non-existent. — Wayfarer

Even if we are trapped inside our models, we can aspire to better models. And biosemiosis would remind us that this is also how we can aspire to be better as the humans populating our self-created dramas.

You hate on science. But what was the Enlightenment and Humanism but the application of the same more objectified and reasoned take on the human condition?

Through social science, political science, ecological science, economic science, we can finally imagine actors of quite a different kind.

Of course economic science is the problem child here. But that is another story, :razz: -

Is the real world fair and just?Rather it is built up or constructed out of the synthesis of (1) external stimuli with (2) the brain's constructive faculties which weave it together into a meaningful whole (per Kant). — Wayfarer

Well that is just an epistemic issue and not an ontic issue. We can all agree that we are modellers of our world. Semiotics makes that point. What we experience is an Umwelt, a model of the world as it would be with us ourselves in it.

We don't just represent the outside world to our witnessing mind, and our mind might come with all sorts of preconceptions that distort our appreciation of what is out there to see. Instead, being mindful is to have precisely the kind of modelling relation that is imagining an "us" out there also in the world doing stuff. Imposing our agency and will on its physical flow.

The modelling relation which is pragmatically formed between the brain and its environment has to build in this ur-preconception that we exist as separate to the world we desire to then regulate. A belief in a participatory ontology is the basic epistemic trick. It is why we believe that we are minds outside a mindless world. That is why idealism has its grip on the popular imagination. It is how we must think to place ourselves in the cosmic story as conscious actors.

But then the job we give science is to deflate that built-in cognitive expectation. We want to find out exactly how our being arises within its being in some natural material way. Which is why biosemiosis matters as the sharpest general model of that modelling relation.

And applying biosemiotic principles to the wavefunction collapse or measurement issue sorts it out quite nicely. It doesn't turn quantum ontology classical. But going to the point you are making, it does explain exactly where we can draw the epistemic cut between the thermal decohering the Universe just does itself and the way we can manipulate that decoherence as semiotic organisms with a metabolism to feed and an environment to navigate. Or as technologists, how we can manipulate quantum decoherence to produce our modern world of cell phones and LED screens.

The presumption of the mind-independence of reality is an axiom of scientific method, intended to enable the greatest degree of objectivity and the elimination of subjective opinions and idiosyncrasies. — Wayfarer

As I say, that is the presumption that founds life and mind as processes in general. The modelling relation is based on the trick of putting ourselves outside what we wish to control, and that then gives us the main character energy not to just bobble about as the NPCs of the game of life. The model makes us feel as if we stand apart, and that is what then puts us into the world as a centre of agency.

How do you know when you turn your head fast that it is you spinning in the world rather than the world spinning around you? You subtract away the motor intention from the sensory outcome. And that neatly splits your world into a binary subjective~objective division.

The illusion of an ontic division – in the epistemic model – is so strong that this leads to the idealist vs realist debate that confounds philosophy. A constructed divide is treated as a real world divide – a world which is now "mindless" in its materiality and lack of purpose, lack of mindful order. The world with us not now in it because we exist ... somewhere else outside.

The necessary epistemic illusion of being a separated self is promoted to the status of an ontic fact of nature. And endless talking in circles follows.

Quantum physics offers us enough new information on how the world "really is" not to have to deal with all the mind~body woo as well. We have to maintain a clarity as we work our way through the metaphysics necessary to ground all the twists and turns of our inquiry into Nature as the thing in itself. Before we evolved to take advantage of its entropy flows with our entropy regulating mindsets.

Whenever we point to the universe 'before h.sapiens existed' we overlook the fact that while this is an empirical fact, it is also a scientific hypothesis, and in that sense a product of the mind. Only within ourselves, so far as we know, can that understanding exist. — Wayfarer

OK. So you are still arguing epistemology and not ontology when it comes to the participatory hypothesis. Will we get to the stronger idealist interpretation shortly?

Objects in the unobserved universe have no shape, color or individual appearance, because shape and appearance are created by minds. — Wayfarer

Well yes. If it is our biosemiotic modelling that constructs a world of objects for us – the world as it should be for an object-manipulating creature – then science must be right not to just take that human-centric view.

If objects turn out to be interactions in quantum fields – from the point of view of the scientist trying to model the world "as it really is" – then if that is what works as the model, that is the model which at least gets us that much nearer to the "reality" of whatever a cosmos is.

We are trapped in epistemology. Objectivity is wishful thinking. But subjectivity is such an elaborate social and neural construct that science of course would have to de-subjectify its models as much as possible. Even metaphysics has that aim. We can develop models that are able to revise their ontic commitments in a useful fashion – as the application of science as technology shows.

So what I'm arguing is that the fabric of the Universe has an inextricably subjective pole or aspect upon which judgements about the nature of reality are dependent. — Wayfarer

And now we slide from a generally agreed epistemic point towards the strong and unwarranted ontic claim?

Again you slip in "fabric" as the weasel word. Do you mean the fabric that is the general coherence of a model – our experiential Umwelt – or the fabric that is what constitutes the material of the actual world in which we place ourselves as the further thing of a locus of agency?

Likewise your leap to "an inextricably subjective pole or aspect". This implies that our subjectivity is necessary to the objective being of the cosmos. The ontic claim. Yet that then doesn't square with your apparent acceptance that the world would still exist if we hadn't been around as biological organisms to impose our Umwelt of scents, colours, sounds, shapes, feels, etc, on it. The deflationary epistemic view of this debate.

So you provide a lot of words to support what seems to be your contention. But it all turns out to be making the epistemological points I already agree with and not making a connection to an ontic-strength version of the contention that "consciousness caused its own universe to exist/the quantum measurement issue is the proof". -

Is the real world fair and just?There's no reason to think we need anything more than particles, in definite locations at any time, behaving randomly to explain quantum mechanics. — Apustimelogist

Perhaps not quantum mechanics, but I don’t see how this works for quantum field theory.

I am all for minimising the mysteries, but quantum properties like contextuality, entanglement, non-locality all speak to a holism that is missing from this kind of bottom-up construction view. -

Is the real world fair and just?are integral to the fabric of existence — Wayfarer

So these are your words. They imply no observers means no fabric, no world.

The interpretations generally want to argue some position on observers participating in the production of crisp counterfactual properties. But where is some fabric of relations or probability in doubt?

Perhaps the wave function must be collapsed. But where is the argument that it needs our participation to even exist?

Take away that need to collapse the wavefunction and thermal decoherence gives you everything we see in terms of a reality that is classical or collapsed just by its own self-observing interference. The wavefunction simply gets updated by constraints on its space of quantum probabilities.

Humans then only come into this no collapse story as creatures who can hold back decoherence until some chosen moment when they suddenly release it with a suitable probe.

It is a bit of a party trick. Keep things cold and coherent enough and then let them hit something sharply interacting. One minute, they were entangled and isolated, the next as thermally decoherent as the world in general.

So we can mechanically manipulate the “collapse of the wavefunction”, or rather mix some isolated prepared state with its wider world. But that participation doesn’t also have to collapse the entire wider world into concrete being. The self interaction of decoherence has been doing that quite happily ever since the Big Bang. -

Is the real world fair and just?There are plenty other than me that call that into question — Wayfarer

I’m sorry but which of these interpretations says that human minds are what cause the Universe to be?

That the Universe resists the simplicities of our attempts to frame it as classical is something rather less problematic. -

Is the real world fair and just?This is not woo-woo - it is also consonant with the realisation of cognitive science that the world as we experience it is a product of the constructive activities of the brain - as you yourself well know — Wayfarer

Nonsense. One is an exaggerated ontic claim, the other a modest epistemic fact of neurocognitive processes, easy to demonstrate.

You conflate them. But no point taking it further. -

Is the real world fair and just?Without repeating all the detail, the salient point is the emphasis on a kind of constructivist idealism - that what we perceive as the objective, mind-independent universe is inextricably intertwined with our looking at it - hence the title of the article. — Wayfarer

But there is a difference between there being an "observer problem" as a general epistemic issue and then making it the motivation of some strong ontic claim. You haven't managed to show that quantum weirdness is somehow an ontic issue and not just an epistemic issue. You just jumped right to the conclusion you wanted to reach.