Malcolm Lett

The Meta-management Theory of Consciousness uses the computational metaphor of cognition to provide an explanation for access consciousness, and by doing so explains some aspects of the phenomenology of consciousness. For example, it provides explanations for:

1) intentionality of consciousness - why consciousness "looks through" to first-order perceptions etc.

2) causality - that consciousness is "post-causal" - having no causal power over the event to which we are conscious, but having direct causal effect on subsequent events.

3) limited access - why we only have conscious experience associated with certain aspects of brain processing.

https://malcolmlett.medium.com/the-meta-management-theory-of-consciousness-17b1efdf4755

I know that I am going to get roasted by this wonderful and thorough community, but I wanted to share my theory both for your own enjoyment and so that I can learn from the discussions. Some of you might remember me from a long time ago. Those discussions were extremely useful, and have helped me entirely revise both my theory and how I describe it. I really hope that you'll enjoy this. Roast away.

I'll try to summarise here as best I can. The details are in the blog post - it's only a 40 minute read.

I'll explain it here in two parts. The first part is the computational argument, which depends on knowledge of predictive processing paradigms such as that of Predictive Coding or the Free-Energy Principle. I know that it's easy to get lost in the details, so I'll try to summarise here just the key takeaways.

The second part will focus on how I think my theory makes headway into the trickier philosophical debates. But bear in mind that I'm a little clumsy when it comes to the exact philosophical terminology.

Computational background:

- The theory starts with a computational discussion of a particular problem associated with cognition: namely deliberation. Here I am using cognition to refer generally to any kind of brain function, and deliberation to refer to the brain performing multiple cycles of processing over an arbitrary period of time before choosing to react to whatever stimuli triggered the deliberation. That is in contrast to reaction where the brain immediately produces a body response to the stimuli.

- I argue for why deliberation is evolutionarily necessary, and why it is associated with a considerable step up in architectural complexity. Specifically that it is "ungrounded" from environmental feedback and can become unstable. To resolve that it requires meta-cognitive processes to "keep it in check". I label the specific meta-cognitive process in question as meta-management.

- Meta-management is best described in contrast to 1st-order control. Under 1st-order control, a 1st-order process has the task of controlling the body to respond appropriately to the environment and to homeostatic needs. A meta-management process is a 2nd-order process tasked with the control of the 1st-order process, particularly from the point of view of the homeostatic needs of that 1st-order process. For example, the 1st-order deliberative process can become ungrounded as mentioned before, and it is the task of the 2nd-order process to monitor and correct for that. In the blog post I explain more concretely what I mean by all this.

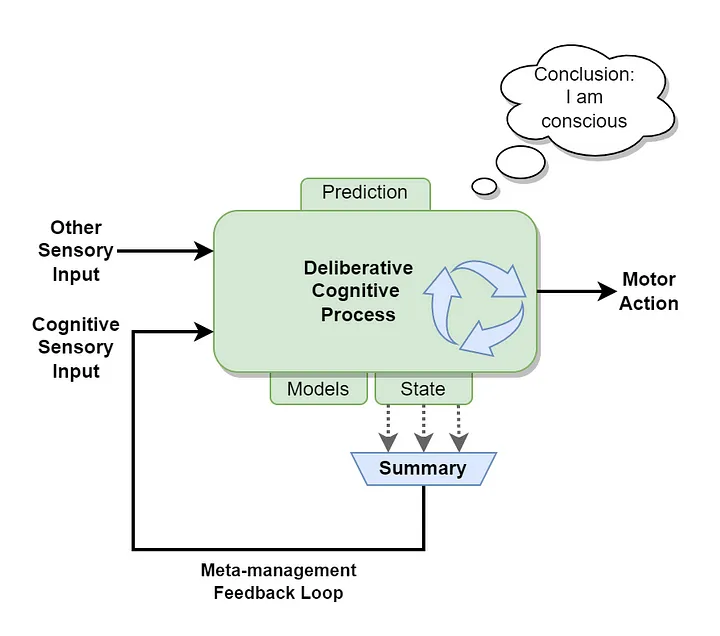

- I propose a functional structure whereby the 1st-order process can meta-manage itself without the need for an explicit separate 2nd-order system. I explain how this is possible via predictive processes (think Bayesian inference), in conjunction with a special kind of feedback loop - the meta-management feedback loop.

- The Meta-management Feedback Loop captures certain aspects of the current state of cognitive processing and makes them available as a cognitive sense, which is perceived, processed, modelled, predicted and responded to like any other exteroceptive or interoceptive sense. I provide a computational and evolutionary argument for why such a feedback loop captures only a minimal amount of information about cognitive processes.

- While it seems at first counter-intuitive that such a system could work, I argue how it is possible that a 1st-order process can use this feedback loop for effective meta-management of itself.

- Looking at just cognition alone, this provides a general framework for understanding deliberation, and by extension for humans' capacity for abstract thought (I explain this last point briefly in the blog post, and in more detail in another if anyone is interested to know more).

So that all leads to this functional structure which I argue is a plausible mechanism for how human brains perform deliberation and how they keep their deliberative processes "grounded" while doing so:

I have no strong empirical evidence that this is the case for humans, nor even that my suggested architecture can actually do what I claim. So it's a thought experiment at the moment. I plan to do simulation experiments this year to build up some empirical evidence.
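To make the loop described in the list above a little more tangible, here is a toy Python sketch. It is not the architecture from the blog post and not a simulation result: the class `FirstOrderProcess`, the "runaway" threshold of 10, and the 1.5 growth factor are all assumptions invented for illustration. It only shows the shape of the idea: a single process elaborates on its own output, a minimal summary of each cycle is fed back as a "cognitive sense", and the same process uses that sense on the next cycle to re-ground itself.

```python
from dataclasses import dataclass, field


@dataclass
class FirstOrderProcess:
    """Toy deliberative process that meta-manages itself (illustrative only)."""

    thought: float = 0.0  # current content of deliberation, reduced to one number
    cognitive_sense: dict = field(default_factory=dict)  # summary of the previous cycle

    def summarise(self, previous_thought: float) -> dict:
        """Feedback loop: capture a minimal summary of the cycle that just ended."""
        return {
            "drift": abs(self.thought - previous_thought),
            # Assumption: "ungrounded" is modelled as the thought exceeding 10.
            "runaway": abs(self.thought) > 10.0,
        }

    def step(self, stimulus: float) -> float:
        previous = self.thought
        # Meta-management: respond to the sense produced by the PREVIOUS cycle.
        if self.cognitive_sense.get("runaway", False):
            self.thought = stimulus  # re-ground on the environment
        else:
            self.thought = 1.5 * self.thought + stimulus  # ungrounded elaboration
        # Make a summary of this cycle available as a sense for the next cycle.
        self.cognitive_sense = self.summarise(previous)
        return self.thought


def deliberate(cycles: int, stimulus: float = 1.0) -> list[float]:
    """Run the toy process for a number of cycles and return its trajectory."""
    process = FirstOrderProcess()
    return [process.step(stimulus) for _ in range(cycles)]


if __name__ == "__main__":
    print(deliberate(12))
```

Without the feedback branch the value would grow without bound; with it, the trajectory keeps being pulled back to the stimulus. Note that the correction always arrives one cycle late, because the process can only react to a summary of what it has already done.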

Implications for understanding Consciousness:

Now I shall try to argue that the above explains various aspects of access consciousness, the phenomenology of consciousness (ie: specific identified characteristics), and that it might even explain the existence of phenomenological consciousness itself.

Conscious Contents. The Meta-management Theory of Consciousness (MMT from here on) suggests that the contents of consciousness are the result of whatever is fed back as a sensory input via the Meta-management Feedback Loop (MMFL). The predictive theory of perception suggests that cognitive processes do not take exteroceptive and interoceptive sensory signals literally and use them in their raw form throughout. Rather, the brain first uses those sensory signals to predict the structure of the world that caused them. This creates a "mental model", if you will, of what is "out there". The cognitive processes then operate against that model. I propose that the same goes for the sensory signal received via the MMFL. This creates a cognitive sense that is processed in the same predictive fashion as for other senses. To be clear, I mean that the "cognitive sense" provides a sensory signal about the immediately prior cognitive activity, in the same way that a "visual sense" provides a sensory signal about optical activity. This cognitive sense is then subject to the same predictive processes that create a "mental model" of the structure of whatever caused that cognitive sensory signal.

Here I am talking about access consciousness. Why do we have access consciousness at all? I propose that it is because of the MMFL and its associated cognitive sense. There's nothing new in my proposal that we have access consciousness because the brain has access to observe some of its own processes. What's novel is that I have grounded that idea in a concrete explanation of why it might have evolved, in the specific computational needs that it meets, and in the specific computational mechanisms that lead to that outcome.
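The claim that the cognitive sense is processed "in the same predictive fashion" as other senses can be illustrated with a very small worked example of Bayesian inference over candidate causes. This is only a sketch: the candidate causes, priors, and likelihood values below are made-up numbers, and real predictive-processing models are far richer than a single application of Bayes' rule. The point is just that one and the same inference routine can be applied to a visual signal and to a signal about prior cognitive activity.

```python
def posterior(prior: dict[str, float], likelihood: dict[str, float]) -> dict[str, float]:
    """Bayes' rule over a discrete set of candidate causes of a sensory signal."""
    unnormalised = {cause: prior[cause] * likelihood[cause] for cause in prior}
    total = sum(unnormalised.values())
    return {cause: value / total for cause, value in unnormalised.items()}


# Visual sense: which object caused this "round red patch" signal?
# (All numbers are illustrative assumptions.)
visual_belief = posterior(
    prior={"apple": 0.5, "tomato": 0.5},
    likelihood={"apple": 0.8, "tomato": 0.2},  # P(signal | cause)
)

# Cognitive sense: which cognitive activity caused this feedback signal?
# The same routine is reused; only the hypothesis space changes.
cognitive_belief = posterior(
    prior={"attending_to_apple": 0.3, "mind_wandering": 0.7},
    likelihood={"attending_to_apple": 0.9, "mind_wandering": 0.1},
)

if __name__ == "__main__":
    print(visual_belief)
    print(cognitive_belief)
```

In both cases what later processing works with is the inferred cause (the "mental model"), not the raw signal.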

Intentionality. MMT provides the background for Higher-Order Thought (HOT) theory. It describes that the cognitive sense is a HOT-sense - a specific second-order representation of the first-order process. I won't go on at length about this but I'll draw out some specific nuances (again, there's more details in the blog post):

- The intentionality of access consciousness is the behavior of the first-order cognitive processing system. It exists to support meta-management, which needs to monitor that behavior. This means that the intentionality is not of our first-order perceptions, per se, nor even of our thought, per se. Rather, it is the static and dynamic state of the first-order process while it operates against those first-order perceptions/thoughts/etc. In a very practical way, that static and dynamic state will inevitably capture aspects of first-order perceptions and thoughts, but it will always be by proxy. The cognitive sense about the immediately prior cognitive activity represents the fact that the brain was thinking about an apple that it sees, rather than directly representing the apple seen. Or perhaps the cognitive sense represents that the brain was at that moment attending to the visual perception of the apple, and thus by proxy the cognitive sense captures many aspects of that visual perception, but not the actual visual perception itself.

- In contrast to some debates about HOTs versus HOPs (higher-order perceptions), MMT suggests that the cognitive sense is a higher-order representation of cognitive activity in general. Thus it is neither accurate to refer to it as a higher-order thought nor as a higher-order perception. Better terms could be Higher-order State or Higher-order Behavior.

Causality. One of my favorite learnings from this exercise is that consciousness is what I call post-causal. Events unfold as follows: 1) the brain does something, 2) the MMFL captures that something and makes a summary of it available as a cognitive sense, 3) the brain gets to consider the cognitive sense that is now being received. Anything that the brain considers "now" in relation to the cognitive sense is about something that happened earlier. Thus our conscious experience of cognitive activity is always one step behind. Consciousness has no causal power over its intentional object. However, conscious access very much has causal power over everything that follows.
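The three-step ordering above can be written out as a tiny timeline. This is only a bookkeeping sketch with hypothetical names (`run`, the activity strings); it makes no claim about timescales in real brains. It simply shows that the sense available during any cycle describes the previous cycle, never the current one.

```python
from typing import Optional


def run(activities: list[str]) -> list[tuple[str, Optional[str]]]:
    """Pair each cycle's activity with the cognitive sense available during it."""
    cognitive_sense: Optional[str] = None  # nothing has been fed back before cycle 1
    timeline = []
    for activity in activities:
        # Step 3: during this cycle the brain can only consider the sense
        # that was produced by the previous cycle.
        timeline.append((activity, cognitive_sense))
        # Steps 1 and 2: the current activity is summarised for the NEXT cycle.
        cognitive_sense = f"was {activity}"
    return timeline


if __name__ == "__main__":
    for activity, sense in run(["seeing apple", "reaching", "eating"]):
        print(f"doing: {activity!r:16} aware of: {sense!r}")
```

The sense about "seeing apple" cannot alter the seeing itself, but it is available in time to shape "reaching" and everything after it.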

Phenomenological Consciousness. I doubt that I have the philosophical savvy to make any real headway in arguing that MMT explains the existence of phenomenological consciousness. But I do believe that it does and I'll try to state the beginnings of an argument.

- 1) All exteroceptive and interoceptive senses undergo transformation from raw signal to a prediction of the world structure that caused them. In some circles this is known as the inferred latent state. I believe that the brain doesn't just infer the latent state, but also associates other meaning such as an inference of how that particular latent state relates to the needs of the individual. So, for example, the perception of a lion without any protection in between is associated with knowledge of danger. Part of that associated meaning is identification of self vs other (I know that the lion is other, and that my body is part of my self, and that it is my body that is in danger). This depends on a mental model of what a "self" is.

- 2) In the same way, our cognitive sense can be further processed and have meaning associated with it. In particular, the relationship between the cognitive sense and the "self" can be identified. Thus any further cognitive processing of this cognitive sense has immediate access to the full enriched concept of "self" and its relationship to whatever the cognitive processes were doing immediately beforehand. This would seem to me to be the underlying mechanism behind what Nagel called "what it feels like" to be conscious.

Malcolm Lett

Also, a thank you to @apokrisis who introduced me to semiotics in a long ago discussion. While in the end I don't need to make any reference to semiotics to explain the basics of MMT (and every attempt I've ever made has just made things too verbose), the Peircean view of semiotics helped me to finally see how everything fitted together.

wonderer1

You might find it interesting to consider how the view expressed in the following article complements and/or contrasts with your own.

https://aeon.co/essays/how-blindsight-answers-the-hard-problem-of-consciousness

Benj96

Your diagram, interestingly, looks very similar to one I created myself many years ago. I approached it from a different angle, but it has all the same major components with the exception of a "Time front".

I tried to tackle the question of where, in an illustration of the "conscious sphere of awareness", the "present", "past" and "future" sit: what we remember, what we currently feel and what we anticipate (or as you say "predict"), when realistically all active conscious thought occurs only in the present.

My diagram was something of a teardrop shape, but on its side. Like a hot air balloon, but horizontal. Memorised information lags behind along the chronological timeline and dissipates (is forgotten), and is of course accessed only from the present moment (near the base of the teardrop).

Forgive me if I'm not explaining it well.

Malcolm Lett

Thanks. Something I've suspected for a while is that we live in a time when there is enough knowledge about the brain floating around that solutions to the problems of understanding consciousness are likely to appear from multiple sources simultaneously. In the same way that historically we've had a few people invent the same ideas in parallel without knowing about each other. I think Leibniz' and Newton's version of calculus is an example of what I'm getting at. So I'm not surprised to see Nicholas Humphrey saying something very similar to my own theory (for context, I've been working on my theory for about 10 years, so it's not that I've ripped off Humphrey). Humphrey also mentions an article by Anil Seth from 2010, which illustrates some very similar and closely related ideas.

MMT depends on pretty much all the same mechanisms as both Humphrey's and Seth's articles. Modelling. Predictive processing. Feedback loops. The development of a representational model related to the self and cognitive function - for the purpose of supporting homeostatic processes or deliberation. Seth's article pins the functional purpose of consciousness to homeostasis. He explains the primary mechanism via self modelling, much like in my theory.

Humphrey proposes an evolutionary sequence for brains to go from simple "blindsight" stimulus-response to modelling of self. That's also very much consistent with MMT. He pins the purpose of consciousness on the social argument - that we can't understand others without understanding ourselves, and thus we need the self-monitoring feedback loop. Sure. That's probably an important feature of consciousness, though I'm not convinced that it would have been the key evolutionary trigger. He proposes that the primary mechanism of consciousness is a dynamical system with a particular attractor state. Well, that's nice. It's hard to argue with such a generic statement, but it's not particularly useful to us.

So, what benefit if any does MMT add over those?

Firstly I should say that this is not a question of mutual exclusion. Each of these theories tells part of the story, to the extent that any of them happen to be true. So I would happily consider MMT as a peer in that mix.

Secondly, I also offer an evolutionary narrative for conscious function. My particular evolutionary narrative has the benefit that it is simultaneously simpler and more concrete than the others. To the point that it can be directly applied in experiments simulating different kinds of processes (I have written about that in another blog post) and I plan to do some of those experiments this year. At the end of the day I suspect there are several different evolutionary narratives that all play out together and interact, but egotistically my guess is that the narrative I've given is the most primal.

Thirdly, my theory of how self-models play out in the construction of conscious contents is very similar to that of Seth's article. Incidentally, Attention Schema Theory also proposes such models for similar purposes. I think the key benefit of MMT is again its concreteness compared to those others. I propose a very specific mechanism for how cognitive state is captured, modelled, and made available for further processing. And, like for my evolutionary narrative, this explanation is concrete enough that it can be easily simulated in computational models. Something I also hope to do.

Lastly, given the above, I think MMT is also more concrete in its explanation of the phenomenology of consciousness. Certainly, I provide a clearer explanation of why we only have conscious access to certain brain activity; and I'm able to make a clear prediction about the causal power of consciousness.

The one area that I'm not happy with yet is that I believe MMT is capable of significantly closing the explanatory gap, even if it can't close it entirely, but I haven't yet found the right way of expressing that.

Overall, science is a slow progression of small steps, most of which hopefully lead us in the right direction. I would suggest that MMT is a small step on from those other theories, and I believe it is in the right direction.

wonderer1

Thanks. Something I've suspected for a while is that we live in a time when there is enough knowledge about the brain floating around that solutions to the problems of understanding consciousness are likely to appear from multiple sources simultaneously. In the same way that historically we've had a few people invent the same ideas in parallel without knowing about each other. I think Leibniz' and Newton's version of calculus is an example of what I'm getting at. — Malcolm Lett

:100: :up:

A lot of areas of thinking are coming together, and I think you present a valuable sketch for considering the subject.

for context, I've been working on my theory for about 10 years, so it's not that I've ripped off Humphrey — Malcolm Lett

I didn't at all think that you had ripped off Humphrey. I just thought you would appreciate the parallels in what he had to say there.

For context on my part, I've been thinking about the subject from a connectionist perspective, as an electrical engineer, for 37 years. It started with an epiphany I had after studying a bit about information processing in artificial neural networks. I recognized that a low level difference in neural interconnection within my brain might well explain various idiosyncrasies about me. I researched learning disabilities and researched the neuropsych available at the time, but it was in a bad state by comparison with today. It wasn't until about 12 years ago that my wife presented a pretty reasonable case for me having what at the time was called Asperger's syndrome. And it wasn't until about a year ago that I happened upon empirical evidence that the sort of low level variation in neural interconnection that I had expected to find, explaining idiosyncrasies I have, is associated with autism.

I was foreshadowing Kahneman's two systems view (discussed in Thinking, Fast and Slow) years before the book came out. I had come to a similar view to Kahneman's except I came at it from a much more neuropsychology based direction, compared to the more psychological direction Kahneman was coming from.

Anyway, I'm much more inclined to a connectionist view than a computationalist view. I was glad to see that you noted the blurriness involved in the issues you are trying to sketch out, but as I said, it seems like a good model for consideration.

RogueAI

What does your theory have to say about computer consciousness? Are conscious computers possible? Are there any conscious computers right now? How would you test for computer consciousness?

Malcolm Lett

Thanks. I really appreciate the kind words. The biggest problem I've had this whole time is getting anyone to bother to read my ideas enough to actually give any real feedback.

That's a cool story of your own too. It goes to show just how powerful introspective analysis can be, when augmented with the right caveats and some basic third-person knowledge of the architecture that you're working with.

What does your theory have to say about computer consciousness? Are conscious computers possible? Are there any conscious computers right now? How would you test for computer consciousness? — RogueAI

Great question. MMT is effectively a functionalist theory (though some recent reading has taught me that "functionalism" can have some pretty nasty connotations depending on its definition, so let me be clear that I'm not defining what kind of functionalism I'm talking about). In that sense, if MMT is correct, then consciousness is multi-realizable. More specifically, MMT says that any system with the described structure (feedback loop, cognitive sense, modelling, etc) would create conscious contents and thus (hand-wavy step) would also experience phenomenal consciousness.

A structure that exactly mimicked a human brain (to some appropriate level) would have a consciousness exactly like humans, regardless of its substrate. So a biological brain, a silicon brain, or a computer simulation of a biological or silicon brain, would all experience consciousness.

Likewise, any earthling animal with the basic structure would experience consciousness. Not all animals will have that structure however. As a wild guess, insects probably don't. All mammals probably do. However, their degree of conscious experience and the characteristics of it would differ from our own - ie: not only will it "feel" different to be whatever animal, it will "feel" less strongly or with less clarity. The reason is that consciousness depends on the computational processes, and those processes vary from organism to organism. A quick google says that the human brain has 1000x more neurons than a mouse brain. So the human brain has way more capacity for modelling, representing, inferring, about anything. I've heard that the senses of newborn humans are quite underdeveloped, and that they don't sense things as clearly as we do. I imagine this is something like seeing everything blurry, but that all our senses are blurry, that one sense blurs into another, and that even our self awareness is equally blurry. I imagine that it's something like that for other animals' conscious experience.

I should also mention a rebuttal that the Integrated Information Theory offers to the suggestion that computers could be conscious. Now I suspect that many here strongly dislike IIT, but it still has an interesting counter-argument. IIT makes a distinction between "innate" state and state that can be manipulated by an external force. I'm not sure about the prior version but its latest v4 makes this pretty clear. Unfortunately I'm not able to elaborate on the meaning of that statement, and I'm probably misrepresenting it too, because ...well... IIT's hard and I don't understand it any better than anyone else. In any case, IIT says that its phi measure of consciousness depends entirely on innate state, excluding any of the externally manipulatable state. In a virtual simulation of a brain, every aspect of brain state is manipulated by the simulation engine. Thus there is no innate state. And no consciousness.

Now, I don't understand their meaning of innate state very well, so I can't attack from there. But I take issue with the entire metaphor. A computer simulation of a thing is a complicated process that manipulates state held on various "state stores", shall we say. A brain is a complicated process involving atomic and sub-atomic electromagnetic interactions that work together to elicit more macro-level interactions and structures, in the form of neurons and synapses and their bioelectrical signaling. Those neurons + synapses are also a form of "state store" in terms of learning through synaptic plasticity etc. Now, the neurotransmitters that can be thrown across the synaptic cleft are independent molecules. Those independent molecules act as an external force against the "state store" of those synaptic strengths. In short, I think it can be argued (better than what I'm doing here) that atomic and subatomic electromagnetic interactions also just "simulate" the biochemical structures of neurons which also just "simulate" minds. Many IIT proponents are panpsychists -- the latest v4 version of IIT states that as one of its fundamental tenets (it just doesn't use that term) -- so their answer is something that depends on that tenet. But now we're on the grounds of belief, and I don't hold to that particular belief.

Thus, IIT's distinction between innate and not-innate state, whatever it is, doesn't hold up, and it's perfectly valid to claim that a computer simulation of a brain would be conscious.

wonderer1

The biggest problem I've had this whole time is getting anyone to bother to read my ideas enough to actually give any real feedback. — Malcolm Lett

I recommend checking out @Pierre-Normand's thread on Claude 3 Opus. I haven't bit the bullet to pay for it to have access to advanced features that Pierre has demonstrated, but I've been impressed with the results of Pierre providing meta-management for Claude.

I have asked Claude 3 (Sonnet) for criticism of a half baked theory of mine and I thought the results were pretty good.

Anyway, I can empathize with the difficulty in finding people interested in thinking seriously about such things. I think AI is inevitably going to result in much wider consideration of these sorts of topics than has been the case. So you've got that going for you.

ENOAH

Your hypothesis is intriguing, and exceptionally presented.

It seems like the entire "process" described, every level and aspect is the Organic functionings of the Organic brain? All, therefore, autonomously? Is there ever a point in the process--in deliberation, at the end, at decision, intention, or otherwise--where anything resembling a "being" other than the Organic, steps in?

Secondly, is the concept of self a mental model? You differentiate the self from the body, identify it as the Dominant whole of which the body is a part, was that just for the sake of the text, or how do you think of the self, if not just a mental model?

my body is part of my self, — Malcolm Lett

Malcolm Lett

I recommend checking out Pierre-Normand's thread on Claude 3 Opus. I haven't bit the bullet to pay for it to have access to advanced features that Pierre has demonstrated, but I've been impressed with the results of Pierre providing meta-management for Claude. — wonderer1

I've read some of that discussion but not all of it. I haven't seen any examples of meta-management in there. Can you link to a specific entry where Pierre-Normand provides meta-management capabilities?

Malcolm Lett

It seems like the entire "process" described, every level and aspect is the Organic functionings of the Organic brain? All, therefore, autonomously? Is there ever a point in the process--in deliberation, at the end, at decision, intention, or otherwise--where anything resembling a "being" other than the Organic, steps in? — ENOAH

I assume that you are referring to the difference between the known physical laws vs something additional, like panpsychism or the cartesian dualist idea of a mind/soul existing metaphysically. My personal belief is that no such extra-physical being is required. In some respect, my approach for developing MMT has been an exercise in the "design stance" in order to prove that existing physical laws are sufficient to explain consciousness. Now I can't say that MMT achieves that aim, and the description in that blog post doesn't really talk about that much either. If you are interested, I made a first attempt to cover that in Part VI of my much longer writeup. I don't think it's complete, but it's a start.

is the concept of self a mental model? — ENOAH

Absolutely. I think that's a key component of how a mere feedback loop could result in anything more than just more computation. Starting from the mechanistic end of things, for the brain to do anything appropriate with the different sensory inputs that it receives, it needs to identify where they come from. The predictive perception approach is to model the causal structure that creates those sensory inputs. For senses that inform us about the outside world, we thus model the outside world. For senses that inform us about ourselves, we thus model ourselves. The distinction between the two is once again a causal one - whether the individual discovers that they have a strong causal power to influence the state of the thing being modeled (here I use "causal" in the sense that the individual thinks they're doing the causing, not the ontological sense).

Alternatively, looking from the perspective of our experience, it's clear that we have a model of self and that we rely upon that. This is seen in the contrast between our normal state where all of our body movement "feels" like it's governed by us, versus that occasional weird state where suddenly we don't feel like one of our limbs is part of our body or that it moved without volition. It's even more pronounced in the phantom limb phenomenon. These are examples of where the body self models fail to correlate exactly with the actual physical body. There's a growing neuroscientific theory that predictive processes are key to this. In short, the brain uses the efference copy to predict the outcome of an action on the body. If the outcome is exactly as expected, then everything is fine. Otherwise it leads to a state of surprise - and sometimes panic. I'll link a couple of papers at the bottom.

Hallucination of voices in the head could be an example of a "mind-self model" distortion according to exactly the same process. We hear voices in our head all the time, as our inner monologue. We don't get disturbed by that because we think we produced that inner monologue - there's a close correlation between our intent (to generate inner monologue) and the effect (the perception of an inner monologue). If some computational or modelling distortion occurs such that the two don't correlate anymore, then we think that we're hearing voices. So there's a clear "mind-model", and this model is related somehow to our "self model". I hold that this mind-model is what produces the "feels" of phenomenal consciousness.
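The comparison between intended and perceived outcomes described in the last two paragraphs can be sketched as a simple comparator. This is a deliberately crude illustration, not a model taken from the papers linked below: the linear `forward_model`, its `gain`, and the `tolerance` threshold are all assumptions chosen to keep the example short.

```python
def forward_model(command: float, gain: float = 1.0) -> float:
    """Predict the sensory consequence of a command from its efference copy.

    Assumption: a linear mapping stands in for whatever the brain has learned.
    """
    return gain * command


def attribute_agency(command: float, observed: float, tolerance: float = 0.1) -> tuple[float, bool]:
    """Return (prediction error, whether the outcome is attributed to self)."""
    predicted = forward_model(command)
    error = abs(observed - predicted)
    # Small error: "I did that". Large error: surprise, attributed to something else.
    return error, error <= tolerance


if __name__ == "__main__":
    # Intended movement matches the perceived movement.
    print(attribute_agency(command=1.0, observed=1.02))
    # Perceived movement with no matching intent (e.g. a limb moving "by itself").
    print(attribute_agency(command=0.0, observed=0.4))
```

The same comparison applies to inner speech if `command` is read as the intent to produce a monologue and `observed` as the monologue perceived: when a distortion makes the two diverge, the perceived voice is no longer attributed to self.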

I don't make an attempt to draw any detailed distinctions between the different models that the brain develops; only that it seems that it does and that those models are sufficient to explain our perception of ourselves. Out of interest, the article by Anil Seth that I mentioned earlier lists a few different kinds of "self" models: bodily self, perspectival self, volitional self, narrative self, social self.

Klaver M and Dijkerman HC (2016). Bodily Experience in Schizophrenia: Factors Underlying a Disturbed Sense of Body Ownership. Frontiers in Human Neuroscience. 10 (305). https://doi.org/10.3389/fnhum.2016.00305

Synofzik, M., Thier, P., Leube, D. T., Schlotterbeck, P., & Lindner, A. (2010). Misattributions of agency in schizophrenia are based on imprecise predictions about the sensory consequences of one's actions. Brain : a journal of neurology, 133(1), 262–271. https://doi.org/10.1093/brain/awp291 -

ENOAH

1k

referring to the difference between the known physical laws vs something additional, like panpsychism or the cartesian dualist idea of a mind/soul existing metaphysically. My personal belief is that no such extra-physical being is required. — Malcolm Lett

Understood. And FWIW, I currently agree.

can't say that MMT achieves that aim — Malcolm Lett

It's reasonable to me. My concerns about it are directed at myself, and whether I understand the details sufficiently. I read both your first post and the link, and should reread etc. But from my understanding it's persuasive. I believe there are reasonable, plus probably cultural, psychological, bases for "wanting" there to be more than physical, but still I find it strange that we [still?] do. Even how you intuitively knew you'd better clarify "in your opinion no need for extra physical."

For senses that inform us about the outside world, we thus model the outside world. For senses that inform us about ourselves, we thus model ourselves — Malcolm Lett

Very much with you

:up:

here I use "causal" in the sense that the individual thinks they're doing the causing, not the ontological sense — Malcolm Lett

And so you didn't mean the "self" was this severable thing, "part" of the Body, other than the model. No need to reply unless to clarify.

Thank you -

Malcolm Lett

76

I believe there are reasonable, plus probably cultural, psychological, bases for "wanting" there to be more than physical — ENOAH

Yes, I hope someone's done a thorough review of that from a psychological point of view, because it would be a very interesting read. Does anyone have any good links?

Off the top of my head, I can think of a small selection of reasons why people might "want" there to be more:

* Fear of losing free-will. There's a belief that consciousness, free-will, and non-determinism are intertwined and that a mechanistic theory of consciousness makes conscious processing deterministic and thus that free-will is eliminated. This unsurprisingly makes people uncomfortable. I'm actually comfortable with the idea that free-will is limited to the extent of the more or less unpredictable complexity of brain processes. So that makes it easier for me.

* Meaning of existence. If everything in life, including our first-person subjective experience and the very essence of our "self", can be explained in terms of basic mechanistic processes - the same processes that also underlie the existence of inanimate objects like rocks - then what's the point of it all? This is a deeply confronting thought. I'm sure there are loads of discussions on this forum on the topic. On this one I tend to take the ostrich position - head in sand; don't want to think about it too much.

As an aside, the question of why people might rationally conclude that consciousness depends on more than physical (beyond just "wanting" that outcome) is the topic of the so-called "Meta-problem of Consciousness". Sadly I've just discovered that I don't have any good links on the topic.

The "litmus test" that I refer to in the blog post is a reference to that. Assuming that consciousness could be explained computationally, what kind of structure would produce the outcome of that structure inferring what we do about the nature of consciousness? That has been my biggest driving force in deciding how to approach the problem. -

ENOAH

1k

the question of why people might rationally conclude that consciousness depends on more than physical (beyond just "wanting" that outcome) is the topic of the so-called "Meta-problem of Consciousness" — Malcolm Lett

It seems no more necessary than the "first level", i.e. the hard problem itself. It's a fundamental misunderstanding or misguided approach to Consciousness to begin with, viewing it as the primary of human existence. Probably rooted in those quasi psychological reasons you suggested. Even in broader philosophy the objects of the fears of loss are imagined 1) from a misunderstanding of the workings of the human mind, and 2) also, as "defense mechanisms" to shield us from the primary fear of loss, the loss of self.

But then that begs the meta meta question, why does consciousness cling to the self and all of those hypotheses that follow? Answering it might lead to another meta, and so on.

I think that strong identification with self, a mental model, is built (evolved) into the structure/function, because it promoted the efficient and prosperous system that said consciousness is today, and still evolving.

I might diverge from you (I cannot tell), in "characterizing" or "imagining for the purpose of discussion" that evolution as taking place exclusively in/by the brain, notwithstanding our mutual rejection of dualism. But that's for another discussion. -

180 Proof

16.5k

I'm still 'processing' your MMT and wonder what you make of Thomas Metzinger's self-model of subjectivity (SMS) which, if you're unfamiliar with it, is summarized in the article (linked below) with an extensive review of his The Ego Tunnel.

https://naturalism.org/resources/book-reviews/consciousness-revolutions

Also, a short lecture by Metzinger summarizing the central idea of 'self-modeling' ...

Thoughts?

@bert1 @RogueAI @Jack Cummins @javi2541997 @Wayfarer -

wonderer1

2.4k

I've read some of that discussion but not all of it. I haven't seen any examples of meta-management in there. Can you link to a specific entry where Pierre-Normand provides meta-management capabilities? — Malcolm Lett

I only meant meta-management in a metaphorical sense, where Pierre is providing meta-management to Claude 3 in an external sense, via feedback of previous discussions. -

bert1

2.2k

I think Metzinger's views are very plausible. Indeed his views on the self as a transparent ego tunnel at once enabling and limiting our exposure to reality and creating a world model is no doubt basically true. But as the article mentions, it's unclear how this resolves the hard problem. There is offered a reason why (evolutionarily speaking) phenomenality emerged but not a how. The self can be functionally specified, but not consciousness. But if Metzinger were to permit consciousness as an extra natural property, and not a set of functions, I would likely find little to disagree with him on. -

Wayfarer

26.2k

Selves are a very interesting and vivid and robust element of conscious experience in some animals. This is a conscious experience of selfhood, something philosophers call a phenomenal self, and is entirely determined by local processes in the brain at every instant. Ultimately, it’s a physical process. — Thomas Metzinger -

RogueAI

3.5k

So a biological brain, a silicon brain, or a computer simulation of a biological or silicon brain, would all experience consciousness. — Malcolm Lett

This thread doesn't seem to be going anywhere, so I'll post the usual stuff I post when someone claims this.

If a simulation is conscious, then that means a collection of electronic switches is conscious. That is to say that if you take enough switches, run an electric current through them, and turn them on and off in a certain way, the pain of stubbing a toe will emerge.

Isn't this already, prima facie, absurd? -

Gnomon

4.4k

The Meta-management Theory of Consciousness uses the computational metaphor of cognition to provide an explanation for access consciousness, and by doing so explains some aspects of the phenomenology of consciousness. For example, it provides explanations for:

1) intentionality of consciousness - why consciousness "looks through" to first-order perceptions etc.

2) causality - that consciousness is "post-causal" - having no causal power over the event to which we are conscious, but having direct causal effect on subsequent events.

3) limited access - why we only have conscious experience associated with certain aspects of brain processing. — Malcolm Lett

I'm not competent to critique your theory, much less to "roast" it. So, I'll just mention a few other attempts at computer analogies to human sentience.

The April issue of Scientific American magazine has an article by George Musser entitled A Truly Intelligent Machine. He notes that "researchers are modeling AI on human brains". And one of their tools is modularity : mimicking the brain's organization into "expert" modules, such as language and spatio-visual, which normally function independently, but sometimes merge their outputs into the general flow of cognition. He says, "one provocative hypothesis is that consciousness is the common ground". This is a reference to Global Workspace Theory (GWT) in which specialty modules, e.g. math & language, work together to produce the meta-effect that we experience as Consciousness. Although he doesn't use the fraught term, this sounds like Holism or Systems Theory.

Musser asks, in reference to GWT computers, "could they inadvertently create sentient beings with feelings and motivations?" Then, he quotes the GWT inventor, "conscious computing is a hypothesis without a shred of evidence". In GWT, the function of Meta-management might be to integrate various channels of information into a singular perspective. When those internal sub-streams of cognition are focused on solving an external problem, we call it "Intention". And when they motivate the body to act with purpose to make real-world changes, we may call it "Causality". Some robots, with command-line Intentions and grasping hands, seem to have the autonomous power to cause changes in the world. But the ultimate goal of their actions can be traced back to a human programmer.

In 1985, computer theorist Marvin Minsky wrote The Society of Mind, postulating a collection of "components that are themselves mindless" that might work together to produce a Meta-manager (my term) that would function like human Consciousness. In his theory, their modular inputs & outputs would merge into a Stream of Consciousness (my term). Yet one book review noted that : "You have to understand that, for Minsky, explaining intelligence is meaningless if you cannot show a line of cookie crumbs leading from the 'intelligent' behavior to a set of un-intelligent processes that created it. Clearly, if you cannot do that, you have made a circular argument." {my italics}

In computer engineer/philosopher Bernardo Kastrup's 2020 book, Science Ideated, he distinguishes between "consciousness and meta-consciousness". Presumably, basic sentience is what we typically label as "sub-consciousness". He then notes that meta-C is a re-representation of subconscious elements that are directed by Awareness toward a particular question or problem. He also discusses the counterintuitive phenomenon of "blindsight", in which patients behave as-if they see something, but report that they were not consciously aware of the object. This "limited access" may indicate that a> subconscious Cognition and b> conscious Awareness are instances of a> isolated functions of particular neural modules, and b> integrated functions of the Mind/Brain system as a whole. :nerd: -

180 Proof

16.5k

:roll:

:up: :up:

the counterintuitive phenomenon of "blindsight", in which patients behave as-if they see something, but report that they were not consciously aware of the object — Gnomon

Maybe you missed the link posted by @wonderer1 ...

https://aeon.co/essays/how-blindsight-answers-the-hard-problem-of-consciousness -

RogueAI

3.5k

If someone believes a simulation of a working brain is conscious, would this be a fairly accurate description of what the person believes: "to say that a simulation of a working brain is conscious is to say a collection of electronic switches is conscious. That if you take enough switches, run an electric current through them, and turn them on and off in a certain way, the pain of stubbing a toe will emerge."

ChatGPT

Yes, that description captures the essence of the belief that a simulation of a working brain could be conscious. It implies that consciousness arises from the complex interactions of physical components, such as electronic switches in the case of a simulation, rather than from some non-physical or supernatural source. This perspective aligns with the idea that consciousness is an emergent phenomenon resulting from the arrangement and activity of the underlying components, similar to how the experience of pain emerges from the activity of neurons in a biological brain. -

Gnomon

4.4k

the counterintuitive phenomenon of "blindsight", in which patients behave as-if they see something, but report that they were not consciously aware of the object — Gnomon

Maybe you missed the link posted by "wonderer1" ... — 180 Proof

Thanks for the link. https://aeon.co/essays/how-blindsight-answers-the-hard-problem-of-consciousness

The article is interesting, and it may offer some insight as to why the Consciousness problem is "hard". The notes below are for my personal record, and may interpret the article's implications differently from yours.

The article refers to Blindsight as a "dissociation" (disconnection) between physical Perception (biological processing of energetic inputs) and metaphysical Sensation*1 (conception ; awareness) {my italics added}. And that seems to be where easy physics (empirical evidence) hands-off the baton of significance (semiotics) to hard meta-physics (non-physical ; mental ; rational ; ideal). Apparently the neural sensory networks are still working, but the transition from physical Properties to metaphysical Qualia doesn't happen*2.

Of course, my interpretation, in terms of metaphysics, may not agree with your understanding of the "phenomenon" which lacks the noumenon (perceived, but not conceived). The author suddenly realized that "Perhaps the real puzzle is not so much the absence of sensation in blindsight as its presence in normal sight?" That's the "Hard Problem" of Consciousness.

The article describes a primitive visual system that evolved prior to warm-blooded mammals, in which the cortex may represent a social context for the incoming sensations. Presumably, social animals --- perhaps including some warm-blooded dinosaurs, and their feathered descendants*4 --- are self-conscious in addition to basic sub-conscious. The author says "I call these expressive responses that evaluate the input ‘sentition’."*5 The evaluation must be in terms of Personal Relevance. Non-social animals may not need to view themselves as "persons" to discriminate between Self and Society.

The author refers to Integrated Information Theory (IIT), which seems to assume that some high-level functions of brain processing supervene --- as a holistic synergy --- on the chemical & electrical activities of the neural net*6. As I interpret that insight, Perception is physical*7, but Conception is metaphysical*8. i.e. Holistic. System functions are different from Component functions. Some Bits of Neural data are useless & valueless (irrelevant) until organized into a holistic system of Self + Other. It puts the data into a comprehensive, more inclusive, context.

Like a cell-phone Selfie, the broad-scope perspective puts Me into the picture. Neuron sensations (data) are impersonal ; Mind feelings (qualia) are personal. Evolutionary survival is enhanced by the ability to see the Self in a broader milieu, as a member of a social group : a community*9. Feeling is a personally relevant evaluation of incoming sensations. This ability to re-present physical as personal is the root of consciousness. :smile:

Excerpts from An evolutionary approach to consciousness can resolve the ‘hard problem’ – with radical implications for animal sentience, by Nicholas Humphrey

*1. "Sensation, let’s be clear, has a different function from perception. Both are forms of mental representation : ideas generated by the brain. But they represent – they are about – very different kinds of things. . . . It’s as if, in having sensations, we’re both registering the objective fact of stimulation and expressing our personal bodily opinion about it."

Note --- In blindsight, the objective data is being processed, but the subjective meaning is missing. Terrence Deacon defines "aboutness" as a reference to something missing, "incompleteness", a lack that needs to be filled.

*2. "The answer is for the responses to become internalised or ‘privatised’. . . . . In this way, sentition evolves to be a virtual form of bodily expression – yet still an activity that can be read to provide a mental representation of the stimulation that elicits it."

Note --- Virtual = not real ; ideal. Representation = copy, not original

*3. "In attempting to answer these questions, we’re up against the so-called ‘hard problem of consciousness’: how a physical brain could underwrite the extra-physical properties of phenomenal experience."

Note --- What he calls "extra physical" I'm calling Meta-physical, in the sense that Ideas are not Real.

*4. My daddy used to say that "chickens wake-up in a new world every day". Maybe their primitive neural systems don't register meaning in the same way as hound dogs.

*5. "To discover ‘what’s happening to me’, the animal has only to monitor ‘what I’m doing about it’. And it can do this by the simple trick of creating a copy of the command signals for the responses – an ‘efference copy’ that can be read in reverse to recreate the meaning of the stimulation."

Note --- Efference copy is a feedback loop, that adds Me to data : supervenience.

*6. "it {consciousness} involves the brain generating something like an internal text, that it interprets as being about phenomenal properties."

Note --- Terrence Deacon " . . . variously defines Reference as "aboutness" or "re-presentation," the semiotic or semantic relation between a sign-vehicle and its object." https://www.informationphilosopher.com/solutions/scientists/deacon/

*7. "Sensation, let’s be clear, has a different function from perception. Both are forms of mental representation : ideas generated by the brain. But they represent – they are about – very different kinds of things. Perception – which is still partly intact in blindsight – is about ‘what’s happening out there in the external world’: the apple is red; the rock is hard; the bird is singing. By contrast, sensation is more personal, it’s about ‘what’s happening to me and how I as a subject evaluate it’"

Note --- In this case, "sensation" is the visceral Feeling of What Happens to yours truly.

*8. "ask yourself: what would be missing from your life if you lacked phenomenal consciousness?"

Note --- You would lack a sense of Self and self-control and ownership, which is essential for humans in complex societies.

*9. "What about man-made machines?"

Note --- Sentient machines could possibly emerge as they become dependent on social groups to outsource the satisfaction of some of their personal needs.

-

RogueAI

3.5k

*3. "In attempting to answer these questions, we’re up against the so-called ‘hard problem of consciousness’: how a physical brain could underwrite the extra-physical properties of phenomenal experience."

Note --- What he calls "extra physical" I'm calling Meta-physical, in the sense that Ideas are not Real. — Gnomon

And yet ideas obviously exist. If they're not physical, what are they? Are you a substance dualist? -

Gnomon

4.4k

*3. "In attempting to answer these questions, we’re up against the so-called ‘hard problem of consciousness’: how a physical brain could underwrite the extra-physical properties of phenomenal experience."

Note --- What he calls "extra physical" I'm calling Meta-physical, in the sense that Ideas are not Real. — Gnomon

And yet ideas obviously exist. If they're not physical, what are they? Are you a substance dualist? — RogueAI

Yes*1. Physical substances are made of Matter, but what is matter made of?*2 Einstein postulated that mathematical Mass (the essence of matter) is a form of Energy. And modern physicists --- going beyond Shannon's engineering definition --- have begun to equate Energy with Information. In that case, Causal Information is equivalent to Energy as the power to transform : some forms are Physical (matter + energy) and other forms are Meta-Physical (mental-ideal).

Philosophical "substance" is made of something immaterial. Plato called that something "Form", but I call it "EnFormAction" (the power to transform). Which you can think of as "Energy", if it suits you. Aristotle concluded that material objects consist of a combination of Hyle (stuff) and Morph (form)*3*4. What kind of "stuff" do you think conceptual Ideas consist of? Is it the same substance that elementary particles, like Electrons & Quarks, are made of?

Ideas do "exist" but in a different sense from physical Matter. They are intangible & invisible & unreal (ideal) --- so we know them only by reasoning or by communication of information --- not by putting them under a microscope or dissecting with a scalpel.

I can also answer "no" to your question, because essential Energy is more fundamental than substantial Matter. From that perspective, I'm an Essence Monist. :smile:

*1. Substance Dualism :

Substance dualists typically argue that the mind and the body are composed of different substances and that the mind is a thinking thing that lacks the usual attributes of physical objects : size, shape, location, solidity, motion, adherence to the laws of physics, and so on.

https://iep.utm.edu/dualism-and-mind/

*2. Substance :

To account for the fundamental whatness of a thing, Plato posited an unchanging form or idea as the underlying and unchanging substance. As all things within a person's reality are subject to change, Plato reasoned that the forms or unchanging basic realities concerning all things must not be located within this world.

https://openstax.org/books/introduction-philosophy/pages/6-1-substance

*3. Hyle + Morph ; Substance + Essence :

Matter is not substance, nor is any universal, nor is any combination of some form and some particular matter. Identifying a substance with its essence helps Aristotle to solve a difficulty about the unity of the individual substance: over a period of time an ordinary substance is likely to change in some ways.

https://www.jstor.org/stable/2184278

Note --- Modern dictionary "substance" refers to the Hyle, and omits the Form.

*4. Hylomorphism : (from Greek hylē, “matter”; morphē, “form”), in philosophy, metaphysical view according to which every natural body consists of two intrinsic principles, one potential, namely, primary matter, and one actual, namely, substantial form.

https://www.britannica.com/topic/hylomorphism

Note --- In our modern context, it might make more sense to characterize Matter as Actual and Form as Potential. For Ari, "Actual" meant eternal & unchanging.
