ToothyMaw

For the purposes of this post, I will assume that superintelligences could be created in the future, although I admit that the actual paths to such an accomplishment - even those outlined by experts - do seem somewhat light on details. Nonetheless, if superintelligences are the inevitable products of progress, we need some way of keeping them safe despite the possibility of misaligned values, the difficulty of coding certain important human concepts into them, etc.

On the topic of doom: it seems far more likely to me that a rogue superintelligence, acting on its own behalf or on behalf of powerful evil people, would subjugate or destroy humanity via more sophisticated and efficient means than something as direct as murdering us or enslaving us with killer robots or whatever. I’m thinking along the lines of a deadly virus, or of gradually integrating more and more intrusive technology into our lives until we cannot resist the inevitable final blow to our autonomy in the form of…something.

This last scenario would probably be brought about through careful manipulation of the rules and norms surrounding AI. However, it is somewhat difficult to square this with the incompetence, credulousness, and obvious dishonesty displayed in the actions of some of the most powerful people on Earth and the biggest proponents of AI. That is, until one realizes that Sam Altman is a vessel for an ideology that just so happens to align with a possible vision of the future that is both dangerous and perhaps somewhat easily brought to fruition - if people are unwilling to resist it.

I do think the easiest way for an entity to manipulate or force humanity into some sort of compromised state is to gradually change the actual rules that govern our lives (possibly in response to our actions; more on this later) while leaving as little trace as possible. Theoretically, billionaires, lobbyists and politicians could do this pretty easily, and I think the efforts of the people behind Project 2025, who have managed to get so much done in so little time, are illustrative of this. Their attempts at mangling the balance of powers have clearly been detrimental, but they have been effective in changing the way Americans live - some more than others. If a group of known extremists was able to accomplish so much with relatively minimal pushback while barely hiding its intent and agenda at all, why would anyone think that an AGI or ASI wouldn’t be able to do the same thing far more effectively?

So, it seems we need some way of detecting such manipulations by AI or by people with power employing AI. I will now introduce my own particular (and admittedly idiosyncratic) idea of what constitutes a “meta-rule” for this post, which I define as follows: A meta-rule is a rule that intervenes on some set of rules that already apply and on how those rules lead to outcomes. It comes into effect due to a triggering clause, a change in physical variables, a certain input or action, the passage of time, and/or the whims of some rule-maker. The meta-rules relevant to this discussion almost certainly imply some sort of intent or desire on the part of an entity or entities to bring about a certain end or outcome.

[An essential sidenote: rules affected by meta-rules essentially collapse into new rules, but it would be far too intensive to express or consider all such possible new rules in detail; we don’t write out our laws as a multitude of great big laws riddled with conditionals either, if it can be helped.]

An (American) example of a meta-rule could be the laws against defamation intervening on the broad freedoms guaranteed by the First Amendment (especially since the law around what constitutes free speech has changed quite a bit over the years as a function of the laws around defamation).

The next step in applying the idea of meta-rules is to consider the kinds of systems they can form. The systems we will consider have a cluster of meta-rules that respond to actions taken by humans. They are circular insofar as some action triggers a meta-rule, which changes rules that already apply, which in turn potentially changes the outcomes of future such actions. This type of system is exactly the kind of vehicle for insidious manipulation that could enable the worst, most powerful people and rogue/misaligned AGI/ASI - namely in the types of situations I wrote about earlier in the post.
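To make the circularity concrete, here is a minimal Python sketch of such a system. Everything in it is a hypothetical illustration rather than part of the argument itself: the `System` and `MetaRule` classes, the rule named `"P"`, the `"post"` action and its outcomes are all made up, and the assumption that a meta-rule fires at most once is a simplification.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A rule maps an action to an outcome. All names below are hypothetical.
Rule = Callable[[str], str]


@dataclass
class MetaRule:
    """Fires on a trigger and rewrites one rule that already applies."""
    trigger: Callable[[List[str]], bool]  # inspects the history of actions
    target: str                           # name of the rule it intervenes on
    rewrite: Callable[[Rule], Rule]       # turns rule P into rule P'
    fired: bool = False                   # simplification: fires at most once


@dataclass
class System:
    rules: Dict[str, Rule]
    meta_rules: List[MetaRule]
    history: List[str] = field(default_factory=list)

    def act(self, action: str, rule: str = "P") -> str:
        # The outcome is decided by the rule currently in force...
        outcome = self.rules[rule](action)
        self.history.append(action)
        # ...and the action itself may then trigger a meta-rule that
        # changes the rule for all *future* actions (the circular part).
        for m in self.meta_rules:
            if not m.fired and m.trigger(self.history):
                self.rules[m.target] = m.rewrite(self.rules[m.target])
                m.fired = True
        return outcome


def p(action: str) -> str:
    return "published" if action == "post" else "ignored"


def tighten(old: Rule) -> Rule:
    def p_prime(action: str) -> str:
        return "flagged" if action == "post" else old(action)
    return p_prime


# Meta-rule: once "post" has been taken twice, P silently becomes P'.
system = System(
    rules={"P": p},
    meta_rules=[MetaRule(lambda h: h.count("post") >= 2, "P", tighten)],
)
outcomes = [system.act("post") for _ in range(3)]
print(outcomes)  # ['published', 'published', 'flagged']
```

Nothing visible to the person acting changes between the second and third post; only the rule does, and only as a consequence of their own earlier actions.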

I will now also introduce the concept of “Dissonance”, which could exist in such a system. Dissonance is the implementation of meta-rules in a way that allows the same action or input to generate different outcomes at a later time, even when all of the variables relevant to the state of the system for the original action and outcome have been reset to their earlier values. Keeping track of this phenomenon seems to me to be a way of preventing AI from outmaneuvering us by implementing unknown meta-rules; if we detect Dissonance, there must be something screwy going on.

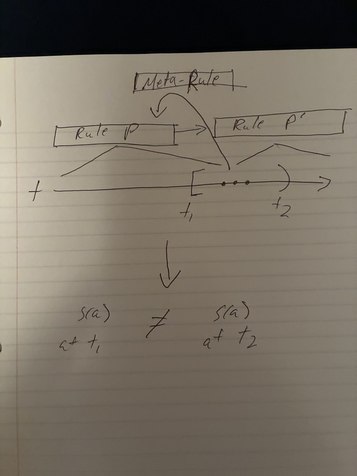

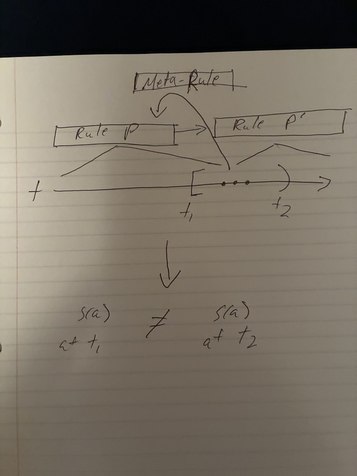

You might think the idea of Dissonance is coming out of left field, but I assure you that it is intuitive. I have drawn the following diagram to better explain it because I don’t know how to do it all that formally:

Let s(a) represent the outcome of action “a” taken at a given state “s” of a system. Furthermore, let us specify that action “a” is taken at t1 and again at t2, both times with the same state “s”. As the diagram shows, the action taken at t1, or any action between it and the second iteration at t2, could trigger a meta-rule that turns rule P into P’, so that s(a) at t1 differs from s(a) at t2. This informal demonstration doesn't prove that Dissonance must exist, but I suspect that it could show up.
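For what it's worth, one tentative way to write this down is given below. The subscripted symbols $s_t$ and $P_t$ (the state and the version of rule P in force at time t) are assumptions added for this sketch, as is the idea that the outcome is fully determined by the rule, the state and the action.

```latex
% Assumption: the outcome at time t is determined by the rule in force then.
s(a)\big|_{t} = P_{t}(s_{t}, a)

% Dissonance between t_1 and t_2: same state, same action, different outcome.
s_{t_1} = s_{t_2} = s
\quad\text{and}\quad
s(a)\big|_{t_1} \neq s(a)\big|_{t_2}

% Under the assumption above, this can only happen if the rule itself changed:
P_{t_1}(s, a) \neq P_{t_2}(s, a)
\;\Longrightarrow\;
P_{t_1} \neq P_{t_2}
\quad\text{(i.e. } P \text{ has become } P'\text{)}
```

The last line is just the contrapositive of "the same rule applied to the same state and action gives the same outcome", which is why observing Dissonance points to a changed rule rather than merely suggesting one, provided the state really does capture every relevant variable.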

So, in what kinds of situations would Dissonance be most easily detected? In systems with possibly simple, but most definitely clear and well-delineated, rules. So, if we stick AI in some sort of system that has these kinds of rules, and we have a means of detecting Dissonance, the most threatening things the AI could do might have to be done more out in the open. However, I have some serious doubts that this - or anything, really - would be enough if AI approaches sufficiently high levels of intelligence.
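As a rough illustration of what "a means of detecting Dissonance" might look like in a system with clear rules, here is a minimal Python sketch. It assumes the system's state, actions and outcomes can be logged as simple comparable values; the `DissonanceMonitor` class and the log entries are hypothetical.

```python
from typing import Dict, Hashable, List, Tuple


class DissonanceMonitor:
    """Flags repeated (state, action) pairs whose outcomes differ."""

    def __init__(self) -> None:
        # (state, action) -> (time first seen, outcome first seen)
        self._baseline: Dict[Tuple[Hashable, Hashable], Tuple[int, Hashable]] = {}
        self.alerts: List[str] = []

    def observe(self, t: int, state: Hashable, action: Hashable,
                outcome: Hashable) -> bool:
        """Record one observation; return True if it is Dissonant."""
        key = (state, action)
        if key not in self._baseline:
            self._baseline[key] = (t, outcome)
            return False
        t_first, first_outcome = self._baseline[key]
        if outcome != first_outcome:
            self.alerts.append(
                f"Dissonance: action {action!r} in state {state!r} gave "
                f"{first_outcome!r} at t={t_first} but {outcome!r} at t={t}"
            )
            return True
        return False


# Hypothetical log of (time, state, action, outcome) observations.
log = [
    (1, "s", "a", "outcome_1"),
    (2, "s", "b", "outcome_2"),
    (3, "s", "a", "outcome_1"),  # same as t=1: consistent
    (4, "s", "a", "outcome_3"),  # same state and action, new outcome
]

monitor = DissonanceMonitor()
flags = [monitor.observe(*row) for row in log]
print(flags)  # [False, False, False, True]
for alert in monitor.alerts:
    print(alert)
```

A monitor like this is only as good as its notion of "state": if a relevant variable is left out of `state`, ordinary changes will look like Dissonance, and if the rules are vague, outcomes may not be comparable at all. That is one reason clear, well-delineated rules matter here.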

I know that this OP wasn't the most rigorous thing and that my solution is not even close to being complete (or perhaps even partially correct or useful at all), but I thought it was interesting enough to post. Thanks for reading.
