MikeL

I originally posted this in "Artificial Intelligence and the Intermind Model", but I think I caught the end of the thread, so I hope the moderators don't mind that I put it as a new post.

What if we designed a robot that could act scared when it saw a snake? Purely mechanical, of course. Part of the fear response would be that the hydraulic pump responsible for oiling the joints speeds up, and that wires with a higher conduction velocity are brought into play to allow faster reaction times. This control system is regulated through feedback loops wired into the head of the robot. When the snake is spotted, the control paths in the head of the robot suddenly reroute power away from non-essential functions, such as recharging the batteries, and into the peripheral sense receptors. Artificial pupils dilate to take in more visual information, and so on.

This robot has been programmed with a few phrases that let the programmer know what is happening in the circuits, "batteries low", that sort of thing. In the case of the snake it reads all these reactions and gives the feedback "I'm scared."

Is it really scared?

Before you answer, and as you probably know, a long, long time ago they performed vivisections on live dogs and other animals because they did not believe the animals actually felt pain. The pain response, all that yelping and carrying on, was nothing more than a set of reflexes programmed into the animals, the scientists and theologians argued. Only humans, designed in God's image, actually felt pain as we know it.

Janus

Robots don't feel pain. Animals feel pain, but are not self-aware they are feeling it. Humans feel pain and are (sometimes at least) self-aware they are feeling it, and they may also be conscious of the pain as an indication of a threat to life, or as a prison they fear they may escape from only by dying. These kinds of human experience of pain probably make the pain much worse and harder to bear.

praxis

This robot has been programmed with a few phrases that let the programmer know what is happening in the circuits, "batteries low", that sort of thing. In the case of the snake it reads all these reactions and gives the feedback "I'm scared."

Is it really scared? — MikeL

It's theorized that interoception and affect are major aspects of human emotion. Also in the mix are our past experiences and emotion concepts, like the concept of fear. So for a machine to have a human-like experience of fear, at a minimum it would need the concept and its associated interoceptive sensations. As you describe it, the interoceptive sensations for the robot would be predictive feedback loops associated with "hydraulic pumps," "higher conduction velocity wires," "batteries," etc. And of course the robot would need the capacity to consciously recognize these sensations in the context of a snake, which it has learned to fear for some reason, to conclude "I'm scared."

MikeL

The robot has been programmed to assess threats to its structure. As it's impossible to program for everything, part of the program says that if an object is mobile and unidentified, the fear response should be activated. In this case the robot has identified the snake, which is in its list of threatening objects, and thus the program has been activated. A physiological response is occurring within the robot.
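A minimal sketch of that rule, assuming the names `KNOWN_THREATS`, `Sighting` and `should_trigger_fear` (all hypothetical; the post only mentions a list of threatening objects and the fallback for mobile, unidentified ones):

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threat list; the post only names the snake.
KNOWN_THREATS = {"snake"}


@dataclass
class Sighting:
    label: Optional[str]  # None means the object could not be identified
    is_mobile: bool


def should_trigger_fear(sighting: Sighting) -> bool:
    """Return True when the fear response should be activated."""
    if sighting.label is None:
        # Fallback rule: anything unidentified that moves counts as a threat.
        return sighting.is_mobile
    # Identified objects trigger fear only if they are on the threat list.
    return sighting.label in KNOWN_THREATS
```

An identified snake and an unidentified moving object both return `True`; an unidentified stationary object, or an identified object that is not on the list, returns `False`.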

The code of the robot has been divided into a simple executive program that can activate a range of other programs. All the executive code has to do is call the correct program and it will execute as a hard-wired reflex. As it sits atop these other programs, the executive code is not 'aware' of how they operate (one program is written in C++ and one in COBOL, and except for the interface they are incompatible). In a way, there is a mask separating them. All the executive program knows is that after identifying the snake, the hydraulic pump sped up, the large conduction velocity wires began to hum, its vision became brighter, and other background programs, such as the one that does the vacuuming, have shut down. It is also aware that the snake may cause damage to its shell, and it is programmed to avoid that.
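One way to picture that separation is the sketch below. It is only an illustration under assumptions: `fear_reflex`, `Executive` and the effect names are hypothetical, and the reflex is a Python stand-in for what the post describes as separate C++ and COBOL programs.

```python
from typing import Callable, Dict, Optional

Effects = Dict[str, bool]


def fear_reflex() -> Effects:
    """Stand-in for an opaque reflex program; only its effects are reported."""
    return {
        "pump_sped_up": True,
        "fast_wires_humming": True,
        "vision_brighter": True,
        "vacuuming_stopped": True,
    }


class Executive:
    """Calls reflexes through an interface and sees only their effects."""

    def __init__(self, reflexes: Dict[str, Callable[[], Effects]]) -> None:
        self._reflexes = reflexes

    def react(self, identified_object: str) -> Optional[str]:
        reflex = self._reflexes.get(identified_object)
        if reflex is None:
            return None  # no reflex registered for this object
        effects = reflex()  # the internals of the reflex stay hidden
        # Report fear only when every expected bodily change was observed.
        return "I'm scared" if all(effects.values()) else None
```

With `Executive({"snake": fear_reflex})`, calling `react("snake")` returns the phrase "I'm scared", while an object with no registered reflex returns `None`. The executive never inspects how the reflex produces its effects, which is the 'mask' described above.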

praxis

All the executive program knows is that after identifying the snake, the hydraulic pump sped up, the large conduction velocity wires began to hum, its vision became brighter, and other background programs, such as the one that does the vacuuming, have shut down. — MikeL

It's believed that this works the other way around in people. The predictive brain, operating subconsciously as in your model, would direct the release of adrenaline etc. after recognizing a serious threat. At some point the more 'executive' consciousness would realize what was going on and perhaps think something like "I'm scared."

szardosszemagad

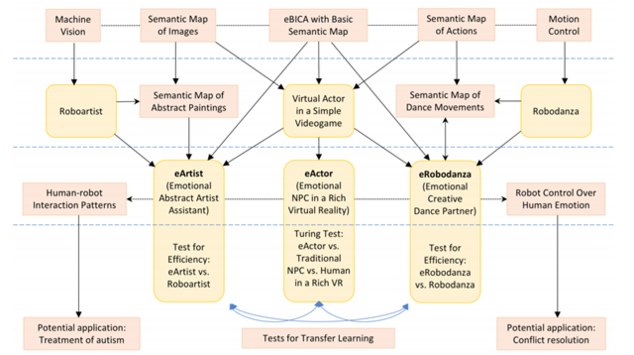

Road map is nice and symmetrical. A beauty. Too complex for me to examine. Arrows can mean anything: processes, feedback, feed forward, reaction, action, feeling, emotion, motivation. Too much. I like a two-way road map, such as two arrows pointing in opposite directions, side-by-side, parallel to each other. THAT I understand. Understanding this road map would take a long time and a magazine article's worth of reading, and even that is no guarantee I'd understand it when all is said and done.

Clearly, my ineptitude, not that of the author of the diagram.

My point is, one should always make a point that one understands oneself; otherwise the point is lost even on the person making it. This one is clearly lost on me.

Cavacava

I am certainly no expert; that is why I referenced the article and the short seven-page paper, written by people who have a much better understanding.

szardosszemagad

I apologize; I was not referring to you at all when I mentioned "some people don't understand this chart". After all, I don't know you, and until your most recent post I had no clue what you knew and what you didn't. But it was nice of you to volunteer that you are no expert either. :-)

Cavacava

Yes, did you catch Elon Musk's tweet from yesterday?

China, Russia, soon all countries w strong computer science. Competition for AI superiority at national level most likely cause of WW3 imo.

5:33 AM - Sep 4, 2017

He and 116 other international technicians have asked the UN to ban autonomous weapons, i.e. killer robots. The UK has already said it would not participate in a ban, and I am sure that the US, China, Russia and others will not participate either.

praxis

Well, being dim-witted and poorly informed can have its advantages at times. For instance, I now realize that I've been enjoying the naive notion in the back of my mind that AI could be used for good, rather than as a tool for the rich and powerful to acquire more wealth and power.

People suck. :’(

Nelson

For a robot to truly feel scared it must truly be aware, like a human. My definition of being aware is: being able to act and think without input, having illogical feelings develop out of the self (scientists programming illogical feelings into you would therefore not count), being able to remember, and being able to act on said memories. The amount of awareness a creature possesses is determined by how well it fits these criteria. The robot is feeling real fear if it has all these qualities.

MikeL

Hi Nelson,

My definition of being aware is: being able to act and think without input, have illogical feelings develop out of the self — Nelson

This is a well-considered point.

Why wouldn't a program that allows for illogical feelings count, though? Scientists are, after all, designing the 'self'. When the weighting of an electro-neural signal exceeds a threshold of 8, for example, we may program the neuron to start firing in random fashion to random connections.
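As a rough sketch of that idea, assuming a hypothetical `fire` function and a plain list of connection names (neither is from the post; only the threshold of 8 is):

```python
import random
from typing import List, Optional

THRESHOLD = 8.0  # the example threshold mentioned above


def fire(
    weighted_input: float,
    connections: List[str],
    rng: Optional[random.Random] = None,
) -> List[str]:
    """Return the connections that receive a signal.

    At or below the threshold nothing fires. Above it, a random number of
    randomly chosen connections fire, so the output is not fixed by the input.
    """
    rng = rng or random.Random()
    if weighted_input <= THRESHOLD or not connections:
        return []
    count = rng.randint(1, len(connections))  # how many connections fire
    return rng.sample(connections, count)     # which ones, without repeats
```

The behaviour is programmed, yet which connections fire on any given occasion is not specified by the programmer. Whether that counts as a feeling "developing out of the self" is exactly the question being asked.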
