-

Hachem

384

The Aperture Problem

Did you know that the size of the diaphragm has no influence whatsoever on the field of view? You probably did. After all, when you take a picture with a camera it does not matter whether you use a small (f/22) or a large (f/2.8) aperture: you still capture exactly the same scene. The reason why is quite mysterious when you come to think of it. Normally one would think that looking through a larger window will show more of the view outside, just as it would show people outside more of the room inside. Well, it does not work this way for a camera. All a larger opening does is let more light through, which gives brighter images, with smaller openings of course yielding darker images. And that is completely in accordance with our daily experience with windows. A larger window means a brighter room. That makes the lack of influence on the field of view even stranger.

The reason why? Optics tells us that light rays, after crossing a lens or group of lenses, cross each other and swap places as it were. But they do that only after meeting at the focal point, and from there rays coming from the bottom continue to the top, and vice versa.

The image therefore is first reduced to a point, so only an aperture that would be smaller than this focal point would have any influence on the field of view. But that is another issue altogether.

If aperture has no bearing on the field of view, I do not see how it could influence the depth of field, especially in the middle of the image. Obviously the same field of view will get through however small the aperture is. Also, imagine the following scene: a small child standing between his parents, under a tree, in front of a tall building. The rays falling on the child will for the most part be let through the lens, while the parents will have to look darker, the tree and the building even more, the smaller the aperture is. This is obviously wrong, the question is how.

Another problem with aperture is that the extra light that gets through the lens does not necessarily come from the objects depicted in the image. Think of the tapetum lucidum, the shining layer at the back of a cat's eyes that makes them shine in the dark. It reflects light back onto the retina and makes it possible for the cat to see better in the dark. It is apparently the same principle as a larger iris, a way of letting more light through. But is this extra light reflected off the objects we are seeing, or simply ambient light with no specific target?

If that light came from the objects themselves we would be confronted with the previous problem: larger objects would be more hindered by smaller apertures than smaller objects, and should therefore appear relatively darker. Since that is not the case, we have to assume that that extra light we are letting through is simply ambient light. It seems as if the image on our retina reacts to light just like a real object: the more light we shine on it the more visible it gets. A little bit like a slide in a projector. The more powerful the lamp is behind the slide (and the condenser lens), the brighter the image.

Here is the problem: the image shown by the projector does not come from the light, even if we assume that once the light goes through the slide it gets colored just like the slide before it finally hits the screen. In the case of a camera or our eye, we would have to accept the idea that the image is somewhere on or in the lens, and that light from the outside shines through it and projects it on our retina or the sensor area. But how did the image get in the lens if not carried by the light rays?

Okay, maybe it was carried by the light rays reflected off the objects, and once it was in the lens extra light shone through it?

This is all very complicated. Maybe there is someone out there with more knowledge of optics who would kindly explain it to me?

To sum up, if aperture has no influence on the field of view, how could it affect the depth of field? And please do not think that this is a technical issue which concerns only hobby photographers. I dare say that an answer to this question is an answer to the nature of light. -

apokrisis

7.8k

In the case of a camera or our eye, we would have to accept the idea that the image is somewhere on or in the lens, and that light from the outside shines through it and projects it on our retina or the sensor area. But how did the image get in the lens if not carried by the light rays?

— Hachem

It might help to think about how a mirror works as well. So what you are talking about with a system of lenses is a way to reflect the light from some distant scene onto a flat surface. That creates "the image". The image is not carried in the reflected light as such. You have to do the extra thing of cutting across that light with a plane that then makes a particular image.

With a mirror, there is no lens getting in the way. And depending on where you stand looking at the image in a mirror, you will see a different view.

The lens then does the extra thing of fixing a point of view. The aperture can be made pin-hole sized so the reflection is an image from just one place - mimicking the way we ourselves impose just one point of view on what we see. We have to stand somewhere even to look at a mirror, which is why we see only one of the vast number of possible images we might see in its reflecting plane.

The lens itself does the other thing of making a very large world of illuminated objects tractably small. This is why it is useful to make the light "cross over" at the pinhole of the aperture. You can then place your retina at a comfortable distance to make the image. The aperture shrinks the "mirror" to a pinhole size, then the "reflection" you are looking at begins to expand again to the other side. It is an extra bit of mechanism to reduce the world to a size that an eye could process without having to be as big as the world it sees. -

Hachem

384

I am glad you mention mirrors and even pinholes. They make the matter in fact not simpler but vastly more complicated.

Mirrors reflect scenes straight up, while lenses reflect them upside down.

Pinholes create upside down images without a lens! The image on the back of a pinhole camera is perceived upside down. The retinal image we would receive if we were to stand in the camera obscura would be straight up, meaning that we would also see an upside down image.

The question now is, how come light rays travel in straight horizontal lines when dealing with mirrors, but suddenly start crossing each other when going through a pinhole or a lens?

I think, without any proof, that instead of thinking of rays that somehow know whether they will be dealing with mirrors or pinholes/lenses, we must think in terms of the receiving surface.

When the opening of a room keeps shrinking to the point where only a small pinhole is left, the only way to look outside and see anything is to lower your body to look up, and stand on your toes to look down.

Translated to what the molecules of the wall or screen can do, they can only capture light coming from the opposite corner. If it is down left, light has to come from up right to be captured or seen, and so on.

In other words, we must at some point stop thinking in the way light behaves, and concentrate on how reflecting surfaces, including lenses, behave. -

apokrisis

7.8k

The question now is, how come light rays travel in straight horizontal lines when dealing with mirrors, but suddenly start crossing each other when going through a pinhole or a lens? — Hachem

The behaviour of the light doesn't change. It is scattered in every direction off illuminated objects. But the arrangement of pinholes and image forming planes that reflect that light then samples that light from some particular point of view.

The light does not in fact have to travel in "a straight line", just the "shortest/quickest path". So that is where refraction or bending of the path comes in when you have a lens made of glass.

As to the image inversion issue, when you look into a mirror, you can only see one direction reversed or inverted. You are pointed at the mirror and so your reflection is pointed back at you. But when we are talking about a pinhole casting an image, then we are standing back at yet another point of view where we can see the inversion of direction in the other two planes.

It is like seeing the inversion that would result from the mirror being beneath our feet or off to our side, rather than front on. -

Hachem

384

Have you ever looked at the reflection of a tree or a pole on the water? When you walk around it, you can only see it from one angle. Also, you do not see it anymore where you saw it before. If light were reflected in all directions, you would expect to see the tree or pole reflected all around.

Another thing: the idea that light is reflected in all directions may be mathematically plausible, but it is physically much less so. Each point in space will probably only be able to reflect light in one direction, or at least in a limited number of directions. Also, I think that the images we receive of objects are just too neat for the idea of an infinite number of directions from each point in space. Sharp images would be a miracle, don't you think? -

apokrisis

7.8k

Each point in space will probably only be able to reflect light in one direction, or at least in a limited number of directions. — Hachem

Now you are on to the quantum mysteries of how light acts as both a particle and a wave.

The photon emitted by a radiating atom goes in every direction as a potential event. It radiates as a spherical wavefront travelling outwards at c. But then an observation - some actual interaction which absorbs that potential - collapses things so it looks like the photon was a particle travelling in a straight line to its eventual target.

So once you get into the quantum level understanding of optics, you really do have to give up what seem to be your commonsense intuitions about light as a ray travelling from this place to that, crossing over, or bending, or whatever.

The idea of a wave and the idea of a particle are the two ways we need to think about it to fully describe the physical mechanics of optics. They are the two complementary viewpoints that encompass the whole of what is going on.

But just as obviously, they are two exactly opposite notions of nature. And that is where the metaphysical problems start .... if you think our models of nature are meant to represent nature in some naively intuitive fashion. -

Hachem

384

Yes, Quantum Theory and The Mysteries of Creation. If I were the pope I would declare Bohr and his companions all saints.

I would be really interested in what you think of my claim in the other thread: https://thephilosophyforum.com/discussion/2024/romer-and-the-speed-of-light-1676#Item_14

We see objects where they are at the moment they are there. That is the only thing that explains how a telescope functions.

I see an obvious link between both threads as you may easily imagine.

Concerning Fermat's Principle: https://philpapers.org/post/26486 -

Rich

3.2k

Let's see what we have here:

Perception is the potential to act upon. Distance is the ability to act upon, or otherwise the ability to change the holographic field.

We have observed non-local action at a distance at the molecular level, so theoretically all actions affect our fields, but we observe (without instrumentation) only those that are local. So there remains sooner c sense of persistence.

So what needs to be better understood is the differences and similarities between perception and distance. When we reach into a holographic image nothing happens and nothing changes. This would be similar to grasping at a star. However, if we alter the hologram itself, there are changes in the field. The marriage between perception and distance is interesting.

BTW, I'm just ruminating out loud. -

Hachem

384

Interesting, but you are considering the phenomenon of distance as experienced by the subject as potential or actual action. I was thinking more in terms of what distance means first, independently of the perceiving subject. The moon gets reflected on the surface of the lake whether there is a witness or not.

If we accept the idea that we see objects where they are, at the moment they are there, we have to accept the fact that the reflection of the moon on the lake obeys the same principles. Non-locality, or rather, trans-locality should be considered as an objective phenomenon. A phenomenon, allow me to emphasize it once again, that is incompatible with the idea of images being transported by light through space in time. -

Hachem

384

Distance and Size

One of the most obvious but still unexplained phenomena is that objects appear smaller with distance. It is obvious, and we could not imagine it being otherwise, even if we do not really understand how that works. Let me reiterate: our spatial logic is based on this fact and it will probably seem strange to even make it a point of discussion.

Here is the problem I see. Objects do not simply appear to become smaller, apparently they become smaller in reality, even if this shrinking down is only relative to an observer! Who needs Quantum mysteries when we experience them every day!

Imagine holding an infinitely extendable rope attached to the outside edges of a vehicle. The farther the vehicle moves away from you, the smaller the distance between the two ends of the rope you are holding will be. In other words it seems like the vehicle is shrinking in width.

That is obviously not the case. Still, now the same vehicle, which was much wider than you when it was close to you, has become small enough to take but a fraction of the space on your retina. The distance between both edges of the car, in fact all its dimensions, has shrunk just like the distance between the ends of the rope you are still holding. The object has shrunk not only optically, but geometrically. The example with the ropes and the vehicle can easily be put in the drawing of an isosceles triangle with lines parallel to the base all the way to the top, these rungs symbolizing the width of the vehicle. Geometry tells us that objects shrink with the distance for a particular observer. Why didn't Einstein think of that? -

Hachem

384

Femto-photography

You have probably heard of the experiment in which the speed of light, or at least its motion, was captured on film. One could see how a ray of light propagated from one location to the other, confirming, if ever there was a need, that light is not instantaneous but has to travel through space and matter to reach its goal.

Imagine you are standing in the path of the beam, looking at it approaching. Then you will see the head of the ray first, slowly (!) followed by its tail. But there is something funny going on here. When we watch the clips in which the ray of light is slowly coming to life, we see it appear too soon. That is of course because the pictures have been taken one at a time long before, and we are watching it from the side. We are not watching a live event, even if that is the impression that is conveyed.

Still, let us imagine, for the sake of argument, that we are filming with a highly advanced camera the way light propagates from a distant object towards the lens. Just like a human observer, we should see first element1 appear, then element2, and so on. The ray would appear to our eyes, and to the camera, as moving away from us, starting from our own location. At the risk of becoming a second Poisson, the blundering scientist, not the gifted mathematician, I will say that this would be quite strange, though of course not impossible. After all, we are all familiar with wheels turning backward in movies.

Still, maybe a remake of Fizeau's experiment would be possible in the near future. It could look like this:

1) A telephoto lens directed at the light source to register the exact moment light appears after the switch has been locally turned on.

2) Fast digital cameras to capture the (time) path of the light beam.

This would help us, hopefully once and for all, determine whether light appears at any location only after the time necessary to reach that location. Or if it can be seen as soon as it appears, there where it appears, from any location in space.

To see the beam of light as approaching us we would need to perceive it as growing slowly towards us. That is we would have to see each photon as it is created, without having to wait for it to reach our eyes.

http://web.media.mit.edu/~raskar/trillionfps/ -

Hachem

384

Interference is a central concept of the wave theory of light. The idea is deceptively simple. Waves that end up at the same location while having different phases will destroy each other. Simply put, a crest and a crest reinforce each other, while a crest and a trough cancel each other out. This is also believed to be the case when light has to go through one or more slits creating fringes of light and dark spots. The dark spots are supposed to be the result of destructive interference.

I find the concept of interference, in spite of the many examples and arguments, quite unconvincing. For one, it is based on an arbitrary definition of color whereby white and black are defined respectively as "all colors" and "no color" and even "no light".

Here is what I think is an argument against the validity of Interference.

When one shines primary colors on a screen, the point where all three colors meet is white. Using complementary colors instead, we get a black spot. This is seen, and I find this very strange indeed, as negative interference, and the black is an indication of this. The center of the complementary beams is something that does not exist!

Usually a white screen is used, so you would expect simply to see the still existing screen. But somehow even the white screen disappears behind the non-existent phenomenon.

Which makes you wonder: if primary colors still show the white screen, shouldn't we consider the result of their mix as transparent? It would make a lot of sense since ambient light allows us to see through and walk around, which would be very difficult if it were white.

Transparent light as the result of primary colors, and an opaque black when complementary colors are involved. Makes sense to me.

Another thing. Interference is supposed to be dependent on the difference in arrival time. If two waves differ by an odd multiple of half the wavelength then they will interfere destructively with each other. That is something that is easily investigated. All we have to do is shine three complementary beams on a screen and vary their respective distances enough to cover all possibilities. I wonder, do you think there will be settings where three complementary colors would not give black? I must admit that I have not tried it myself, but I would certainly be interested in the outcome.
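For reference, the textbook two-beam rule can be written down in a few lines. This is only a sketch of the standard formula, under the assumption of two coherent beams of equal intensity and a single wavelength; the function name and the 500 nm example value are illustrative and do not come from any experiment discussed here.

```python
import math

def two_beam_intensity(path_difference, wavelength, single_beam_intensity=1.0):
    """Combined intensity of two equal, coherent beams of one wavelength.

    Standard result: I = 2 * I0 * (1 + cos(2 * pi * path_difference / wavelength)).
    """
    phase = 2 * math.pi * path_difference / wavelength
    return 2 * single_beam_intensity * (1 + math.cos(phase))

wavelength_nm = 500.0  # assumed example value
for fraction in (0.0, 0.25, 0.5, 0.75, 1.0):
    intensity = two_beam_intensity(fraction * wavelength_nm, wavelength_nm)
    print(f"path difference {fraction:.2f} wavelengths -> intensity {intensity:.2f}")
```

In this model the brightness swings between four times a single beam and zero as the path difference changes, with the zeros at odd multiples of half a wavelength.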

"You have to use monochromatic light!" I hear you objecting. Okay, let us do it with two beams of the same wave length and shine them on a screen, varying the distance between the sources incrementally. However difficult it might be, there must be a way of showing interference patterns?

"You have to use one or more slits!" Ah, that is more problematic. See, I want to understand interference, not the effect of slits on light beams. I already know that there will be fringes with bright and dark spots. What I am interested in, is what those fringes mean. And for that, I need to understand what destructive interference is. -

oysteroid

27

Hi Hachem,

I think it might be helpful if we make more clear how a pinhole camera works and how a lens works. Let's model this with simple rays first, without thinking about waves.

First, the ideal pinhole. Why is an image formed on a plane behind the pinhole? Imagine that you are inside a dark room looking through a small hole out onto a street scene. The hole is very small. The wall in which the hole resides is very thin, infinitely thin, in fact, so that this is an ideal hole, not a tube, so that rays can pass through at angles approaching parallel with the wall. Now, just move your head around while looking through the hole. If the hole is small enough, you only see a small point of color. Suppose your head is in such a position that you see a point of red on the door of a parked car. Move your head much lower and you might see a point of blue from the sky, since you'll be looking up through the hole. The important thing here is to see that any given point in space is only receiving light from one point in the scene, found by tracing a line from that point in the room to that point in the scene.

The image that is formed on a screen inside the dark room behind the pinhole is simply a consequence of the above. Each point on the screen is receiving light only from one point in the scene. And the reason for this is nothing other than that the pinhole is restricting the light arriving at each point to light from a particular point in the scene. If you enlarge the hole, the image becomes brighter but less sharp. If you enlarge the hole all the way, or remove the front wall altogether, the screen will be broadly illuminated by the scene. Why? It is because the light arriving at a given point on the screen is coming from multiple points in the scene, all over the scene in fact. There is light from the sky, light from the car, light from the fruit stand, and so on, all adding up at that point on the screen. And light from a particular part of the car, if the surface is a diffuse reflector, is scattering to multiple points on the screen. The larger the aperture, the less the light on any given point on the screen is restricted to just one part of the scene. So, being less restricted, there is more light, but less sharpness.
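The trade-off between brightness and sharpness can be put into rough numbers. The sketch below is purely geometric (it ignores diffraction, which in a real pinhole limits how small the hole can usefully be), and the function names and sample distances are illustrative assumptions.

```python
def pinhole_blur_diameter(hole_diameter, scene_distance, screen_distance):
    """Diameter of the light patch that one scene point casts on the screen.

    Rays from a single point fan out through the hole, so by similar
    triangles the patch is the hole scaled by (scene + screen) / scene.
    """
    return hole_diameter * (scene_distance + screen_distance) / scene_distance

def relative_brightness(hole_diameter, reference_diameter):
    """Light admitted scales with the area of the hole."""
    return (hole_diameter / reference_diameter) ** 2

# Assumed setup: scene point 10 m away, screen 100 mm behind the hole.
for hole_mm in (0.5, 2.0, 8.0):
    blur = pinhole_blur_diameter(hole_mm, scene_distance=10_000, screen_distance=100)
    gain = relative_brightness(hole_mm, reference_diameter=0.5)
    print(f"hole {hole_mm} mm: blur {blur:.2f} mm, brightness x{gain:.0f}")
```

The blur patch grows in proportion to the hole, while the light admitted grows with its square.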

Consider what happens if you move the pinhole up or down or left or right in the front wall. The image on the screen will change. The perspective changes. The point where your head needs to be to see the door on the car outside will change. Now imagine what would happen if you poked a second hole in the wall. You'd have two overlapping images. Poke a third hole. Poke a fourth. More light is reaching the screen, but you have multiple overlapping images, and so you are losing clarity. Light from bright parts of one image is getting to dark parts of another. Keep poking holes all over the wall. Pretty soon, you can't make out a clear image at all. Keep poking until the wall is totally gone.

When you have just one pinhole, it is like you are simply excluding all the other possible pinhole images. When you have no wall, it is like you have all the possible pinhole images at once.

Why is the image from a pinhole upside down? Imagine moving your head to look through the hole to see different parts of the scene again. You must put your head low to see the sky through the hole. You must put your head high to see the ground near the front wall outside. So the image projected onto the screen, the image plane, will be upside down. Light from the sky strikes it low. Light from the street strikes it high. Light from the right side of the scene (as you look out) strikes the screen on the left, behind you. So all is flipped over, as we would expect from our observations.
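The flip falls straight out of the straight-line geometry. Here is a minimal sketch, assuming a coordinate system (not from the thread) with the pinhole at the origin, the scene in front of the wall and the screen a fixed distance behind it; the function name is made up for illustration.

```python
def project_through_pinhole(x, y, z, screen_distance):
    """Where a scene point lands on the screen behind an ideal pinhole.

    Assumed coordinates: pinhole at the origin, scene point at sideways
    offset x, height y, and distance z in front of the wall; the screen
    sits screen_distance behind the wall.
    """
    scale = screen_distance / z
    # The straight line through the hole flips the sign of both offsets.
    return (-x * scale, -y * scale)

sky = project_through_pinhole(x=0.0, y=50.0, z=100.0, screen_distance=2.0)
street = project_through_pinhole(x=3.0, y=-1.5, z=10.0, screen_distance=2.0)
print(sky)     # high in the scene, lands low on the screen
print(street)  # low and to the right, lands high and to the left
```

Both coordinates change sign, which is the up-down and left-right flip, and the same `scale` factor shows that the image simply grows as the screen moves farther from the hole.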

"Did you know that the size of the diaphragm has no influence whatsoever on the field of view?"

The reason for this can be seen by considering the above thought experiment. Making the hole larger or smaller does not change the fact that you can see through it from any angle behind the wall. The field of view captured in an image on the screen is given, as we would expect from the geometry, by the distance between the screen and the hole (together with the size of the screen), not the size of the aperture.
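A short sketch makes the point concrete. It assumes an ideal pinhole and a flat screen of fixed width; the function name and the 36 mm width are illustrative choices. Note that the hole diameter does not appear anywhere in the calculation.

```python
import math

def field_of_view_deg(screen_width, screen_distance):
    """Angle of the scene that fits on a flat screen behind an ideal pinhole."""
    return math.degrees(2 * math.atan(screen_width / (2 * screen_distance)))

# Same 36 mm wide screen at two distances from the hole.
print(field_of_view_deg(screen_width=36, screen_distance=50))
print(field_of_view_deg(screen_width=36, screen_distance=100))
```

Moving the screen farther from the hole narrows the angle of view; changing the hole size has no term to act on.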

"instead of thinking of rays that somehow know whether they will be dealing with mirrors or pinholes/lenses,"

There is no need to even consider the possibility that rays know anything about what they are dealing with. All we have here is simple geometry and selection. What I mean by selection is how a camera, whether a simple pinhole camera or one with complex lens, restricts the light arriving at a point on the screen to light coming from a point in the scene. The setup is selecting for certain rays. The problem with many optical path diagrams is that they don't show the rays that are excluded or not of interest and so they sort of wrongly imply that light is choosing to go through a pinhole or lens. Consider our pinhole room again. If light bounces off of the red car door towards the wall with the hole in it, it doesn't aim for the hole. It isn't specially directed at all. Where the light goes is simple geometry. There is much light in the scene that isn't going through the hole. The hole simply restricts the light arriving at the screen to that light that is passing through the hole. All the other light is blocked by the front wall around the hole. That's why the outside of the front wall is illuminated by the scene. Imagine it like a rain gauge catching only certain water drops. No drops are aiming for the rain gauge. The drops that end up in it are there only because the rain gauge was placed in the path on which they would have travelled anyway.

One important thing to notice about the pinhole is that if we move the screen, the image plane, nearer to or farther from the hole, the image is always in focus. It just changes size on the image plane, getting smaller as the image plane gets nearer the hole. This is because the rays describing, say, the car, are spreading outward from the hole. The further away the image plane, the more the rays have spread apart. This is just geometry.

Next, let's consider the lens. How does it focus light? Once again, let's leave waves out of our model for now. Let's just allow that a lens bends light paths according to certain rules. If light passes from one medium into another, its angle changes by a certain amount that is a function of the angle of incidence. We don't need to get into the math or Snell's law. A qualitative summary will suffice:

Rays perpendicular to the surface of the lens are not bent. Rays entering a denser medium at an angle are bent, and are bent more the nearer that angle is to parallel to the surface of the lens. Rays entering a less dense medium are bent the other way.
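For anyone who does want the rule in numbers, it is Snell's law, n1 * sin(theta1) = n2 * sin(theta2), with both angles measured from the normal to the surface. The sketch below is an illustration only; the refractive indices of 1.0 for air and 1.5 for glass are typical round values assumed here, and the function name is invented.

```python
import math

def refract_angle_deg(incidence_deg, n_from, n_to):
    """Angle of the refracted ray from the surface normal, in degrees.

    Returns None when the ray is totally internally reflected.
    """
    s = n_from / n_to * math.sin(math.radians(incidence_deg))
    if abs(s) > 1:
        return None
    return math.degrees(math.asin(s))

for incidence in (0, 20, 40, 60, 80):
    inside = refract_angle_deg(incidence, n_from=1.0, n_to=1.5)
    print(f"{incidence:2d} deg in air -> {inside:5.2f} deg in glass, "
          f"bent by {incidence - inside:5.2f} deg")
```

A ray along the normal passes straight through, and the bending grows as the ray comes in closer to grazing the surface.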

The production of an image with a lens is a little more complicated. Let's imagine that we have the same setup, except that instead of a pinhole, we have a larger lens at the same location. But realize that regardless, we already and unavoidably have a pinhole camera at work here. Its aperture is the outside edge of the lens. If we remove the front wall and have only a lens, we will not do a good job forming a clear, contrasty image. This is because we would not be excluding the light that is not passing through the lens. We want to exclude that light first of all. So once again, we have this selection going on, this reduction of the light that is arriving at the screen.

The advantage of a lens over a tiny pinhole is that it allows us to get a focused image with a larger aperture. How does it accomplish this? Imagine the door of our red car. In a very small area of that door, there will be light reflecting out from the imperfections in its surface at a wide variety of angles, not all of which would pass through our tiny pinhole. Since the rays spread out from that small region with distance, the larger our aperture, the more rays pass through, hence more brightness on our screen. However, as we saw earlier, with just a pinhole, as the hole gets larger, the image becomes less focused, because each point on the back screen is receiving light from a larger variety of points in the scene, those seen through the hole from that point. So the light coming from a certain point in the scene is not restricted to arrival at only one point on the screen. And light arriving at any one point on the screen is not restricted to light from only one point in the scene.

The lens serves to focus that unfocused light. Imagine the many rays emanating from one tiny region of the door of the car spreading out in all directions. The larger our lens, the more of them arrive at the surface of the lens. The lens then bends those rays such that they all arrive at a single point in space inside our large camera. But this focus point is not necessarily on the screen! It depends on the angles of the surface of the lens, its curvature, how close it is to the screen, and so on. If we move the car farther away from the lens, the angles of the light rays arriving at the lens surface change so that the focal point moves closer to the lens. If we move the car nearer, the focal point moves deeper into the room.

What we want, ideally, is to capture as much of the light as possible coming from one point in the scene and direct it to exactly one point on the screen. This is the purpose of the lens. Whether it is a pinhole or a lens, the purpose is to make sure that only light from one point in the scene is arriving at only one point on the screen. But because a pinhole is so small, only a small portion of the light emanating from a point in the scene goes through it.

The problem with the lens is that the focal point, as we pointed out earlier, shifts when our car moves: closer to the lens if the car moves further away, deeper into the room if it comes nearer. So with any given placement of the back screen, only parts of the scene at a certain distance are in focus, only those whose focal points happen to coincide with the screen.

What is depth-of-field? Why does it happen?

Remember that with our pinhole, the smaller the hole, the sharper the image is, with the cost of reduced brightness. And also remember that the image is always in focus no matter the distance of the objects in the scene or the back screen. The lens, on the other hand, produces an image that is only focused at a certain distance. Suppose we have the screen, lens, and car arranged such that the car door is in focus on the screen. If we move the screen back a little bit, the image becomes slightly unfocused. The more we move it back, the more unfocused. This is because the light rays are spreading out from the focal point.

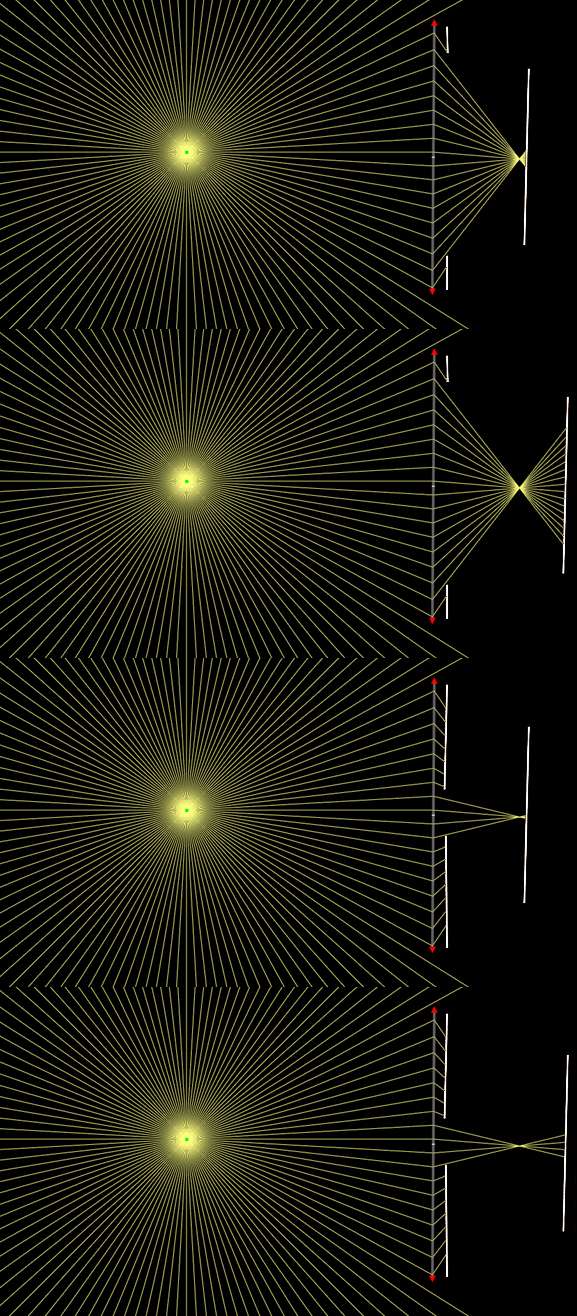

To understand this, it helps to play with a simple simulation. Please try this if it will work on your setup:

link

Or this image might help:

If we move the pencil in the above image away from the lens a bit, the sharp image projected to the right of the lens will move closer to the lens. So you'd need to move your projection screen, film, or sensor closer to the lens to get a sharp image. The closer the pencil is to the lens, the further from the lens the screen needs to be.
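As a rough numerical check of the pencil example, here is a minimal sketch based on the standard thin-lens relation 1/f = 1/d_o + 1/d_i. The 50 mm focal length and the object distances are illustrative assumptions, not values taken from the simulation or the image, and `image_distance` is just a helper name chosen for this sketch.

```python
def image_distance(focal_length, object_distance):
    """Solve the thin-lens equation 1/f = 1/d_o + 1/d_i for d_i.

    Both arguments and the result are in the same unit (metres here).
    """
    if object_distance <= focal_length:
        # Inside the focal length the rays never converge to a real image.
        raise ValueError("object must be farther away than the focal length")
    return 1.0 / (1.0 / focal_length - 1.0 / object_distance)


f = 0.05  # assumed 50 mm lens, expressed in metres
for d_o in (0.5, 1.0, 5.0, 50.0):
    d_i_mm = image_distance(f, d_o) * 1000.0
    print(f"object at {d_o:5.1f} m -> sharp image {d_i_mm:.2f} mm behind the lens")
```

The printed image distances shrink toward the focal length as the object recedes, which is why the screen has to sit closer to the lens for a distant subject and farther from it for a near one.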

Now consider that with a lens combined with a changeable aperture, we are combining the principles of the lens with the pinhole camera. The aperture is just a large pinhole. A large pinhole by itself won't produce a sharp image. The larger the hole, the softer the image. Consider the geometry of many light rays spreading out from a point in the scene and arriving at the opening of a large aperture behind a lens. The rays come to a focal point as usual with a lens, but if restricted by a smaller aperture, the circle of out-of-focus light arriving at the screen when the screen is further away than the focal point will be smaller, meaning the image will be sharper. And the angles at which the light from the focal point to the screen spread out, being restricted, will spread out less at a given distance. So the smaller the aperture, the sharper the image, though the image won't be pin-sharp except when the screen and focal point coincide. But that circle-of-confusion might well be small enough that you can't tell the difference.
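The geometry in this paragraph can be put into numbers with similar triangles: the cone of light leaving the aperture converges to the focal point and then spreads out again, so the blur circle on a misplaced screen scales with the aperture diameter. The sketch below assumes an ideal thin lens with the aperture sitting right at the lens; the function name and the millimetre values are made up for illustration.

```python
def blur_circle_diameter(aperture_diameter, focus_distance, screen_distance):
    """Diameter of the out-of-focus disc on the screen (same unit as the inputs).

    aperture_diameter: width of the opening at the lens
    focus_distance:    distance from the lens to where the rays converge
    screen_distance:   distance from the lens to the screen
    """
    if focus_distance <= 0:
        raise ValueError("focus_distance must be positive")
    # Similar triangles: the cone has width `aperture_diameter` at the lens
    # and width zero at the focal point.
    return aperture_diameter * abs(screen_distance - focus_distance) / focus_distance


focus = 50.0  # assumed: rays converge 50 mm behind the lens
for aperture in (25.0, 3.0):           # a wide and a narrow opening, in mm
    for screen in (50.0, 52.0, 55.0):  # screen at, and beyond, the focal point
        blur = blur_circle_diameter(aperture, focus, screen)
        print(f"aperture {aperture:4.1f} mm, screen {screen:.0f} mm -> blur {blur:.3f} mm")
```

With the screen exactly at the focal point the blur is zero for any aperture; once the screen moves away, the wide opening's blur grows faster than the narrow one's, in proportion to the aperture diameter.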

Using a nice simulator (link), I made these four images (click image below to enlarge if it shows up small) to show wide and narrow aperture with the screen beyond the focal point at two distances to show how, with a larger aperture, the circle of confusion grows more rapidly as the screen is moved away from the lens. The lens here is an ideal lens represented unfortunately as that vertical line segment with arrows on the ends. The point from which all the light rays emanate can be thought of as any point in the scene from which light is being emitted, say a point on the car door.

Because of the wave-like properties of light, other factors come into play like diffraction, which limits sharpness with small apertures, but that is another topic altogether.

"Another problem with aperture is that the extra light that gets through the lens does not necessarily come from the objects depicted in the image. Think of the tapetum lucidum, the shining layer at the back of a cat's eyes that makes them shine in the dark. It reflects light back onto the retina and makes it possible for the cat to see better in the dark. It is apparently the same principle as a larger iris, a way of letting more light through. But is this extra light reflected off the objects we are seeing, or simply ambient light with no specific target?"

What extra light that gets through the lens doesn't come from objects depicted in the image? With a less than ideal lens, like a real lens, you get lens flare and whatnot. But the phenomenon in a cat's eye you are talking about is just light, focused as usual by lens and iris, that happens to miss the rods and cones striking material slightly beyond the rods and cones and reflecting back toward the rods and cones, where it has a second chance of being detected. This increases the sensitivity of their eyes. But some of that light misses the rods and cones even on the rebound and manages to exit their eye, where you see it. Not all the light entering their eye is absorbed by the rods and cones. In our case, the material beyond the rods and cones, instead of reflecting the light back for a second chance, absorbs many of the photons that miss the rods and cones. There is probably an advantage in visual acuity here. Cat eyes probably sacrifice sharpness for light efficiency. Those reflecting photons may reflect at such an angle that they hit rods or cones slightly off the proper location, thus reducing sharpness.

"If that light came from the objects themselves we would be confronted with the previous problem: larger objects would be more hindered by smaller apertures than smaller objects, and should therefore appear relatively darker. "

I don't understand your thinking here at all.

"Here is the problem: the image shown by the projector does not come from the light, even if we assume that once the light goes through the slide it gets colored just like the slide before it finally hits the screen. "

What happens with a projector is as follows. Light comes from a lamp and is sent through a condenser lens and then passes through the film. Let's stop here for a moment. What is happening at the film is once again nothing but restriction of the light. The film is basically casting a shadow. The light coming through the slide does not "get colored". The light before the slide is already composed of photons of many different wavelengths. The slide filters that light. A portion of the slide that allows red light to pass but not blue or green is simply absorbing the blue and green photons at that point while allowing the red ones to pass on through. The slide is clear in some places, allowing all colors of photons, which results in white light being perceived on the screen. After the light passes through the slide, it is focused by a lens onto the screen, which is white to reflect all colors and has a diffuse texture so that it will scatter light in all directions. So the photons that make it through the filter (the slide), are directed by the lens to specific locations on the screen, from which they then are reflected, whereupon your eyes receive some of that light and you see the image.

"In the case of a camera or our eye, we would have to accept the idea that the image is somewhere on or in the lens, and that light from the outside shines through it and projects it on our retina or the sensor area. But how did the image get in the lens if not carried by the light rays?

Okay, maybe it was carried by the light rays reflected off the objects, and once it was in the lens extra light shone through it?"

Why this extra image that gets "in the lens", through which light then shines? No such thing is needed or happens. What we have is just photons passing through air and lenses and being recorded somewhere. The image that results is quite simply a consequence of pure geometry, as we've been describing.

Of course, waves, diffraction, interference, quantum mechanics, and imperfect lenses and so on complicate all this. What does it mean, for example, for a photon to "pass through" a lens from a QM standpoint? That gets more involved. But the essential geometric ideas expressed here should go a long way toward clarifying matters.

The issue of mirrors is simpler still. Just picture light emanating from a point in a scene, reflecting from the mirror according to the law that the angle of incidence equals the angle of reflection, and you are all set.

Why is the image from a mirror right-side-up? Just think about it. You are standing in front of a mirror, very close to it. Light from your forehead is going to bounce from the mirror up near your forehead, not down near your feet. In order for the mirror image to be upside down, the photons would have to be teleported to the other end of the mirror. A photon would leave your forehead, strike the mirror up near your forehead, and then jump down low inside the mirror, and then come out by your feet. Obviously, this doesn't happen! And why is the mirror image, while right-side-up, reversed left-to-right? Same reason. The light from your right hand is hitting the mirror near your right hand and reflecting back out from the same location. The image is directly reversed in a sense, but not by any flipping. Rather, it is reversed in depth because of reflection.
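The "reversed in depth" point can be sketched with coordinates. This is a minimal illustration that assumes a flat mirror lying in the plane z = 0 with the viewer on the positive-z side; the axis convention, the function name and the body positions are all hypothetical.

```python
def mirror_image(point, plane_z=0.0):
    """Apparent position of `point` as seen in a flat mirror at z = plane_z.

    x (left-right) and y (up-down) are unchanged; only the depth z is
    reflected to the other side of the mirror plane.
    """
    x, y, z = point
    return (x, y, 2.0 * plane_z - z)


forehead = (0.0, 1.7, 0.5)    # metres: centred, 1.7 m up, 0.5 m from the mirror
right_hand = (0.4, 1.0, 0.5)  # 0.4 m to one side, 1.0 m up

print(mirror_image(forehead))    # same x and y, depth mirrored
print(mirror_image(right_hand))  # stays on the same side, not swapped across
```

Nothing is flipped up-down or left-right in these coordinates. The image of the hand stays directly opposite the hand; it only looks like the "other" hand because the figure in the mirror faces the opposite way along the depth axis.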

The reason pinhole and lens images are upside down I addressed above. It is all simple geometry and all makes sense if you draw out the light paths.

Phew! That was long! I really hope it helps! -

Hachem

384What extra light that gets through the lens doesn't come from objects depicted in the image? With a less than ideal lens, like a real lens, you get lens flare and whatnot. But the phenomenon in a cat's eye you are talking about is just light, focused as usual by lens and iris, that happens to miss the rods and cones striking material slightly beyond the rods and cones and reflecting back toward the rods and cones, where it has a second chance of being detected. — oysteroid

You have given a crash course in optics and I think that many people will find it very useful; I thank you in their name. I will not try to reply to all the points you have made, it would take many days, if not weeks, and more patience than anyone might have on this forum. Let me first state that you obviously know the subject as it is taught in colleges and universities. My aim is to show that the established theories leave many questions unanswered, among those, the point you bring up in the quote above.

Light that "happens to miss the rods and cones" is reused, enhancing the sensitivity of the eye cells. You will agree with me that where they get reused is, from our point of view, quite random. The point is, light that is not directly reflected by an object can be used to brighten the image of this object.

There is an old technique in analogue photography called pre-flashing. It consists in exposing very briefly the film to unfocused white light, and this has as a consequence that the emulsion as it were awakens and stands to attention. The real story concerns the number of ions and electrons that are activated by this pre-flash, and which get added to those activated by the subsequent shot, but details are unimportant.

What is important is the fact that light that does not come from or is not reflected off an object contributes to its brightness and visibility. That is also the main point I am trying to make when I argue that when we are looking at an object through a telescope, we are seeing the object there where it is, at the moment it is there. That is impossible according to Optics which says that the light reflected or emanating from the object must reach our eyes first. I beg to differ. Telescopes would be of no use whatsoever. -

Hachem

384Move your head much lower and you might see a point of blue from the sky, since you'll be looking up through the hole. The important thing here is to see that any given point in space is only receiving light from one point in the scene, found by tracing a line from that point in the room to that point in the scene. — oysteroid

I honestly do not know what makes us see objects. In my example of a distant star for instance, I do believe that we see it there where it is, at the moment it is there, just like we see a person coming in the distance, and it takes him time to reach us. It takes him time, whereas the light he reflects is so fast (even if its speed is finite) that we don't even have time to blink.

Am I advocating action at a distance? At this point all I can say is that I am not excluding anything, and I do not consider the concept of field any more immune to criticism than the ether was.

I am inclined to agree with your description of each point on the screen receiving light from the scene. Only, I would put the emphasis on the receiving side. I also have to confess that my hypothesis still needs a lot of work and I understand the appeal of ray tracing. -

Hachem

384Interference and Anti-Sound

Destructive interference has apparently already found a practical application in the audio field. One can even find clips on YouTube, made by whiz kids, that demonstrate this peculiar phenomenon.

The problem is that we are in fact dealing with a self-fulfilling prophecy. Here is what Wikipedia has to say on the subject:

"Modern active noise control is generally achieved through the use of analog circuits or digital signal processing. Adaptive algorithms are designed to analyze the waveform of the background aural or nonaural noise, then based on the specific algorithm generate a signal that will either phase shift or invert the polarity of the original signal. This inverted signal (in antiphase) is then amplified and a transducer creates a sound wave directly proportional to the amplitude of the original waveform, creating destructive interference. This effectively reduces the volume of the perceivable noise."

In other words, the so-called anti-noise is in fact a software routine.
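The polarity inversion described in the quoted passage can be illustrated with a toy calculation. This is only a sketch with an idealized, perfectly known sine tone; the frequency, sample rate and variable names are assumptions, and it says nothing about how an adaptive system estimates real noise or what happens acoustically in a room.

```python
import math


def sine_wave(freq_hz, sample_rate, n_samples, amplitude=1.0):
    """Sampled sine tone as a plain list of floats."""
    return [
        amplitude * math.sin(2.0 * math.pi * freq_hz * n / sample_rate)
        for n in range(n_samples)
    ]


noise = sine_wave(440.0, 8000, 80)  # assumed 440 Hz tone, 10 ms at 8 kHz
anti_noise = [-s for s in noise]    # same waveform with inverted polarity

# Sample-by-sample sum of the two signals: they cancel exactly.
residual = [a + b for a, b in zip(noise, anti_noise)]
print("peak residual, perfectly aligned:", max(abs(r) for r in residual))

# If the inverted signal arrives one sample late, cancellation is only partial.
late_anti_noise = [0.0] + anti_noise[:-1]
late_residual = [a + b for a, b in zip(noise, late_anti_noise)]
print("peak residual, one sample late:  ", max(abs(r) for r in late_residual))
```

The second case hints at why timing matters in practice: the inverted signal has to line up with the original for the sum to stay small.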

Theoretically it should be possible to create the antithesis to any sound. The movement of the inner membrane in the ear can be expressed mathematically, and the inverse formula can be calculated. But before that we would have to decipher, in real time, the serial sequence produced by the so-called "hammer" or malleus hitting the anvil or incus. It is this serial sequence, which, again theoretically, can easily be translated into a binary progression, that moves the membrane in a certain way.

As you see, anti-sound is certainly not impossible, only very improbable in the near future. And as for destructive interference? It is still merely a theoretical suggestion.

By the way, optical destructive interference has been known already since the 19th century, with the advent of photography: each time you hold a negative in your hand, you are looking at the product of what could be called destructive interference: white becomes black and vice versa. -

Hachem

384What happens with a projector is as follows. Light comes from a lamp and is sent through a condenser lens and then passes through the film. Let's stop here for a moment. What is happening at the film is once again nothing but restriction of the light. The film is basically casting a shadow. The light coming through the slide does not "get colored". The light before the slide is already composed of photons of many different wavelengths — oysteroid

Again, I cannot fault your knowledge of Optics. I think ray tracing, unlike wave theory, is very intuitive, and both do a great job at explaining what we see. It is very difficult to falsify these theories based on immediate perception. We can see light rays being bent by lenses, crossing each other at the focal point and then creating a (real) image on a screen. That is certainly the strength of Optics in all its versions, geometric, physical and quantum.

My issue with Optics is that it does not explain vision, and that therefore what we see is more complicated than the optical theories want us to believe. I will not repeat my arguments about distant objects and their relation to the speed of light but let me mention a very simple argument concerning vision.

We can see illuminated objects even if, at least apparently, no light is reflected into our eyes. We can stand in complete darkness and spy on people.

Huygens' Principle speaks about wavelets that would somehow keep the main wave going. In other words, he was trying to take into account a very fundamental and elementary empirical fact: light beams when they have a certain direction do not disperse sideways indiscriminately. The more they disperse, with distance, the more light loses intensity. Therefore, light does not go on indefinitely but ceases altogether after a while. And we are now expected to believe that light coming from trillions of kilometers away can still somehow reach our eyes? For that we have to accept what every scientist considers anathema: perpetuum mobile. An electric field creates a magnetic field that creates an electric field... ad infinitum.

If light could go on indefinitely, why does it stop when going through matter? Friction? Sure, that is as good an explanation as any. But tell me, suppose you shine a powerful light on earth, would it be visible from the moon? Why would it be, if it should have stopped after a few kilometers? Did it somehow regenerate during its travel to the moon?

Back to Huygens. If you suppose that somehow a light beam directed away from us, or an illuminated scene we watch from a dark and hidden corner, reaches our eyes anyway, then you must accept the principle that it will remain visible from any distance. Just like the beam is supposed to go on indefinitely.

But then, that would contradict Huygens' Principle in which the wavelets contribute to the sustenance of the main beam. If they have to use energy to propagate in all directions, whatever the direction of the main beam, then how could light go on indefinitely? Where would the energy come from?

These are some of the reasons why I think Optics, and with it the theory of the dual nature of light, are lacking in explanatory power.

A last, but certainly not least, argument is the fact that we can cross immense distances without having to move from our place. Telescopes, and microscopes, seem not only to manipulate light, they manipulate space. Maybe all we are seeing is an epiphenomenon, how light reacts in a spatial field distorted by lenses.

I will be the first to admit that this is a very far-fetched solution and I do not know how seriously we should take it. Still, that is certainly something to consider if we accept the fact that telescopes show us objects where they are, at the moment they are there.

oysteroid

27Light that "happens to miss the rods and cones" is reused, enhancing the sensitivity of the eye cells. You will agree with me that where they get reused is, from our point of view, quite random. The point is, light that is not directly reflected by an object can be used to brighten the image of this object.

I am not sure how you are picturing this exactly. Let's follow a photon into a cat eye, one that misses a photoreceptor and reaches the tapetum lucidum. When it reflects, it has a good chance of striking a rod, basically from behind, very near where it would have been recorded had it collided with a rod on the first pass. But since there is a little distance, though it is very small, between the tapetum lucidum and the photoreceptor cells, the photon might strike a photoreceptor at a very slightly different location on the retina than it would have if it had been recorded on the first pass. So, I would say that it is a tiny bit random, hence the slight loss of sharpness, not "quite random". I am wondering if you are imagining the photon reflecting from the back of the eye out further into the eye and then reflecting back again to the retina where it has another chance of being recorded. In fact, some photons will do that! But they don't offer any advantage in useful image formation. On the contrary, they just fog the image a bit. It is like the fogging of an image that you get with a camera when the sun is shining directly into the aperture of your lens. The light bounces between surfaces of your lens elements and maybe your digital sensor too and fogs your image, reducing contrast.

I don't see that there is any particular mystery here. It is just a question of where photons are going.

There is an old technique in analogue photography called pre-flashing. It consists in exposing very briefly the film to unfocused white light, and this has as a consequence that the emulsion as it were awakens and stands to attention. The real story concerns the number of ions and electrons that are activated by this pre-flash, and which get added to those activated by the subsequent shot, but details are unimportant.

On pre-flashing, or pre-exposure, from The Negative, by Ansel Adams, page 119:

"When photographing a subject of high contrast, the normal procedure is to use contracted development to help control the negative scale, but in some cases the reduced contrast, especially in the lower values, is not acceptable. It is helpful in such cases to raise the exposure of the dark (low luminance) subject values. This effect can be achieved using pre-exposure of the negative, a simple method of reinforcing low values.

Pre-exposure involves giving the negative a first exposure to a uniform illumination placed on a chosen low zone, after which the normal exposure of the subject is given. The pre-exposure serves to bring the entire negative up to the threshold, or moderately above, so that it is sensitized to very small additional levels of exposure. It will then retain detail in deep shadow values that would otherwise fall on or below the threshold and therefore not record."

Later, on page 122, Adams says:

"Pre-exposure is entirely satisfactory for supporting small areas of deep shadow, but it should be used carefully if the deep-shadow areas are large. Under these conditions it can appear as a false tonality, and large shadow areas of uniform value can take on the appearance of fog."

From a forum on the web (https://www.photrio.com/forum/index.php?threads/film-pre-exposure-post-exposure.41367/):

"...there's a certain amount of exposure that accumulates before you actually get an image. I'll use "units" of light as simple example. You expose film or paper to 10 units of light. You develop and you get Nothing. It's as if you didn't expose it at all. But if you expose the film or paper to 11 units of light, you get an image. Ten units is the threshold you have to pass to get something you can see.

So with pre-flashing, what you're doing is exposing the film (or paper) to that 10 units of light that's just under the threshold, so that any additional amount, no matter how little you add, will register. That's how you get a usable image in the low-density areas, because you've already built up that base that otherwise doesn't register.

It seems to me (in theory anyway) that post-flashing would have the same effect. But I've never tried it and I've never read a study where someone tried one vs. the other. It would be an interesting project to try. "

What about this do you find particularly mysterious?

What is important is the fact that light that does not come from or is not reflected off an object contributes to its brightness and visibility.

Imagine that you have film covered with little cells that fill with photons. These cells are black. But if they fill up with photons to a certain point, they start to lighten in increments. Let's say that 110 photons will turn them completely white. But just one photon won't start the brightening process. They can hold ten photons before starting to change color. One more will push them over the threshold. So there is a 100 photon range between white and black. There is no visible difference between a cell with 0 photons and a cell with 9, while there is a visible difference between 10 and 11 photons. If we expose this film to light from a scene, the bright areas will easily push many cells in the corresponding region of the film over that threshold and we will see their relative differences in brightness. But darker areas that emit few photons might not. One little area might get 2 photons per cell. Another will get 9. But since both of these are still below the threshold, when we look at the exposed film, we will see no difference between these areas, even though they had different luminances in the real scene. However, if we start by putting ten photons into all the cells right away, the situation changes. They are all already right at the threshold, so even one photon will cause a visible change. Now, those two regions will have cells with 12 photons and 19 photons, respectively, and we will be able to discern a difference between them. So we have shadow detail where we otherwise might not have.
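The photon-cell picture above is easy to turn into a few lines of code. This is a sketch of the toy model only, using the same made-up numbers (a threshold of 10 photons, full white at 110); the constant and function names are chosen for this example, and real emulsions do not respond in such clean steps.

```python
THRESHOLD = 10    # photons a cell absorbs before it starts to lighten
FULL_WHITE = 110  # photons at which a cell is completely white


def visible_level(photons):
    """Brightness steps above black (0..100) for a cell holding `photons`."""
    return max(0, min(photons, FULL_WHITE) - THRESHOLD)


shadow_a, shadow_b = 2, 9  # photons per cell from two dark areas of the scene
pre_exposure = 10          # uniform pre-flash that brings every cell to the threshold

# Without pre-exposure both areas stay below the threshold and look identical.
print(visible_level(shadow_a), visible_level(shadow_b))  # 0 0

# With pre-exposure the same scene photons now register as different tones.
print(visible_level(pre_exposure + shadow_a),
      visible_level(pre_exposure + shadow_b))            # 2 9
```

The pre-flash adds the same amount everywhere, so it carries no picture information of its own; it only shifts the dark areas into the range where differences that were already present in the scene light become recordable.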

There is a cost, however. The problem is noise. The ten units below the threshold would normally have cut off the bottom of the scale such that much random, low-level noise from various sources would not be visible on the film. With this pre-exposure, that same noise will be visible. Suppose some area of the film, even with the pre-exposure, still receives no photons from the scene because that area is pitch dark. Without noise, all the cells in that region would have ten photons and no differences would be seen between them. But the noise, which is equivalent to random extra photons here and there, will result in some of those cells holding 11 photons, some 13, some 10, and so on. This will result in visible brightness variations at small scales that are false, or do not represent actual differences in the scene. Seen from a distance, since the noise is fairly evenly distributed at larger scales, it will appear as fog.

That is also the main point I am trying to make when I argue that when we are looking at an object through a telescope, we are seeing the object there where it is, at the moment it is there. That is impossible according to Optics which says that the light reflected or emanating from the object must reach our eyes first. I beg to differ. Telescopes would be of no use whatsoever.

I am afraid I don't follow your thinking on instant light propagation at all and I don't see how it relates to cat eyes and pre-exposure of film. There is a mountain of evidence for the fact that it takes time for light to travel and that when we see distant objects, we are seeing how they were in the past. We can actually see this. Distant galaxies appear younger the more distant they are, because their light left them longer ago. We can also measure the speed of light with various kinds of experiments. We also have delays in the signals sent using EM radiation, or photons, to and from our space probes and whatnot. There is much consilience here, which is a strong indicator that we are on the right track.

Why would telescopes have no use if they don't allow us to see things as they presently are? It is much better to see Pluto as it was hours ago than to not see it at all! Surely it is good to be able to hear someone from across the room even though it takes time for the waves in the air to reach my ear!
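To put a number on "hours ago", here is a back-of-the-envelope sketch of the light travel time. The speed of light and the astronomical unit are defined constants; the distances to the Moon and to Pluto are rounded average values assumed for illustration, and both vary over time.

```python
C = 299_792_458.0       # speed of light in m/s (exact by definition)
AU = 149_597_870_700.0  # astronomical unit in m (exact by definition)


def light_delay_seconds(distance_m):
    """Time light needs to cover `distance_m` in vacuum."""
    return distance_m / C


moon_m = 384_400e3    # assumed average Earth-Moon distance
pluto_m = 39.5 * AU   # assumed typical Sun-Pluto distance

print(f"Moon:  {light_delay_seconds(moon_m):.2f} s")
print(f"Pluto: {light_delay_seconds(pluto_m) / 3600.0:.1f} h")
```

The Moon comes out at a little over a second and Pluto at several hours, the same order of delay that shows up in radio signals exchanged with space probes.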

I didn't address this earlier:

One of the most obvious but still unexplained phenomenon is that objects appear smaller with distance.

This is easy to explain. Once again, just consider the geometry. This says all that needs to be said on the matter:

link

Another:

link
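The same geometry can be written as a one-line formula: an object of height h seen face-on from distance d subtends an angle of 2·atan(h / 2d). The sketch below uses an assumed 1.8 m tall person and arbitrary distances; `angular_size_deg` is a name invented for this example.

```python
import math


def angular_size_deg(height, distance):
    """Angle (in degrees) subtended by an object of `height` at `distance`."""
    if distance <= 0:
        raise ValueError("distance must be positive")
    return math.degrees(2.0 * math.atan(height / (2.0 * distance)))


person = 1.8  # metres, illustrative
for d in (2.0, 10.0, 100.0, 1000.0):
    print(f"{d:7.1f} m away -> {angular_size_deg(person, d):7.3f} degrees")
```

For distances much larger than the object, the angle falls off almost exactly in proportion to 1/d, so ten times farther away means roughly one tenth the apparent size.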

Why complicate things, trying to find mystery where there isn't any? There are plenty of deep and real mysteries, consciousness for example, existence itself for another. -

Hachem

384I am afraid I don't follow your thinking on instant light propagation at all and I don't see how it relates to cat eyes and pre-exposure of film. There is a mountain of evidence for the fact that it takes time for light to travel and that when we see distant objects, we are seeing how they were in the past. We can actually see this — oysteroid

That is I think the central point of this discussion. Though, if you have read my posts carefully you will know that I do not believe in instant propagation of light. My post on femtophotography makes it clear that I consider propagation of light as something that happens in time.

I have the impression that instead of answering my objections you find it sufficient to refer to established and accepted theories. The point you bring up, "when we see distant objects, we are seeing how they were in the past," is what I am denying. I wish you could explain to me how we can see somebody, or something, from a very large distance, and that person or object only arrives hours after we have made the observation. If we were observing the past, how could there ever be such a discrepancy between past and present? It takes only a few moments for light to reach our eyes, while the object of our observation has to cross the distance at whatever speed is available.

Hachem

384This is easy to explain. Once again, just consider the geometry. This says all that needs to be said on the matte — oysteroid

To be honest, I find your objections quite inadequate. You make it look like I am at a loss concerning the geometric issue and you simply ignore the reason why I pose the problem. It is easy to refer to scientific authority as being the final judge in History, but please remember that Aristotle was the sole source of wisdom for 2000 years, and that the concept of the ether was considered valid for longer than the concept of field has been. The fact that the majority or even all the scientists today agree on something is not a guarantee of its validity.

As far as mysteries are concerned, I agree with you about the importance of say, consciousness, but that does not mean that we should consider the Book of Nature as already read.

edit: I could not find Einstein's quote about the error of thinking that we already know what light is. -

Hachem

384Why would telescopes have no use if they don't allow us to see things as they presently are? It is much better to see Pluto as it was hours ago than to not see it at all! Surely it is good to be able hear someone from across the room even though it takes time for the waves in the air to reach my ear! — oysteroid

Tell it to the military of all nuclear (super) powers that are counting on detecting a hostile missile enough time in advance to react before they are destroyed.

Concerning the fact that it takes time for sound to reach your ears, I can't recall ever claiming that it was not true. -

Hachem

384The larger the aperture, the less the light on any given point on the screen is restricted to just one part of the scene. So, being less restricted, there is more light, but less sharpness. — oysteroid

I agree with this description of the way the amount of light affects visibility. It also seems reasonable to me to assume that a larger aperture will mean a brighter image. Where I depart from your view is the role this ambient light plays in the forming of images.

Let me take the same example as before: a spy in the dark watching people unseen. If he decides to take pictures of the (illuminated) scene, he will have to take into account not his own location, in darkness, but the light falling on the people, the incident light. He won't be able to get any closer to take a reading of reflected light, so incident light, with the proper light meter, will have to do.

Here is the crux: even though he is in complete darkness, he will have to take into account the light illuminating the scene, and adjust the shutter and aperture accordingly. His camera is also in complete darkness, but still, closing the aperture will result in darker images that would have to be compensated for with slower shutter speeds, and vice versa.

The question now is, why does he need to do that if no light is reaching him or his camera? Or is this assumption wrong? Can we say that the images registered by his eyes and through his camera have been somehow projected by light rays on his retina and the sensor area? If that is the case, how could he remain invisible to the other people?

Hachem

Candle Light

Here is an experiment I wish I could do myself, but I am afraid my apartment is not big enough.

Choose a large closed space with no windows, and light a candle at one end of the room. Hold or hang a mirror at the other end, and see if the mirror reflects the light of the candle.

The distance between the candle and the mirror must be of course large enough, and the room must be dark enough, that without the candle the mirror remains invisible.

There are as far as I can see only two possibilities, both very interesting.

1) No image on the mirror. This would be very improbable, after all, if we can see the candle, why would the mirror not reflect it?

2) The candle is reflected on the mirror. But then, how is the image projected through space onto the mirror through the dark? But then, how are stars in the sky visible to the naked eye?

edit: the mirror has of course to remain invisible even after the candle has been lit, at least from where the candle is standing, even if that would not hold for the reflection of the light.

Hachem

The larger the aperture, the less the light on any given point on the screen is restricted to just one part of the scene. So, being less restricted, there is more light, but less sharpness. — oysteroid

The second half of the quote is even more interesting, and concerns what I have called the Aperture Problem, and which you have so conveniently ignored.

Your idea is ambient light coming from all directions, and rays reflected by the objects, also coming from all directions, contribute to the sharpness of the picture. I find it a very strange argument, seeing as the aperture, as we both agree, does not have any influence on the field of view, however strange that might seem.

Let us suppose that we are filming in a closed space, a box, with measuring marks on the bottom through the whole length or width. Let us now take different pictures with the camera as close to the box as possible to make sure only light within the box is reflected back. We will use different apertures, and appropriate lighting, to see if the depth of field changes.

According to your explanations, there should not be any difference between the different shots, at least, not in sharpness and depth of field, if of course we have adjusted the shutter speed accordingly.

After all, with each aperture we are receiving light back from the same enclosure. In fact, with adjusted shutter speeds, we are receiving the same amount of light back.

Add to that the fact that light rays are supposed to cross each other at the focal point, and we have no reason left to think that the image should in any way be altered by different apertures.

I would be very interested in your opinion as to why, under such circumstances, we would still witness a change in sharpness and depth of field with different apertures.
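
For reference, here is a minimal sketch of what the textbook thin-lens model predicts for the box experiment above. It is the model under dispute in this thread, not a measurement, and the 50 mm focal length and the two mark distances are made-up values chosen only for illustration. Note that the exposure time does not appear anywhere in the blur formula, so in this model adjusting the shutter speed equalizes brightness but not sharpness.

```python
def image_distance(focal_mm, object_mm):
    """Thin-lens equation 1/f = 1/s + 1/v, solved for the image distance v."""
    return focal_mm * object_mm / (object_mm - focal_mm)


def blur_diameter(focal_mm, f_number, focus_mm, object_mm):
    """Blur-disc diameter on the sensor for a point at object_mm when the
    lens is focused at focus_mm (similar-triangles result of the model)."""
    aperture_mm = focal_mm / f_number                 # diameter of the opening
    sensor_mm = image_distance(focal_mm, focus_mm)    # where the sensor sits
    sharp_mm = image_distance(focal_mm, object_mm)    # where this point would be sharp
    return aperture_mm * abs(sharp_mm - sensor_mm) / sharp_mm


# Hypothetical box: 50 mm lens focused on the mark at 1000 mm,
# looking at another mark at 1500 mm, at three apertures.
for n in (2.8, 8, 22):
    print(f"f/{n}: blur = {blur_diameter(50, n, 1000, 1500):.3f} mm")
```

In this sketch the blur disc scales in direct proportion to the aperture diameter, which is how the conventional account ties depth of field to the f-number while leaving the field of view unchanged.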

Hachem

Now, just move your head around while looking through the hole. If the hole is small enough, you only see a small point of color. Suppose your head is in such a position that you see a point of red on the door of a parked car. Move your head much lower and you might see a point of blue from the sky, — oysteroid

I would like to present you with another problem related to the Aperture Problem.

In your description of image formation in a camera obscura, you give the example of a person looking through the pinhole and seeing different parts of the scene according to his position. I have no problem whatsoever with such a description because it starts with the receiver, the eye, and not the sender, the light source.

I will just mention in passing that the size of the hole certainly determines how much the eye can see.

Imagine now that our spy not only is hidden in a dark corner, but that he is also in a dark closet with a very small peephole, a camera obscura as it were.

We may assume that no light, as far as we can perceive, reaches the closet nor the peephole, leaving the spy confident in his hiding place, even though he can still see the people he is spying on some distance away in a bright place.

Obviously, when putting his eye to the hole, he will see the scene upright because it is projected upside down on his retina, as it would be on the back wall.

That is in fact my question. Assuming the scene is reflected on the back wall of the closet (upside down, but that is unimportant for now), how would you explain it? Did the light rays stop illuminating the part of the space closest to the closet just to start again when they hit the wall?

Please let me know what you think of this.

edit: obviously if the peephole is too small there will be no visible reflection on the wall, but since we are not interested in sharp images, all we need is a bright spot as an acceptable image of the scene.
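
As a point of comparison, the conventional straight-line account of the closet can be sketched in a few lines. This is only the standard pinhole geometry, which is exactly what the question above challenges; the function name and the distances are hypothetical. A point at some height above the axis of the hole lands on the back wall at the opposite side of the axis, scaled by the ratio of the two distances.

```python
def pinhole_project(height_m, scene_distance_m, wall_distance_m):
    """Height on the back wall of a scene point, relative to the axis of the
    hole, assuming light travels in a straight line through the pinhole."""
    # The minus sign is the inversion: points above the axis land below it.
    return -height_m * wall_distance_m / scene_distance_m


# Hypothetical numbers: a head 1.0 m above the axis, 5.0 m from the hole,
# back wall 0.5 m behind the hole.
print(pinhole_project(1.0, 5.0, 0.5))
```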

oysteroid

Your idea is ambient light coming from all directions, and rays reflected by the objects, also coming from all directions, contribute to the sharpness of the picture.

That is not my idea. You've misunderstood me. Read what I wrote again and think about it carefully, visualizing what's going on geometrically.

Also, in my last post, the images near the end didn't work for some reason, so I just changed them to links. If you didn't follow those URLs earlier, click those links and see the diagrams that show why distant objects appear smaller.

This is becoming too time-consuming. And I anticipate little possibility of progress given the agenda that you are clearly pursuing.

I assure you, none of these things you are seeing here as problems are real problems. This stuff is well-understood and highly simulable.

I'm sorry, but I'll not be contributing to this thread any further.

Hachem

I will take it as meaning that you have no further arguments to present except the expectation that everybody should accept the rules of Optics as they are presented in textbooks. I will indeed continue pursuing my own agenda. You came out very strong, but in the end all you can refer to is the authority of science. You could of course very well be on the right track, and I could be all wrong, but your attitude is not really convincing and does you no honor.

Hachem

Where is my image now?

Imagine you are using a ray box like this gentleman, with 5 beams that you pass through a converging lens. Where do you think an image of those 5 beams will be formed?

At the focal point of course?

You sure about that? It seems to me that we have to go beyond the focal point to see anything resembling individual beams.

Imagine you put a screen exactly at the focal point, I think what you will get is, literally, a burning point, the literal translation of focal point in Dutch and German.

If you wanted an image of all 5 beams, and of their spatial relationships, you would have to go beyond the focal point. And the farther you go, the larger the image will be.

Should the image be at its sharpest at the focal point? Very doubtful, when all beams are inextricably mixed with each other. In fact, if you look carefully at the clip you will see that there is a moment where all 5 beams are clearly distinguishable. I would say that this is the smallest and sharpest image of all five beams, even if it lies quite a (relative) distance from the focal point. This distance will of course depend on the resolution of your optical devices.

That is also the position given usually by ray tracing. So, what is the use of the focal point?

I would say that the view of five beams coming out of a ray box should convince anybody of the falsity of ray tracing.

Ray tracing would take each beam and consider it as propagating rays in all directions through the lens, and it would be these rays, coming from a single point that would cross each other not at the focal point, but somewhere before or after, and create a virtual or real image.

In a way, ray tracing is right. There can be no image of an object at the focal point, only the melting together of all rays into one bright spot. It is only after the rays start distancing themselves from each other that an image is formed.

But if we look at the video clip we clearly see that each beam goes its own way, even if they all meet at the focal point. There is no propagation of light in all directions from each point of the source.
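
To make the textbook claim concrete, here is a minimal sketch of the thin-lens equation that ray tracing relies on. It only restates the conventional model discussed above; the 100 mm focal length and the 300 mm object distance are assumed values, not taken from the clip. In this model parallel beams (an object infinitely far away) meet exactly at the focal point, while light from an object at a finite distance comes together farther back, which is where the model places the image.

```python
import math


def image_distance(focal_mm, object_mm):
    """Thin-lens equation 1/f = 1/s + 1/v, solved for v (object beyond f)."""
    if math.isinf(object_mm):
        return focal_mm          # parallel beams converge at the focal point
    return 1.0 / (1.0 / focal_mm - 1.0 / object_mm)


def magnification(focal_mm, object_mm):
    """Lateral magnification -v/s; the negative sign means an inverted image."""
    return -image_distance(focal_mm, object_mm) / object_mm


# Assumed values: a 100 mm lens, parallel beams versus an object 300 mm away.
print("parallel beams meet at", image_distance(100, math.inf), "mm")
print("object at 300 mm is imaged at", image_distance(100, 300), "mm")
print("magnification:", magnification(100, 300))
```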

Hachem

Where is my image now? (2)

Instead of beams, imagine that the ray box was housing five colored windows whose reflections did not reach beyond a few centimeters. What would the image of the ray box have looked like?

I will tell you. We would have had an inverted image of the ray box with its five bright windows. The question is, would the images of these windows meet at a single point, like the beams do? Or would the whole front surface of the ray box be projected on a screen?

My point is that the beams attract all the attention and make us forget that it is the whole scene we should be considering.

It is a little bit like the use of a magnifier to start a fire by focusing the sun onto a single point. The accepted wisdom is that the point is in fact an image of the sun. I find this very improbable. It is as little an image of the sun as the bright spot formed by the ray box is an image of the 5 beams.

If you want an image of the sun you would have to go beyond the focal point.

There is a hidden hint here somewhere. If we want an image of the lights in the ray box, we need to go beyond the focal point, somewhere where the image of the whole ray box is projected. And if we want an image of the ray box, we would need to somehow dim the beams, just like we use a gray filter to be able to look at the sun.

The hidden hint seems to me to be the following: the beams of light do not constitute the image of the objects, but only an image of themselves. We have to go beyond the beams to be able to see the objects or their images.

Hachem

The Aperture Problem (2)

The video clip mentioned earlier is a perfect illustration of the Aperture Problem (AP). Imagine putting a diaphragm right after the convex lens, and slowly closing it. It is immediately obvious that rays will be cut off and fewer than 5 beams will be projected on the screen.

Such a mutilation of the original scene would of course be very noticeable, and the fact that it does not happen with cameras teaches us something that hardly gets any attention in textbooks: the aperture has to be placed at or very close to the focal point. That is the only way a closed diaphragm cannot have any influence on the field of view.

My question therefore remains: how can a smaller aperture have any influence on the depth of field?

The accepted explanation is that superfluous rays are weeded out by the diaphragm, thereby enhancing the sharpness of the image.

I also found a preposterous explanation based on the degree to which rays diverge from each other around the focal point. https://www.youtube.com/watch?v=6XMk9jFcnlA

These explanations all sound logical when one accepts the rules of ray tracing. But only then.

Hachem

Why does ray tracing work (even if it is wrong)?
