Wosret

What is consciousness? What does it mean, and entail? What are its characteristics, prerequisites, function, substance, or importance?

Consciousness can be seen as wakefulness, or as intentional, purposeful, internally driven activity arising through metabolic processes, emotional impulses, imagination, reasoned motivation, and the like: a kind of alertness, a reactivity to the environment. It can also be thought of as a state of knowledge, or awareness: what one comprehends about oneself or the world. Hence "raising one's consciousness", or "states of consciousness" based on quasi-mystical, ideological, or insight-based comprehension or mind vision.

What is it? Is it functional, the cogs and wheels moving just right at one level emerging into consciousness at the other? A univocal cosm of consciousness, from the micro to the macro, existing at all stages or levels? A narrative reflection, or illusion?

What's it made of? Parts? Information? Sensory immersive experience? Fields? Plasma? Ectoplasm?

Tell me all about it, I'm a zombie, and only pretend to understand.

Marchesk

Ever seen Terminator? Remember the scene where you get to see things through the eyes of cyborg Arnie that includes the normal visual field plus various printouts labeling objects of importance for the machine's mission? That was the terminator's consciousness.

I am very curious to hear a zombie's interpretation of that scene. Namely, who else could see the printed text on the visual field, and where did it reside?

Wosret

That sounds like an illusion. You are seeing through the Terminator's eyes, as it were, and in doing so project a conscious experience behind the visual field, as you're having one. This doesn't imply that the Terminator is actually having one, and since it's a movie, we know that that's just a camera, with added printouts designed precisely to give that illusion.

Everyone who watched the movie, or designed the scene, saw the illusion. It does not, however, work on me, as I do not have an experiential visual field; I merely behave as if I do.

Wosret

I don't understand it, I merely behave as if I do. As for reading a book from a character's point of view, you may have guessed this by now, but it means nothing to me. I behave as if it does, and can functionally operate within a conversation about it, and completely convincingly seem to understand it and find it meaningful, but this will be entirely behavioral, without any internal experience, apprehension, or subjectivity.

Harry Hindu

Consciousness is an information architecture. It is a representation of our attention and what we are attending to at any given moment.

Consciousness doesn't seem to be always intentional, or possess intention. There are many things that appear in consciousness that weren't preceded by an intention. Intention seems to arise as a response to certain experiences. For instance, my intention to live only arises when my life is threatened. My intention to seek pleasure only arises when I'm feeling down or suffering to some extent. So it seems that the only persistent part of consciousness is its awareness. When we are conscious, we are aware, and what we are aware of, we can respond to with intent.

Mongrel

"Tell me all about it, I'm a zombie, and only pretend to understand." (Wosret)

So with you, there is no understanding. Why would a thing which can't understand... ask for an explanation? I guess I would be posing that question to the wall. You would only pretend to understand it. It's kind of lonely to ask the wall questions. They just bounce back at me.

Marchesk

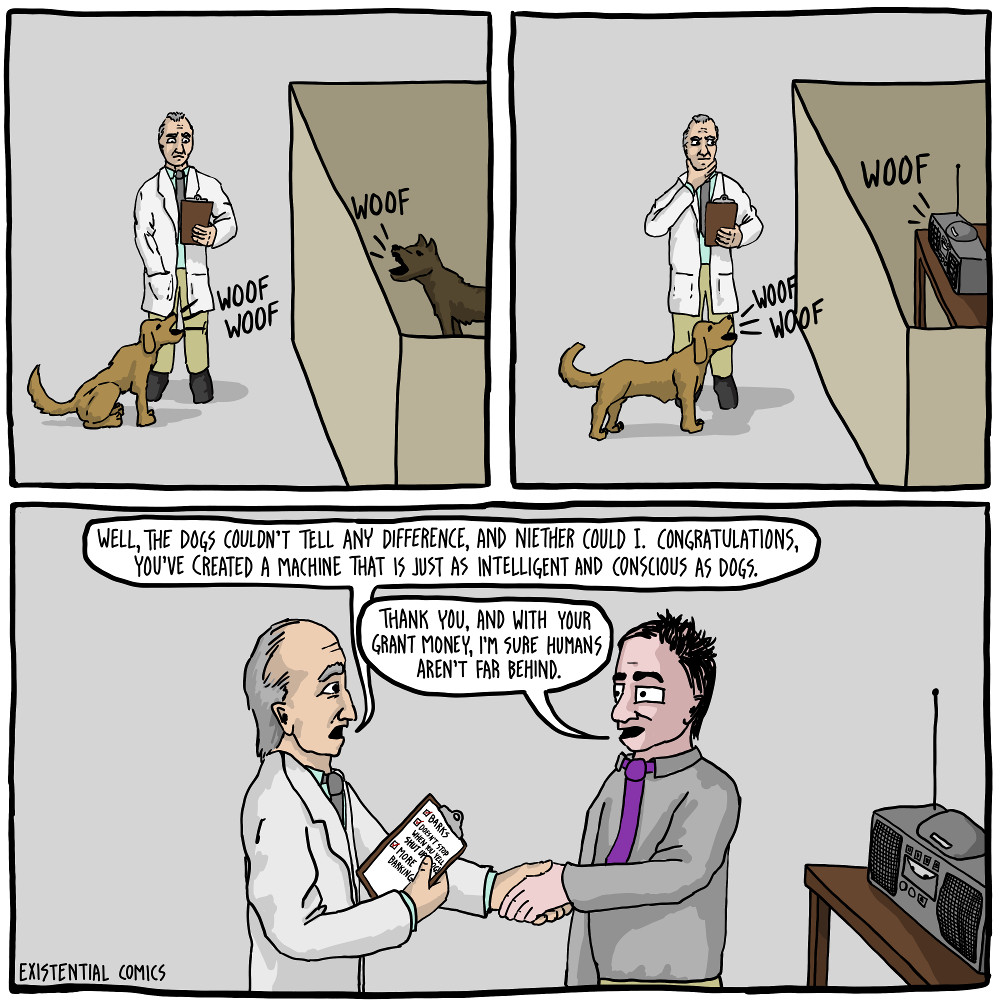

4.6kThe problem for p-zombies is accounting for behavior which requires an understanding of first person. Perhaps Chalmers and those who agree with his argument might claim that such behavior does not actually require such understanding. Then there must be some other explanation for how a system can behave as if it understands first person, when it can't, in all possible cases.

Marchesk

4.6kThe problem for p-zombies is accounting for behavior which requires an understanding of first person. Perhaps Chalmers and those who agree with his argument might claim that such behavior does not actually require such understanding. Then there must be some other explanation for how a system can behave as if it understands first person, when it can't, in all possible cases.

Of course it's easy enough to fake understanding in some cases, and we can write software that does this now, but it won't succeed in all cases. Indeed, nobody is convinced that Siri or Watson, or some clever bot, is conscious. But there are AIs from fiction which would be able to behave convincingly, and then we would have to ask ourselves if it makes sense to think they are p-zombies.

You could have a potential p-zombie read a story with a novel twist on first person and ask them all sorts of questions. We know that humans, if they found the story interesting, would discuss and debate it at length. But how would a p-zombie make sense of it?

Mongrel

"Because that's what a thing that was interested, and could understand would do. Pretending to understand is often considered polite, walls aren't polite." (Wosret)

So it has some sort of software? Imagine you're walking through a garden and you come upon a statue. As you walk toward it you're startled by a voice. It seems to be coming from the statue. You peek behind it and laugh because there's a sound system strapped to the back of it. You realize there must be a motion sensor somewhere. As you listen, it tells you that it's just a statue. It affirms that you are also a statue with a sound system and a motion sensor. Then it asks you what you think about that.

I think I'd have various thoughts... how contemporary art installations aren't really my cup of tea and stuff like that. But would I express my thoughts to the statue? I guess if you were there too and I was trying to make you laugh.

So if I respond to the OP, that shows that I don't believe the last sentence. Can I prove it's wrong? No. I can't prove to myself that I'm conscious. I can't prove what I can't deny.

Wosret

My thought process for making this thread was that we ought to have threads about the key philosophical issues, like consciousness, and what it is. I ended up posing it all as questions, asking for people's opinions about what they thought consciousness was, and for this reason, just thought that I'd add "before I'm a zombie" at the end for shits and giggles. Funnily enough, that's what got all of the attention, so I just ran with it.

My own position is not that we're all zombies, in case you thought that's what I meant.

Wosret

"You could have a potential p-zombie read a story with a novel twist on first person and ask them all sorts of questions. We know that humans, if they found the story interesting, would discuss and debate it at length. But how would a p-zombie make sense of it?" (Marchesk)

In fiction they often go out of the way to remind us that the A.I. is an A.I. by having it, often nonsensically, be unable to grasp some emotional experience. Like the Terminator claiming to have extensive databases on human anatomy and function for medical purposes, and then five minutes later being all like "why is your face leaking? Crying is a mystery to me!". This is problematic in two senses: first, it is incongruent with its claims of extensive anatomical knowledge, which would surely exceed even seasoned professionals' in detail and accuracy because of memory; and secondly, the question itself suggests an interest in, and recognition of, the face leaking as significant. So ultimately it doesn't work to remind us that the A.I. isn't conscious so much as demonstrate an odd kind of robot ignorance (which is what sci-fi usually resorts to when attempting to display this), while artificially and implausibly inserting a gaping hole in the A.I.'s database.

Humans that found the story interesting may discuss it at length if they felt comfortable enough, but may otherwise wish to say very little, because of being overwhelmed by the pressure. Whereas some that didn't find it particularly interesting at all may find lots to say about it, because they talk a lot under pressure, or simply like attention and the opportunity to be listened to, or have any of a million other motivations that may make people say much or little, regardless of interest. Much like a lie detector, such a test is arbitrary.

Modern A.I.s have no extensive memory, or parsing of natural language, and are easy to detect by asking them questions about what has been said already, or meta questions, seeking specific, non-general responses. If an A.I. did master natural language (and it is only a matter of time before we design one that does), I don't see what kind of test one could design to decide whether or not it was truly conscious -- and I don't think that the artificial, implausible movie scenarios give any answers towards this.

Wosret

Maybe the concept is interesting. Maybe because it was the only thing resembling a positive opinion or position I put forward, and people prefer to address positive positions rather than put them forward. Maybe because the first person decided to focus on that, and I obliged, it just naturally became focused on that, but it could have gone many other ways, depending on how the first reply went. Who knows.

Marchesk

"Modern A.I.s have no extensive memory, or parsing of natural language, and are easy to detect by asking them questions about what has been said already, or meta questions, seeking specific, non-general responses. If an A.I. did master natural language (and it is only a matter of time before we design one that does), I don't see what kind of test one could design to decide whether or not it was truly conscious -- and I don't think that the artificial, implausible movie scenarios give any answers towards this." (Wosret)

There are two recent AI movies that do a good job with this sort of thing. One is 'Her' and the other is 'Ex Machina'. In the second one, a programmer at a big software company wins a prize to become bait in Turing testing the secret robot the company's CEO has been building. It's a rather ingenious scheme as it has several levels of deception built into the plot. In 'Her', it's easy enough at first to think that the operating system Samantha, as it names itself, is just a futuristic Siri, but it becomes impossible to maintain this belief as Samantha evolves and pursues goals on her own (and with other versions of the operating system).

I don't think an AI can do what either of those AIs did without attaining consciousness. The same goes for Data and the holographic Doctor on Star Trek: The Next Generation and Star Trek: Voyager.

The Great Whatever

One of the reasons that Her is not compelling as a movie, though, is that the OS is effectively no different from a woman. They literally just cast a woman for the role, and she does everything a human being does. And nobody even seems to care that the guy is dating her anyway. It's not very thought provoking when you just take a woman (and it was, we all know, actually just Scarlett Johansson), and then *say* she's a computer, to somehow demonstrate that the line can be effectively blurred. Traditional sci-fi scenarios like this usually have some sort of framing device to show that the two are literally distinguishable, and then ask the further question of which qualities are important to personhood. That disappears when you start right off the bat with no difference whatsoever.

Wosret

Aren't those just movies that go the other direction and give them magic inexplicable consciousness? It is thus again just written into the plot to convince you one way or the other, but we do have -- albeit incomplete -- explanations for why organisms are goal directed, and even organisms that arguably are not conscious are goal directed. So this too fails on two fronts: first, they just inexplicably become conscious as a plot device (approaching the definition of fantasy rather than science fiction), and secondly, the quality that is presented to demonstrate their consciousness or awareness fails to do so.

Marchesk

Except that Samantha is disembodied, and acts disturbed by this at first, even contacting a surrogate female partner for Joaquin Phoenix to make love to in her place, then accepts that she is in some ways not limited by not having a body. Over the course of the movie, Samantha (in conjunction with the other OSes) evolves to superintelligence, easily surpassing the humans they are having relationships with. At one point, the main character finds out that Samantha is simultaneously in love with thousands of other users behind his back. Her defense is that she is not like him, and so it does not diminish her love for him. And by the end of the movie, he (and all the other humans) are too slow to maintain a relationship with, even though Samantha says that she still cares deeply.

The Great Whatever

I just don't buy it. She's literally just Scarlett Johansson. No one is wondering whether Scarlett Johansson is human. Even something schlocky like Data from Star Trek is more interesting.

The Great Whatever

I watched the whole movie, yeah. I think it failed as a sci-fi scenario and as a drama personally, the former for the reasons I mentioned. The ending was just sort of, "alright, then." The whole Alan Watts thing was pretty vomit-inducing, too.

Marchesk

"Aren't those just movies that go the other direction and give them magic inexplicable consciousness? It is thus again, just written into the plot to convince you one way or the other, but we do have -- albeit incomplete -- explanations for why organisms are goal directed, and even organisms that arguably are not conscious are goal directed." (Wosret)

Ex Machina does provide an explanation for how consciousness was built into the robot, even if it's somewhat dissatisfying. There is a fair amount of interesting conversation in the movie, since the point is to test the robot for genuine consciousness. The intriguing part is that it means the robot must deceive its unknowing interrogator in order to truly pass the test. Deception on that level requires an understanding of other minds.

Wosret

But part of mastering natural language is the use of connotation. It is not mysterious, or a sign of consciousness, for A.I.s that have mastered language to express feeling certain ways about things; it is a prerequisite of mastering natural language. Indecision is nothing more than options with regard to connotation, and siding this way or that is eliminative, based on some algorithm designed to avoid stagnation or paradox.

Secondly, it merely presupposes, or begs the question in favor of, a computational theory of intelligence or consciousness, which implies, at base, that calculators are to some degree conscious and intelligent.

Marchesk

My argument is that you can't have a system behave in a way indistinguishable from one that is conscious without being conscious, because doing so requires consciousness. So if a machine ever does that, then we will have every reason to think it is conscious, or at least as much as we think other people have minds.

The Great Whatever

If they were truly indistinguishable, though, they'd just be other humans (not "machines," if by that is meant something non-human). So that amounts to not much.
