-

m-theory

1.1k

You may be right...this machine is not a being in the sense that a human is a being..at least not yet...I cannot say if it might be possible for it to learn to do so as you do though...and I am fascinated because I believe deepmind is the closest a machine has come in approaching that possibility.

I still argue that this machine does have a state of being and a concept of self that it experiences alongside its experience of its environment...it models itself and learns about itself from its environment and learns about its environment from its model of self.

Deepmind is a major step forward towards A.I. that can adapt to a wider range of problems than any other attempt thus far...the question of whether it can adapt to as wide a range as humans can is still very much an open one. -

Jamal

11.7k

See, I don't think that any element, nor the totality, of those systems, has the reflexive first-person knowledge of being, or experience of being, that humans have, or are; it is not an 'I'. So, sure, you could feasibly create an incredibly clever system, that could answer questions and engage in dialogue, but it would still not be a being. — Wayfarer

I guess the question is, could there possibly be artificial "I"s? Computational A.I. might not get us more than clever devices, but why in principle could we not create artificial minds, maybe some other way?

(I'm just being provocative; I don't have any clear position on it myself.) -

Wayfarer

26.1k

this machine is not a being in the sense that a human is a being....I still argue that this machine does have a state of being and a concept of self that it experiences.... — Theory

When you say 'in the sense that a human is a being' - what other sense is there? Pick up a dictionary or an encyclopedia, and look up 'being' as a noun - how many instances are there? How many things are called 'beings'? As far as I know, the only things commonly referred to by that term, are humans.

None of that is to say that Deepmind is not amazing technology with many applications etc etc. But it is to call into question the sense in which it is actually a mind.

I guess the question is, could there possibly be artificial "I"s? — Jamalrob

Would help to know if there were a real 'I' before trying to replicate it artificially. -

apokrisis

7.8k

Pattee would be worth reading. The difference is between information that can develop habits of material regulation - as in biology - and information that is by definition cut free of such an interaction with the physical world.

Software can be implemented on any old hardware which operates with the inflexible dynamics of a Turing machine. Biology is information that relies on the opposite - the inherent dissipative dynamics of the actual physical world. Computers calculate. It is all syntax and no semantics. But biology regulates physiochemical processes. It is all about controlling the world rather than retreating from the world.

You could think of it as a computer being a bunch of circuits that has a changing pattern of physical states that is as meaningless from the world's point of view as randomly flashing lights. Whereas a biological system is a collection of work organising devices - switches and gates and channels and other machinery designed to regulate a flow of material action.

As we go from cellular machinery to neural machinery, the physicality of this becomes less obvious. Neurons with their axons and synapses start to look like computational devices. But the point is that they don't break that connection with a physicality of switches and motors. The biological difference remains intrinsic. -

m-theory

1.1k

When you say 'in the sense that a human is a being' - what other sense is there? Pick up a dictionary or an encyclopedia, and look up 'being' as a noun - how many instances are there? How many things are called 'beings'? As far as I know, the only things commonly referred to by that term, are humans.

None of that is to say that Deepmind is not amazing technology with many applications etc etc. But it is to call into question the sense in which it is actually a mind. — Wayfarer

Well deepmind is not modeled after a full human brain...it is modeled from a part of the mammalian brain.

I think the goal of one to one human to computer modeling is very far off from now and I am not so sure it is necessary in order to refer to a computer as having a mind.

That is not the criterion that interests me when asking if a computer has a mind.

To me the question is if a computer can demonstrate the ability to solve any problem a human can...be that walking or playing the game of go.

If deepmind can adapt to as wide a range of problem sets as any human can then I think we are forced to consider it a mind in the general sense of that term.

Often I see the objection that a mind is something that is personal and has an autonomous agenda...that is not how I am using the term mind.

I am using the term mind to indicate a general intelligence capable of adapting to any problem a human can adapt to.

Not an individual person...but a general way of thinking.

Of course computers will always be different than humans because humans and computers do not share the same needs.

A computer does not have to solve the problem of hunger a human does for example.

So in some ways comparing a human to computer there is always going to be a fundamental difference...apokrisis touched on this.

I don't agree that, because a computer's needs and a human's needs are necessarily different, a computer therefore cannot be said to have a mind.

That to me is a semantic distinction that misses the philosophical point of A.I.

So my question is more accurately what that would mean about human problem solving if a computer can solve all the same problems as efficiently or even better than humans?

Deepmind is at the very least a peek at what that would look like. -

m-theory

1.1k

I did skim over that link you provided...thanks for that reference...I will review it more in depth later. -

Jamal

11.7k

Eek. My first instinct is to say that there is no barrier in principle to the creation of artificial persons, or agentive rational beings, or what have you (such vagueness precludes me from coming up with a solution, I feel). Do you think there is such a barrier? Putting that another way: do you think it's possible in principle for something artificial to possess whatever you think is special about human beings, whatever it is that you think distinguishes a person from an animal (or machine)? Putting this yet another way: do you think it's possible in principle for something artificial to authentically take part in human community in the way that humans themselves do, without mere mimicry or clever deception? -

tom

1.5k

My first instinct is to say that there is no barrier in principle to the creation of artificial persons, or agentive rational beings — jamalrob

You don't need instinct, you just need to point to the physical law that forbids the creation of artificial people - there isn't one!

However, the notion that after installing TensorFlow on your laptop, you have a person in there, is only slightly less hilarious than the idea that if you run it on multiple parallel processors, you have a society. -

Wayfarer

26.1k

My first instinct is to say that there is no barrier in principle to the creation of artificial persons, or agentive rational beings, or what have you (such vagueness precludes me from coming up with a solution, I feel). Do you think there is such a barrier? — Jamalrob

I already said that! That is what you were responding to already.

You said 'could we create an artificial "I"', and I said, 'you would have to know what the "I" is'. That is a serious challenge, because in trying to do that, I say you're having to understand what it means to be a being that is able to say "I am".

The word 'ontology' is derived from the present participle of the Greek verb for 'to be'. So the word itself is derived from 'I am'. So the study of ontology is, strictly speaking, the study of the meaning of 'I am'. Of course it has other meanings as well - in the context of computer networks 'ontology' refers to the classifications of entities, inheritances, properties, hierarchies, and so on. But that doesn't detract from the point that ontology is still concerned with 'the meaning of being', not 'the analysis of phenomena'.

It sounds strained to talk of the meaning of 'I am', because (obviously), what "I am" is never present to awareness, it is what it is that things are present to. It is 'first person', it is that to which everything is disclosed, for that reason not amongst the objects of consciousness. And that again is an ontological distinction.

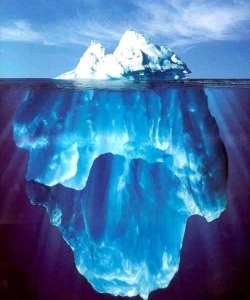

And as regards 'creating a mind', think about the role of the unconscious in the operations of mind. The unconscious contains all manner of influences, traits, abilities, and so on - racial, linguistic and cultural heritage, autonomic features, the archetypes, heaven knows what else:

So if you were to create an actual artificial intelligence, how would you create the unconscious? How would you write a specification for it? 'The conscious mind' would be a big enough challenge, I suspect 'the unconscious' would be orders of magnitude larger, and impossible to specify, for obvious reasons, if you think about it.

So, of course, we couldn't do that - we would have to endow a network with characteristics, and let it evolve over time. Build up karma, so to speak, or gain an identity and in so doing, the equivalent of a culture, an unconscious, archetypes, and the rest. But how would you know what it was you were creating? And would it be 'a being', or would it still be billions of switches? -

m-theory

1.1k

Again I want to address this notion that the term mind should mean exclusively an individual person.

I am seeking to explore the philosophical implications of what it means if general purpose A.I. can learn to solve any problem a human might solve.

That is an open question concerning the methods employed by the example I have...will the new techniques demonstrated by deepmind be able to adapt to as wide a range of problems as a person could?

If you believe you know that these techniques are not general purpose and cannot adapt to any problem a human could adapt to you should focus your criticism by addressing why you believe that is so.

I did not come here to argue that deepmind is, at this point, a fully self aware computer system.

So you can stop beating that dead strawman with your alphago and tensorflow banter.

I came here to ask if the techniques that are employed in my example will be able to eventually converge on that outcome....

if so what are the philosophical implications...

if not...why? -

tom

1.5k

So if you were to create an actual artificial intelligence, how would you create the unconscious? How would you write a specification for it? 'The conscious mind' would be a big enough challenge, I suspect 'the unconscious' would be orders of magnitude larger, and impossible to specify, for obvious reasons, if you think about it. — Wayfarer

The unconscious is easy. We have most of what is necessary in place already - databases, super fast computers, and of course programs like AlphaGo which can be trained to become expert at anything, much like our cerebellum.

Humans have augmented their minds with unconscious tools, e.g. pencils and paper, and as a matter of fact, our conscious mind has no idea how the mechanism of e.g. memory retrieval works.

I think I gave a list earlier of some of the key attributes of a mind: consciousness, creativity, qualia, self-awareness. Consciousness, if you take that to mean what we lose when we are under anaesthetic, may be trivial. As for the rest, how can we possibly program them when we don't understand them? -

m-theory

1.1k

And as regards 'creating a mind', think about the role of the unconscious in the operations of mind. The unconscious contains all manner of influences, traits, abilities, and so on - racial, linguistic and cultural heritage, autonomic features, the archetypes, heaven knows what else: — Wayfarer

I think the unconscious mind is more about the brain than the psyche.

You personally do not know how your own brain does what it does...but that does not particularly limit your mind from solving problems.

So if you were to create an actual artificial intelligence, how would you create the unconscious? How would you write a specification for it? 'The conscious mind' would be a big enough challenge, I suspect 'the unconscious' would be orders of magnitude larger, and impossible to specify, for obvious reasons, if you think about it. — Wayfarer

The particulars of the computer hardware and algorithms being used would simply not be known to the agent in question...that would be its subconscious.

So, of course, we couldn't do that - we would have to endow a network with characteristics, and let it evolve over time. Build up karma, so to speak, or gain an identity and in so doing, the equivalent of a culture, an unconscious, archetypes, and the rest. But how would you know what it was you were creating? And would it be 'a being', or would it still be billions of switches? — Wayfarer

That is, some argue, the most disturbing part....A.I. is approaching a level where it can learn exponentially, which would mean it might be able to learn such things much more quickly than we would expect from a human. -

Metaphysician Undercover

14.8k

I believe that IBM is working on AI projects which will make Deep Mind look rather insignificant. In fact, some argue that Watson already makes Deep Mind look insignificant.

It sounds strained to talk of the meaning of 'I am', because (obviously), what "I am" is never present to awareness, it is what it is that things are present to. It is 'first person', it is that to which everything is disclosed, for that reason not amongst the objects of consciousness. And that again is an ontological distinction. — Wayfarer

This, I believe, is a very important point. And, it underscores the problems which the discipline of physics will inevitably face in creating any kind of artificial being. That discipline does not have a coherent approach to what it means to be present in time. -

Wayfarer

26.1k

A.I. is approaching a level where it can learn exponentially... — MTheory

'Computers [can] outstrip any philosopher or mathematician in marching mechanically through a programmed set of logical maneuvers, but this was only because philosophers and mathematicians — and the smallest child — were too smart for their intelligence to be invested in such maneuvers. The same goes for a dog. “It is much easier,” observed AI pioneer Terry Winograd, “to write a program to carry out abstruse formal operations than to capture the common sense of a dog.”

A dog knows, through its own sort of common sense, that it cannot leap over a house in order to reach its master. It presumably knows this as the directly given meaning of houses and leaps — a meaning it experiences all the way down into its muscles and bones. As for you and me, we know, perhaps without ever having thought about it, that a person cannot be in two places at once. We know (to extract a few examples from the literature of cognitive science) that there is no football stadium on the train to Seattle, that giraffes do not wear hats and underwear, and that a book can aid us in propping up a slide projector but a sirloin steak probably isn’t appropriate.

We could, of course, record any of these facts in a computer. The impossibility arises when we consider how to record and make accessible the entire, unsurveyable, and ill-defined body of common sense. We know all these things, not because our “random access memory” contains separate, atomic propositions bearing witness to every commonsensical fact (their number would be infinite), and not because we have ever stopped to deduce the truth from a few more general propositions (an adequate collection of such propositions isn’t possible even in principle). Our knowledge does not present itself in discrete, logically well-behaved chunks, nor is it contained within a neat deductive system.

It is no surprise, then, that the contextual coherence of things — how things hold together in fluid, immediately accessible, interpenetrating patterns of significance rather than in precisely framed logical relationships — remains to this day the defining problem for AI.

It is the problem of meaning.

Logic, Poetry and DNA, Steve Talbott. -

m-theory

1.1k

Watson is distinctly different from deepmind; they use different techniques...I believe deepmind is more flexible in that it can learn to do different tasks from scratch, whereas watson is programmed to perform a specific task.

I believe that IBM is working on AI projects which will make Deep Mind look rather insignificant. In fact, some argue that Watson already makes Deep Mind look insignificant. — Metaphysician Undercover -

tom

1.5k

I am seeking to explore the philosophical implications of what it means if general purpose A.I. can learn to solve any problem a human might solve. — m-theory

I apologise if I didn't make my position abundantly clear: an Artificial General Intelligence would *be* a person. It could certainly be endowed with capabilities far beyond humans, but whether one of those is problem solving or "growth of knowledge" can't be understood until we humans solve that puzzle ourselves.

Take for the sake of argument that knowledge grows via the Popperian paradigm (if you'll pardon the phrase). i.e. Popper's epistemology is correct. There are two parts to this: the Logic of Scientific Discovery, and the mysterious "conjecture". I'm not convinced that the Logic can be performed by a non self-aware entity, if it could, then why has no one programmed it?

AlphaGo does something very interesting - it conjectures. However, the conjectures it makes are nothing more than random trials. There is no explanatory reason for them, in fact, there is no explanatory structure beyond the human-encoded fitness function. That is why it took 150,000 games to train it up to amateur standard and 3,000,000 other games to get it to beat the 2nd best human. -

m-theory

1.1k

It is no surprise, then, that the contextual coherence of things — how things hold together in fluid, immediately accessible, interpenetrating patterns of significance rather than in precisely framed logical relationships — remains to this day the defining problem for AI.

It is the problem of meaning. — Wayfarer

Meaning for who?

Are you suggesting that a computer could not form meanings?

How would meaning be possible without logical relationships?

Having watched these lectures I believe that one could argue quite reasonably that deepmind is equipped with common sense.

I would also argue that common sense is not something that we are born with...people and dogs have to learn that they cannot jump over a house.

The reason we do not form nonsense solutions to problems has to do with learned experience, so I don't know why it should be a problem for a learning computer to accomplish. -

m-theory

1.1k

I apologise if I didn't make my position abundantly clear: an Artificial General Intelligence would *be* a person. It could certainly be endowed with capabilities far beyond humans, but whether one of those is problem solving or "growth of knowledge" can't be understood until we humans solve that puzzle ourselves. — tom

My concern is that people are too quick to black box "the problem" and that is not productive for discussing the issue.

I don't think the mind or how the mind is formed is a black box...I think it can be understood and I see no reason why we should not assume that it is an algorithm.

If you don't mind could you elaborate on this.

Take for the sake of argument that knowledge grows via the Popperian paradigm (if you'll pardon the phrase). i.e. Popper's epistemology is correct. There are two parts to this: the Logic of Scientific Discovery, and the mysterious "conjecture". I'm not convinced that the Logic can be performed by a non self-aware entity, if it could, then why has no one programmed it? — tom

Do you believe an agent would have to be fully self aware at a human level to perform logically for instance?

I see it a bit differently.

AlphaGo does something very interesting - it conjectures. However, the conjectures it makes are nothing more than random trials. — tom

AlphaGo does not play randomly; it uses randomness to learn how to play efficiently. -

Wayfarer

26.1k

What does 'form meaning' mean?

The nature of meaning is far from obvious. There is a subsection of books on the subject 'the metaphysics of meaning' and they're not easy reads. And no, I don't believe computers understand anything, they process information according to algorithms and provide outputs. -

m-theory

1.1k

What does 'form meaning' mean? — Wayfarer

Well in the formal sense meaning is just knowing a problem and knowing the solution to that problem.

And formally knowing is just a set of data.

I would argue that this algorithm does not simply compute syntax but is able to understand semantic relationships of that syntax.

It is able to derive semantics by learning what the problem is, and learning what is the solution to that problem.

The process of learning may be syntactical, but when the algorithm learns the problem and that problem's solution it understands both.

I suspect you will not be satisfied with this definition of meaning...if so feel free to describe how you think the term meaning should be defined.

The nature of meaning is far from obvious. — Wayfarer

I disagree.

I think formally meaning is knowing a problem and the solution to that problem, and the logical relationship between the two is the semantics.

I don't believe computers understand anything, they process information according to algorithms and provide outputs. — Wayfarer

Suppose your previous posts are right...and we don't have any good idea of what meaning and understanding is. If that were so, you could not be sure whether computers are capable in that capacity.

That is to say if we don't know what meaning and understanding is then we can't know if computers have these things.

You seem to want it both ways here.

You know computers can't understand or know meaning...but at the same time meaning and understanding is mysterious.

That is a bit of a contradiction don't you think? -

apokrisis

7.8k

Beinghood is about having an informational idea of self in a way that allows one to materially perpetuate that self.

So we say all life has autonomy in that semiotic fashion. Even immune systems and bacteria are biological information that can regulate a material state of being by being able to divide the material world into what is self and nonself.

This basic division of course becomes a highly elaborated and "felt" one with complex brains, and in humans, with a further socially constructed self-conscious model of being a self. But for biology, there is this material state of caring that is at the root of life and mind from the evolutionary get go.

And so when talking about AI, we have to apply that same principle. And for programmable machines, we can see that there is a designed in divorce between the states of information and the material processes sustaining those states. Computers simply lack the means for an involved sense of self.

Now we can imagine starting to create that connection by building computers that somehow are in control of their own material destinies. We could give our laptops the choice over their power consumption and their size - let them grow and spawn in some self-choosing way. We could let them pick what they actually wanted to be doing, and who with.

We can imagine this in a sci fi way. But it would hardly be an easy design exercise. And the results would seem autistically clunky. And as I have pointed out we would have to build in this selfhood relation from the top down. Whereas in life it exists from the bottom up, starting with molecular machines at the quasi classical nanoscale of the biophysics of cells. So computers are always going against nature in trying to recreate nature in this sense.

It is not an impossible engineering task to introduce some biological realism into an algorithmic architecture in the fashion of a neural network. But computers must always come at it from the wrong end, and so it is impossible in the sense of being utterly impractical to talk about a very realistic replication of biological structure. -

Wayfarer

26.1k

Well in the formal sense meaning is just a knowing a problem and knowing the solution to that problem.

And formally knowing is just a set of data. — Theory

You toss these phrases off, as if it is all settled, as if understanding the issues is really simple. But it really is not, all you're communicating is the fact that you're skating over the surface. 'The nature of knowledge' is the subject of the discipline of epistemology, and it's a very difficult subject. Whether computers are conscious or not, is also a really difficult and unresolved question.

You know computers can't understand or know meaning...but at the same time meaning and understanding is mysterious.

That is a bit of a contradiction don't you think? — Theory

No, that's not a contradiction at all. As far as I am concerned it is a statement of fact.

Over and out on this thread, thanks. -

m-theory

1.1k

And for programmable machines, we can see that there is a designed in divorce between the states of information and the material processes sustaining those states. — apokrisis

The same is true of the brain and the mind I believe.

It has taken the course of hundreds of thousands of years for us to study the mechanisms of the brain and how the brain relates to the mind.

We don't know personally how our brain and/or subconscious work.

And as I have pointed out we would have to build in this selfhood relation from the top down. Whereas in life it exists from the bottom up, starting with molecular machines at the quasi classical nanoscale of the biophysics of cells. So computers are always going against nature in trying to recreate nature in this sense. — apokrisis

I am not sure I agree that we have to completely reverse engineer the body and brain down to the nano scale to achieve a computer that has a mind....to achieve a computer that can simulate what it means to be human sure....I must concede that.

I would instead draw back to my question of whether or not we could reverse engineer a brain sufficiently that the computer could solve any problem a human could solve.

Granted this machine would not have the inner complexity a human has but I still believe we would be forced to conclude, in a general sense, that such a machine had a mind.

So in top down bottom up terms I think when we meet in the middle we will have arrived at a mind in the sense that computers will be able to learn anything a human can and solve any problem a human can.

Of course the problem of top down only gets harder as you scale so the task of creating a simulated human is a monumental one requiring unimaginable breakthroughs in many disciplines.

If we are defining the term mind in such a way that this the necessary criterion prior then we still have a very long wait on our hands I must concede. -

m-theory

1.1k

No, that's not a contradiction at all. As far as I am concerned it is a statement of fact.

Over and out on this thread, thanks. — Wayfarer

You toss these phrases off, as if it is all settled, as if understanding the issues is really simple. But it really is not, all you're communicating is the fact that you're skating over the surface. 'The nature of knowledge' is the subject of the discipline of epistemology, and it's very difficult subject. — Wayfarer

I was not trying to be dismissive, and I did not intend for it to seem as though I do not appreciate that epistemology is a vast subject with a great many complexities.

But in fairness we have to start somewhere and I think starting with a formal definition of meaning and/or understanding as learning what is a problem and learning what is that problem's solution is a reasonable place to begin.

Whether computers are conscious or not, is also a really difficult and unresolved question. — Wayfarer

Well, I did argue on the first page that the mind/consciousness must either be decidable (it is possible to correctly answer the question "do I have a mind/consciousness" with a yes or no each time you ask it) or be undecidable.

If consciousness and/or the mind is undecidable, these terms are practically useless to us philosophically, and I certainly don't agree we should define the terms thus.

(Sorry, I can't link to my OP, but it is on the first page.)

The implication here is that the mind/consciousness is an algorithm, so that just leaves the question of which algorithm.

But you still make a valid point...the question of which algorithm is the mind/consciousness is hardly a settled matter.

Sorry if I gave the impression that I believed it was settled...I intended to give the impression that I see no reason why it should not become settled in light of the fact that we are modeling after our own minds and brains.

I guess I will have to take your word for it.

No, that's not a contradiction at all. As far as I am concerned it is a statement of fact. — Wayfarer

Over and out on this thread, thanks. — Wayfarer

Yes, thank you too for posting here...you made me think about my own beliefs critically...hopefully that is some consolation for the frustration I was responsible for.

Again, sorry about the misunderstanding; it was not my intention to be curt. -

apokrisis

7.8k

So every time I point to a fundamental difference, your reply is simply that differences can be minimised. And when I point out that minimising those differences might be physically impractical, you wave that constraint away as well. It doesn't seem as though you want to take a principled approach to your OP.

Anyway, another way of phrasing the same challenge to your presumption that there is no great problem here: can you imagine an algorithm that could operate usefully on unstable hardware? How could an algorithm function in the way you require if its next state of output was always irreducibly uncertain? In what sense would such a process still be algorithmic in your book if every time it computed some value, there would be no particular reason for the calculation to come out the same?
