dukkha

206

If your conscious experience is caused by your brain, this means that the body (including your head, which you believe contains a physical brain which is causing your experience) and the world around you that you perceive - perceptions being conscious experience - must therefore be caused by your brain.

So you're left in the horrible epistemic position of the brain state that gives rise to/is equal to your conscious experience not being within your head that you feel, see, touch, etc. Rather, all those sense experiences, and the perceived world around you, and the people you interact with, must all already be caused by/equal to the particular state of a brain.

So basically you're a homunculus. An onboard body/world model within the brain of a physical human.

This is a horrible epistemic position to be in, because from the position of the onboard self/world model, you do not have any access to anything BUT the model. So if you have no access to the supposed brain which is carrying the conscious experience which you exist as within itself (or, as itself/as its state), then I don't see how you can justify even positing its existence. You can't know anything about it from your position, all you have access to is your phenomenal world.

Basically, if a brain is giving rise to your conscious experience, it can't be located within your head. Your perceived head would already be being caused by a brain, and so the brain causing this perception can't be located within this perception. You can't locate the brain that is causing your conscious experience, within your conscious experience of a head. It has to be the other way round, with perceptions being located within a brain, perceptions of your head included. But if this is so, then from your epistemic position you can have no knowledge of this brain which is causing your experience, including whether it even exists or not. -

Barry Etheridge

349

You want to try again? Your first sentence amounts to ...

If conscious experience is caused by your brain, conscious experience is caused by your brain.

And I would have thought it obvious that we have no knowledge of our own brains. It could not be otherwise. I cannot for the life of me see why that should be a cause of angst! -

dukkha

206

And I would have thought it obvious that we have no knowledge of our own brains. It could not be otherwise. — Barry Etheridge

My point is not that we can't perceive the brain in our heads. -

Terrapin Station

13.8k

I agree that brains do not cause conscious experience. Rather, brains, in particular states, ARE conscious experience. It's not a causal relationship. It's a relationship of identity. -

Terrapin Station

13.8k

But it's hard to see how that could actually be, when one is a subjective lived experience and the other is an object in the world. How could they be the very same thing? — dukkha

If identity is the case, they're both subjective experience (I don't know what "lived" adds to that) and an object in the world, of course. What's causing the dichotomy is considering the thing in question from two different reference points, and again, it's important to remember that EVERYTHING is from some reference point. There's a reference point of being a brain, and a reference point of observing a brain that's not one's own. Those are different reference points.

Also, just as an fyi aside, if you want to make sure that I see a post and respond (well, or if you want to have a better chance of that), hit "reply" on my post. I often just check the number next to "YOU" in the menu here. -

swstephe

109

If your conscious experience is caused by your brain, this means that the body (including your head, which you believe contains a physical brain which is causing your experience) and the world around you that you perceive - perceptions being conscious experience - must therefore be caused by your brain. — dukkha

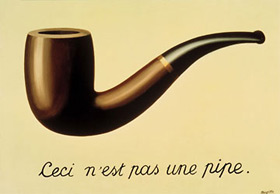

What if you substituted body/brain with "camera" and perception/experience with "image"? Does it make sense to say that cameras don't take pictures because they could potentially take a picture of themselves, (in a mirror)?

So you're left in the horrible epistemic position of the brain state that gives rise to/is equal to your conscious experience not being within your head that you feel, see, touch, etc. Rather, all those sense experiences, and the perceived world around you, and the people you interact with, must all already be caused by/equal to the particular state of a brain. — dukkha

The treachery of images is that the image is not the thing. A picture of a pipe is not a pipe, but it is possible that some real pipe was involved in the process of making the image. It is only horrible if you assume that images and the things they represent are identical and have some vested interest in everything being real. So it is a kind of paradox -- trying to deal with the contradiction that everything is an image, but everything must be real.

Basically, if a brain is giving rise to your conscious experience, it can't be located within your head. Your perceived head would already be being caused by a brain, and so the brain causing this perception can't be located within this perception. You can't locate the brain that is causing your conscious experience, within your conscious experience of a head. It has to be the other way round, with perceptions being located within a brain, perceptions of your head included. But if this is so, then from your epistemic position you can have no knowledge of this brain which is causing your experience, including whether it even exists or not. — dukkha

Well, even if accepting that an image/perception isn't the same as the thing being shown/perceived resolves the first problem, you might still have a problem that you ultimately can't completely perceive your perceptions, because you end up with infinite sets-of-sets. I don't think that is a problem, just a hardware limitation. It is still possible for something else to be capable of perceiving everything you perceive without contradiction if perceptions are finite. -

tom

1.5k

I agree that brains do not cause conscious experience. Rather, brains, in particular states, ARE conscious experience. It's not a causal relationship. It's a relationship of identity. — Terrapin Station

Well, it can't be an identity. The information content of DNA is not the DNA. The program that won at Go is not the hardware, the symphony is not the notes on paper. -

Terrapin Station

13.8k

Well, it can't be an identity. The information content of DNA is not the DNA. The program that won at Go is not the hardware, the symphony is not the notes on paper. — tom

Just to keep this simple to start, the relation can't be one of identity because of other things that you would say are not identity relations?

(Part of what I'm trying to do in this comment by the way is to get you to clarify an argument, including clarifying just what relation(s) you're saying those other things have, and why you're saying that they're the same as the brain/mind relation.) -

tom

1.5k

I don't know what you mean. The information (though more precisely knowledge) encoded in DNA can be transferred through multiple media and preserved. There has to be something objectively independent of DNA for that to be possible.

That "thing" is the subject of information theory. -

Terrapin Station

13.8k

Re what I mean, there has to be some relation between DNA and the information content of DNA for example, right? -

tom

1.5k

If Einstein's theories were written in incomprehensible code, then the books and papers would be forgotten.

DNA contains information that, when instantiated in a suitable environment, causes itself to be replicated.

The weird thing about this is that information theory is fundamentally counterfactual. If you do not accept that the code could have been otherwise, then information does not exist.

So, what do you think, determinism or information? -

Terrapin Station

13.8k -

Wayfarer

26.1k

There is a relationship between a code and its meaning, but the relationship is not one of identity for the reasons @Tom has given.

Why this is so is a very complicated question involving philosophy, semiotics, and cognition. -

Metaphysician Undercover

14.8k

Why this is so is a very complicated question involving philosophy, semiotics, and cognition. — Wayfarer

"Why this is so" is not at all complicated. It's a rather simple thing called intention. It so happens though, that most people deny the existence of intention. And, by trying to explain matters of intention without referring to intention, they produce a very complicated issue. -

Terrapin Station

13.8k

There is a relationship between a code and its meaning, but the relationship is not one of identity for the reasons Tom has given. — Wayfarer

So assuming that we were to agree on that for a moment, and ignoring that we're not actually saying what the relation is, but instead we're just saying what it isn't, the idea is supposed to be that DNA/DNA informational content, computer hardware/the program that won at Go, notes on paper/a symphony all have the same code/meaning relation, as does brain/mind? -

Marchesk

4.6k

the idea is supposed to be that DNA/DNA informational content, computer hardware/the program that won at Go, notes on paper/a symphony all have the same code/meaning relation, as does brain/mind? — Terrapin Station

To the extent that brains/minds give them the same meaning, which some are happy to do, like Dennett and Kurzweil, and others, less so, like Lanier. -

Marchesk

4.6k

It's not a causal relationship. It's a relationship of identity — Terrapin Station

So you agree with Searle that it's a biological property, and not something that can be functionally recreated in a computer. -

Wayfarer

26.1k

the idea is supposed to be that DNA/DNA informational content, computer hardware/the program, notes on paper/a symphony all have the same code/meaning relation, as does brain/mind? — TS

They are all examples of the idea that information and the way that information is encoded are separable.

A simpler example is that you can take any proposition and translate it into a variety of languages, or media, or codes. Take a proposition that is determinative - the instructions for constructing a device or formula or recipe. That means that, no matter how it is represented, the output or result is invariant - otherwise the device or meal or chemical substance won't turn out correctly. So the information is quite exact. But the means of representing it, in terms of which language, which media type, and so on, can vary enormously.

So this suggests that the information or meaning or propositional content is independent of the physical media type or type of symbol.
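As a rough illustration of the narrow, technical sense of this claim, here is a minimal Python sketch. The recipe values and the helper names (`to_json`, `to_base64`, `to_bits` and their decoders) are made up for the example. It only shows that one piece of structured content can be carried by three different symbol systems and recovered unchanged; it is not offered as settling whether brain and mind stand in the same relation.

```python
import base64
import json

# Hypothetical "determinative" content: a small recipe (values are invented).
recipe = {"flour_g": 500, "salt_g": 10, "water_ml": 325}


def to_json(data):
    """Encode the content as JSON text."""
    return json.dumps(data, sort_keys=True)


def to_base64(data):
    """Encode the same content as Base64 characters."""
    return base64.b64encode(to_json(data).encode("utf-8")).decode("ascii")


def to_bits(data):
    """Encode the same content as space-separated 8-bit binary strings."""
    return " ".join(format(byte, "08b") for byte in to_json(data).encode("utf-8"))


def from_json(text):
    return json.loads(text)


def from_base64(text):
    return json.loads(base64.b64decode(text).decode("utf-8"))


def from_bits(text):
    raw = bytes(int(chunk, 2) for chunk in text.split())
    return json.loads(raw.decode("utf-8"))


# Three visibly different representations of the recipe.
representations = {
    "json": (to_json(recipe), from_json),
    "base64": (to_base64(recipe), from_base64),
    "bits": (to_bits(recipe), from_bits),
}

for name, (encoded, decode) in representations.items():
    # Each representation decodes back to exactly the same content.
    print(name, decode(encoded) == recipe)
```

Note that each representation is only recoverable by a decoder that knows the convention used, which is arguably where the disagreement in this thread about meaning and intention comes back in.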

If your conscious experience is caused by your brain... — Dukkha

I think 'caused by' is problematic. The brain is obviously fundamental to conscious experience, but the lesson of embodied cognition is that brains are always associated with nervous systems, sensory organs, bodies, and environments.

But I think your post is grappling with a real philosophical problem, i.e. 'knowledge of the external world'. -

Wayfarer

26.1k

Interesting question! I suppose in computer science the rules are clear enough, computer language>assembly language>machine code (something like that, I haven't studied programming formally.) The structure is covered by the general description of syntax, isn't it? Syntax governs the rules, semantics is concerned with the meaning. So they're separable also.

I guess you could say that in the case of speech, first there's the intentional meaning - what needs to be communicated - and then you 'search for the words' to encode the meaning, then put them together according to the syntactical or grammatical rules. Similar with programming - required output, modelling, then coding. The lower-level code is what actually translates the commands into binary and executes it on the level of microprocessors.

How that happens on the physiological level is definitely beyond my pay-grade.

But I think my original point still stands. -

Wayfarer

26.1k

Consciousness is obviously a biological phenomenon.

It is spoken about from a biological perspective, but that doesn't mean it can be understood solely in biological terms. To say that it is just or only a biological phenomenon is the precise meaning of 'biological reductionism'. It simply provides a way of managing the debate from a point of view which is understandable by the physical sciences, in the absence of any other agreed normative framework. -

Marchesk

4.6k

Interesting question! I suppose in computer science the rules are clear enough, computer language>assembly language>machine code (something like that, I haven't studied programming formally.) The structure is covered by the general description of syntax, isn't it? Syntax governs the rules, semantics is concerned with the meaning. So they're separable also. — Wayfarer

Sure, but let me ask the question a different way. From the POV of physics, does any of that exist? Or, to describe the physics of a computer, is there any need to invoke software at all? -

Wayfarer

26.1k

From the POV of physics, does any of that exist?

No. But this is where the 'epistemic cut' comes in. There is a level of order found in biological systems and language, which is not reducible to physics. That area is @Apokrisis' speciality, although he still claims to be a physicalist (but I think that is because in modern philosophy, the concept of mind is irretrievably tied to Descartes' reified abstraction, i.e. there isn't a proper lexical framework within which to discuss 'nature of mind'.) -

Real Gone Cat

346

jkop came closest to the answer when the word "interact" was used.

The reason that DNA/DNA info, computer hardware/rules of GO, and notes on paper/symphony are not comparable to brain/mind is because all of those examples are static, whereas brain/mind is dynamic. This is not a small point. When a computer wins at GO, the game it plays is comparable to mind, not the information stored in the circuitry. And that exact game (which is dynamic) can never be reproduced simply by transferring the stored (static) information to another computer or device. Thus it is not the arrangement of neurons that is identical to a mind, but rather the synaptic activity that is identical to mind. -

Terrapin Station

13.8k

To the extent that brains/minds give them the same meaning, which some are happy to do, like Dennett and Kurzweil, and others, less so, like Lanier. — Marchesk

And me. I'm a physicalist/identity theorist, though I'm not an eliminative materialist.

So you agree with Searle that it's a biological property, and not something that can be functionally recreated in a computer.

We know it to be a biological property at this point, and there's no reason yet to believe that functionalism is true. I wouldn't say that functionalism couldn't be true, but there's just no reason to believe that it is yet. There's no reason to believe that substratum dependence isn't the case. Mentality might be properties unique to the specific materials, structures and processes that comprise brains. -

Terrapin Station

13.8k

They are all examples of the idea that information and the way that information is encoded are separable. — Wayfarer

Not that I agree with that view (which I can get into in a moment, although you should already know why I don't agree from comments I've made previously, and some explicitly in response to you), but I'm more curious re what the argument is supposed to be for brains and minds being the same relation in that regard? People are always criticizing comments for not being arguments. Well, where's the argument here?

The stuff you typed after what I quoted is by no means an argument for this, unless you classify very loose heuristic rhetoric as an argument. Your paragraph beginning with "A simple example" is actually just fleshing out the claim you're making--stating it more verbosely, the claim that I quoted from you above, and without any argument regarding how it fits any of the pairs given earlier (DNA/DNA informational content, etc.), including not being an argument for how it fits the brain/mind pair. -

Real Gone Cat

346

What of my argument (above)? The reason that none of the cited examples are analogues for mind/brain is that they are static, and mind/brain is dynamic.

It is also important to understand the level of complexity represented by our brains. Estimates for the number of synaptic connections in the human brain are roughly 100 trillion, which is hundreds of times the number of stars in the Milky Way! And those connections are highly plastic. Thus the human brain is a massive, mutable structure that incorporates feedback loops. No computer that currently exists (or that will exist in the foreseeable future) even comes close.

One structure that does come close to the complexity of the human brain is the internet. But here, the mutability is controlled by outside agents (i.e., users), so the internet will probably never attain consciousness either. -

Terrapin Station

13.8k

I don't agree with your argument because I don't agree that anything exists that isn't dynamic.

The problem, in my view, with what their argument is turning out to be is that they're positing real (read "extramental") abstracts, such as meaning (and apparently "information"), where they believe that the same x (a la logical identity) can be achieved by different physical phenomena. Or in other words, they're arguing for multiple realizability (and in a broader context than just brain/mind).

I don't buy that there are any real abstracts.* I'm a nominalist (more generally), too. And of course I thus don't buy multiple realizability. So their argument fails because the premise is flawed.

* Things like meaning exist, of course, but they're not real abstracts. Meaning consists of particulars--particular mental events in the brains of individuals.
