
Gnomon

4.3k

Dopamine works to create refreshment, calibration, etc. To appease the side effect of calibration as a reward is criminal-ish, no (petty)? — Varde

Neurotransmitters all work together. But I was referring specifically to the "pleasure & reward" system, which lets you know that what you did was good for you. Or, rather, for your genes. Sometimes, what's good for your amoral genes is not so good for your moral "self". I suspect that most criminals feel good about themselves, until they face the legal consequences. :smile:

Dopamine and serotonin regulate similar bodily functions but produce different effects. Dopamine regulates mood and muscle movement and plays a vital role in the brain's pleasure and reward systems. Serotonin helps regulate mood, sleep, and digestion.

https://www.verywellhealth.com/serotonin-vs-dopamine-5194081

Gnomon

4.3k

In my opinion, the true reason/motivation why your subconscious allows you to think in some situations and not in others could be key to understanding whether logic is of any value at all. — FalseIdentity

I doubt that the subconscious mind "allows" you to think rationally. Instead, the executive Conscious mind must occasionally overrule the default motivations of the Subconscious. If your worldview is somewhat Fatalistic, you may not believe that you have Freewill to choose a conscious logical method, instead of being driven by the animal-like, automatic, subconscious, instinctive reaction to every situation.

To clarify the long-debated question of FreeWill, I have developed a philosophical scenario of the human Mind, based on the model of a large business. It has many well-trained low-level subordinates, a few mid-level managers over departments, and one chief executive officer who rules them all. Typically, the business runs smoothly without direct orders from the top, as each subordinate level does its job almost automatically. But when the firm faces an unusual or difficult problem, the subordinate subconscious (instincts ; emotions) may report to the top, with a quick pre-set solution, or with a menu of options.

If the dire situation is too complex & critical, or portends bad consequences for the business (as a whole system), it's the job of the executive (conscious Reason) to leave the golf-course, and come into the office, to make the hard choices, as a singular official decision. Normally, the rational faculties lie dormant, until the quarreling instincts report that they are confused, and unable to reach a unified decision. That's why a past president once said, "the buck stops here", at the top. The human mind is not a discordant anarchy, or an oppressive dictatorship, but it does have a remote semi-retired chief executive officer : Reason.

Some of the subordinates may think the golf-playing CEO is a freeloader, who doesn't do any of the "real" work. But when a crisis looms, they all look to the Boss to set a direction for the company. David Hume may have spoken tongue-in-cheek, when he said, "reason is, and ought only to be, the slave of the passions". By "passions", Hume was referring to the emotionally-mature Character (virtue) of a person (a logical value system of what's important), not to irrational, crazy, anything-goes, spontaneous, emotional outbursts. :cool:

I summarize my personal hypothesis of FreeWill Within Determinism as follows : Freewill is the ability of self-conscious beings to choose preferred options from among those that destiny (or subconscious) presents. In the complex (non-linear) network of cause & effect, a node with self-awareness is a causal agent. With multiple Pre-determined inputs, and many Potential outputs, the Self can choose from a wide range of Possibilities, creating local novelty within a globally-deterministic system.

http://bothandblog5.enformationism.info/page14.html

Hume's Passions :

https://psyche.co/ideas/neuroscience-has-much-to-learn-from-humes-philosophy-of-emotions

Moral Character :

https://plato.stanford.edu/entries/moral-character/

Srap Tasmaner

5.2k

He's the place we're trying to avoid ending up when talking about active inference. — Isaac

I can't tell you how relieved I am to hear you say that. That guy is full of shit. He seems determined to be the new Sheldrake.

TheMadFool

13.8k

Ok, here's a little something to ponder upon. I'd love it if 180 Proof weighs in.

1. Epistemic responsibility is, well, a really good idea. Beliefs have moral consequences - they can either be fabulously great for our collective welfare or they could cause a lot of hurt.

2. Epistemic responsibility seems married to rationality for good; there's little doubt about that. Rationality is about obeying the rules of logic and, over and above that, having a good handle on how to make a case.

So far so good.

3. Now, just imagine (it sends chills down my spine) that rationality proves beyond the shadow of a doubt that immoralities of all kinds are justified, e.g. that slavery is justified, racism is justified, you get the idea. This isn't as crazy as it sounds - a lot of atrocities in the world have been, for the perps, completely logical.

Here we have a dilemma: Either be rational or be good. If you're rational, you end up as a bad person. If you're good, you're irrational.

As you can see, this messes up the clear and distinct notion of epistemic responsibility as simultaneously endorsing rationality AND goodness.

Thoughts... — TheMadFool

Isaac

10.3k

Yes, I think active inference is walking the same knife edge that quantum physics has to walk. On one side is a much better understanding of how the brain works, on the other is "woooah...I mean what is, like... really real man". I get the fascination. I think active inference accounts of perception are eye-opening, they're certainly more than just mundane bits of cognitive psychology and I believe they can give us some insights into other areas of psychology, but we mustn't fall off the rails in doing so. Hoffman has.

Wheatley

2.3k

I strongly recommend watching the video in the link to understand this better — FalseIdentity

He doesn't say anything definite. :yawn:

SophistiCat

2.4k

Well, yes and no. That's the difficulty which gives Hoffman the space in which he can introduce this theoretical 'veil' without abandoning all credibility. The problem is that the result of our prediction (the response of the hidden states) is just going to be another perception, the cause of which we have to infer. Now if we use, as priors for this second inference, the model which produced the first inference (the one whose surprise reduction is being tested), then there's going to be a suppressive action against possible inferences which conflict with the first model. String enough of these together, says Hoffman, and you can accumulate sufficient small biases in favour of model 1 that the constraints set by the actual properties of the hidden causal states pale into insignificance behind the constraints set by model 1's assumptions.

The counter arguments are either that the constraints set by the hidden causal states are too narrow to allow for any significant diversity (Seth), or that there's never a sufficiently long chain of inference models without too much regression to means (which can only be mean values of hidden states). I subscribe to a combination of both. — Isaac

When we develop models analytically, such as in science or in everyday reasoning, it is certainly possible - and seductive - to come up with a model that is resistant to falsification. But it seems to me that such a modelling system would be difficult to evolve in the first place, because the selective pressure would be weak to non-existent.

Banno

30.6k

FalseIdentity

62

I doubt that the subconscious mind "allows" you to think rationally. Instead, the executive Conscious mind must occasionally overrule the default motivations of the Subconscious.

To be able to occasionally overrule the motivation, it must always have first access to information, and to the decision about such information, or the occasional overruling would not work reliably. This is what I wanted to explain with my primary survival reflex example. This first access should give it absolute power over what happens with the information it lets through. If I had first access, and hence full control, over all the information you ever receive in your life, you might call yourself my manager, and I might find it convenient to let you think you are my manager to avoid unnecessary quarrels. But if I decide what your reality is by controlling all the information, I should have absolute control over you. Let me say that I am neither an opponent of free will, nor am I convinced that the mind really works the way materialists think it does. I just tried to think this philosophy through to its conclusion.

FalseIdentity

62

I think you have a very good point here. With all the dispute about Hoffman, we lost sight of my actual complaint, which is that logic is evil. If Hoffman were right (I'm starting to have some doubts now too, but I will need more time to think this through), this would not have made logic really evil; it would just have made it an intellectual disappointment. What could make it evil is rather its origin in predation.

SophistiCat had mentioned here that the ultimate test of the truth of our mental models is whether we can predict events correctly. At this point it makes sense to look more closely at how a brain actually learns to make predictions. Any neural network has to be "backtrained", over several rounds, on what was a wrong prediction and what was a correct one. When a prediction was false, some neural connections are cut in the hope that the error they produced will not recur. When a prediction was right, the connections that made it are reinforced, so that the network gets better with every training round. Now, I am afraid that the brain's ultimate measure of whether a prediction was right or wrong is whether it gained or lost energy through that prediction. But you can gain energy only by stealing (either directly or indirectly) life energy from other life forms. And since other life forms don't want their life energy to be stolen, the only way to train your brain is by constantly breaking the golden rule. The idea that we are morally allowed to take the lives of so-called "inferior" species is highly dubious and sounds like an excuse. I think there would be strong opposition if "intellectually superior" aliens came here and harvested us, justifying it with the same line of reasoning.
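Read literally, the reinforce-or-cut rule just described can be written as a toy update. This is only a minimal sketch under stated assumptions: connection strengths are numbers in [0, 1], the flag `correct` stands in for whatever signal actually counts as success (the post suggests energy gained or lost), and `train_step`, `lr` and `prune_below` are hypothetical names. Artificial networks trained by backpropagation do not work this way; they nudge every weight along the gradient of an error measure.

```python
def train_step(weights, active, correct, lr=0.25, prune_below=0.1):
    """One round of a toy reinforce-or-cut rule (hypothetical illustration).

    weights:     dict of connection name -> strength in [0, 1]
    active:      names of the connections that produced this prediction
    correct:     whether the prediction turned out right (assumed signal)
    lr:          how much one round strengthens or weakens a connection
    prune_below: connections weaker than this are cut from the network
    """
    updated = {}
    for name, w in weights.items():
        if name in active:
            # Strengthen connections behind a correct prediction,
            # weaken those behind a wrong one, keeping w within [0, 1].
            w = w + lr if correct else w - lr
            w = min(max(w, 0.0), 1.0)
        if w >= prune_below:
            updated[name] = w  # connection survives this round
        # otherwise the connection is simply dropped, i.e. "cut"
    return updated


# Three training rounds on a three-connection network.
net = {"a": 0.5, "b": 0.5, "c": 0.5}
net = train_step(net, active={"a", "b"}, correct=True)   # a and b reinforced
net = train_step(net, active={"b", "c"}, correct=False)  # b and c weakened
net = train_step(net, active={"c"}, correct=False)       # c weakened to zero and cut
print(sorted(net))
```

After three rounds, connection c has been weakened twice and cut, while a and b survive. Note that nothing in the rule itself says what a "correct" prediction is; that choice of success signal is exactly the open question raised in the post.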

Now, a neural network that is trained for this purpose and in this way should have strong limitations in the kinds of intellectual problems it can solve. For example, in computer science you can't use a neural network that was trained for language recognition to recognize images.

In the case of the human brain, which was in effect trained to break the golden rule as efficiently as possible, I am sure, for example, that it cannot know what evil is in the metaphysical sense. I therefore agree with the critique that, if I am really only that network, I cannot know what evil is either. But if I am unable to recognize what evil is, I could commit very evil deeds all the time without noticing, and that alone bothers me.

A second unexpected but straightforward conclusion is that if it's impossible for humans to understand what evil is, they should stay away from building counter-proofs of God based on that term (I mean the problem of evil).

Independent of whether or not Hoffman is right, it should be hard or impossible for this network to understand anything that is not either food or a tool for obtaining food. If you see everything just as food or as a tool to get food, this will preclude you from understanding its deeper nature. Understanding that deeper nature would just waste energy, and over time it might even degrade the strength of the network, at least in relation to the task of gathering energy efficiently.

This network will also have difficulty recognizing life forms that are too strong to be harvestable. If the universe is such a life form that cannot be preyed upon, or if God is one, this would explain why it would be impossible to discover them.

FalseIdentity

62

As David Hume asserted "reason is . . . a slave to the passions"

That is nice, I will try to remember it :) In fact, evolution could have selected some people to solve their problems more in intellectual ways and others to solve them more with physical violence, like the witch hunters did. That evolution can positively select people who willfully ignore scientific facts is already a bad sign for what evolution is doing when it trains our brains. Clearly, truth is at least not always evolution's main concern, when you look at those people. If you are interested in the subject: there is a measure called "social dominance orientation", and it has been shown 1. that this trait is genetic, and 2. that such people despise science and scientists:

https://en.wikipedia.org/wiki/Social_dominance_orientation https://journals.sagepub.com/doi/full/10.1177/1368430221992126

They are also low in agreeableness (meaning they are egoistic and hard-hearted). I am sure they would be prime material for a witch judge. Even if we do not agree on everything, I wish you all the best, and thanks for joining the discussion!

FalseIdentity

62

Serotonin is something extremely complicated. It is not only involved in bodily functions and in decision processes (its levels seem to rise at the same moment that dopamine goes down, and vice versa, at least in the parts of the brain where direct measurements could be made); it also acts as a status hormone (if you are a high-status individual, you have high amounts of it), and in higher doses it can decrease empathy.

FalseIdentity

62

Well, I am already changing my mind in relation to Hoffman, or let's say I have suspended my opinion about him until I better understand the counter-proof in the study that was linked here. But the arguments for why logic should not be evil are not convincing yet.

TheMadFool

13.8k

I think you have a very good point here. With all the dispute about Hoffman, we lost sight of my actual complaint, which is that logic is evil. If Hoffman were right (I'm starting to have some doubts now too, but I will need more time to think this through), this would not have made logic really evil; it would just have made it an intellectual disappointment. What could make it evil is rather its origin in predation. — FalseIdentity

Why the asymmetry - predators being more intelligent than prey animals - you think? The most intelligent species on this planet, at least (debatably), is an apex predator, viz. H. sapiens. BUT, and this is where it gets interesting, we're the only ones that have a sense of right and wrong.

So, yeah, logic (intelligence), say, evolved with predation, but look at what it's telling us: predation is bad. It's kinda like a reformed criminal; we should give the devil his due.

SophistiCat had mentioned here that the ultimate test of the truth of our mental models is whether we can predict events correctly. At this point it makes sense to look more closely at how a brain actually learns to make predictions. Any neural network has to be "backtrained", over several rounds, on what was a wrong prediction and what was a correct one. When a prediction was false, some neural connections are cut in the hope that the error they produced will not recur. When a prediction was right, the connections that made it are reinforced, so that the network gets better with every training round. Now, I am afraid that the brain's ultimate measure of whether a prediction was right or wrong is whether it gained or lost energy through that prediction. But you can gain energy only by stealing (either directly or indirectly) life energy from other life forms. And since other life forms don't want their life energy to be stolen, the only way to train your brain is by constantly breaking the golden rule. The idea that we are morally allowed to take the lives of so-called "inferior" species is highly dubious and sounds like an excuse. I think there would be strong opposition if "intellectually superior" aliens came here and harvested us, justifying it with the same line of reasoning. — FalseIdentity

Yes, but as I said, again it's logic (intelligence) that made the case for the golden rule. Going by how (some) women are turned off by men being mean, I'd say breaking the golden rule is not going to be advantageous to evolutionary success, but... in the animal world, the rules may be radically different (even herbivores engage in violence in the mating season).

Now, a neural network that is trained for this purpose and in this way should have strong limitations in the kinds of intellectual problems it can solve. For example, in computer science you can't use a neural network that was trained for language recognition to recognize images. — FalseIdentity

Agreed! Our brains may have become specialized over time, literally putting the solutions to some problems beyond our ken.

In the case of the human brain, which was in effect trained to break the golden rule as efficiently as possible, I am sure, for example, that it cannot know what evil is in the metaphysical sense. I therefore agree with the critique that, if I am really only that network, I cannot know what evil is either. But if I am unable to recognize what evil is, I could commit very evil deeds all the time without noticing, and that alone bothers me. A second unexpected but straightforward conclusion is that if it's impossible for humans to understand what evil is, they should stay away from building counter-proofs of God based on that term (I mean the problem of evil). — FalseIdentity

Possible. Our brains, if adapted only for food & mates, may have been desensitized/become accustomed to true evil, so much so that it might even seem good and if not that, at least normal.

Yet, as I mentioned before, logic (intelligence) seems to have made some progress. Ever wonder why religion seems so, well, obsessed with, as Sam Harris (atheist, neuroscientist, author) puts it, "...what we do naked..." and also with what we eat (halal, kosher, vegetarianism)?

Independent of whether or not Hoffman is right, it should be hard or impossible for this network to understand anything that is not either food or a tool for obtaining food. If you see everything just as food or as a tool to get food, this will preclude you from understanding its deeper nature. Understanding that deeper nature would just waste energy, and over time it might even degrade the strength of the network, at least in relation to the task of gathering energy efficiently. — FalseIdentity

Yep! Too bad. Read what I wrote vide supra.

FalseIdentity

62

Why the asymmetry - predators being more intelligent than prey animals - you think?

In reality, prey animals prey too: they take away the food of other animals. That might look peaceful as long as there is plenty of food, but if there is not, you can see herbivorous animals fighting for it in quite unfriendly ways.

This is not to say that there is no difference at all between herbivores and predators. Prey animals have to pay attention to all of their surroundings all the time (predators could come from any direction), while predators rather scan their surroundings meter by meter and then hyperfocus once they spot something. In humans, I guess there are people who pay more attention to their surroundings and are more prey-like (these are the creative people; there is some relation between how distractible you are by your surroundings and how creative you are), and there are people who are more like classic predators, such as psychopaths. In psychopaths it's strange that if a strategy was once successful but then is not anymore, they take longer than normal people to realize that and drop the strategy. This is a form of hyperfocusing. In a chase, hyperfocusing makes sense, because many animals live in groups to confuse predators. If you have the skill to always focus your attention on the same prey or strategy, then you will not be confused and you can tire your prey out. If you cannot focus, you will switch prey again and again, and the prey will tire you out instead.

If you think more like prey, you can be, for example, a good artist, and good at any task that requires holistic thinking and integrating large amounts of information (seeing the larger picture). If you think in an extremely focused and logical way... well, I strongly hope that not all people who can do that are psychopaths, but it's starting to scare me.

I might see as well why creative people tend to not get along well with the more "focused" part of humanity :)

Yes but as I said, again it's logic (intelligence) that made the case for the golden rule.

Is it really logic alone that makes the case for the golden rule? I think that the golden rule is true; either this is an error in my brain, or someone outside evolution is telling me that it is true :)

TheMadFool

13.8k

The quintessential prey - plants - seem to have given up running from their persecutors (herbivores). I suppose the moment they lost their ability to feel (pain & joy too), their instinct to flee predators also vanished with it and they got, quite literally, stuck to a given spot by their roots.

Lepers for spies! Lepers for spies!

Gnomon

4.3k

To be able to occasionally overrule the motivation, it must always have first access to information, and to the decision about such information, or the occasional overruling would not work reliably — FalseIdentity

In the business model example, there are different levels of "access to information". The workers on the front lines (physical senses) typically receive new information first. They then pass it up the hierarchy, where it is sorted based on the need to know. So the CEO at the top is usually unaware of the bulk of information flow. He/she only receives the most important or urgent data, after it is filtered up through the system. However, an alert CEO may also have his/her own "spies" to actively look for relevant unfiltered information, before it is affected by the mundane priorities of lower levels.

Presumably, an alert rational mind also has feelers out for direct access to what's happening inside and outside the system. That doesn't come naturally though. It is learned through experience and special training for the job of chief executive. Philosophy is one method for training the mind to be prepared for unexpected events and sudden crises. You learn to be on the lookout for the warning signs of danger, before it becomes obvious to the lower level senses. Some people call this a "sixth sense", but it's simply what Reason does. The Boy Scout motto was "be prepared".

For a different metaphor : the sailors run the ship, even while the Captain is asleep. But once the ship hits an iceberg, the Captain is aroused, and begins to issue direct orders to all levels of the hierarchy. Even though the Captain didn't have first access to the knowledge that the ship was in danger, and even based on limited knowledge of what's happening, the Captain's general orders overrule the specific instincts of the sailors attempting to repair the breach. For example, don't try to fix the devastating damage, just seal off the compartment, and retreat to a safer place. :smile:

dimosthenis9

846

But the arguments why logic should not be evil are not convincing yet. — FalseIdentity

Really?? So the simple fact that this a priori human ability to search for truth can never be evil isn't good enough for you? How could an a priori human feature ever be "evil"? Is our capacity for walking also evil, then?

I guess it must sound too logical for your taste.

Gnomon

4.3k

predatory logic — FalseIdentity

I had never heard of "predatory logic" before. But, after a brief review, I see it's not talking about capital "L" Logic at all. Instead, it refers to the innate evolutionary motives that allow animals at the top of the food chain to survive and thrive. PL is more of an inherited hierarchical motivation system than a mathematical logical pattern. Logic is merely a tool that can be used for good or bad purposes. To call the "logic" of an automobile "evil" is to miss the point that a car without a driver is also lacking a moral value system. It could be used as a bulldozer to ram a crowd of pedestrians, or as an ambulance to carry the wounded to a hospital. The evil motives are in the moral agent controller, not the amoral vehicle.

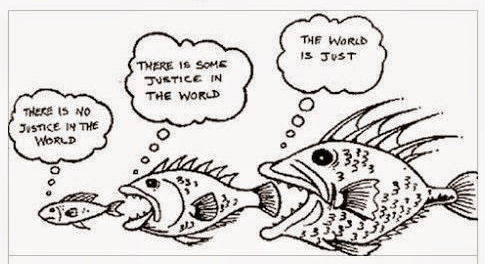

It may be true that predators possess an innate "logical" pattern of predation. But it's also true that their prey have a "logical" pattern of evasion. Those patterns are simply what actions have worked in the past to allow the animal to survive long enough to reproduce. For example, African ungulates have typically relied on their speed & evasive maneuvers to outrun their predators with sharp teeth & claws. Yet, on the whole, there is a balance of power between prey & predator. Only in unusual circumstances does that balance tip one way or the other. If the prey escape every time, the predators starve. But, if the predators are too successful, again some of them starve. So generally, the predator/prey equation remains balanced. Hence, there is no moral inequity that one could justify labeling Nature or Logic as "Evil".
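The balance described above is what ecologists usually illustrate with the textbook Lotka-Volterra predator-prey equations, which the post itself does not mention. The sketch below integrates them with simple forward Euler steps; the function name `lotka_volterra` and all parameter values are arbitrary assumptions, chosen only to show the qualitative behavior: when predators become too successful the prey crash, the predators then starve back, and the prey recover, so the two populations oscillate instead of one wiping out the other.

```python
def lotka_volterra(prey, pred, alpha=1.0, beta=0.1, delta=0.075, gamma=1.5,
                   dt=0.001, steps=20_000):
    """Integrate the Lotka-Volterra equations with forward Euler steps.

    d(prey)/dt = alpha*prey - beta*prey*pred    (prey breed, and get eaten)
    d(pred)/dt = delta*prey*pred - gamma*pred   (predators eat, or starve)
    Returns the lists of prey and predator populations at every step.
    """
    prey_hist, pred_hist = [prey], [pred]
    for _ in range(steps):
        d_prey = alpha * prey - beta * prey * pred
        d_pred = delta * prey * pred - gamma * pred
        prey += d_prey * dt
        pred += d_pred * dt
        prey_hist.append(prey)
        pred_hist.append(pred)
    return prey_hist, pred_hist


# Start below the equilibrium (prey = gamma/delta = 20, predators = alpha/beta = 10).
prey, pred = lotka_volterra(prey=10.0, pred=5.0)
print(f"prey range from {min(prey):.1f} to {max(prey):.1f}")
print(f"predators range from {min(pred):.1f} to {max(pred):.1f}")
```

Neither population reaches zero; both cycle around the equilibrium point. Forward Euler tends to let these cycles drift slowly outward, so a longer run would want a smaller `dt` or a better integrator, but for a short illustration it is adequate.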

The term "Evil" is a generalization or personification of the outside world, as related to the self. And it would more accurately be labeled "imbalance" or "unfairness" or "injustice". Is it unfair for a lion to use its "predatory logic" & natural weapons to overwhelm a gazelle? Philosophically, we tend to think of Nature as amoral, and reserve immoral or "evil" labels for human behaviors, assuming that they should know better. Yet, only recently has the notion of Ecological Balance occurred to humans. And we are only gradually learning how to apply that knowledge, without tipping the balance against the survival of Homo sapiens. To do so would be Misanthropy, which is an injustice to the majority of innocent humans, who live modest & moral, sometimes oppressed, lives.

BTW, none of that Natural Logic is what Hoffman was talking about in the OP video. He was talking specifically about our innate blindness to the underlying logical mechanisms of nature, which we "see" only in the abstract. Yet again, that's normally enough information for our species to survive and thrive. Those short-sighted "wise apes", as a group, are indeed at the top of the world's food chain. And the natural balance has certainly become temporarily imbalanced, due to human Culture (reason) & Technology (tools). So, predatory humans do use their innate advantages, including the applied logic of Science & Engineering, to modify natural niches to suit human preferences. But, I wouldn't put the blame on the tool : Logic. As gun advocates accurately point out : "guns don't kill people -- people with guns kill people". Should we lobotomize people who are guilty of using Logic?

We civilized apes just happen to be in a position similar to the (formerly locally extinct) wolves, returned by humans to Yellowstone, to reset the imbalance of over-populated prey animals. Due to their innate talents & tools, including Predatory Logic, they quickly became too successful. So now, environmentalists are calling for culling. Not because their (wolf & human) logic is evil, but because "success" is a two-edged sword. Fortunately, humans, being moral agents, are capable of setting limits (government, laws) on their own group behavior. That doesn't convert Devils into Angels, it merely restores temporary balance to a dynamic world. :naughty: :halo:

Moral agency is an individual's ability to make moral judgments based on some notion of right and wrong and to be held accountable for these actions. A moral agent is "a being who is capable of acting with reference to right and wrong."

___Wikipedia

FOOD CHAIN JUSTICE

PS__A logical system without a good/bad value system is merely a dumb mechanism.

TheMadFool

13.8k

A serious and good philosophical work could be written consisting entirely of jokes. — Ludwig Wittgenstein

TheMadFool

13.8k

I had never heard of "predatory logic" before. But, after a brief review, I see it's not talking about capital "L" Logic at all. Instead, it refers to the innate evolutionary motives that allow animals at the top of the food chain to survive and thrive. PL is more of an inherited hierarchical motivation system than a mathematical logical pattern. Logic is merely a tool that can be used for good or bad purposes. To call the "logic" of an automobile "evil" is to miss the point that a car without a driver is also lacking a moral value system. It could be used as a bulldozer to ram a crowd of pedestrians, or as an ambulance to carry the wounded to a hospital. The evil motives are in the moral agent controller, not the amoral vehicle. — Gnomon

I was just wondering how the body structure of predators seems more complex, more designed (retractable claws, pincers, stereoscopic vision, fangs, agility, power, clubs, etc.) compared to that of prey. If I were the creator, I'd have to put more work into the blueprint for a predator than for a prey animal.

Basically, the point I'm trying to get across is that predators need to be more intelligent than prey. Planet earth is a case in point - the most intelligent organism viz. humans are predatory, in fact they're the apex predator. Makes me wonder about the wisdom of the Arecibo Message, SETI, Voyager Golden Record. Are we sending out an invite for a gala feast, us on the menu?

Isaac

10.3k

When we develop models analytically, such as in science or in everyday reasoning, it is certainly possible - and seductive - to come up with a model that is resistant to falsification. But it seems to me that such a modelling system would be difficult to evolve in the first place, because the selective pressure would be weak to non-existent. — SophistiCat

Yes, I agree. I think the development of beliefs is far more directly causal than that. I see the hidden states external to the system as causing the states internal to it, which, through interaction, seek to minimise the surprise those external states can generate, not teleologically, but as a simple reduction of free energy. There's a good paper by Friston (although very speculative, I should stress) on how this might come about.

https://discovery.ucl.ac.uk/id/eprint/1399513/1/Friston_Journal_of_the_Royal_Society_Interface.pdf

GraveItty

311

The "good" that you mention is nothing more than a human invention!! There is no good or bad in universe. — dimosthenis9

I'm not sure I understand the exclamation marks. Why? Even if it is, aren't people part of the universe and thus good and bad?

GraveItty

311

I watched the video. A new propagandist for the sciences and the central dogma of biology (equivalent to Dawkins's dumb selfish-gene interpretation; he's another propagandist), smothered in a sauce of metaphysical BS about a reality in which the space and objects around us are compared to symbols on a computer screen. It's pretty obvious that a red apple is a construction of the mind. And so is his conception of the red apple. What exactly his "transistors and diodes and electrical currents" are doesn't become apparent in the video, and I won't bother investing further. As a physicist, I stick to the physical interpretation, endowed with a magical ingredient giving rise to consciousness. That is an interpretation too, but it's all we have. There is no single ultimate truth (the thing the sciences keep hammering on). The bottle-fucking beetle is fun though! Imagine if that were our reality... Jack Daniel's replacing my wife... "It's been a long time, Jack, welcome back! How's Johnnie Walker doing?"

dimosthenis9

846

Even if it is, aren't people part of the universe and thus good and bad? — GraveItty

Is there any good or bad in the universe apart from human societies? Aren't these simply concepts that people try to define in order to make our societies, and our living together, function?

Can an a priori human skill like Logic ever be bad or evil? Especially logic, which is our "searching for truth engine", which helped us the most to evolve?

We could perhaps discuss whether we sometimes make bad use of such a priori human skills. But regarding Logic, I can't see even one harm that it could bring, or has brought, to humanity.

On the contrary, I see many harms caused by the lack of it: by underestimating it and ignoring it.

Logic is our most precious virtue. There is nothing evil about that.

GraveItty

311

Is there any good or bad in the universe apart from human societies? Aren't these simply concepts that people try to define in order to make our societies, and our living together, function? — dimosthenis9

"Human societies", like "a country", are abstract concepts and as such cannot be good or bad. A country has no mind of its own, and neither does a society. Good and bad are not "defined"; they are just human qualities (in this sense, a society trying to get rid of the bad is more inhumane than one in which the bad can exist).

Can an a priori human skill like Logic ever be bad or evil? Especially logic, which is our "searching for truth engine", which helped us the most to evolve? — dimosthenis9

To answer your first question: it can. In science-based societies it is even doing evil, though with no bad intentions. Look at the state of the world. Look at the harm done to Nature.

The remark that follows is, pardon the expression, bull-nonsense. Searching for a truth engine (whatever that may mean...) helping us most to evolve? If you wanna evolve into a truth engine, then maybe it's handy. I surely don't!

dimosthenis9

846

"Human societies", like "a country", are abstract concepts and as such cannot be good or bad. A country has no mind of its own, and neither does a society. Good and bad are not "defined"; they are just human qualities — GraveItty

Human societies aren't abstract concepts. They are reality. I'm just saying that notions like "good" and "bad" are only conceptions that humans use in order to live together in harmony.

To answer your first question: it can. In science-based societies it is even doing evil, though with no bad intentions. Look at the state of the world. Look at the harm done to Nature. — GraveItty

Which human a priori skill, exactly, is evil by nature? We can discuss the use we make of such a priori skills and how we can create evil with them. But by nature, these skills on their own can never be evil. Is our ability to walk evil too, then?

Look at the harm done to Nature. — GraveItty

I can't follow you. Is that Logic's fault?

Searching for a truth engine (whatever that may mean...) helping us most to evolve? If you wanna evolve into a truth engine, then maybe it's handy. I surely don't! — GraveItty

I don't want to evolve into anything. We humans already have that mental ability. Logic is our mind's searching-for-truth mechanism, and I still can't see even one way in which Logic brings harm.

And yes, of course Logic has helped us evolve more than anything else. We use Logic to find the best solution, the truth, in every situation or problem we have faced as humanity. And that's how we got here.

I can't see how anyone can deny that. But apparently you do.

GraveItty

311

Human societies aren't abstract concepts. — dimosthenis9

They are. But they are part of reality.

I don't want to evolve into anything. We humans already have that mental ability. Logic is our mind's searching-for-truth mechanism, and you still haven't mentioned even one harm that Logic brings. — dimosthenis9

Of course, logic per se does no harm. But logic and the "search for truth", relentlessly pursued by scientists (who are indeed similar to truth or logic engines, though luckily there are exceptions) and applied to Nature, bring our physical world, and all the life in it, to the brink of extinction. Many species have already been swept from the Earth's surface, and people suffer from science-based technology (as do the many caged animals used in experiments to find the so-called Truth; some scientists are even rewarded for systematically torturing animals!). And it doesn't look as if the situation is getting better.

Welcome to The Philosophy Forum!


- © 2026 The Philosophy Forum