Count Timothy von Icarus

4.3k

Post-modernism is generally seen as being, to some degree, in conflict with popular realist accounts of science. However, I have come to the conclusion that successful theories in science support some of the key claims of post-modernism.

To explain why, we have to briefly turn to the "Scandal of Deduction."

The Scandal of Deduction

In any deductively valid argument, the conclusions must already be implicit in the premises. From this, it follows that no new information is generated by deterministic computation in the form of logical operations, nor by deduction in general. On the face of it, this widely accepted proposition, the “scandal of deduction,” seems ridiculous. Deductive arguments appear to carry information; they reduce our uncertainty. Data analysis software seems to tell us new things about our data, etc.

Prior attempts to analyze this disconnect have tended to turn to less popular semantic theories of information as a means of resolving the problem. My contention is that it can be explained entirely within the framework of Claude Shannon’s foundational theory of information.

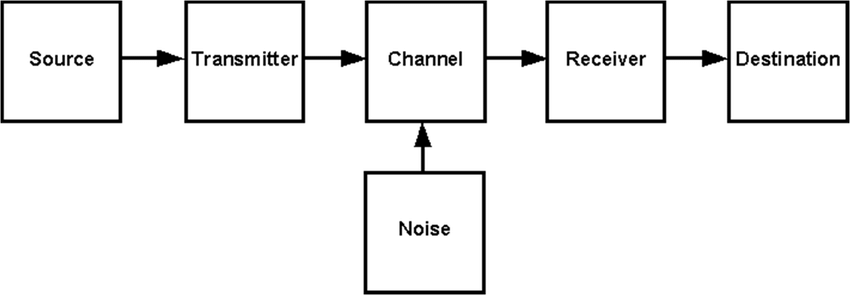

Shannon’s theory shows how information flows from a source to a destination. Information is transmitted across a channel. The channel is the medium through which a signal passes from the information source to a receiver (e.g., between a physical system and a measuring device). The receiver then “reconstructs the message from the signal,” making it available to a destination, “the person (or thing) for whom the message is intended.”

The key to resolving the Scandal of Deduction is to realize that computation itself, and the activities of the human brain, all involve ongoing communication. We tend to think of the “destination” in Shannon’s theory as a unified entity, but this is only true in the abstract. We might think of the text of this post as the signal in Shannon’s model, the space between our eyes and the screen as the channel, and our eyes as the receiver, but this is too simple. Our experience of sight is the result of an extraordinary number of neurons computing and communicating together. We could as well talk of electrical activity down the optic nerve or the collective action of some neurons in the visual cortex as the signal vis-à-vis different stages of the process. Cognitive science tells us there is no one “destination,” no Cartesian homunculus, who ultimately understands the message; consciousness is an emergent process.

Likewise, in the archetypal example of a Turing Machine, there is communication after an input has been fed to the machine: the head of the machine has to read the tape. Nothing in the Turing Machine is a “destination” that receives the entire output at once, just as no single neuron understands a message and no single logic gate does computation alone. In the same way, “memory” systems can be seen as a form of communication between past states of a system and its future self.
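To make the point about the read head concrete, here is a minimal sketch of a Turing machine simulator. The machine (a binary incrementer), its state names, and the `run` helper are illustrative assumptions, not anything from the post. The relevant feature is that the result is fully determined by the rules and the input, yet it only exists on the tape after the head has read and written cell by cell.

```python
from typing import Dict, Tuple

# Hypothetical transition table: (state, symbol read) -> (symbol to write, head move, next state)
Rules = Dict[Tuple[str, str], Tuple[str, int, str]]

INCREMENT: Rules = {
    # Move right until the end of the binary number.
    ("seek_end", "0"): ("0", +1, "seek_end"),
    ("seek_end", "1"): ("1", +1, "seek_end"),
    ("seek_end", "_"): ("_", -1, "carry"),
    # Add one, propagating the carry leftward.
    ("carry", "1"): ("0", -1, "carry"),
    ("carry", "0"): ("1", 0, "halt"),
    ("carry", "_"): ("1", 0, "halt"),
}

def run(rules: Rules, tape_input: str, state: str = "seek_end") -> Tuple[str, int]:
    """Run the machine until it halts; return (tape contents, number of steps)."""
    tape = dict(enumerate(tape_input))  # sparse tape; unwritten cells read as blank "_"
    head, steps = 0, 0
    while state != "halt":
        symbol = tape.get(head, "_")          # the head can only read one cell per step
        write, move, state = rules[(state, symbol)]
        tape[head] = write
        head += move
        steps += 1
    cells = range(min(tape), max(tape) + 1)
    return "".join(tape.get(i, "_") for i in cells).strip("_"), steps

print(run(INCREMENT, "1011"))  # ('1100', 8)
```

Here eleven plus one becomes twelve, but only after eight read/write steps; no part of the machine holds "1100" before the last step has happened.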

The information entropy of an original message is not changed by computation; it sets an upper bound on the reduction in uncertainty a recipient can get from that datum alone*. However, the “Scandal” only exists if we assume that all the information required to generate "meaning" exists in the physical signal of some message. This is obviously false: rocks do not gain information about logical truths when we carve those truths into them. Meaning is not contained in signals alone.
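The claim that computation adds nothing to the entropy of the original message corresponds to a standard result: for any deterministic function f, H(f(X)) ≤ H(X). The sketch below checks this on a toy case; the uniform 3-bit source and the two functions are assumptions chosen purely for illustration.

```python
import math
from collections import defaultdict
from typing import Callable, Dict, Hashable

def entropy(dist: Dict[Hashable, float]) -> float:
    """Shannon entropy, in bits, of a discrete distribution {outcome: probability}."""
    return sum(p * math.log2(1 / p) for p in dist.values() if p > 0)

def push_forward(dist: Dict[Hashable, float],
                 f: Callable[[Hashable], Hashable]) -> Dict[Hashable, float]:
    """Distribution of f(X) when X ~ dist; outcomes that f merges pool their probability."""
    out: Dict[Hashable, float] = defaultdict(float)
    for x, p in dist.items():
        out[f(x)] += p
    return dict(out)

def reverse(s: str) -> str:
    """Invertible: distinct inputs give distinct outputs, so no information is lost."""
    return s[::-1]

def parity(s: str) -> int:
    """Many-to-one: keeps only whether the number of 1s is odd."""
    return s.count("1") % 2

# Hypothetical source: a uniformly random 3-bit message, so H(X) = 3 bits.
X = {format(i, "03b"): 1 / 8 for i in range(8)}

print(entropy(X))                          # 3.0
print(entropy(push_forward(X, reverse)))   # 3.0
print(entropy(push_forward(X, parity)))    # 1.0
```

An invertible computation preserves the entropy; a many-to-one computation can only lose some. Neither can add any.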

A machine running an algorithm doesn't "know" the output of that algorithm before it is done computing, for precisely the same reason that a communication cannot arrive at a destination before it has been sent. The enumeration of logical entailments in computation is a causal process and takes time to complete; the "Scandal of Deduction" comes from assuming that effects can precede their causes.

When we understand messages we “bring information to the table.” The initial signals we receive are combined with a fantastic amount of information stored in the brain before we become consciously aware of a meaning.

Messages do not exist as isolated entities in the real world; when we receive a message, it is necessarily correlated with other things in the world. Indeed, Shannon entropy can only be calculated relative to the possible outcomes of some random variable, and knowing which outcomes are possible, and how probable they are, requires background information.
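To illustrate that entropy is defined relative to a model of the possible outcomes, the sketch below scores one and the same received symbol, "1", under three different assumptions a receiver might bring about its source. The three models are hypothetical; only the entropy and surprisal formulas are standard.

```python
import math
from typing import Dict

def entropy(dist: Dict[str, float]) -> float:
    """Shannon entropy in bits: the expected surprisal over all possible outcomes."""
    return sum(p * math.log2(1 / p) for p in dist.values() if p > 0)

def surprisal(dist: Dict[str, float], outcome: str) -> float:
    """Information in bits carried by one outcome, relative to the assumed model."""
    return math.log2(1 / dist[outcome])

# Three hypothetical models a receiver might bring to the same signal "1".
models: Dict[str, Dict[str, float]] = {
    "fair coin": {"0": 0.5, "1": 0.5},
    "fair die": {str(k): 1 / 6 for k in range(1, 7)},
    "source always sends 1": {"1": 1.0},
}

for name, dist in models.items():
    print(f"{name}: H = {entropy(dist):.3f} bits, "
          f"surprisal of '1' = {surprisal(dist, '1'):.3f} bits")
```

The physical signal is identical in all three cases, but the same "1" carries 1.000 bits, about 2.585 bits, or 0.000 bits depending on the background model the receiver supplies.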

The Relevance of Information Theoretic/Computational Accounts of Consciousness to Post-Modernism and Theories of Meaning

The information that signals are combined with in the brain varies by person and it varies according to the amount of cognitive resources that we are able to dedicate to understanding the message. "Understanding," is itself an active process that requires myriad additional communications between parts of the mind and the introduction of vast quantities of information not in the original signal.

This is why listening to someone read a passage while you are distracted versus meditating on/completing a "deep read" of that same passage can result in taking an entirely different meaning from the exact same signal. The semantic content of messages is thus determined not only at the individual level, but at the level of the individual instance of communication.

That said, the amount of resources we commit to understanding a message is dictated not only by conscious processes (e.g., executive function) but also by unconscious ones. If we are angry, hungry, high on drugs, etc., the ways in which we understand are affected in ways we are often unaware of. Social conditioning, norms, etc. are another way our interpretation of signals is affected unconsciously. So here, post-modernism has a point about how culture interacts with experience and meaning. This is true not simply of language but of all of phenomenal existence, since all incoming sensory data is itself a "message."

For an example of this, consider how you are able to properly respond to someone without giving offense even when you are distracted and not really listening to what they are saying. Here, learned social norms of discourse are unconsciously dictating both the amount of meaning you glean from incoming signals (i.e., just what is needed to complete your part of the language game), as well as the signals you will produce in response.

A few other points:

- In a way, post-modernism is correct to reject the "universal validity" of binary oppositions. While it is true that some things imply their opposite, it is not true that all entailments are necessarily immediately accessible to us. This follows if we adopt some form of computational theory of mind, even a limited one. Per the analysis of the Scandal of Deduction above, we do not understand all the entailments of some proposition until we have actually finished the computation that enumerates them. Further, depending on our conscious and unconscious priorities, we might not even perform such computations, so such "implied opposites" simply do not exist (for us) in some contexts. We must differentiate between entailment as an abstract, eternal relation and entailment as enumerated in physical computation.

- Derrida's claim, following Saussure, that signs gain their meaning through contrast with other signs alone is, in fact, completely in line with modern information theory. Taken with the point above, though, we must keep in mind that even if a sign is defined in terms of oppositions, we need not always have all those oppositions present within conscious awareness to understand part of a sign's meaning. This seems to fit with the ideas behind deconstruction.

- The "belief that phenomena of human life are not intelligible except through their interrelations," and that "these relations constitute a structure, and behind local variations in the surface phenomena there are constant laws of abstract structure," Blackburn's description of structuralism, could as well be applied to an information theoretic understanding of cognition. Cognition, in this account, involves introducing a vast amount of information into our active understanding of signals, and this is only possible because we are equipped to map commonalities onto one another.

- Information theory gives us the tools to explain how it is that incorporeal entities such as recessions, cultures, laws, etc. can exist within a physicalist ontology and causally interact with the world. Such theories of incorporeal entities necessarily share a lot of similarities with structuralism. Moreover, they offer us a way to understand how entities like culture or zeitgeist can both exist in the world and have a causal role in our perceptions.

- An information theoretic model of consciousness suggests that things like Bachelard's proposed "epistemological obstacles/breaks," or "unthought/unconscious structures that were immanent within the realm of the sciences," are not only possible but likely to exist.

- Post-modernism may overstate the justification for the linguistic turn. When we understand words, we use our entire sensory system. The same system used to process vision is used to imagine or remember images. Likewise, the auditory cortex processes incoming sounds and also helps us imagine sounds. Thus, the line between "language" and "phenomena" is artificial. Language is not some distinct thing we do that cuts us off from "non-linguistic phenomenal existence"; rather, language does its job by utilizing the same systems that give rise to phenomenal existence itself.

* To see why, consider that a program which randomly generates pages of text will, given enough time, eventually produce every page that will ever be written in English: the pages of a paper on the cure for cancer, the pages of a proof that P ≠ NP, a page accurately listing future winning lottery numbers, etc.

However, the information contained in messages is about (correlated with) their source. Even if such a program miraculously spawned several pages that purported to hold the cure for cancer, there would be almost no chance that they corresponded to a real cure, since there are far more ways to write papers on incorrect cures for cancer than there are ways to write a paper about how to actually cure cancer. Any messages from such a program only tell us about the randomization process at work.
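For a sense of scale, here is a back-of-the-envelope calculation. It assumes, hypothetically, a page of 3,000 characters drawn uniformly from a 27-symbol alphabet (26 letters plus a space); real pages and real generators would change the numbers but not the order-of-magnitude point.

```python
import math
from typing import Tuple

ALPHABET_SIZE = 27   # assumption: 26 letters plus space, no punctuation
PAGE_LENGTH = 3000   # assumption: characters per page

def page_stats(alphabet_size: int, page_length: int) -> Tuple[float, float]:
    """Return (bits needed to pick out one page, log10 of the chance a random page matches it)."""
    bits = page_length * math.log2(alphabet_size)
    log10_p = -page_length * math.log10(alphabet_size)
    return bits, log10_p

bits, log10_p = page_stats(ALPHABET_SIZE, PAGE_LENGTH)
print(f"{bits:.0f} bits per page; P(specific page) ~ 10^{log10_p:.0f}")
```

Under these assumptions, a single page takes about 14,265 bits to specify, and the chance that a uniformly random page matches any given page is roughly 10^-4294. Because the generator's output is independent of any fact about cancer, a page that merely happens to match tells us nothing about cancer.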

Ø implies everything

264

I didn't read your entire post because I found it overly complicated. If you tell me my response shows a lack of understanding of the essence of your stance, then I will read the entirety of your post.

All the information in a formal system finds its most reduced informational form in its axioms and inference rules, just like 122122122... finds its most reduced informational form in repeat(122). So, in this sense, you do not gain any information when you derive things in a formal system; the only thing you do is expand the information, like repeat(122) = repeat(122122).
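The repeat(122) idea can be illustrated with a compressor. In this sketch, `repeat` is a hypothetical finite stand-in for the post's notation, and zlib's output size is only a practical upper bound on the shortest description (the true minimum, Kolmogorov complexity, is uncomputable).

```python
import zlib

def repeat(pattern: str, times: int) -> str:
    """Hypothetical finite stand-in for the post's repeat(122): expand a short description."""
    return pattern * times

expanded = repeat("122", 1000)                  # 3000 characters of 122122122...
compressed = zlib.compress(expanded.encode())   # a practical (not minimal) short description

# The two "different" descriptions from the post expand to the same string.
assert repeat("122", 1000) == repeat("122122", 500)
# Decompressing recovers the expansion exactly: nothing is lost and nothing is added.
assert zlib.decompress(compressed).decode() == expanded

print(len(expanded), len(compressed))  # 3000, then a small number that depends on the zlib build
```

The long string is fully determined by its short description, yet someone holding only the short form still has to run the expansion to see, say, what the 2,000th character is.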

But this is all talking about the information inherent in some Platonic form of a formal system. It doesn't matter. Our minds are limited, we are not capable of taking information in its most reduced form and immediately seeing all of its expanded forms. Thus, we learn things when we expand information; which means we do derive new information from deduction. The new information finds its place in our minds, not in the Platonic realm, but who gives a damn?

The Scandal of Deduction is, although true, no scandal at all.

Count Timothy von Icarus

4.3k

Yeah that's it. But the move isn't just to assert that the scandal is resolved "because we are finite," but to show how the foundational model in information theory generates this problem by having a Cartesian Homunculus hidden in plain sight.

And given the amount of ink spilled on this topic, it does not seem to have been easy for people to see how mathematical relations, understood as abstract/Platonic entities, differ in fundamental ways from computation, understood as a description of physical systems (or of abstractions based on them, like Turing Machines).

The other part is that semantic meaning is not contained in messages themselves, which is why several semantic theories of information have failed. The problem is an overly simplistic formalism of how cognition works, one that does not allow that what is being formalized is a process estimated to require roughly a thousand million million (10¹⁵) floating-point operations per second to represent digitally (and potentially far more).

IMO, a reason for this disconnect is the fact that the amount of information entering conscious awareness appears to be an extremely small fraction of all information being processed by the brain at any given point. Thus, the complexity of producing meaning from signs is all hidden from us, causing people to either propose wholly insufficient formalisms to account for semantics or to posit the necessity of dualism or idealism to explain the disconnect.

But actually, most of the post is about how some theses in post-modern philosophy fit with the information theoretic model of cognition.

javra

3.2k

While the so-called “scandal of deduction” is not something I personally find great interest in (primarily due to what I take to be the exceeding ambiguity, and possible equivocations, to what is meant by the term “information”), I can certainly respect your argument and agree with its conclusion. To paraphrase my understanding of it: The understanding of conclusions obtained from valid (or sound) deductions will always be novel to anyone who has not previously held an understanding of that conclusion but who does hold awareness of the premises – and so will thereby bring about new information to such persons.

I here however primarily want to champion this following affirmation, which some might not deem intuitive:

When we understand messages we “bring information to the table.” The initial signals we receive are combined with a fantastic amount of information stored in the brain [I'd prefer to say, "in the unconscious mind ... which holds the cellular processes of the brain as its constituent makeup (as does consciousness)"] before we become consciously aware of a meaning. — Count Timothy von Icarus

Every understanding (else here expressed, “meaning”) – this as one example of what we can intend to consciously convey – which we seek to express to others via perceptual means (these most often being visual, auditory, or tactile signs among humans) shall always be understood by others via that body of ready established understandings which each individual other for the most part unconsciously holds in a ready established manner. This will of course apply just as much to all understandings others intend to express to us.

It can thereby be safely inferred that, most of the time, the meaning we are consciously aware of and intend to convey to others via signs will end up in the other’s mind being a hybridization of a) the meaning we hold in mind that we intend to convey and b) the ready established, largely unconscious, body of meaning which the other is endowed with. And, so, in most cases it will not be understood by the other in an identical way to our own conscious understanding. Less often, we can feel, or intuit, that the other fully “gets us” – such that what they hold in mind is felt to be indiscernible from what we hold in mind – and we can corroborate this feeling by further interactions with the said other. Nevertheless, in all such instantiations, the meaning which is conveyed shall always be in large part dependent – not on the perceptual phenomena employed, but, instead – on the ready present, largely unconscious, body of meaning the other is endowed with.

You’ve exemplified rocks. One can just as validly exemplify lesser animals. A typical dog, for example, can understand a very limited number of meanings which we convey by signs. But express the phrase “biological evolution” or “calculus” to a dog and the dog will hold no comprehension of the meaning one would be intending to convey by these linguistic signs. The same dog might get you when you utter “good dog”, but the dog’s understanding of what this meaning refers to will be fully dependent on the dog’s largely unconscious body of ready-present understandings.

Which is to only further endorse parts of the OP such as the following:

The information that signals are combined with in the brain varies by person and it varies according to the amount of cognitive resources that we are able to dedicate to understanding the message. "Understanding," is itself an active process that requires myriad additional communications between parts of the mind and the introduction of vast quantities of information not in the original signal. — Count Timothy von Icarus

--------

At any rate, nice OP!

RogueAI

3.5k

Someone on a philosophy forum once said the amount of new information (they might have said knowledge) in a dictionary is essentially zero, because all the dictionary does is refer to itself. That's always stuck with me. What do you think of that?

Wayfarer

26.2k

Your prose is always a model of clarity, and this piece is very well written, but I wonder if it is too much information (speaking of information!) for a forum post. I don't know if you write on Substack or Medium or any of the long-form prose platforms that are beginning to proliferate, but I suspect a piece of this length and density might be better suited to those media.

I did read the entire post, and hereunder a few points.

In any deductively valid argument, the conclusions must already be implicit in the premises.... — Count Timothy von Icarus

I don't know if this allows for the all-important role which Kant assigns to the synthetic a priori. A purely deductive truth, that if a man is a bachelor he must be unmarried, is indeed kind of trite, if not scandalous, but the role of the synthetic a priori is a different matter. Einstein's theory of special relativity could be considered an example. Concepts like time dilation, length contraction, and the equivalence of mass and energy (E=mc²) were not deduced purely from logical analysis but were derived from a combination of theoretical reasoning and empirical evidence. However, they are a priori in the sense that they hold true in all inertial frames of reference and do not require direct empirical verification for their validity. There are countless other examples in science (one classic being Dirac's prediction of anti-matter on the basis of mathematics.)

Shannon’s theory shows how information flows from a source to a destination. — Count Timothy von Icarus

Shannon's theory has been the source of an abundance of metaphors and allegories about the purported metaphysics of information, but we can't lose sight of the fact that Shannon was an electrical engineer, and his theory applies to the transmission of information across media (which is why the theory is so fundamental to technology). The fact that he linked his theory with entropy gives it an additional level of suggestiveness (did you know that Shannon only adopted the name 'entropy' for his measure at the urging of Von Neumann, who told him that, as nobody really understood what it meant, it would give him the edge in any debate?)

In any case, the major point is that Shannon's theory strictly speaking applies to information transmission via electronic media, and its use beyond that is allegorical.

Cognitive science tells us there is no one “destination,” no Cartesian homunculus, who ultimately understands the message; consciousness is an emergent process. — Count Timothy von Icarus

I don't think the fact that cognitive science can't identify such an entity detracts from the all-important role of judgement in the reception of information, nor does it account for the subjective unity of consciousness, which is the lived reality, even if cognitive science can't account for it. As you go on to say:

The initial signals we receive are combined with a fantastic amount of information stored in the brain before we become consciously aware of a meaning. — Count Timothy von Icarus

That information is not only 'in the brain'. I don't know if you're aware of the cognitive scientist and philosopher Alva Noë. In "Out of Our Heads," Noë argues against the view that consciousness is solely a product of the brain's activity. He contends that the traditional approach of trying to understand consciousness by studying neural processes within the brain is insufficient and ultimately misleading. Noë proposes that consciousness is not something that happens exclusively inside the brain but emerges through dynamic interactions between the brain, body, and the external world. As such, he is aligned with enactivism or embodied cognition, which explores how our perception and experience of the world are shaped by our embodiment and interaction with our surroundings.

That's about all, otherwise my own response will also be too long! I have a few other points but I'll save them for now.

Ø implies everything

264

Yeah that's it. But the move isn't just to assert that the scandal is resolved "because we are finite," but to show how the foundational model in information theory generates this problem by having a Cartesian Homunculus hidden in plain sight. — Count Timothy von Icarus

Given your post (which I have read in its entirety now), I assume you mean the issue with the Homunculus is that the information that reaches us never reaches a destination. Instead, it is processed bit by bit (pun intended), thus delocalizing the information's meaning across the computation; both the structure and the process (that is, it is delocalized both in space and time).

If I understand correctly, you think that the lack of unity in the destination means that the original information is never fully reconstructed, which is why we do not wind up with that original information; instead, we wind up with information derived from it, that is tied up into a model of reality (the information we bring to the table), and typically not fully put together as single entity anyways.

Although the lack of a destination would be able to explain the "appearance" of a paradox in the Scandal of Deduction, it is not necessary, nor the most pertinent fact. Everything is explainable by the fact that computation is needed to expand information (for lowly creatures like us), which thus explains why we can add information to our minds by manipulating information that already contained (formally) the information we added.

An analogy can be used. Let's say you have two objects present with you in a room. After an action that does not involve anything or anyone leaving or entering the room, a new object becomes apparent to you. How? Well, of course! The second object was contained within the first object, meaning the first object obscured the second. The action you performed was taking the second object out of the first object, thus revealing a new object. It is new to you, but not new to the room.

You are the computer and the room is the formal system; the first object is a (list of) premise(s) and the second object a theorem. The theorem already existed within the Platonic form of the formal system, but only after the computation did the theorem become apparent to you.
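The analogy can be sketched as forward chaining with modus ponens over simple if-then rules. The premises, rules, and the `max_rounds` budget below are hypothetical; the budget stands in for limited computational resources. Everything derivable is fixed by the premises and rules, but a consequence only becomes "apparent" once a step actually derives it.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule (antecedents, consequent): if all antecedents are known, the consequent follows.
Rule = Tuple[FrozenSet[str], str]

def derive(facts: Set[str], rules: List[Rule], max_rounds: int) -> Set[str]:
    """Apply every rule whose antecedents are known, for at most max_rounds rounds."""
    known = set(facts)
    for _ in range(max_rounds):
        new = {c for ante, c in rules if ante <= known and c not in known}
        if not new:
            break  # fixed point: nothing further is entailed
        known |= new
    return known

premises = {"A"}
rules: List[Rule] = [
    (frozenset({"A"}), "B"),
    (frozenset({"B"}), "C"),
    (frozenset({"A", "C"}), "D"),
]

print(sorted(derive(premises, rules, max_rounds=1)))   # ['A', 'B']
print(sorted(derive(premises, rules, max_rounds=10)))  # ['A', 'B', 'C', 'D']
```

With a budget of one round, D is already entailed by the premises (it is "in the room") but has not yet been taken out of the box; with enough rounds, the same premises yield it.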

Computation generates new information in the sense that it reveals the information you had "access" to but did not previously apprehend. Whether or not one can call that new information is just a matter of semantics; whether one should is a matter of practicality. I think we should, because practically speaking, deduction is a way to gain new information.

javra

3.2k

Because I don’t want to start a new thread on this topic, I’m presenting this as a tangent to the thread’s theme. Hopefully @Count Timothy von Icarus doesn’t object.

[Moderator note: @javra - the remainder of this comment has been copied to a separate discussion as while it's a very interesting question and worth pursuing, it is very much outside the scope of this original post's intent in my view.]

Mark Nyquist

783

Shannon information is a physical state.

Shannon information = [physical state]

Brain information (what we experience) is much more. There is the ability to hold and manipulate non-physicals.

Brain information = [the physical brain; (non-physical content)]

This is probably the basic premise behind dualism and Shannon information doesn't address it.

A simple example would be time perception. The past and future do not physically exist but are part of our mental process. My observation is that Shannon information has no ability to step outside the physical present but brain information does.

So discuss Shannon information all you like but be aware of its limitations.

And if you are looking for a grand theory of things you should abandon Shannon information and go with brain information. Try an explanation of time perception using Shannon information and the problems become apparent.

Count Timothy von Icarus

4.3k

Thank you.

primarily due to what I take to be the exceeding ambiguity, and possible equivocations, to what is meant by the term “information”

Yup, and it is a huge problem precisely because information theory has been so successful across so many fields. You see information invoked in physics, biology, economics, psychology, etc., and it also gets held up as a bridge that can even translate across these fields. For example, there is the idea that gold became a standard currency in so many places because of its physical traits, namely that it is scarce enough without being too scarce, and that its malleability gave it cryptological significance. That is, since you couldn't fake it, it said something about your real willingness to live up to trade obligations.

But everyone admits the term is loaded and lacks a clear definition. Attempts to operationalize it in different ways haven't necessarily done much to fix this. I think part of the reason is that we have been looking at the formalism in terms of quantification only, and not so much at the model that goes along with the formalism.

Every understanding (else here expressed, “meaning”) – this as one example of what we can intend to consciously convey – which we seek to express to others via perceptual means (these most often being visual, auditory, or tactile signs among humans) shall always be understood by others via that body of ready established understandings which each individual other for the most part unconsciously holds in a ready established manner. This will of course apply just as much to all understandings others intend to express to us.

Exactly. While I am not a fan of eliminativism, I have always been a big fan of R. Scott Bakker. While I don't think his Blind Brain Theory does what he wants it to, it made me realize how unconscious processes play this absolutely tremendous role in cognition that it is very easy to ignore. I will probably try to flesh out this set of ideas with those from this prior thread:

https://thephilosophyforum.com/discussion/11154/blind-brain-theory-and-the-unconscious/p1

It can thereby be safely inferred that, most of the time, the meaning we are consciously aware of and intend to convey to others via signs will end up in the other’s mind being a hybridization of a) the meaning we hold in mind that we intend to convey and b) the ready established, largely unconscious, body of meaning which the other is endowed with. And, so, in most cases it will not be understood by the other in an identical way to our own conscious understanding.

Exactly. And this flows with Gadamer's "Fusion of Horizons," theory very well. I actually think there is a ton of good work that can be done by mining the insights of continental philosophy and other humanities and attempting to put them into a framework that will play nicely with the sciences. Obviously, this will involve losing some things in translation, and some will complain that this necessarily "corrupts" the original sense in which the theories were offered.

I am not too worried about that. Some, obviously not all, continental philosophy has some incredible intuitions spelled out for us. However, the huge gulf between the two main branches of philosophy, and the (IMO currently healing) gulf between (mostly analytic) philosophy and science has kept these from being more widely dispersed. I think information theory and complexity studies in particular give us the language to begin a translation process. E.g., I'm also working on trying to put Hegel's theory of institution/state development, laid out in the Philosophy of Right, into the terms of the empirical sciences.

(And of course, part of the reason for the gulf is the "linguistic turn" leading continental philosophers to begin making up a slew of new compound words and phrases so as to avoid "cultural taint," but IMO this has mostly had the effect of making them unintelligible to people outside a small niche.)

javra

3.2k

And this flows with Gadamer's "Fusion of Horizons" theory very well. — Count Timothy von Icarus


Just checked it out. It strikes me as a very formal way of addressing what is traditionally meant by the non-euphemistic use of the term "intercourse": the course, or path, that comes into being through interactions and that all interlocutors pursue. We don't hear "verbal intercourse" too often, but that's what all communication consists of. As I see it, at least.

I actually think there is a ton of good work that can be done by mining the insights of continental philosophy and other humanities and attempting to put them into a framework that will play nicely with the sciences. — Count Timothy von Icarus

For lack of a better current analogy, I find a tightrope in the empirical sciences between raw data empirically obtained and the (inferential) theories that account for the data. That said, for me at least, at the end of the day the empirical sciences are about validating falsifiable inferences via empirical observations. I often like to simplify the matter into the humorous story of: "Hey, I see X. Do you see X as well? Yea? Then let's see if others also see X. If we all see X, then X must be real to the best understanding of reality we currently possess. Given all the Xs we currently know of, what's the best way to inferentially account for all of them? OK, that theory works better than the others. We will hold onto it until the new Xs we discover can no longer be explained by the theory. So our theories regarding what is real can drastically change over time, but the Xs we've all observed and can still observe at will shall remain staple aspects of what we deem real."

All that to say, I agree with what you've expressed, bearing in mind that this very approach can itself be classed under the humanities, insofar as it is a philosophical understanding of how best to understand our commonly shared world. Hence, the philosophy of (the empirical) sciences. (As an aside, I'm very grateful for having taken such courses, offered by some fairly hardcore biological scientists, as part of my biological evolution studies in my university days. I developed better Cog. Sci. experiments because of it, for starters.)

I think information theory and complexity studies in particular give us the language to begin a translation process. E.g., I'm also working on trying to put Hegel's theory of institution/state development, laid out in the Philosophy of Right, into the terms of the empirical sciences. — Count Timothy von Icarus

That is awesome! I'd like to have access to your work someday, whenever you're ready to share it.

(And of course, part of the reason for the gulf is the "linguistic turn" leading continental philosophers to begin making up a slew of new compound words and phrases, so as to avoid "cultural taint," but IMO this has mostly had the effect of making them unintelligible to people outside a small niche). — Count Timothy von Icarus

I get that and I agree. The irony being that in my own work I haven't been able to find an alternative to using novel words for what I find to be novel ways of conceptualizing things. But yea, if one can avoid it, it's best to avoid doing so.

Count Timothy von Icarus

4.3k


Count Timothy von Icarus Your prose is always a model of clarity, and this piece is very well written, but I wonder if it is too much information (speaking of information!) for a forum post. I don't know if you write on Substack or Medium or any of the long-form prose platforms that are beginning to proliferate, but I suspect a piece of this length and density might be better suited to those media.

Yeah probably. I have been meaning to look into those.

I don't know if this allows for the all-important role which Kant assigns to the synthetic a priori. A purely deductive truth, that if a man is a bachelor he must be unmarried, is indeed kind of trite, if not scandalous, but the role of the synthetic a priori is a different matter. Einstein's theory of special relativity could be considered an example. Concepts like time dilation, length contraction, and the equivalence of mass and energy (E=mc²) were not deduced purely from logical analysis but were derived from a combination of theoretical reasoning and empirical evidence. However, they are a priori in the sense that they hold true in all inertial frames of reference and do not require direct empirical verification for their validity. There are countless other examples in science (one classic being Dirac's prediction of anti-matter on the basis of mathematics.)

Right, empirical evidence is crucial for coming up with and vetting the equations. The Scandal has to do with the fact that, given any deterministic algorithm A and an input I, A(I) yields the output O with probability 1 (i.e., 100%). This is true even for problems in NP (nondeterministic polynomial time), because the way you search the space of candidate solutions doesn't matter, so long as the search is deterministic. Non-deterministic computation would indeed introduce new information.
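To make the probability-1 point concrete, here is a minimal Python sketch (an illustration, not anything from the post) that estimates the conditional entropy H(O | I) from observed input/output pairs. A deterministic function (sorting) scores 0 bits, because each input fixes its output; a randomized shuffle scores a positive value. The shuffle is only a stand-in: randomized computation is not the same thing as the "non-deterministic" of NP, which is a theoretical model rather than coin flipping. All function and variable names are illustrative.

```python
import math
import random
from collections import Counter, defaultdict


def conditional_entropy(pairs):
    """Empirical H(O | I) in bits from a list of (input, output) observations."""
    outputs_by_input = defaultdict(Counter)
    for i, o in pairs:
        outputs_by_input[i][o] += 1
    total = len(pairs)
    h = 0.0
    for outputs in outputs_by_input.values():
        n_i = sum(outputs.values())
        weight = n_i / total  # how often this input was observed
        for count in outputs.values():
            p = count / n_i  # estimate of P(O = o | I = i)
            h += weight * p * math.log2(1 / p)
    return h


def deterministic_sort(xs):
    # Same input, same output, every time.
    return tuple(sorted(xs))


def randomized_shuffle(xs, rng):
    # A coin-flipping procedure: the output is not fixed by the input.
    ys = list(xs)
    rng.shuffle(ys)
    return tuple(ys)


rng = random.Random(0)
inputs = [(3, 1, 2), (9, 7, 8)] * 500
det_pairs = [(xs, deterministic_sort(xs)) for xs in inputs]
rand_pairs = [(xs, randomized_shuffle(xs, rng)) for xs in inputs]

print(conditional_entropy(det_pairs))   # 0.0
print(conditional_entropy(rand_pairs))  # positive, near log2(6) ≈ 2.58
```

In Shannon's terms, 0 bits of conditional entropy is exactly the claim that running the algorithm tells an observer who already knows the input nothing they could not have predicted.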

The idea isn't that we don't get new information by combining deduction and induction; it's that deterministic symbolic manipulation specifies its output with p = 1, which implies no information gain (the surprisal of an outcome with probability 1 is log₂(1/1) = 0 bits). But to take this a step further, Russell and the Vienna Circle largely held that mathematics as a whole was tautological in this way. I have seen some quite elaborate efforts to get around this, and I think they miss the point, which is that the meaning is what we're out to discover, and that doesn't all come with the formal symbol manipulation.
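The tautology point can be illustrated the same way. In this rough Python sketch (an illustration only), a formula's truth value is treated as a random variable over uniformly random truth assignments. A tautology such as modus ponens is true on every row, so learning its truth value carries 0 bits; a contingent formula like "p and q" does not. This captures only the Shannon side of the claim, not the meaning the post says we are out to discover.

```python
import itertools
import math
from collections import Counter


def truth_value_entropy(formula, n_vars):
    """Entropy (bits) of a formula's truth value when all 2**n_vars
    assignments to its Boolean variables are equally likely."""
    rows = [formula(*vals) for vals in itertools.product([False, True], repeat=n_vars)]
    counts = Counter(rows)
    total = len(rows)
    return sum((c / total) * math.log2(total / c) for c in counts.values())


def modus_ponens(p, q):
    # ((p -> q) and p) -> q, writing "a -> b" as "not a or b"
    return (not ((not p or q) and p)) or q


def conjunction(p, q):
    # A contingent formula: true on only one of the four rows
    return p and q


print(truth_value_entropy(modus_ponens, 2))  # 0.0
print(truth_value_entropy(conjunction, 2))   # about 0.811
```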

Of course, J.S. Mill had a great rebuttal to the "deduction does not tell us anything new" line, which was a deadpan: "one must have made some significant advances in philosophy to believe it." :rofl: He thought all deduction was still empirical because it is still experienced, but of course he lived before digital computers.

I don't think the fact that cognitive science can't identify such an entity detracts from the all-important role of judgement in the reception of information, nor does it account for the subjective unity of consciousness, which is the lived reality, even if cognitive science can't account for it. As you go on to say:

There is plenty of evidence against the Cartesian Homunculus/Theater outside of cognitive science. You see it in phenomenological accounts too. Hume describes a bundle of sensations and thoughts as opposed to the unified I of Descartes, Buddhist anattā gets at the same sort of thing, and Nietzsche complains that the "I" should be thought of as a "congress of souls." The Patristics had a similar conception; Saint Paul fights a war with "the members of [his] body," while one of them, I forget which, needs Christ to quell the uproar of the "legion within" (obviously recalling the exorcism in Luke; might be Basil the Great).

This tracks with behavioral data. You have observations of split-brain patients showing that they almost seem to have two minds at work. Asking them to write down their ideal job can yield a different answer from each hand (i.e., from each hemisphere of the brain). You have blindsight, where people with intact eyes but damaged occipital lobes no longer experience or remember sight, yet can navigate a room avoiding objects, or even catch a thrown object, presumably via visual pathways (e.g., through the superior colliculus) that bypass the damaged visual cortex.

So, even if you're coming from an idealist or dualist leaning, I think there is plenty to support the idea of some sort of mind/body interaction that in turn makes perception less than unified. IMO, physicalist philosophy of mind always seemed better supported than physicalism as an ontology, and I don't think they even necessitate one another (e.g., Kastrup's idealism seems like it would work fine with physicalist phil of mind).

That information is not only 'in the brain'. I don't know if you're aware of the cognitive scientist and philosopher Alva Noë. In "Out of Our Heads," Noë argues against the view that consciousness is solely a product of the brain's activity. He contends that the traditional approach of trying to understand consciousness by studying neural processes within the brain is insufficient and ultimately misleading. Noë proposes that consciousness is not something that happens exclusively inside the brain but emerges through dynamic interactions between the brain, body, and the external world. As such he is aligned with enactivism or embodied cognition, which explores how our perception and experience of the world are shaped by our embodiment and interaction with our surroundings.

Agree 100%. Isn't this the "embodied cognition" thesis? Brains in a vacuum don't do anything; a vacuum is going to be instantly fatal. That's an important point to highlight. So, at first, we do have the outside world, because we are thinking of the signal coming in from "outside," but of course there is also plenty of communication going on "inside" as well.

Right, I use Shannon's theory because it is so pervasive in attempts to understand this sort of thing, although it is often used in modified forms. We have logical information, Kolmogorov complexity, and Carnap and Bar-Hillel's semantic information. I think it's worthwhile to try to make sense of this using the major quantitative theory, because so much work has already been built around it. We can answer some questions about philosophy and perception without having to first solve the Hard Problem, which I don't see happening any time soon.
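Since these measures can come apart, a small Python sketch may help (an illustration with made-up strings). The empirical Shannon entropy of symbol frequencies ignores order, so a periodic string and a shuffled string with the same letter counts both score 1 bit per symbol. Compressed length with `zlib`, a common rough upper-bound proxy for Kolmogorov complexity (which is itself uncomputable), does register the order.

```python
import math
import random
import zlib
from collections import Counter


def symbol_entropy(s):
    """Shannon entropy in bits per symbol of s's letter frequencies (order ignored)."""
    counts = Counter(s)
    n = len(s)
    return sum((c / n) * math.log2(n / c) for c in counts.values())


def compressed_size(s):
    """zlib-compressed length in bytes: a rough upper-bound proxy for
    Kolmogorov complexity, which itself is uncomputable."""
    return len(zlib.compress(s.encode("ascii"), 9))


periodic = "ab" * 500
letters = list(periodic)
random.Random(42).shuffle(letters)
shuffled = "".join(letters)  # same 500 a's and 500 b's, in random order

print(symbol_entropy(periodic), symbol_entropy(shuffled))    # 1.0 1.0
print(compressed_size(periodic), compressed_size(shuffled))  # first is far smaller
```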

I'll respond more later, but I guess the problem here is that we're already defining information in terms of correlations. If we can't talk about what it tells us in terms of statistical probabilities, i.e. "the probability of X given message M," information theory doesn't work the way we want it to. But, because it has been so successful, we have good reason to think it is describing something right.
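In Shannon's framework, "what M tells us about X" is usually quantified as the mutual information I(X; M), which is computed entirely from the joint probabilities. A minimal Python sketch, with a hypothetical rain/forecast example standing in for X and M:

```python
import math


def mutual_information(joint):
    """I(X; M) in bits from a joint distribution given as {(x, m): p}."""
    p_x, p_m = {}, {}
    for (x, m), p in joint.items():
        p_x[x] = p_x.get(x, 0.0) + p  # marginal P(X = x)
        p_m[m] = p_m.get(m, 0.0) + p  # marginal P(M = m)
    return sum(
        p * math.log2(p / (p_x[x] * p_m[m]))
        for (x, m), p in joint.items()
        if p > 0
    )


# Hypothetical source: it rains half the time; M is a forecast message.
reliable = {("rain", "says rain"): 0.5, ("dry", "says dry"): 0.5}
useless = {
    ("rain", "says rain"): 0.25, ("rain", "says dry"): 0.25,
    ("dry", "says rain"): 0.25, ("dry", "says dry"): 0.25,
}

print(mutual_information(reliable))  # 1.0
print(mutual_information(useless))   # 0.0
```

The "useless" forecast is perfectly well-formed, yet it carries no information about the weather, because nothing in the joint distribution ties it to X.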

I think you're right that work has to be done and that entailments need to be computed. But a tricky thing is figuring out how to formalize that. E.g., if we see that one angle in a triangle is labeled 90 degrees, then we know it is 90 degrees; there's no need to talk about any computation beyond what vision and understanding already involve. But if we're also asked to answer a geometry question, then there is more that must be done.

Which gets at something absolutely crucial that I totally missed: what gets calculated "automatically," and what we have to work through consciously, is itself determined by our individual anatomy, past experiences, cultural conditioning, etc. It's a sort of Kantian transcendental, but only for some incoming perceptions, and it varies by person. E.g., I speak a bit of Arabic, but I have to focus to read Fusha. With English the connections are totally unconscious; I can't help but understand.

I feel like it's true in a limited sense. Anything only has information vis-a-vis how it interacts with other things. I don't know if self-reference is really required for that, it just needs to be self-contained. I have thought of a short story where aliens in another dimension find a magical portal on their world that is just a black and white screen that scrolls through all of Wikipedia. Since it is correlated with nothing in their world, it can never say anything to them. However, because the symbols correlate with one another, they can see self-referential patterns.
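The Wikipedia-portal story can be mimicked on a toy scale. This Python sketch (illustrative only; the string stands in for the scrolling text) compares the entropy of single symbols with the conditional entropy of the next symbol given the current one. The drop from log2(3) bits to 0 bits is a pattern detectable purely from how the symbols correlate with one another, with no reference to anything outside the text.

```python
import math
from collections import Counter


def entropy(counts):
    """Shannon entropy in bits of a Counter of observations."""
    n = sum(counts.values())
    return sum((c / n) * math.log2(n / c) for c in counts.values())


def unigram_entropy(text):
    return entropy(Counter(text))


def next_symbol_conditional_entropy(text):
    """H(next | current) in bits, estimated from adjacent symbol pairs."""
    pairs = Counter(zip(text, text[1:]))
    firsts = Counter(text[:-1])
    # Chain rule: H(next | current) = H(current, next) - H(current)
    return entropy(pairs) - entropy(firsts)


text = "abc" * 6  # a toy stand-in for the scrolling text

print(unigram_entropy(text))                  # about 1.585 (= log2 3)
print(next_symbol_conditional_entropy(text))  # 0.0
```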

But obviously we can string together words to say novel things about other words, and that can be informative. This makes sense, since the data source is, well, us. Likewise, the source of a dictionary is a set of editors, and we can learn things through the text in that way. For instance, just the words in a dictionary could tell you what century it is from.

Mark Nyquist

783


No doubt the Shannon theory has had a huge impact. The subject of information gets worked over a lot here. As for information theory and what is being taught in coursework at universities, my view is that it's subject-specific and of limited use in philosophy, for example on the problem you mentioned, the hard problem.

L'éléphant

1.8k


Someone on a philosophy forum once said the amount of new information (they might have said knowledge) in a dictionary is essentially zero, because all the dictionary does is refer to itself. That's always stuck with me. What do you think of that? — RogueAI

To me this is incorrect, as there are actually panels of experts who write the dictionary; they're just not cited for each word definition. So it looks as if "no human source" was used in the making of the dictionary. Besides, as years go by, words can change their meaning due to changes in the population's way of talking or writing. And more words are added to the dictionary: as we now know, "google" is a verb.

Mark Nyquist

783


Going a little deeper on the relation of Shannon information to our brain information, things get interesting when you consider computation. A basic premise in computing is that a Turing machine can mimic anything that can be computed. This would bear on the question of AI becoming conscious.

As I mentioned, Shannon information can be considered entirely physical, but brain information seems to have the ability, in its physical form, to hold non-physicals, past and future time being examples along with many other things. Since it's ultimately a computation, I don't see any reason it couldn't be modeled by a Turing machine.

Computers might do it differently as far as addressing data and building networks, but should be able to get the same results.
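The premise mentioned above is usually called the Church–Turing thesis; it is a thesis rather than a proven theorem. As a small illustration of one computing system carrying out another machine's computation, here is a minimal Python simulator for a single-tape Turing machine, with a hypothetical rule table that adds 1 to a binary number. It says nothing, of course, about whether brain states are computations of this kind, which is exactly what is in dispute.

```python
def run_turing_machine(rules, tape, state="start", blank="_", max_steps=10_000):
    """Simulate a single-tape Turing machine, starting at the leftmost cell.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is -1 (left) or +1 (right). The machine stops in "halt".
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += move
        steps += 1
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)


# Hypothetical rule table: add 1 to a binary number.
INCREMENT_RULES = {
    ("start", "0"): ("0", +1, "start"),  # scan right to the end of the number
    ("start", "1"): ("1", +1, "start"),
    ("start", "_"): ("_", -1, "carry"),  # past the end: turn around
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, keep carrying
    ("carry", "0"): ("1", -1, "halt"),   # 0 + carry = 1, done
    ("carry", "_"): ("1", -1, "halt"),   # ran off the left edge: new leading 1
}

print(run_turing_machine(INCREMENT_RULES, "1011"))  # 1100
print(run_turing_machine(INCREMENT_RULES, "111"))   # 1000
```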

Count Timothy von Icarus

4.3k

An interesting problem here is the fact that a given neuron (or group of neurons) involved in reading a message can alternatively be mapped to any of the five components in Shannon’s model of communications depending on how we choose to analyze the process. That is, there seems to be something fundamentally subjective about what we decide to call an information source, a signal, a channel, a receiver, etc.


I am still thinking this through, but I am starting to see the outlines of what I think is a good argument for a Kantian "Copernican Turn" in the philosophy of information. Which is funny, because the small field has been pretty focused on solutions to this problem via formalisms and attempts to "objectify" information, so maybe I'm just going 100% in the wrong direction from reading too much continental philosophy lately lol.
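The observer-relative mapping described in the post above can be sketched in a few lines of Python. The stage names are hypothetical and heavily simplified; the point is only that sliding the analyst's five-slot window (Shannon's information source, transmitter, channel, receiver, destination) along the same chain relabels the same stage.

```python
SHANNON_ROLES = ["information source", "transmitter", "channel", "receiver", "destination"]


def assign_roles(stages, start):
    """Label five consecutive stages with Shannon's five roles, beginning at
    index `start`. Where the window begins is the analyst's choice."""
    window = stages[start:start + len(SHANNON_ROLES)]
    if len(window) < len(SHANNON_ROLES):
        raise ValueError("not enough stages after `start` to fill all five roles")
    return dict(zip(window, SHANNON_ROLES))


# Hypothetical, heavily simplified chain of stages involved in reading text.
stages = ["screen", "light", "retina", "optic nerve", "LGN", "V1", "higher visual areas"]

for start in range(3):
    print(start, assign_roles(stages, start)["retina"])
```

Run as-is, this prints `0 channel`, `1 transmitter`, and `2 information source`: nothing about the retina changed, only where the analysis was cut.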

schopenhauer1

11k

I had a similar but different take, more post-modernism versus information theory:


https://thephilosophyforum.com/discussion/14334/adventures-in-metaphysics-2-information-vs-stories/p1

Wayfarer

26.2k

That is, there seems to be something fundamentally subjective about what we decide to call an information source, a signal, a channel, a receiver, etc. — Count Timothy von Icarus


Right: it’s a matter of judgement, which is always internal to thought, not something given in the data itself. A Kantian insight.

Mark Nyquist

783

Is information physical? If not, then it does not physically exist. And if information is physical, then show what its physical form is. Is there more to it than that?


Is a message the sending of information, or a coding and decoding of physical matter? A message containing information is an abstraction and doesn't physically exist, so it's best to speak of the encoding and decoding of physical matter.

I observe information existing as brain state... being encoded to physical matter in a standardized form... transmitted as physical matter... and the person receiving will decode the physical signal (the text), and the information will then physically exist in his brain state. This accounts for the physicality of the process without resorting to abstract concepts of information.

Wayfarer

26.2k

I observe information existing as brain state.. — Mark Nyquist


It’s what interprets any array that is at issue. The same information can be represented by many different states and in many different media. If the same information can be represented in many physical forms, how can it be physical?
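The multiple-realizability point can be shown with ordinary text encodings. In this Python sketch (an illustration; the message is arbitrary), the same message is stored as UTF-8 bytes, as UTF-16 bytes, and as a string of bits. The patterns differ, yet each decodes to the same message, but only given the matching decoder: read with the wrong one, the same bytes yield something else.

```python
message = "Is information physical?"

# Three different realizations of the same message.
utf8_bytes = message.encode("utf-8")
utf16_bytes = message.encode("utf-16-le")
bit_string = "".join(f"{byte:08b}" for byte in utf8_bytes)

print(utf8_bytes == utf16_bytes)                           # False
print(len(utf8_bytes), len(utf16_bytes), len(bit_string))  # 24 48 192

# Each one decodes back to the same message, given the matching decoder.
from_bits = bytes(int(bit_string[i:i + 8], 2) for i in range(0, len(bit_string), 8))
assert utf8_bytes.decode("utf-8") == message
assert utf16_bytes.decode("utf-16-le") == message
assert from_bits.decode("utf-8") == message

# Read with the wrong decoder, the same bytes yield a different string.
print(utf16_bytes.decode("latin-1") == message)  # False
```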

Mark Nyquist

783


Good that you point out "any array." Encoded matter is a special case, but all inputs to our brains can become information in the form of brain state.

There is a tendency to assign information to physical objects without regard for the workings of our brains.


- © 2026 The Philosophy Forum