-

Count Timothy von Icarus

4.3k

John Deely wrote a dialogue in which he envisioned what it would be like to explain his understanding of semiotics to a standard realist. It's an idealized conversation of sorts. Although the original is quite long, it was edited down to a bit under an hour, performed by two actors, and recorded. Here is the video:

https://youtu.be/AxV3ompeJ-Y?si=34TM8E5xiEowIPV2

Here is the original dialogue, which is significantly longer: https://ojs.utlib.ee/index.php/sss/article/view/SSS.2001.29.2.17/12520

I had discovered this a while back, but reading Deely again made me recall it. In the conversation, Deely talks about his hope that philosophy will come out of its "long semiotic dark age." I'm not sure if we're there yet, but it does seem to me that information theory (along with computational approaches and complexity studies) is becoming pretty ubiquitous in physics, and to a lesser extent in biology and the social sciences.

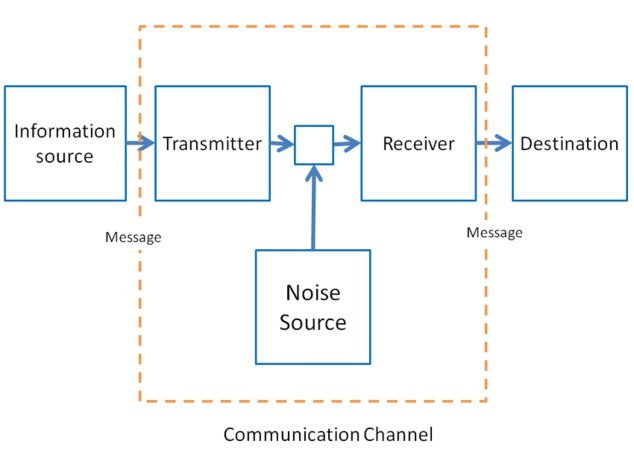

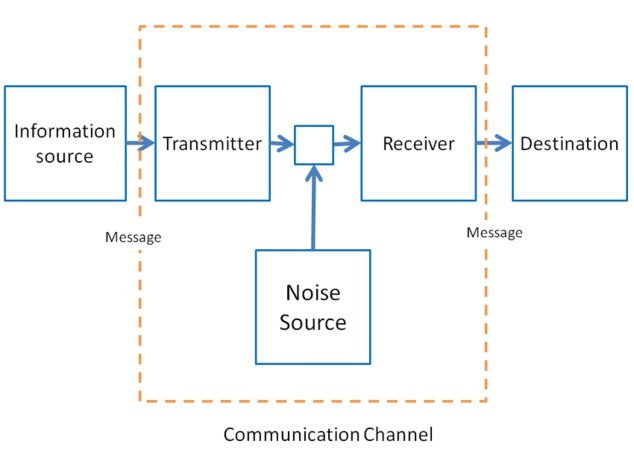

There seems to me to be a great deal of potential for crossover between IT and semiotics, but you can, and often do, see IT described in entirely dyadic and mechanistic terms. I have not been able to find any great sources comparing the two. Terrence Deacon's paper on bringing biosemiotics into biology is interesting food for thought, but it leaves open a lot of questions about the wider potential for crossover.

Anyhow, I figured I'd share the video because I think it's great, and try to gin up some discussion on this. I have always thought that information theory suggested a metaphysics that is at odds with popular "building block" metaphysics (i.e. "things are what they are made of" and "everything interacts the way it does because of the fundamental parts things are made of."). The reason for this is that information is always relational and contextual, not "in-itself."

For example, a hot cup of coffee might be a clue at a murder scene. The cup is still hot, so we know someone made it recently. However, knowing "the precise location and velocity of every particle in the cup" would not give us access to this "clue." The information that the cup of coffee was made recently lies in the variance between its temperature and the ambient environment. Likewise, if it was iced coffee, and the ice had yet to melt, we could also tell that it could not have been there long, although this information cannot be had from taking the ice cubes in isolation.
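A rough numerical sketch of the coffee example, assuming Newton's law of cooling (an assumption added here, not something the post relies on) and made-up values for the brewing temperature and the cooling constant. The function name and its parameters are hypothetical. The point it illustrates is that the cup's temperature alone fixes nothing; the estimate only exists relative to the ambient temperature.

```python
import math


def minutes_since_poured(cup_c, ambient_c, brewed_c=90.0, k_per_min=0.03):
    """Invert Newton's law of cooling, T(t) = ambient + (brewed - ambient) * exp(-k * t).

    brewed_c and k_per_min are illustrative guesses, not measured values.
    """
    if not ambient_c < cup_c <= brewed_c:
        raise ValueError("need ambient < cup <= brewed temperature")
    return math.log((brewed_c - ambient_c) / (cup_c - ambient_c)) / k_per_min


# The same 60 degree cup is a different "clue" in a cool room and a warm one.
cool_room = minutes_since_poured(60.0, ambient_c=20.0)
warm_room = minutes_since_poured(60.0, ambient_c=35.0)
print(f"cool room: about {cool_room:.1f} min, warm room: about {warm_room:.1f} min")
```

Under these assumptions the identical cup reading yields two different elapsed times, because the quantity doing the work is the difference from the surroundings rather than any property of the cup taken in isolation.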

Bits don't really work well as "fundamental building blocks," because they have to be defined in terms of some sort of relation, some potential for measurement to vary. IT does seem to work quite well with a process metaphysics though, e.g. pancomputationalism. But what about with semiotics? I have had a tough time figuring out this one. -

Count Timothy von Icarus

4.3k

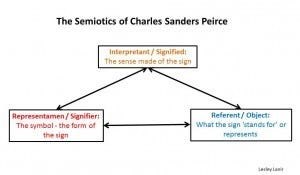

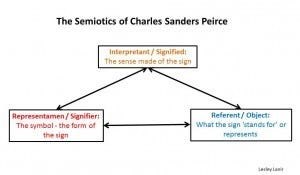

At a superficial level, it's easy to see how in the Shannon-Weaver model in IT the information source could be the object and the destination the interpretant. -

apokrisis

7.8k

Bits don't really work well as "fundamental building blocks," because they have to be defined in terms of some sort of relation, some potential for measurement to vary. — Count Timothy von Icarus

Information theory really is anti-semiotic in its impact. Interpretance is left hanging as messages are reduced to the statistical properties of bit strings. They are a way to count differences rather than Bateson’s differences that make a difference.

It becomes a theory of syntactical efficiency rather than semantic effectiveness. An optimisation of redundancy so signal can be maximised and noise minimised.

Which is fine as efficient syntax is a necessary basis for a machinery of semiosis. But the bigger question of how bit strings get their semantics is left swinging.
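A minimal sketch of that point, assuming the simplest possible measure: Shannon entropy estimated from single-symbol frequencies. The helper `shannon_entropy` and the example sentence are illustrative choices, not anything from the thread. A sentence and a scramble of its own characters come out statistically identical at this level, even though only one of them says anything.

```python
import math
import random
from collections import Counter


def shannon_entropy(message: str) -> float:
    """Entropy in bits per symbol, estimated from symbol frequencies alone."""
    counts = Counter(message)
    total = len(message)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


sentence = "the cup is still hot"

# Rearrange the same characters; a fixed seed keeps the run repeatable.
rng = random.Random(0)
scrambled = "".join(rng.sample(sentence, len(sentence)))

print(repr(sentence), shannon_entropy(sentence))
print(repr(scrambled), shannon_entropy(scrambled))
```

Both lines print the same entropy (up to floating-point rounding), since the measure only counts how often each symbol occurs. Models that look at symbol order would tell the two strings apart statistically, but that is still a fact about syntax, not about what either string means to anyone.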

Computation seems to confuse the issue even more. The program runs to its deterministic conclusion. One bit string is transformed into some other bit string. And the only way this carries any sense is in the human minds that wrote the programs, selected the data and understood the results as serving some function or purpose.

In the 1990s, biosemiosis took the view that the missing ingredient is that an information bit is really an entropy regulating switch. The logic of yes and no, or 1 and 0, is also the flicking on and off of some entropically useful state. Information is negentropy. Every time a ribosome adds another amino acid to a growing protein chain, it is turning genetic information into metabolic negentropy. It is making an enzyme that is a molecule with a message to impart. Start making this or stop making that.

So information theory does sharpen things by highlighting the syntactical machinery - the bank of switches - that must be in place to be able to construct messages that have interpretations. There is the thing of a crisp mechanical interface between an organism’s informational models and the world of entropy flows it seeks to predict and regulate.

But in science more generally, information theory serves as a way to count entropy as missing information or uncertainty. As degrees of freedom or atoms of form. It is part of a pincer movement that allows order to be measured in a fundamental way as dichotomous to disorder.

Biology is biosemiotic as it is about messages with dissipative meanings. Thoughts about actions and their useful consequences.

But physics is pansemiotic as it becomes just about the patterns of dissipation. Measuring order in terms of disorder and disorder in terms of order. A way to describe statistical equilibrium states and so departures from those states. -

Count Timothy von Icarus

4.3k

Information theory really is anti-semiotic in its impact. Interpretance is left hanging as messages are reduced to the statistical properties of bit strings. They are a way to count differences rather than Bateson’s differences that make a difference. — apokrisis

I think that's a fair account of how IT is generally interpreted. Popular explanations of the extreme usefulness of information theory often try to explain everything in terms of "limits on knowledge" for some agent/physical system. That is, information is useful as a concept but lacks "mind-independent existence." Perspective isn't "fully real" in a sense, and the true distribution for any variable vis-à-vis any physical system receiving a signal is just the very interactions that actually occur. The appearance of probability on the scene is just an artifact of our limitations, something that doesn't exist in the pristine "view from nowhere."

But it does seem that some views leave the door open for a more radical reinterpretation of the dominant mechanistic, dyadic paradigm. Relational quantum mechanics for instance looks at QM through the lens of Nagarjuna's concept of relations, not things, being primary. And there are a few other examples I can think of where a sort of "perspective" exists in physical interactions "all the way down."

I've also seen semiotic accounts of computation, but these have always focused on the computing devices designed by humans, rather than biological or physical computation writ large. Because computation can also be described as communication, it seems like there is an opening here for a triadic account, although I've yet to find one.

In his collected papers, Peirce remarks at one point about his despair over getting people to realize that the "interpretant" need not be an "interpreter." I've seen some explanations of information that try to get at this same sort of idea (e.g. Scott Muller's Asymmetry: The Foundation of Information). The core insight is that "which differences make a difference" is contextual. When one thing is linked to something else via mediation, the nature of the interpretant matters. I'll agree that this is more obvious when it comes to organisms and their specific umwelts, but it seems true "all the way down" as well.

But I have searched in vain so far for anything that attempts to really fit these together. One big thing that semiotics would help overcome is the nominalism implicit in seeing information just in terms of numbers and probability. The idea that certain signals play a role in making the recipient think of one thing over anything else gets washed out when information appears as sheer probability. -

Count Timothy von Icarus

4.3k

The hyper-nominalism and relativism of some post-structuralists might be an example of what it would be good to avoid. These theories often invoke semiotics, but it's Saussure's brand, which ends up leaving the sign and its interpretation floating free of the signified.

Information can be seen as ultimately arbitrary only when it is divorced from its source however. E.g., a random text generator might produce all sorts of texts for us, but ultimately all it reveals to us is information about the randomization process used to output the text. Even if a random text generator were to spit out a plausible explanation of a cure for cancer, we should have no reason to think it is telling us anything useful, since there are very many more ways to fail to describe a proper medical treatment than ways to describe a proper one.

Hence, the "information source" cannot be divorced from the message if we want to understand the message (nor can we ignore the recipient). But often it seems the source and recipient are ignored, abstracted away. I think one assumption at work here is the idea that processes can always be reduced to understand them better, that facts about complex things can always be explained in terms of "parts," and that parts are always more fundamental. -

apokrisis

7.8k

I've also seen semiotic accounts of computation, but this has always focused on the computing devices designed by humans, rather than biological or physical computation writ large. Because computation can also be described as communication, it seems like there is an opening here for a triadic account, although I've yet to find one. — Count Timothy von Icarus

My view is that computation always has been and always will be a technological extension of human semiosis. It isn't its own form of life or mind. AI is a pipe dream. Information technology exists to extend our reach over entropic nature, just like any other mechanical contrivance we tack on to our human enterprise as we turn our entire planet into an anthrosphere shaped in our own image.

So at the level of giving computation meaning, it only can have meaning in the ways it amplifies our human ability to "harvest the biosphere" as Smil puts it. To extend the business of controlling the wider world's entropic flows.

That is the pragmatics. Howard Pattee's Artificial Life Needs a Real Epistemology is good on this. Semiosis doesn't exist unless the meaningful relation is the one of an informational model plunged into the business of regulating its entropic world.

A computer is just a machine – a rack of switches – plugged into a wall socket. It has no need to care about the source of energy that flips its logic circuits. Humans operate its metabolism, just as humans also find whatever meaning is to be had in its circuit states. The computer is no more alive or mindful than an artificial leg, flight of stairs, or chainsaw.

An irony of the current AI hype boom is that the good reason for the promotion of large language models is that they demand huge compute power. There is money to be made in building ever more energy-expensive cloud computing services and their vast data centres.

The tech giants were pushing "big data" as the business world revolution a few years ago, just as social media was supposed to be the next great GDP productivity booster. That sold a lot of data centres, but corporations eventually realised big data was just another productivity con.

And here we go again with AI.

So yes there is a triadic account as you do need a bank of switches standing between the informational model of the world and the entropy that is what sustains the organism with its model.

But this is the biosemiotic model of semiosis. The one Salthe, Pattee and others moved towards after being influenced by Peirce's larger corpus finally beginning to hit the public space in the 1980s and 1990s.

The interpretant would be some neural or genetic structure of habit (or linguistic or numeric habit in social and technological humans). The referent would be some negentropic action in the world. The signifier becomes the interface between model and mind – the rack of switches which encode a set of off-on connections between the two sides of this biosemiotic equation.

Or in Pattee's terms, the molecule that is also the message. The enzyme that is acting as the on-off switch of some metabolic process. The receptor cell or motor neuron that is the on-off switch in terms of a sensory input or motor output – the transduction step needed to turn thought into action, or action into thought. -

Treatid

54

I'm a neophyte to semiotics.

Initial reaction on watching the video:

- This mostly seems sensible to me.

- I'm uncomfortable with the usage of "things" and "objects"/"objective".

- This seems to wildly overcomplicate things.

- I'm deeply uncomfortable with the idea that humans are qualitatively different, not just quantitatively different. (I think this is connected with ideas around emergence).

Cognition is uniquely human

The idea that humans have a unique ability to understand signs is a direct callback to the divinity of humanity.

It implies that humans have access to a special mechanism that isn't part of the rest of creation.

To believe in this version of semiotics, I am tasked with believing that God gave humanity access to mechanisms that are not available to mere mortal animals.

Even with a more mundane "emergent behaviours" justification, this seems to me to exhibit characteristics of trying to fit the evidence to the prejudices.

Things & Objects

A thing is its relationships.

In principle there is nothing wrong with referring to "thing" when we mean "the relationships of thing". Not least because "thing" is always "the relationships of thing". "Thing" without relationships is moot, irrelevant.

At a surface level - the interlocutors are referring to networks of relationships as things and I was totally onboard...

However, there appears to be an assumption that it is possible to define the terminology. To specify what semiotics is. That the notion of signs is a fixed, static target.

I can understand the urge to define the subject. The presumption of definitions is near universal. But words are their relationships - When semiotics fails to be consistent in this regard it fails at its primary purpose.

-------------------------------------------------------------------------------------------------

State of play

(Some) people can see that descriptions are relational.

Process philosophy and semiotics are approaches to knowledge based on this observation.

Even knowing that all definitions are relational, and that relationships change; these subjects are still assuming the existence of fixed definitions.

Fixed definitions

We know that we can't define X. We can only describe the relationships of X, not X itself.

Even so, the idea of a fixed X persists. Semiotics claims to be an X - A static subject matter - a fixed point of knowledge.

The first problem of semiotics is defining what semiotics (and all related terminology) is.

In trying to define itself, semiotics contradicts its premise.

Interlude

I'm not sure how hard I need to sell this. Your general/academic knowledge of philosophy is far greater than mine and you've been investigating this subject matter for some time.

You've demonstrated that you have the individual pieces.

On the other hand, I'm describing a universe where nothing can be defined and meaning/knowledge/understanding are in permanent flux.

This is territory diametrically opposed to The Law of Identity.

In a world where the meaning of a sentence changes while you are reading it; logic doesn't apply.

There is no way to start from an assumption of a static objective universe and arrive at a dynamic universe of changing relationships.

We have to go back to first principles, disposing of all the assumptions predicated on The Law of Identity. Like, for example, the idea that mathematics has some fixed, objective reality. Or that semiotics and process philosophy can be defined.

Humanity exists

No-one has ever described a single instance of a fixed, unambiguous definition independent of relationships.

Our concept of X has always been "the relationships of X".

Semiotics cannot define what semiotics is except through descriptions of relationships. As relationships change, semiotics changes.

We already operate without fixed definitions.

Denying the possibility of fixed knowledge isn't nihilistic. We never had static meaning. Its absence isn't going to cause modern society to collapse.

Mathematics never defined what axioms are, let alone a single axiom. Logic cannot specify the fixed initial state from which deductions progress. Every definition in Quantum Mechanics is circular.

And still we have society and communication.

Rebuilding from first principles

Once we stop trying to do the impossible, everything else (the possible) is trivial in comparison.

We can describe relationships (with respect to other relationships).

That is it. That is our foundation; our First principle.

Any and all effort invested into describing relationships is productive.

Effort put into defining semiotics is wasted - it is an impossible task.

The difficult task is to stop attempting the impossible. "Define your Terms!" has been a defining mantra of modern thought. Possible or not - the habit of trying to define terms runs deep, to the point that subjects specifically addressing the relational nature of meaning are still reflexively trying to define their terms.

You already know how to describe relationships. The trick is not to conflate descriptions with definitions. Descriptions are possible. Definitions (cf. The Law of Identity) are not possible. -

wonderer1

2.4k

The idea that humans have a unique ability to understand signs is a direct callback to the divinity of humanity.

It implies that humans have access to a special mechanism that isn't part of the rest of creation.

To believe in this version of semiotics, I am tasked with believing that God gave humanity access to mechanisms that are not available to mere mortal animals.

Even with a more mundane "emergent behaviours" justification, this seems to me to exhibit characteristics of trying to fit the evidence to the prejudices. — Treatid

It seems to me one can dispense with theism, recognize that More is Different and that humans have more cortical neurons than any other species, and thereby have a basis for recognizing a uniqueness to humans. -

Count Timothy von Icarus

4.3k

The idea that humans have a unique ability to understand signs is a direct callback to the divinity of humanity.

It implies that humans have access to a special mechanism that isn't part of the rest of creation.

To believe in this version of semiotics, I am tasked with believing that God gave humanity access to mechanisms that are not available to mere mortal animals. — Treatid

I am not sure where you got that from. The conversation has several examples of animals making use of signs. The interpretant need not be an "interpreter." We could consider here how the non-living photoreceptors in a camera might fill the role of interpretant (or the distinction of virtual signs or intentions in the media). -

Gnomon

4.3k

Would you prefer to believe that Random Evolution "gave" some higher animals the "mechanism" of Reasoning? For philosophers, rationality is not a material machine, but the cognitive function of a complex self-aware neural network that is able to infer (to abstract) a bare-bones logical structure (invisible inter-relationships) in natural systems*1. Other animals may have some similar abilities, but for those of us who don't speak animal languages, about all we can do is think in terms of analogies & metaphors drawn from human experience.

It implies that humans have access to a special mechanism that isn't part of the rest of creation.

To believe in this version of semiotics, I am tasked with believing that God gave humanity access to mechanisms that are not available to mere mortal animals. — Treatid

Peirce's 19th century theory of Semiotics is very technical and over my head. Which "version of semiotics" are you referring to : Peirce's abstruse primitive discussion, or the more modern assessment which includes a century of evolving Information Theory*2? I suppose the OP is talking about the latter.

Besides, Peirce's notion of God*3 was probably somewhat cryptic and definitely unorthodox & non-traditional. So, a philosopher might as well substitute "Nature" for "God" as the Giver of a specialized mechanism for abstracting & symbolizing the logical structure of world systems. Would that be easier to "believe", in the context of this thread? Can you imagine Nature as a "living spontaneity"? :smile:

*1. Reason, in philosophy, is the ability to form and operate upon concepts in abstraction, in accordance with rationality and logic.

https://www.newworldencyclopedia.org/entry/Reason

*2. Information and Semiotics :

Information is a vague and elusive concept, whereas the technological concepts are relatively easy to grasp. Semiotics solves this problem by using the concept of a sign as a starting point for a rigorous treatment for some rather woolly notions surrounding the concept of information.

https://ris.utwente.nl/ws/portalfiles/portal/5383733/101.pdf

*3. C.S. Peirce's "Neglected Argument" for God :

"The endless variety in the world has not been created by law. It is not of the nature of uniformity to originate variation, nor of law to beget circumstance. When we gaze upon the multifariousness of nature we are looking straight into the face of a "living spontaneity." . . .

" … there is a reason, an interpretation, a logic in the course of scientific advance, and this indisputably proves to him who has perceptions of rational or significant relations, that man's mind must have been attuned to the truth of things in order to discover what he has discovered. It is the very bedrock of logical truth."

https://www.icr.org/article/cs-peirces-neglected-argument/ -

wonderer1

2.4k

For philosophers, rationality is not a material machine, but the cognitive function of a complex self-aware neural network that is able to infer (to abstract) a bare-bones logical structure (invisible inter-relationships) in natural systems*1. — Gnomon

I think a philosopher might be open to facing the truth of the nature of our minds, whatever that might be.

It sounds like you are saying that a philosopher is someone with a closed mind on the subject. Is that about right? -

Joshs

6.7k

It seems to me one can dispense with theism, recognize that More is Different and that humans have more cortical neurons than any other species, and thereby have a basis for recognizing a uniqueness to humans. — wonderer1

Ah, but the devil is in the details. To what extent, if any, does this difference in degree between the neurons of humans and other animals translate into a difference in kind? Think of all of the historical assumptions concerning brain-based qualitative differences between human and animal behavioral capabilities that have turned out to be wrong-headed:

Only humans have language

Only humans have heritable culture

Only humans have cognition

Only humans have emotion

Only humans use tools

Perhaps more isn't so different after all. -

flannel jesus

2.9k

For example, a hot cup of coffee might be a clue at a murder scene. The cup is still hot, so we know someone made it recently. However, knowing "the precise location and velocity of every particle in the cup" would not give us access to this "clue." The information that the cup of coffee was made recently lies in the variance between its temperature and the ambient environment. Likewise, if it was iced coffee, and the ice had yet to melt, we could also tell that it could not have been there long, although this information cannot be had from taking the ice cubes in isolation. — Count Timothy von Icarus

Seems to me like the precise location and momentum of every particle (quantum indeterminacy notwithstanding) -- not just of the cup, but of the environment too -- would have implicit discoverable facts in it, like the variance between its temperature and the ambient environment, or whether the ice was melted. -

Gnomon

4.3k

Wow! Where did you get that off-the-wall idea from an assertion about the relationship between Information and Logic? What, then, is the truth about the true "nature of our minds"? Are you saying that the harsh truth is that the Mind is nothing more than a Brain? Or that Logic is objective and empirical?

I think a philosopher might be open to facing the truth of the nature of our minds, whatever that might be.

It sounds like you are saying that a philosopher is someone with a closed mind on the subject. Is that about right? — wonderer1

Is the ability to discern the invisible logical structure of ideas & events & brains, a sign of a "closed" mind? Or is the ability to see the material constituents of things a sign of an "open brain"? Please clarify your veiled put-down. :smile:

In the Physicalism belief system, Metaphysics (i.e. Philosophy) is meaningless

-

Count Timothy von Icarus

4.3k

If you include the entire room you would have the temperature difference. Complete knowledge of the room alone would not give you the cup's status as a clue in a murder case though. In fact, to understand that sort of relationship and all of its connotations would seem to require expanding your phase space map to an extremely wide temporal-spatial region.

The same is true for a fact like "this cup was produced by a Chinese company." This fact might be written on the cup, but the knowledge that this is what the writing actually says cannot be determined from knowledge of the cup alone, and likely not the room alone either.

Likewise, knowledge of the precise location/velocity of every particle in a human brain isn't going to let you "read thoughts," or anything of the sort. You need to correlate activity "inside" with what is going on "outside." That sort of fine grained knowledge wouldn't even give you a good idea what's going to happen in the system (brain) next since it's constantly receiving inputs from outside.

But "building block" reductionism has a bad habit of importing this sort of knowledge into its assumptions about what can be known about things sans any context. -

apokrisis

7.8k

recognize that More is Different and that humans have more cortical neurons than any other species, and thereby have a basis for recognizing a uniqueness to humans. — wonderer1

The human difference is we have language on top of neurobiology. And the critical evolutionary step was not brain size but vocal cords. We developed a throat and tongue that could chop noise up into a digitised string of vocal signs. Only humans have the motor machinery to be articulate and syntactical.

Exactly when Homo gained this new semiotic capacity will always be controversial. But the evidence says probably only with Homo sapiens about 100,000 years ago. And the software of a complex grammar to take full advantage of the vocal tract may have come as late as 40,000 years ago, judging by the very sudden uptick in art and symbolism. -

flannel jesus

2.9k

If you include the entire room you would have the temperature difference — Count Timothy von Icarus

Yup. So that information isn't in principle absent from knowing the position and velocity of all the relevant stuff.

In fact, to understand that sort of relationship and all of its connotations would seem to require expanding your phase space map to an extremely wide temporal-spatial region. — Count Timothy von Icarus

Only as wide as the effective stuff that makes it meaningful in the first place - which is quite wide indeed -

apokrisis

7.8k

Perhaps more isn't so different after all. — Joshs

Yep. Different was more in the case of Homo sapiens. :smile:

A step up the semiotic ladder. The brains of Neanderthals were bigger (simply because of their bigger frames). But their vocal tracts were not redesigned, to the extent that can be judged.

There had to be brain reorganisation too. A new level of top down motor control over the vocal cords would be part of the step to articulate speech, as might be a tuning of the auditory path to be able to hear rapid syllable strings as sentences of words.

So the human story is one of a truly historic leap. The planet had only seen semiosis at the level of genes and neurons. Now it was seeing it in terms of words, and after that, numbers. -

apokrisis

7.8kNot necessarily. Neanderthals had language, and they split from us 500k years ago. — Lionino

So you know they had grammatical speech? What evidence are you relying on?

I know this is a topic that folk get emotionally invested in. It seems unfair for Homo sapiens to draw a line with our biological cousins. Especially as we mixed genes with anyone who happened to be around.

But the evidence advanced for Neanderthals as linguistic creatures often goes away over time. For example, a finding of Neanderthals with advanced tool culture becomes more plausibly explained by sapiens making a couple of brief unsuccessful first forays into Europe before a third is suddenly explosively successful and Neanderthals are gone overnight.

It doesn’t matter to the vocal tract argument whether Neanderthals had it or not. But the evidence leans on the side of not. -

apokrisis

7.8kWhat do you mean by "grammatical speech"? — Lionino

Speech with a fully modern syntactic structure.

I take it from what I have read over the years. — Lionino

On the other side of the extreme, the theories that suggest speech showed up 50k years ago are absurd as soon as we look into palaeoanthropology. — Lionino

I will treat this as opinion until you make a better argument. This is a topic I've studied and so listened to a great many opinions over the years.

The story of the human semiotic transition is subtle. Sure all hominids could make expressive social noises as a proto-speech. Even chimps can grunt and gesture in meaningful fashion that directs attention and coordinates social interactions. A hand can be held out propped by the other hand to beg in a symbolising fashion.

But the way to think about the great difference that the abstracting power of a fully syntactical language made to the mentality of Homo sapiens lies in the psychological shift from band to tribe.

The evidence of how Erectus, Neanderthals and Denisovans lived is that they were small family bands that hunted and foraged. They had that same social outlook of apes in general as they lacked the tool to structure their social lives more complexly.

But proper speech was a literal phase transition. Homo sap could look across the same foraging landscape and read it as a history and genealogy. The land was alive with social meaning and ancestral structure. The tribal mentality so famous in any anthropological study.

It is hard to imagine ourselves restricted to just the mindset of a band when we have only experienced life as tribal. However this is the way to understand the essence of the great transformation in pragmatic terms.

Theories of the evolution of the human mind are bogged down by the very Enlightenment-centric view of what it is to be human. Rationality triumphing over the irrational. So we look for evidence of self-conscious human intelligence in the tool kits of the paleo-anthropological record. Reason seems already fully formed if homo could hunt in bands and cook its food even from a million years ago, all without a vocal tract and a brain half the size.

But if we want to get at the real difference, it is that peculiar tribal mindset that we humans could have because speech allowed our world to seem itself a lived extension of our own selves. Every creek or hillock came with a story that was "about us" as the people of this place. We had our enemies and friends in those other bands we might expect to encounter. We could know whether to expect a pitched battle or a peace-making trading ritual.

The essentials of being civilised in the Enlightenment sense were all there, but as a magic of animism cast over the forager's world. The landscape itself was alive in every respect through our invention of a habit of socialising narration. We talked the terrain to life and lived within the structure – the Umwelt – that this created for us. Nothing we could see didn't come freighted with a tribal meaning.

At that point – around 40,000 years ago, after sapiens as an "out of Africa coastal foraging package" had made its way up through the Levant – the Neanderthals and Denisovans stood no chance. Already small in number, they melted into history in a few thousand years.

The animistic mentality was the Rubicon that Homo sapiens crossed. A vocal tract, and the articulate speech that this enabled, were the steps that sparked the ultimate psycho-social transformation. -

wonderer1

2.4kThe human difference is we have language on top of neurobiology. — apokrisis

It would be kind of silly to think there is only one difference.

And the critical evolutionary step was not brain size but vocal cords. — apokrisis

Considering all the bird species able to mimic human speech, it doesn't seem as if you have thought this through.

I'm fairly confident that you aren't in a position to prove that the mutation leading to ARHGAP11B wasn't a critical step on the path leading to human linguistic capabilities.

ARHGAP11B is a human-specific gene that amplifies basal progenitors, controls neural progenitor proliferation, and contributes to neocortex folding. It is capable of causing neocortex folding in mice. This likely reflects a role for ARHGAP11B in development and evolutionary expansion of the human neocortex, a conclusion consistent with the finding that the gene duplication that created ARHGAP11B occurred on the human lineage after the divergence from the chimpanzee lineage but before the divergence from Neanderthals. -

apokrisis

7.8kIt would be kind of silly to think there is only one difference. — wonderer1

It is silly of you to say that until you can counter that argument in proper fashion.

As is usual in any field of inquiry, we can fruitfully organise the debate into its polar opposites. Let one side defend the "many differences" in the usual graded evolution way, while the other side defends the saltatory jump as the "one critical difference" as the contrary.

To just jump in with "that's silly" is silly.

Considering all the bird species able to mimic human speech, it doesn't seem as if you have thought this through. — wonderer1

Yeah. I mean what can one say? You've reminded me of being back in the lab where we slowed down bird calls so as to discover the structure that is just too rapid for a human ear to decode. And similar demonstrations of human speech slowed down to show why computer speech comprehension stumbled on the syllabic slurring that humans don't even know they are doing.

Do you know anything about any of this?

I'm fairly confident that you aren't in a position to prove that the mutation leading to ARHGAP11B wasn't a critical step on the path leading to human linguistic capabilities. — wonderer1

I can see you are fairly confident about your ability to leap into a matter where you begin clueless and likely have no interest in learning otherwise.

you aren't in a position to prove that the mutation leading to ARHGAP11B wasn't a critical step on the path leading to human linguistic capabilities. — wonderer1

I can certainly have a good laugh at your foolishness. A gene for cortex folding ain't a gene for grammar. -

Jaded Scholar

40

Speech with a fully modern syntactic structure. — apokrisis

As soon as I read this, I also wanted to ask:

Then I ask you what "fully modern syntactic structure" means. — Lionino

I'm not trying to pile onto one side of a disagreement, and I can see that you've both learned a great deal on the subject of human evolutionary development, but I think ↪Lionino's arguments on the differences/similarities between homo sapiens and neanderthalensis make a lot more sense than ↪apokrisis's (which is to say, they agree most with my own preconceptions).

But in seriousness, ↪apokrisis's arguments kind of rubbed me the wrong way from the outset, because they contained a kind of derision for the notion of homo sapiens not being superior to non-sapiens, and constantly supported the conclusion that homo sapiens are indeed innately superior. And when your position exactly mirrors the culturally dominant narrative, I think that's when you should be extra careful to analyse why you believe it. One of the most ingrained narratives in modern human culture is the inherent superiority of humans, which is a conclusion that has been dominant across countless generations despite the logic supporting that conclusion being torn down countless times, only to be replaced by some other institutionally respected logic that just happens to keep that conclusion in its place of dominance.

To get into detail, the main factual inconsistencies I've noticed have been in (minor) points like:

And the software of a complex grammar to take full advantage of the vocal tract may have come as late as 40,000 years ago judging by the very sudden uptick in art and symbolism. — apokrisis

But their vocal tracts not redesigned to the extent that can be judged. — apokrisis

Apologies if you were intending to use "vocal tract" as a byword for "speech apparatus", but if you were not, then these comments reflect outdated and refuted stances on the importance of the vocal tract for complex language [1], and more current research has demonstrated that Neanderthals absolutely did possess traits that actually are necessary for complex language like enhanced respiratory control [2] and all of the same orofacial muscle control that is necessary for complex language in humans (controversially dubbed "the grammar gene") [3]. Moreover, the most significant requirement for complex language (though not the most advanced requirement) is the positioning of our larynx, which is primarily due to walking upright, and thus, was shared with all other hominids [citation needed].

[1] http://linguistics.berkeley.edu/~ohala/papers/lowered_larynx.pdf

[2] https://www.sciencedirect.com/science/article/pii/S0960982207020659

[3] https://doi.org/10.1002%2Fevan.20032

From what I have learned (both in the past and in my brief literature scan just now), the main objections to the idea that other hominids probably had just as advanced linguistic competence as homo sapiens have been supported by interpolations of neurology (which is inconclusive at best) from skull shapes and artefacts, and even (most embarrassingly) from interpolated dating of the origin of language using currently-existing lingual diversity and assuming that isn't affected by any difference between the (typically) colonial expansion patterns we have used since the agricultural revolution and the nomadic expansion patterns we typically used at every point before ~10,000 BCE (and even then, contemporary conclusions support figures much older than 40kya [4]). The point is that all of the evidence supporting complex language as emerging with/from homo sapiens is approximate and indirect, which is a stark contrast to the evidence in favour of other hominids communicating with equal complexity, which actually includes direct evidence like that cited above.

[4] https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3338724/

To shift to less concretely factual, and more relational inconsistencies, I also think it's a bit naive to hold arguments like:

At that point – around 40,000 years ago, after sapiens as an "out of Africa coastal foraging package" had made its way up through the Levant – the Neanderthals and Denisovans stood no chance. Already small in number, they melted into history in a few thousand years. — apokrisis

Firstly, I'm pretty sure that the 70kya and 40kya periods are times when (likely due to climate change, as you did mention for those periods, to give credit where due) genetic studies suggest that homo sapiens dropped to drastically low numbers, so the small numbers of Neanderthals and Denisovans really don't support your point, especially since they may actually have been larger than the fleeing Sapiens.

But either way, the accelerated expansion of Sapiens throughout all nearby regions in that broader time period, and their continued expansion thereafter is associated with the extinction of vast numbers of other species, like all megafauna, most notably. I want to tie in the Uncanny Valley here, as a phenomenon that I found especially fascinating when I learned that it is practically unique to humans (I think there was one single study showing that macaques might have it too). It's possible, maybe even likely, that other hominids had the same trait, but regardless, isn't it interesting that we have an instinctive negative reaction towards anything that looks almost like us, but not quite, especially given our incredibly recent history where, for millions of years, we evolved alongside creatures that literally were almost like us, but not quite? Maybe we were the only hominid that survived because we had more advanced language or tools or whatever, but given the pattern of extinctions that are conclusively tied to our expansion, and our hard-coded repulsion for anything almost-but-not-quite human, I think an evidence-based mindset supports the idea that we are the lone hominid survivors because we were probably the most belligerent creatures around. Obviously, this is not proof, but it is a much better supported hypothesis than the one that we were just better at abstraction and conceptualisation (which I also concede is not a mutually exclusive hypothesis).

Lastly, I want to tie the above points together by noting that the physical evidence you do have on your side, which I think is mostly visual art and ritualistic artefacts - what I think you called a symbolic explosion or something like that - is extremely intellectually irresponsible to use as justification for some narrative like a signification of human development that surpassed the other hominids, and argue that some unknowable internal transition just happened, and then led to us thinking differently. It makes much more sense to consider that maybe, if a highly linguistically developed species has recently migrated to regions populated by other highly linguistically developed species, they might suddenly be faced with the fact that neither of you can understand the complex alien language of the other species, and maybe this naturally leads to the idea that there's a whole lot of benefit in exploring other kinds of communication? And a whole lot of benefit in creating markers and rituals that simplify both intra-species cohesion and inter-species differentiation?

Which is to say that the development of these things by homo sapiens may well be directly associated with our rise to dominance, which is completely in line with your position. But even if that happens to be true, I think you are doing a great disservice to how clearly you see humanity, and reality itself, if you let yourself be comfortable attributing this to something innate about homo sapiens, instead of something much, much more circumstantial, that we are simply lucky (or belligerent) enough to be the beneficiaries of. -

apokrisis

7.8kBut in seriousness, ↪apokrisis's arguments kind of rubbed me the wrong way from the outset, because they contained a kind of derision for the notion of homo sapiens not being superior to non-sapiens — Jaded Scholar

So you project some woke position on to a factual debate? Sounds legit. I shouldn't be offended by your wild presumptions about who I am and what I think, should I?

But even if that happens to be true, I think you are doing a great disservice to how clearly you see humanity, and reality itself, if you let yourself be comfortable attributing this to something innate about homo sapiens, instead of something much, much more circumstantial, that we are simply lucky (or belligerent) enough to be the beneficiaries of. — Jaded Scholar

Jesus wept. This is so pathetic. -

apokrisis

7.8kGoing further, the phrase "fully modern syntax" ("syntactic structure" does not make sense, it is like saying wet water or dark black) doesn't seem to refer to anything. — Lionino

You could start by Googling Everett’s G1/G2/G3 classification of grammar complexity if you are truly interested.

It is relevant to the OP in that Everett follows Peirce in arguing for an evolution of language where indexes led to icons, and icons moved from signs that looked like the referents, to symbols where the relation was arbitrary.

(I mean, did you read that Mithen article you linked to? He just goes astray in thinking the icon/symbol step came before some more general neural reorganisation, which was as likely as not itself nothing more than an example of genetic drift, rather than anything that suddenly made sapiens rationally superior.)

Or Luuk and Luuk (2014) "The evolution of syntax: signs, concatenation and embedding" which argues like Everett that word chains become recursive.

The point is not particularly whether the Peircean developmental path is right, even if it is logical. The point is that people whose job it is are quite happy to think that syntactical structure must have evolved to arrive at its modern complexity. Out of simple beginnings, richness can grow.

(Is that woke and diverse enough for the thought police out there?)
