Comments

-

US Midterms

So, in the video Herp-a-durp stepped on another dude's head and that counts as a score, I get it. As for the connection to politics, is this how you decide which laws get passed in 'Murica? Party members step on each other's heads and those with the least brain damage get to make the laws? ...Plausible. -

Ukraine Crisis

You were criticising his method of argument and suggesting he's a dishonest interlocutor, which is ad hom, because you were attacking him personally rather than his argument.

(As per the basic definition:

Ad hominem means “against the man,” and this type of fallacy is sometimes called name calling or the personal attack fallacy. This type of fallacy occurs when someone attacks the person instead of attacking his or her argument.)

https://www.google.com/search?q=ad+hominem&oq=ad+hominem&aqs=chrome..69i57.2566j0j1&sourceid=chrome&ie=UTF-8

That's not to say you broke any rules. But he's right on that point.

...and then the irony of you trying to prove why your interpretation is correct by prompting that those non-native English speakers you argue with would "clearly understand" in the way you do if they had only understood the English language better. Almost kind of racist in a way of an Ad Hominem now is it? — Christoffer

His retort wasn't racist in any way (he's a non-native speaker himself far as I know and being a non-native speaker isn't a race anyhow).

Can some other mod please enlighten me on why Benkei is still a mod on this forum? It's like a judge who's breaking the law — Christoffer

If you think Benkei broke a rule, PM me with details of the rule he broke. I don't see any rules being broken by anyone here, certainly not racism or anything remotely of the sort. Finally, please use PMs or the Feedback category for complaints in future. Thanks. -

US Midterms

Leaving that aside, the guy seems to be a scumbag from just about every angle. If you do vote for him, for the love of Yahweh at least have the decency to lie about it afterwards. -

US Midterms

My sense of U.S. politics is it's kind of like WWE where your guy taking a beating / the other guy winning is always very painful. This is borne out by my (limited) experience on U.S. politics forums, which are dominated by "Fuck you, we're gonna kick your ass" type posturing, likely supercharged in recent years by Trumpism. Also, from a practical viewpoint, with the Senate in his hands, Biden can continue to nominate Dem judges to the lower courts and pretty much ignore the House which, with a wafer thin majority, will mostly consist of Republican factions eating each other alive and nothing getting done. That's a big win for Dems. So, maybe at the margins wavering Republicans may have not bothered voting out of complacency, but wouldn't the type that care a lot about having a red Supreme Court also probably be team players that are going to vote pro-red / anti-blue every chance they get both to get the boot into the other side and due to hot-button issues like abortion etc? Like, if you're cheering that abortion rights have been curtailed by the SC, you really don't want a pro-choicer representing you at local level, correct?

All this is to say that imo the election result wasn't so much about your average Republican or Dem voters but independents who were put off by the raft of shitty Republican candidates Trump successfully pushed for and thought the Dems the lesser of two evils, i.e. moderates that would normally lean Republican in mid-terms set against a backdrop of serious economic uncertainty and an unpopular President but were given reason not to this time. And if that's the case, the absolute last red ticket you want is Orange Man redux, especially seeing as for some unfathomable reason, the equally awful DeSantis seems acceptable to most Americans and would probably have an excellent chance against sleepy Joe (who seems intent on ignoring his curtain call). So, the danger here is you hand Biden a second term and the House back to the Dems without them having to do anything but sit back and watch Trump implode. Maybe a really bad recession saves you, but that's your only Hail Mary.

Are you sad yet? I'm really trying to make you sad here... :halo: -

Does something make no sense because we don't agree, or do we not agree because it makes no sense.

Deleted it for low quality. You don't have absolute freedom of speech in a moderated forum. Anyhow, the above seems to be an edited version but seeing as you reposted without asking, I'm closing this. Please don't repost deleted discussions in future. If you can't accept moderation, you're better off somewhere else.

-

Currently Reading

The Science of Storytelling - Will Storr

Excellent. For its general theory of how human minds operate, for its exposition of effective narrative and for how well written it is. -

Brazil Election

Putting it succinctly, Bolsonaro is human garbage and Brazil has rightly dumped him. They do seem ill-served by their leaders on all sides though. -

Brazil Election

your politicians are destroying your economies, principles, values and liberties exactly as the left has tried to do here. — Gus Lamarch

Sorry, I'm afraid if you'd like to give your opinion on our internal political affairs you'll have to learn to read the languages of all the countries we come from first. -

Merging Pessimism Threads

OK, so we changed the name to avoid the impression we are denigrating the topic. Which was never our intention. However, we will continue to expect the conversation to be limited to that thread until further notice. And if you wish to remain a member @schopenhauer1, please do not give us further reason to suspect you are evangelising.

-

Merging Pessimism Threads

I just think titling the thread using negative language is asking for criticism and concerns of biases among the mods. — Pinprick

Fair point. -

Merging Pessimism Threads

Stapling means your existing super account automatically follows you when you change jobs. — universeness

Sounds like the plot of a postmodern horror movie. :lol: -

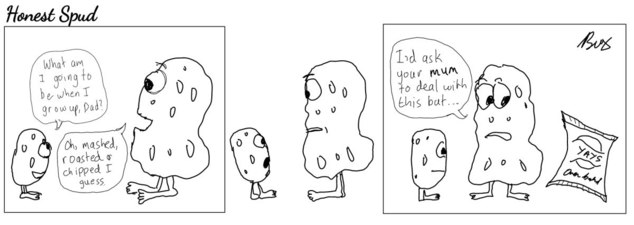

Merging Pessimism Threads

Can you make me a cartoon with a breast bearing fish with its hair straight up? Maybe that will clarify things for me. — Hanover

Must be a reflection of my slightly deranged state of mind that I'm seriously considering that.

It's about 3 or 4 of them, mostly. But, I mean, what's the point? Like, you want to depress everybody? Read the news. — Manuel

The topic is philosophically established and acceptable but the posting behaviour pushes the boundaries of the guidelines to say the least.


-

Merging Pessimism Threads

Gave my explanation that it was primarily about the poster, not the topic. That was ignored in favour of more complaints about suppression of the topic. That's a little annoying but no biggie. Carry on. -

Merging Pessimism Threads

Next time maybe we'll just ban @schopenhauer1 instead of trying to be nice and just control his postings.

Thanks for feedback anyhow, guys. :up: -

Merging Pessimism Threads

"Ideology" is another name for a philosophy you don't like. — T Clark

Post 200 threads in succession on any philosophical topic you like and I'll learn to hate it pretty quick, thanks. -

Merging Pessimism Threads

There is no philosophical justification for merging my thread with this one. The motivation is clearly just a brute dislike of philosophical discussion of antinatalism or any argument that might have antinatalist implications. — Bartricks

The motivation for all this wasn't philosophical or personal, it was moderation, i.e. to prevent @schopenhauer1 proliferating these discussions as part of what we saw as probable evangelism. Almost 200 threads by one poster on one issue was more than enough for us. The choice was to ban him or take some other measure. It's not antinatalism that's the central problem here, it's one poster's use of it and his attempts to circumvent the limitations we're trying to put on him. -

Merging Pessimism Threads

I understand your concern, but to put this in context, @schopenhauer1 has started close to 200 discussions, all or almost all on the same broad topic, five of which were simultaneously running on the front page even after we specifically indicated the Life Sucks thread was the place for such. The forum is not supposed to be used as a platform for spreading any poster's particular ideology. That's what personal blogs are for and that's why we have the evangelism guideline. As @Jamal alluded, if it were not for @schopenhauer1's generally thoughtful and engaging manner, he would already have been banned. -

Grammar Introduces Logic

:chin: Well, it beats your poetry, I guess. :cheer:

@ucarr

I appreciate your open and engaging investigation but I can't help but feel you are making stuff up on the fly as it suits you, redefining terms in your own idiosyncratic way and so on. Anyhow, I'll leave you guys to it and may jump back in later if you settle on a coherent set of definitions and some kind of recognizable theory. -

Grammar Introduces Logic

If you follow the point of contention, you'll better be able to determine whether such interjections are helpful/necessary/relevant. I'll try to be as precise as I can with my phrasing. -

Grammar Introduces Logic

What I'd really like here, I suppose, is to help avoid a descent into pseudo-science. Linguistics, like any other science, has certain principles that ought to be recognized. I've seen in similar threads before a temptation to try to treat discussions on language as if the science of linguistics didn't exist at all or was invented yesterday and everything's up for grabs. It wasn't and it's not, just as with Physics or Chemistry. I'm not saying @ucarr is doing that just that I've seen these discussions deteriorate before because so many people have a theory of language that's based more on intuition than study.

-

Grammar Introduces Logic

I can identify rocks and communicate their existence. Rocks are not a language either. So, I was clarifying what a language is and isn't, not saying that anything could not be put into language. And this is me being extremely charitable in interpreting your objection. -

Grammar Introduces Logic

The OP makes some leaps I would need to look more into. Just trying to clarify a few points from my own background in linguistics, so far. -

Grammar Introduces Logic

The sentence "come here" doesn't contain any preposition, yet signifies a spatio-temporal relation. — RussellA

Yes, and it can form a "complete thought" because it fulfils at minimum the necessary requirements of a clause, i.e. it contains a verb and everything necessary for the verb in its syntactical context (its complements). And a clause, whether acting singly as a sentence or doing so in conjunction with other clauses, forms the most important semantic building block of language. Here again, the verb is central and prepositions peripheral.

(Edited for clarity). -

Grammar Introduces Logic

Our views differ in terms of the quantum vs. the continuum. Baden says the boundary between linguistic and non-linguistic is quantum; I say it is continuum. — ucarr

Not exactly. Though language has specific attributes that help identify it, there is room for debate around some of those attributes, e.g. recursion. And if we are to take it that language evolved over time, we ought to make conceptual room for a theorised primitive proto-language. However, there is no serious consideration given in academic linguistics to incorporating crow behaviour or tea-making behaviour under even the broadest umbrella understanding of language. That doesn't mean some of your other ideas aren't pertinent, but you might be being a tad overambitious in the scope of your project here. -

Grammar Introduces Logic

What is language for if not conveying information ? — RussellA

Conveying information is a necessary but not sufficient condition for language. That should be obvious from what I wrote. Passing wind may convey information as may a million other non-linguistic events. Language is special and specially defined in comparison. -

Grammar Introduces Logic

The OP does remind me of a quote I really like by Irish philologist Richard Chenevix Trench.

"Grammar is the logic of speech, even as logic is the grammar of reason." -

Grammar Introduces Logic

Chinese, for example, has a different set of linguistic conventions for dealing with the prepositional context. "Dao wo zher lai" means "come to me", but does not actually contain any word which would translate directly as a preposition in English. — alan1000

Wouldn't surprise me. Prepositions in Irish are integrated with the subject. For example, "to me" is one word. Verbs are a much more central word class. -

Grammar Introduces Logic

However, birds, as well as other animals do have language, in the sense that their calls, postures and other behaviours do convey information to other birds and animals, such as location of predators and sources of food. — RussellA

That's animal communication not language. Conveying information is not a high enough bar for language. -

Grammar Introduces Logic

I think the preposition can be labeled as being a particular type of conjunction. It is the conjunction of (among other categories) space and time — ucarr

Category error and general confusion here. Conjunctions join phrases, clauses, words, and sentences. They are defined in syntactical not physical or temporal terms. Prepositions can be prepositions of time or space, and those are distinct categories. Both terms originated in classical grammar. For the purposes of your OP, a functional grammar, like SFL, might be more useful.

Language is a very particular form of skilled behaviour that, yes, is not uni-modal, but nevertheless has very specific properties that are well defined and understood and that distinguish it from other forms of skilled behaviour and non-linguistic communication. So, American Sign Language, for example, is a perfectly valid language, but me making a cup of tea or physically showing you how to do that, which is more analogous to your crow example, is not.

Some of the specific attributes that define language include:

1. Individual modifiable units

2. Negation

3. Question

4. Displacement (e.g. tense)

5. Hypotheticals and counterfactuals

6. Open endedness (novel utterances)

7. Stimulus freedom (open responses)

You can't make crow behaviour into individual units that can be reorganized to meet the criteria above. Not only is there no language there, there is almost nothing at all like a language. -

Liz Truss (All General Truss Discussions Here)

She resigned because, facing electoral annihilation, her party would have given her the boot otherwise. It's not all that difficult to get rid of a PM compared to a U.S. President. If you're in search of common decency, you are probably looking in the wrong place.

Baden


- © 2026 The Philosophy Forum