Comments

-

Welcome PF members!

Given the state of AI language processing today, if you can write code that actually understands posts well enough to identify the types of arguments/fallacies being made the majority of the time, you'll be wealthy beyond your dreams. — Paul

There has been a lot of growth in this space (because of funding and interest). See some of what is on GitHub.

Yes, if you can create what I'm talking about, secure the IP and run a business, you could probably turn it into good money. It takes a lot more time and effort than it sounds, however.

Let's just say that con artists have made it so that anyone trying to get funding for this kind of venture needs things I don't personally have, one of which is personal wealth and the other is the time that comes from that wealth.

It's not so much what is possible as what is probable. -

Infinites outside of math?

I hadn't watched that. It's an awesome series! I watched for like 20 minutes when I should have been working.

-

Infinites outside of math?

Yes of course. It's just like any other variable symbol.
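As a loose computing analogy (IEEE 754 floating point through Python's `math.inf`, which is a convention of computer arithmetic rather than a statement about the mathematics of infinity), here is a system that treats ∞ as a symbol with fixed rules for how it combines with other values, and returns NaN where no single answer exists:

```python
import math

inf = math.inf  # IEEE 754 positive infinity

# Combinations with a well-defined result: finite changes are absorbed.
print(inf + 1 == inf)                  # True
print(inf + 1 - 1 == inf * 1)          # True
print(inf * 2 == inf)                  # True
print(math.sqrt(inf * inf) == inf)     # True

# Combinations with no single answer come back as NaN ("not a number").
print(math.isnan(inf - inf))           # True
print(math.isnan(inf * 0))             # True
```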

(I tried BBCode math, MathML and TeXZilla, but this forum doesn't support any of them :-( )

No work

UTF-8 but no solve 'cause not MathML\sqrt(\infinity*\infinity)=\infinity*1

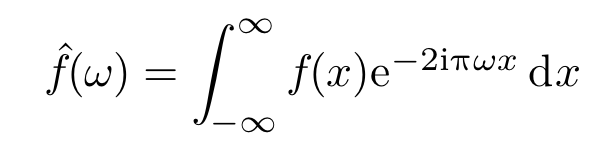

The Fourier Transform (signals math) - edit: this looks horrid. (appended what it should look like)∞+1= ∞+1-1= ∞*1

f(x)=∑n=−∞∞cne2πi(n/T)x=∑n=−∞∞f^(ξn)e2πiξnxΔξ
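The infinite sums in that formula can't be evaluated term by term, but you can truncate them and watch the partial sums settle. A minimal sketch, assuming a square wave of period T (+1 on the first half-period, -1 on the second) as the example f, since the post doesn't pick one; its coefficients work out to c_n = 2/(πin) for odd n and 0 otherwise, and the function names are made up for illustration:

```python
import cmath
import math


def square_wave_coeff(n: int) -> complex:
    """Fourier coefficient c_n of a square wave that is +1 on (0, T/2) and -1 on (T/2, T)."""
    if n % 2 == 0:
        return 0j  # the DC term and all even harmonics vanish
    return 2 / (math.pi * 1j * n)


def partial_fourier_sum(x: float, period: float, n_max: int) -> float:
    """Truncate sum_{n=-inf}^{inf} c_n e^{2 pi i (n/T) x} to the terms with |n| <= n_max."""
    total = 0j
    for n in range(-n_max, n_max + 1):
        total += square_wave_coeff(n) * cmath.exp(2j * math.pi * (n / period) * x)
    # c_{-n} is the complex conjugate of c_n, so the imaginary parts cancel
    return total.real


for n_max in (1, 11, 101, 1001):
    print(n_max, partial_fourier_sum(0.25, 1.0, n_max))
```

At x = T/4 the wave's value is 1; the printed partial sums land on alternating sides of 1 and the gap shrinks as n_max grows, without any step ever "reaching" infinity.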

edit: and here is what it's doing:

Do you see what I mean now? You can't sum up to infinity, so you can't solve ∞*2 for an answer, but you can work with ∞ like any other variable, and for many modern forms of equation you have to. -

Infinites outside of math?

Some MK quotes...

Once again, my colleague Stephen Hawking has upset the apple cart. The event horizon surrounding a black hole was once thought to be an imaginary sphere. But recent theories indicate that it may actually be physical, maybe even a sphere of fire. But I don't trust any of these calculations until we have a full-blown string theory calculation, since Einstein's theory by itself is incomplete. — Michio Kaku

Combining quantum entanglement with wormholes yields mind boggling results about black holes. But I don't trust them until we have a theory of everything which can combine quantum effects with general relativity. i.e. we need to have a full blown string theory resolve this sticky question. — Michio Kaku

If I completely avoid theoretical physics questions and stick to mathematics, do you notice something here?

I'm specifically talking about MK's approach to theories, not his own...

Mathematics is a declarative language. You can make statements. You can make incomplete statements if you provide variables or equations with variables. You cannot, however, speculate or expound on theory. Mathematics is also linear. It makes clearly defined logical statements in a linear series. You can make a linear set of those linear statements. You can stack up as many linear statements describing as many dimensions or universes as you like too.

MK is a theoretical physicist specializing in cosmology and grand unified theory.

The problem MK (and every other theoretical physicist) is running into with their theories is the difficulty of constructing an analogy of sufficiently granular specificity (enough details/variables) to describe the function of the universe linearly and in an unbroken form from quantum theory into relativity (Einstein space-time).

Add to that MK's problem with accepting any theory not his own, and he says some truly whacked-out things on a regular basis. I couldn't find your quote, but just from the ones I did find we already know he's going to utterly disagree with QMRE (quantum-modified Raychaudhuri equation) and the infinite universe, because any time infinite anything comes up (or the math showing that super-blackholes just can't form - based on Radjaharma's sp? research) he just says "bullshit" and ignores it.

He doesn't debate. He doesn't offer explanations. He doesn't refute. He just says it's not worth his valuable time. It's 100% ego-driven logic.

If ∞ does that to math, is ∞ mathematical? — Agent Smith

Nope. It doesn't do that. That's a linear assumption, NOT an axiom. It's not a proof. It isn't part of mathematics at all. It's just a belief held by some people with big egos and funding to defend. -

Infinites outside of math?

It's not a joke; he's saying that your semantic mess is ridiculous.

As much as I can't stand that guy, he's right. Your entire argument is semantic and rooted in an incomplete understanding of the philosophy of mathematics and of the semantic meaning of the words you are using. -

Infinites outside of math?

That's not how language and the brain work. It's got nothing whatever to do with what I said about sets.

I just feel sad that yet again my hope of having a good conversation has met a disaster of self-loathing instead of a peer. -

Infinites outside of math?

This is not just a matter of notation, but is a crucial concept, especially in linear algebra — TonesInDeepFreeze

Of course it is, but I am not a mathematician, and you're literally talking about writing style. I pointed that out already. You're talking about notation, which by definition is literally how you write it down so that you don't need a bunch of words to explain things.

I literally said exactly that:

AFAIK one doesn't use braces for subsets but then since I'm not a mathematician by trade I don't really know anything about how mathematicians do things — SkyLeach

See, in the real world people can't specialize in everything. There are limits to how much jargon and special notation and career details a generalist can cover because there just isn't any possible way to read and remember every possible detail of every discipline, even if you spent 100% of your time doing nothing but learning and no time at all in practical application.

And you're just plain wrong about it not being "just a matter of notation". That's bloody well exactly what it is and it's specialized for your particular field because it makes sense in a field where you write down equations all the time. In fields where writing equations is a complete waste of time except for the rare instance when it's going into the documentation it's mostly worthless trivia.

As for knowing it, there would be absolutely no difference between that and me saying you don't know linear algebra because you didn't recognize that what I pasted into my post was set notation for a set of tuples (immutable ordered sequences).
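For readers coming from the Python side, the set-versus-tuple distinction this exchange hinges on can be shown with the built-in types (Python's `tuple` and `set`, which mirror but are not the same thing as formal set theory):

```python
# A tuple is an ordered sequence: position matters and repeats are kept.
print((1, 2) == (2, 1))   # False
print(len((1, 1, 2)))     # 3

# A set is unordered and holds each element at most once.
print({1, 2} == {2, 1})   # True
print(len({1, 1, 2}))     # 2

# A set of tuples: order between the tuples is irrelevant,
# but each tuple keeps its own internal order.
points = {(0, 1), (1, 0)}
print(points == {(1, 0), (0, 1)})  # True
print(len(points))                 # 2

# Tuples are immutable (and hashable when their contents are),
# which is what lets them be set members.
pair = (1, 2)
try:
    pair[0] = 5
except TypeError as err:
    print("TypeError:", err)
```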

If I had thought you'd try such a specious and obvious ad hominem attack merely because you don't like my argument, I would have taken the time to run the output through SymPy, since it's a tool for turning functional code notation into symbolic mathematics notation.

The tool's purpose is to ease the grunt work required to turn functional logic into symbolic mathematics for publication because ain't nobody got time for that shit but a mathematician.

I don't know why someone would be posting such bold claims as yours about mathematics and linear algebra while not even knowing that there is a distinction between merely a set and an ordered tuple.

Saying that linear algebra is the foundation of mathematics while not knowing the basic notion of an ordered tuple is like saying benzene rings are the foundation of chemistry while not knowing what an atom is. — TonesInDeepFreeze

Blah blah blah "HAHA! I found you out! Have at the pretender!"

Seriously what the hell is your problem? I don't care if you like the argument for set theory based axioms or not. It's a core concept of the philosophy of mathematics and I think it makes a lot of sense but I'm not on a crusade to make you accept it and you had no call whatever to try to trick me so you could try to shame me.

It doesn't really matter that you failed completely (and made yourself look like a jackass) so much as the fact that I'm a real person. I have real feelings. I like people and genuinely enjoy talking about philosophy and so far all I've gotten since I came here is a bunch of angry people trying to take out their personal issues on random strangers.

Seriously, go see a professional and deal with your issues. They're not my fault, and I don't deserve your shit.

EDIT: I also didn't know that Python borrowed the term "tuple" from mathematics. Cool, I learned a new etymology. So yeah, I guess I could have answered your question easily if I had known we shared that. -

Welcome PF members!

Imagine coming to a philosophy forum in a world where even common-man wisdom like...

to assume is to make an "ass" out of "u" and "me"

...has been forgotten.

or... imagine the world of "Cars" where every junker on blocks thinks it's a racecar just because its horsepower is close (relatively) to the horsepower of a racecar...

We don't really have to imagine, however. We can just watch "The Walking Dead", because even though the dead are destroying society and the world, the people who aren't dead are still screwing up by the numbers because they have their heads up their asses.

But hey, it's cool, right? We don't need to stop hordes of junkers or entitled kids or zombies from destroying the things we love. We can just... IDK... believe it's all gonna be OK because mamma said we were special when we were kids and everyone deserves to take our toys and break them. -

Infinites outside of math?

No, I don't. I have no clue what you're talking about. AFAIK one doesn't use braces for subsets, but then, since I'm not a mathematician by trade, I don't really know anything about how mathematicians do things.

-

Welcome PF members!

Yeah... I have a design for how to build it, but it's a really complex program and I don't have time. I'm working about 120-160 hours a week right now (long story).

Essentially it's like an IDE linter, but it uses semantic and sentiment analysis and NN heuristics to identify logical fallacies (especially direct and passive ad hominem) as well as other warnings and flags, and then requires that the writer pass the linting test before posting. The linter would also need a process-based Kubernetes cloud to process the training data. I also don't have the training data yet, but I suppose the post history from the bbforum backend to this site could work well. -
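As a toy illustration of the lint-before-posting gate described in that post (not the design itself, which relies on semantic and sentiment analysis and NN heuristics): the rules below are hypothetical regular expressions standing in for a trained model, and `lint_post`/`can_post` are made-up names.

```python
import re
from dataclasses import dataclass

# Hypothetical rules: crude regex stand-ins for the semantic/sentiment/NN analysis
# the post describes. They only show the shape of the gate, not real fallacy detection.
RULES = {
    "possible ad hominem": re.compile(
        r"\byou(?:'re| are) (?:an? )?(?:idiot|moron|jackass)\b", re.IGNORECASE),
    "possible appeal to the majority": re.compile(
        r"\b(?:everyone|everybody) (?:knows|agrees)\b", re.IGNORECASE),
}


@dataclass
class LintWarning:
    rule: str
    excerpt: str


def lint_post(text: str) -> list[LintWarning]:
    """Return one warning for every rule match found in the draft."""
    return [LintWarning(rule, m.group(0))
            for rule, pattern in RULES.items()
            for m in pattern.finditer(text)]


def can_post(text: str) -> bool:
    """The gate: a draft may be posted only if the lint finds nothing."""
    return not lint_post(text)


draft = "Everyone knows you're a moron."
for warning in lint_post(draft):
    print(f"{warning.rule}: {warning.excerpt!r}")
print("accepted" if can_post(draft) else "rejected until the warnings are fixed")
```

Keeping the gate (`can_post`) separate from the rules means a real classifier could replace `lint_post` without changing how posting is blocked.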

Welcome PF members!

I am disappoint

I came in here browsing while having coffee and this one resonated with me:

place is going downhill fast at the moment. — Wayfarer

While I realize things in America are pretty shitty, I never thought I would be blamed for another person's lack of education or intelligence. Yesterday I had it happen twice in a single thread. A thread about intelligence. One of them was outright rude and abusive while the other was apologetic about how they sounded.

Do you see what I mean? Multiple people arguing about the semantic meaning of "intelligence" (not the meaning of the thing, but the meaning of the bloody words) and hostile to bettering their approach through education because it's too much damned work.

I am not kidding, I threw up. I got up, walked around for a minute, and the wave of nausea hit me while standing in my kitchen. I barely made it to the trash.

How the fuck have we fallen so far as a people?

How can one come to a place started by a man who argued against assumed meaning and taught the man who taught the father of science, and be confronted by people so entitled and arrogant that they think knowledge is an assault on their privilege?

I just can't bear it.

I'm incredibly overworked and came here to decompress and now I want to offer my services to write an algorithm to prevent morons from posting just because I'm so sad right now. -

Infinites outside of math?

Yeah I worded that poorly. I was thinking ray as applied to a function but talking about vectors.

Anyhow, here:

{(5, 2, 4, 0, 0.0), (5, 2, 4, 1, 0.8414709848078965), (5, 2, 4, 2, 0.9092974268256817), (5, 2, 4, 3, 0.1411200080598672), (5, 2, 4, 4, -0.7568024953079282), (5, 2, 4, 5, -0.9589242746631385), (5, 2, 4, 6, -0.27941549819892586), (5, 2, 4, 7, 0.6569865987187891), (5, 2, 4, 8, 0.9893582466233818), (5, 2, 4, 9, 0.4121184852417566), (5, 2, 4, 10, -0.5440211108893698), (5, 2, 4, 11, -0.9999902065507035), (5, 2, 4, 12, -0.5365729180004349), (5, 2, 4, 13, 0.4201670368266409), (5, 2, 4, 14, 0.9906073556948704), (5, 2, 4, 15, 0.6502878401571168), (5, 2, 4, 16, -0.2879033166650653), (5, 2, 4, 17, -0.9613974918795568), (5, 2, 4, 18, -0.7509872467716762), (5, 2, 4, 19, 0.14987720966295234)}

Edit: the ray stops at 20, but that was to keep the post short.
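The fifth component of each tuple above matches sin(n) for n = 0 to 19, so the set can be regenerated with a Python set comprehension. A sketch, assuming (as the numbers suggest but the post doesn't state) that the first three components are a fixed triple and the fourth is the step n:

```python
import math

# Assumption: the first three components are a fixed triple (5, 2, 4) and the
# last two are a step n and sin(n); the posted values match math.sin(n).
ray = {(5, 2, 4, n, math.sin(n)) for n in range(20)}  # stops at 20 to keep it short

# A set has no order of its own, so sort by the step n before printing.
for point in sorted(ray, key=lambda p: p[3]):
    print(point)
```

The sort is needed precisely because the set itself carries no association between its elements; any ordering has to be defined explicitly.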

Edit2: it should be pointed out that since you didn't define the association, the possibilities are infinite. The only way to make it useful is to define how they're associated. -

Infinites outside of math?

Yes, it's literally the first sentence:

In mathematics and physics, a vector is an element of a vector space.

Trouble is, then we have to get into what a vector space is...

In mathematics, physics, and engineering, a vector space (also called a linear space) is a set of objects called vectors, which may be added together and multiplied ("scaled") by numbers called scalars. Scalars are often real numbers, but some vector spaces have scalar multiplication by complex numbers or, generally, by a scalar from any mathematic field.

And from there we have to get into specific allegorical definitions (while trying to avoid jargon) in order to limit the definition of 'element association'.

Really though, at this point the specifics are specious since the bolded part of the definition of vector space pretty much covers it.

My word "association" is indistinguishable from the definition of a mathematical field:

In mathematics, a field is a set on which addition, subtraction, multiplication, and division are defined and behave as the corresponding operations on rational and real numbers do.
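Both definitions boil down to two operations, addition and scaling, with scalars taken from a field. A minimal sketch using plain Python tuples as vectors and floats standing in for the real numbers; the helper names are illustrative, and the assertions only spot-check a few axioms on sample values:

```python
Vector = tuple[float, ...]


def add(u: Vector, v: Vector) -> Vector:
    """Vector addition, component by component."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same number of components")
    return tuple(a + b for a, b in zip(u, v))


def scale(c: float, v: Vector) -> Vector:
    """Scalar multiplication: every component is multiplied by the same scalar c."""
    return tuple(c * a for a in v)


u, v, w = (1.0, 2.0, 3.0), (4.0, 5.0, 6.0), (-1.0, 0.5, 2.0)
zero = (0.0, 0.0, 0.0)

# Spot-check a few vector-space axioms on these sample vectors (a check, not a proof).
assert add(u, v) == add(v, u)                                      # commutativity
assert add(add(u, v), w) == add(u, add(v, w))                      # associativity
assert add(u, zero) == u                                           # additive identity
assert scale(2.0, add(u, v)) == add(scale(2.0, u), scale(2.0, v))  # distributivity
print(add(u, v), scale(0.5, w))
```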

Maybe a real-life example would clear it up better, though.

In radiology the system that stores images is called PACS. Most of the time, those systems deal with DICOM images. For some modalities those images can be regular computer graphics, but not for things like PET. PET is stored as a matrix defined in the sagittal, coronal and axial (transaxial) planes. The value of each element in the matrix is called a Hounsfield scalar. It's a relative absorption rate (defined by the precision of the PET hardware) between 0-255 (or bigger).

You can't do anything with the matrix in that form, however. You must either convert it to a 2D vector space or a 3D vector space. You can do this directly or by scaling it in a process called fusion with MRI or CT data, which is generally stored as a more traditional JPEG 2000 or MPEG.

Thus, the resulting vector space consists either of 3-element vectors (x, y, h) or of 4-element vectors (x, y, z, h), with x, y and z mapped to the coordinate space and h mapped to the Hounsfield absorption value.
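A minimal sketch of that conversion, assuming the matrix is held as nested Python lists indexed `volume[z][y][x]` with one scalar per voxel. The toy volume is made up for illustration (not real scanner data), there is no DICOM parsing, and treating z as the slice axis is an assumption:

```python
# Hypothetical toy volume: volume[z][y][x] holds one scalar h per voxel.
volume: list[list[list[int]]] = [
    [[0, 10], [20, 30]],   # z = 0
    [[40, 50], [60, 70]],  # z = 1
]


def to_4d_vectors(vol: list[list[list[int]]]) -> list[tuple[int, int, int, int]]:
    """Flatten a 3D matrix into (x, y, z, h) vectors: position plus the voxel's value."""
    return [
        (x, y, z, h)
        for z, plane in enumerate(vol)
        for y, row in enumerate(plane)
        for x, h in enumerate(row)
    ]


def slice_to_3d_vectors(vol: list[list[list[int]]], z: int) -> list[tuple[int, int, int]]:
    """Take a single slice at index z and produce (x, y, h) vectors: the 2D case."""
    return [(x, y, h) for y, row in enumerate(vol[z]) for x, h in enumerate(row)]


print(to_4d_vectors(volume)[:3])
print(slice_to_3d_vectors(volume, 1))
```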

It's a whole other dimension, but it's still valid. -

Is depression the default human state?

Not keen on dogmatic rhetoric that sounds like you are trying to make me a gift of your wisdom. I know it's real and I have my views. And I agree that good treatment is not always provided and underfunded (I am not in America) — Tom Storm

Ouch.

I'm guessing that came from "for your benefit"?

I read the context of your reply as meaning you thought I might hold the view that clinical treatment was "never effective" (I think @EugeneW said that).

My motive was to clear up your perspective of my views about clinical treatment, not to make any statement (even implied) about your views.

It's probably impossible for anyone to function with no ego, but for whatever it's worth my ego is a very small and under-fed thing that spends all its time plotting to kill my objectivity (which is my favorite personality trait). It got into a bit of a life accident a while back and wound up badly damaged. It was on life support for years. :-) -

Infinites outside of math?

As far as I can tell he's just using the singular form of a word and saying it can't be counted, which is kinda like asking "can the number 1 be counted?"

The answer is, of course, yes: that's the definition of the commutative principle. We just use a different word, with a root modifier for plurality, instead of the singular.

That's why I said it didn't make any sense. You can have multiple surfaces, consciousnesses, etc... -

Infinites outside of math?

Your perspective of "set theory" is not the normal math perspective. If it works for you, fine — jgill

The point was that I have both perspectives. I double majored in mathematics. This being a philosophy forum, I default to the more general view (and certainly in this context, discussing the mathematics-only perspective would make little sense).

I'm also curious why you just skipped over the whole part about a vector being defined by element association, since that was both the mathematics perspective and the practical application perspective. -

What is intelligence? A.K.A. The definition of intelligence

Kinda jumping into this after reading through posts, replies and speculations, so I'm tagging the people I'm specifically addressing so you all know I'm thinking of things you personally said while replying to the group.

First let me address the linguistic problem. Whether it's slang or jargon, the simple fact is that language does an abysmal job of providing the contextual clues children use in development to build cognitive links around the concept of intelligence.

Be warned, this will be a long post because it's a complex topic that aims to deal with a subject that is notoriously difficult for children to learn in the same way they learn everything else.

The concept is as diverse as the human brain and that particular object happens to be the single most complex physical object in the known universe (known by man at least if that isn't obvious).

I don't want to bore people so if you already know how amazing it is you can skip this next part. It's intended to just gush about how cool the brain is anyhow.

DATA DUMP

For any of you who might think I'm being hyperbolic, consider this: a microprocessor (current generation) is a single die (piece of silicon) containing a gating bridge, at least two "cores", control logic and connections to L1 and L2 cache (L3 is only on-die in Xeon procs), and each core hard-encodes about 12 different specially stacked instruction sets (dictionaries of operations), from the x86 architecture to the MMX and SSE extensions. Essentially all of them are 64x64 operator/operand intersections.

Even with all of that, it's still only binary operations where 64 bits define the operator (instruction set) to work on the other 64 bits (the working set) and the output (bit) can be 0 (neutral) or 1 (charged).

In comparison, the human brain has a sodium ion channel, a potassium ion channel, neurotransmitters (like amplifiers), neural inhibitors (like resistors) and hormones (which can activate or deactivate additional neural clusters as well as affect the release and uptake of transmitters and inhibitors).

Those varied operations each act like 1 channel in a CPU by carrying one informational factor, so that's roughly 4 factors (equivalent to 8 possible values of 'bit').

Next, the human brain has evolved over time with stacked layers. These have names like occipital, temporal, parietal, hypothalamus, limbic region, Broca's region, Wernicke reg... ok maybe I'm going too far.

The point is, each one of those is a large enough mass of neurons to be easily seen and held by the human eye and hand.

Packed into each is anywhere from a few hundred million to a billion neurons.

Each neuron has a primary output (the axon) and a cloud of synapses (like hairs on the other end) that bond it 1:1 or 1:n to other neurons (or even multiple times to a single neuron). Each one of those synapses and the axon transmit all 4 variables of state to their neighbors, or possibly to a nerve cluster or to the limbic region for connection to a whole other region of the brain.

So, instead of a 64-bit processor you have a 4!*100 processor (2,400 channels). There are definitely signs that each neuron can even make some decisions on operations internally, not merely as part of the overall cluster, but I'm trying to simplify so I'll let that lie there. So right now we have about 24 billion possible configuration channels on the low end to about 24 trillion for larger structures like the prefrontal cortex (and you have two of them).

That is the instruction set of the brain, if you will. The operation set is the neuronal network itself (the series of connected and reinforced pathways between neurons).
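The multiplication behind those channel figures can be written out so that each input is explicit. This only restates the post's back-of-the-envelope model (4! × 100 channels per neuron, times a neuron count); it is not an established neuroscience measure, and the counts in the loop are sample inputs to vary, not measurements:

```python
import math

# Restates the post's toy model; these are not measured values.
CHANNELS_PER_NEURON = math.factorial(4) * 100  # 4! * 100 = 2,400, as written in the post


def configuration_channels(neuron_count: int) -> int:
    """Total 'configuration channels' in the model: channels per neuron times neuron count."""
    return CHANNELS_PER_NEURON * neuron_count


# Sample neuron counts to plug in; the result scales linearly with the count.
for neurons in (10**7, 10**8, 10**9, 10**10):
    print(f"{neurons:,} neurons -> {configuration_channels(neurons):,} channels")
```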

Each operation instruction (all those trillions of configurations) takes place over a series of fractional seconds while the 400Hz oscillating pulse (theta, beta, alpha waves) continues. That basically says that, unlike in a CPU, the operator scales over time (then falls off) instead of initiating a state change instantly. The very analog nature of the operations, however, can be used to double the state of operations as a whole new operand too.

The inference from this "summation" of the complexity is that even though the brain operates at a MUCH lower "clock speed" than a processor, it still achieves many orders of magnitude faster processing because it covers its entire compiled data set 400 times per second, while a modern CPU takes the better part of 45 seconds to process its entire executable memory space, and that is only about 2GB on average or about 18GB on a desktop running many applications.

Have you ever noticed your PC running very slowly while indexing files or when you have too many tabs open in the browser? Also, your computer has all that information in discrete and unassociated chunks, while the human brain has to hang everything it knows on an associated framework.

NOTE: The whole reason people can't recall memories from before about 2 or 3 years of age is that their entire adult memory space is hung on a compatible framework that developed around that time. The things that came before just don't make sense any longer.

BREAK DATA DUMP

As I've mentioned in other posts, the study of the human mind as an empirical discipline (said with a huge amount of tongue-in-cheek) has only been around since about the 1930s, so it's a very very very new "science", and a whole lot of it isn't science at all due to some problems during the Cold War leading up to a total ban on many (most) forms of experimentation in the West since the 70s as unethical/inhumane. Of course that ban hasn't done a whole lot for our knowledge of how the brain works and develops, but there is still progress, if slow.

One fantastic (and very new) bit of research is about how information absorption affects perceived intelligence: Your brain needs you to read - Pocket Article (the study is referenced in the article; I can link it if anyone wants).

I'm going to take a break here because this is already long and I have other things I need to do. -

Is depression the default human state?

...Agree with you. I've worked with — Tom Storm

Just one small problem: that's not his view.

Nobody made any counterargument to yours, and that's not even close to why @javi2541997 is under fire.

It's the fact that the conversation was about causes for depression and not the pros and cons of treatment for any given patient.

So, do you agree with @javi2541997 that a person should be passive-aggressively mocked and belittled when they state their views and purpose of asking a question?

Do you agree with @javi2541997 when he ignores the strictures of polite conversation, reasonable presentation of views and cited evidence contrary to his stated opinions, and then follows a predictably selfish and narrow-minded path towards hostility because he hasn't learned anything at all about critical analysis in finding truth?

I suspect that you just saw a page or two of argument and thought it was about whether clinical depression is real.

Let me state very clearly and unambiguously for your benefit that clinical depression is very real. The only known treatment for clinical depression is medication because its only root cause is damage to the brain.

Let me also state very clearly that diagnosis of clinical depression is now done in the most haphazard and lazy way possible by under-qualified and under-funded state hospitals given a ridiculous mandate to treat millions of depressed people, and since the correct treatment is too hard to do on a shoestring budget, it's just easier to call them all clinical and dump the problem on medical insurance.

Just... please don't feed the trolls. -

Is depression the default human state?

2. I shared an academic paper of Harvard explaining it, but you do not like it. — javi2541997

You're completely ignoring the time I spent (and the money I saved you personally) providing the full unlocked study by link, plus the breakdown of both the so-called "paper" (it was a medical brochure) and its author (clinical psych), which is an unwarranted and rude waste of my time.

Washington against me view — javi2541997

... are you ... ok?

Then, you started to laugh at me and denigrate my dignity. — javi2541997

Never laughed at you and whatever dignity you have was forfeit the moment you started passive-aggressive mockery of the entire foundation of this forum.

Do you know what do you excess of? hypocrisy — javi2541997

Maybe, but you're never going to convince anyone by quoting me in a way that does nothing whatever to support your argument and verbatim follows the path I said that every moral relativist follows when faced with evidence of their intolerance of others. -

Is depression the default human state?

I really wish I knew how to actually help people going through your problem more effectively.

Eugene, would you really let us down because you do not want to share your intelligence? I am disappointed... you said you were the best at neuroscience previously.

You speak speak with such rotundity that it looks like you are the best...

Do you know what do you lack of? modesty — javi2541997

Just because a person knows more about one thing or even about everything doesn't mean that they add up to a more valuable person. That overly simplistic and frightening way of thinking about relative self-worth is typically the underlying source of hostility towards people who don't accept one's point of view.

It tends to stem from a morally relative core. That's a person who, rather than having philosophical underpinnings for truth, uses relative consensus to establish the value of information (i.e. correctness).

Unfortunately it's based on a logical flaw known as appeal to the majority, and it completely ignores the unfortunate truth that the majority is statistically more often wrong (to some greater or lesser degree) than any single given philosophy. This is just due to some of the rules of information theory and least common denominator in social memetics.

I have yet to convince even a single moral relativist to chill out and figure out why they're so intolerant of intelligent discourse but at least I tried (without just getting angry at you) so I call that a win in my personal growth. -

Is depression the default human state?

Are you expecting people to just accept really rude sarcasm because you said it passive aggressively?

You are being incredibly rude too. He didn't claim he had a special degree or certification and lots and lots of people spend their private time studying subjects of special interest to them.

Let me find that research... one second... aha! Yeah, so a Yale Psychology study showed that introverts were actually really really good at psychology (because they try to understand people due to their personality traits). Here's the study: Yale - Social Psychology -

Is depression the default human state?

If you ever want to up your game, look up NEURON (neural pathway simulation), which allows modeling of ion channels for the purpose of simulated experimentation with neurochemistry.
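NEURON has its own Python interface, which this sketch does not use. As a much simpler, hand-rolled stand-in for that kind of simulated experiment, here is a leaky integrate-and-fire neuron: one membrane voltage and no individual ion channels, stepped with forward Euler, with illustrative parameter values rather than values taken from any particular study:

```python
def simulate_lif(input_current: float, duration_ms: float = 100.0, dt_ms: float = 0.1,
                 tau_ms: float = 10.0, v_rest: float = -65.0, v_reset: float = -70.0,
                 v_threshold: float = -50.0, resistance: float = 10.0) -> list[float]:
    """Leaky integrate-and-fire neuron, stepped with forward Euler.

    Membrane equation: tau * dV/dt = -(V - v_rest) + R * I
    Units: mV, ms, megaohms and nanoamps (so R * I comes out in mV).
    Returns the approximate spike times in ms.
    """
    v = v_rest
    spike_times = []
    for step in range(int(duration_ms / dt_ms)):
        dv_dt = (-(v - v_rest) + resistance * input_current) / tau_ms
        v += dv_dt * dt_ms
        if v >= v_threshold:          # threshold crossed: record a spike and reset
            spike_times.append((step + 1) * dt_ms)
            v = v_reset
    return spike_times


for current in (1.0, 2.0, 3.0):
    print(f"{current} nA -> {len(simulate_lif(current))} spikes in 100 ms")
```

With these parameters a 1 nA input settles at -55 mV, below threshold, so the neuron never fires; larger inputs push the steady state past threshold and the spike count rises with the current.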

There are whole suites of tools available (open source, I might add) for modeling the brain now, which helps when deconstructing fMRI. -

Is depression the default human state?

And you're nominated as resident bird watcher. (meant 80% light-heartedly)

More seriously though, this is a philosophy forum and the whole point of this place is to talk about philosophy. That's what both of us are doing so... what's the problem? -

Is depression the default human state?

Are you really sure of such statement? According to Harvard Health Publishing (HHP) :

To be sure, chemicals are involved in this process [depression], but it is not a simple matter of one chemical being too low and another too high. Rather, many chemicals are involved, working both inside and outside nerve cells. There are millions, even billions, of chemical reactions that make up the dynamic system that is responsible for your mood, perceptions, and how you experience life. — javi2541997

... so? Yup, there are a lot. That's why you have to discretize the problem rather than trying to solve a thousand-variable differential equation like you're Deep Thought in HGTG. Christ, just forget this way of looking at it...

Have you ever looked at that study? I mean really dug deep into it. Probably not, let me help.

Disclaimer: I did this really fast because I have no time so I didn't double check things (cut corners) and if I missed anything I apologize in advance. I really don't have time but I care about social sickness so... doing my best.

Here is the source ($20 so... you're welcome) - Understanding Depression - Harvard Health.

And what the article paraphrases is this: it's a big problem and has lots of repercussions and we aren't paid to sabotage our funding so STFU and take the drugs.

The paper is literally a brochure for clinical psychology to make scared patients shut up and buy the damned drugs. It's written by this douche: Michael Miller

Look at what branch of psychology he's in: clinical.

CLINICAL PSYCHOLOGY IS ABOUT TREATMENT, NOT CAUSES.

(not yelling, emphasizing)

There are lots of branches of psychology (and medicine) and each one focuses on a distinct area. Evolutionary, developmental and neuroscience focus on causes, the rest are less empirical and far more about... well other goals.

This being a philosophy forum, surely you can piece together the fact that a person's motives are going to be driven by context, and a lot of our medical science is driven by the "do no harm" maxim. A patient isn't an experiment. You're supposed to make them "better", which means improve their state of well-being, not fix them.

I believe, very passionately believe (if you haven't noticed) that they definitely are doing a great deal of harm by suppressing people trying to figure out why they're depressed. Drugging them isn't a solution, it's a cheap way for an over-stressed and under-funded piece of the social machine to get some grease so it stops threatening to come apart and mess up the whole machine.

All of society is getting ... worse. People are suffering more mental health problems. People are more angry, confused and dysfunctional than at any point in medical history (which is really short because psychology wasn't really a thing until the 1930s). Entitlement is on the rise. Reading comprehension is dropping at a compounding rate that was over 20% in 2016. Intolerance and violence are on the rise. Lying (dishonesty) is also increasing at a geometric rate.

Just like you said about the brain: these are compound problems driven by hundreds of thousands of factors but the measurements are pretty solid and more approachable than ever. The WHO has a suite of databases and tools for access to national data archives and science. There are redistributors that use loopholes to distribute journals to people who can't afford the outrageous fees. I use sci-hub.nl btw.

Anyhow, my hope is that you guys can leverage my incredibly extensive knowledge of the humanities to at least sum up the state of things. BTW, my specialization for the humanities is as a research consultant (data science with NLU (Natural Language Understanding) and the use of social media and big data to run semantic and sentiment analysis in real-time). -

Is depression the default human state?

There are two ways to look at depression: the "official" (clinical) way vs. the evolutionary/developmental/sociological way.

The official way says it's a disease that should be treated with drugs. The official way is complete horseshit that intends to "fix" you by selling you drugs that mess you up, while completely ignoring the needs of the patient.

The actual empirical science says that depression is the result of being trapped in a completely messed-up social system that treats you like a biological machine and a resource to be harvested, used up, then thrown away. You don't have to be smart for your brain to know the truth while your rational mind deals with it through denial: depression. That doesn't imply that intelligence has anything to do with it. In fact, studies suggest that the smarter you are, the more depressed you are, because the more you understand how totally used and unappreciated you actually are.

By the numbers, slavery was replaced with a kind of denial-based inflation that forces the slaves to feed, house, clothe and sell themselves for ever lower prices, on less food and in more cramped housing. But since they sell themselves, they blame themselves, and since some people have it worse (they're beaten and raped), they beat themselves up for feeling bad about how they're treated. It's diabolical. -

Infinites outside of math?

Nope, I definitely haven't done whatever you said. I also have no clue how to do that because I don't understand it.

-

Infinites outside of math?

A sphere in 3-D is not composed of points? — jgill

Not if you're using set theory. Calc 3 FTMFL

EDIT: Damn it, I just realized that my perspective will probably get me into another argument...

Set theory isn't just sets of points; it can also be a scene described as a space (Hilbert, Sobolev, etc.) with objects described functionally instead of as sets of points. I deal far more with described scenes than with sets of points, except when rendering a solution set. -
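To make the contrast concrete, here is a minimal Python sketch (an illustration, not a description of any particular renderer) of a sphere described functionally rather than stored as points. The names `sphere_sdf` and `sample_surface` are hypothetical, and a signed distance function is just one common way to describe a shape functionally. Points only appear when a finite solution set is sampled for rendering.

```python
import math

def sphere_sdf(x: float, y: float, z: float, r: float = 1.0) -> float:
    """Signed distance from (x, y, z) to a sphere of radius r centred at the origin.

    Negative inside, zero on the surface, positive outside. The sphere is
    described entirely by this function; no points are stored.
    """
    return math.sqrt(x * x + y * y + z * z) - r

def sample_surface(r: float = 1.0, n_theta: int = 8, n_phi: int = 16):
    """Produce a finite set of surface points only when one is needed (e.g. for rendering)."""
    points = []
    for i in range(n_theta + 1):
        theta = math.pi * i / n_theta          # polar angle, 0..pi
        for j in range(n_phi):
            phi = 2 * math.pi * j / n_phi      # azimuth, 0..2pi
            points.append((
                r * math.sin(theta) * math.cos(phi),
                r * math.sin(theta) * math.sin(phi),
                r * math.cos(theta),
            ))
    return points

print(sphere_sdf(0, 0, 0))   # -1.0 (the centre is inside)
print(sphere_sdf(2, 0, 0))   # 1.0 (one unit outside the surface)
pts = sample_surface()
print(len(pts))              # 144 sampled points
```

The function answers membership questions for any point in space, while the sample is one finite solution set whose resolution (`n_theta`, `n_phi`) is purely a rendering choice. The poles get sampled repeatedly here; a real renderer would deduplicate them or use a different parameterization.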

Infinites outside of math?

The sequence <2,7,9> can be seen as a vector. The set {2,7,9} cannot, since the positions of the elements is arbitrary — jgill

What's that? So a set of random integers is defined that way? Do you speak of a set or a sequence? — jgill

It's strange that you read those statements as "all sets are" instead of "any set can be". Maybe my word choice was poor? I tend to think in terms of software, not geometry, so there doesn't need to be a pattern in the numbers in a set, just a relationship between them that associates them, even if they're pseudo-random numbers.

Typically a vector is rationally linked with some spatial coordinate system, but in software you can't constrain vectors by guessing the relationship between their elements from the values alone.
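In Python terms, the distinction jgill draws maps roughly onto tuples (ordered) versus sets (unordered). This is a minimal sketch: `dot` is a hypothetical helper, and the seeded generator simply stands in for "pseudo-random numbers". What makes the random values usable as a vector is the order they were generated in, an explicit relationship, not anything inferred from the values themselves.

```python
import random

# A sequence (tuple) keeps position, so its elements can act as vector components.
seq_a = (2, 7, 9)
seq_b = (9, 7, 2)

# A set keeps only membership; position is meaningless.
set_a = {2, 7, 9}
set_b = {9, 7, 2}

print(seq_a == seq_b)  # False: order is part of a sequence's identity
print(set_a == set_b)  # True: same members, so same set

def dot(u, v):
    """Dot product of two equal-length sequences (undefined for bare sets)."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(u, v))

# Pseudo-random values have no pattern, but the order in which they were
# generated is an explicit relationship that lets us use them as a vector.
rng = random.Random(0)  # fixed seed for reproducibility
components = tuple(rng.randint(0, 9) for _ in range(3))
print(dot(components, (1, 0, 0)) == components[0])  # True
```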

Not my experience — jgill

This isn't about personal experience or a single academic discipline. When I think of the problems in academia, I just don't think of math as one of them. It's not that it isn't one; it's just that the only time the problems ever came up was over the rants against infinity and set theory (which are actually a bit funny to read at times). Mathematics is quite possibly the most empirical of all the sciences.

When I talk about many of the problems in academia, I tend to be thinking of cosmology, astronomy, paleontology and the humanities (psych, anthro, socio, etc.). The more empirical and rigid a discipline is, the less it seems to get into academic problems.

I double majored, but since I graduated I don't think I've read more than a half dozen research papers in mathematics. It's just not a field I need to follow closely, because new algorithms or solution sets are rare. -

Infinites outside of math?

↪SkyLeach

I'm somehow not convinced by your words. Uncountability is, in a sense, beyond (conventional) mathematics seen as a counting activity.

True that the variety of numbers has expanded over history. Yet we seem distinctly more comfortable with the category of natural numbers than with any other I can think of. — Agent Smith

Perhaps this will help.

Starting Premises:

- Mathematicians earn a PhD, just like academics in all other non-medical disciplines

- This is because their doctorate is in a very specific branch of philosophy

- That branch is called the philosophy of mathematics

- The philosophy of mathematics is derived from axioms (self-evident truths)

- The argument to prepend (as opposed to append) ZFC to the philosophy of mathematics is a rational argument based on a self-evident truth, just like all other axioms of mathematics.

- My words are just me talking about that axiom as I understand it.

Look around you (allegorically speaking) and point at anything that is a 1 or a 2 ... or any other number.

Numbers aren't things, they're representations.

Q: What do they represent?

A: Cognitively distinct concepts.

Q: What is a cognitively distinct concept?

A: Anything the rational mind considers a discrete set.

Q: Is there any limit on what the mind considers a distinct concept?

A: Yes. The mind is only able to process allegorical comparisons as set theory, and it has increasing trouble with concepts sufficiently distant from experience to create one-off pathways (dangling pathways, singular references or, in the case of damage, Disconnection Syndrome).

Q: Can you put that in terms I can understand?

A: Maybe. Concepts like massive measurements are very hard for the mind to put into comparative context, and since comparison is how the mind actually works, a great many mistakes are made when doing it until the mind has compensated.

Q: Can you give an example?

A: Yup. Timescales in galactic terms. Distances in interplanetary terms. Infinities. Distance between two points along a curved path like a planet's surface instead of a straight line. Asymmetric periodicity.

Right now, traditionally and with the same consensus jgill mentioned, numbers are referred to as measurements.

Counting (commutative principle) is just an axiom. Numbers aren't counting except in the sense of combining any two measurements.

When we think of counting apples, it can be hard to think of it as a measurement. In effect, however, you're measuring volume (just not very precisely). If you're counting apples to fill a pie, the correct answer can vary, because the size of the apples will definitely vary based on what kind of apple you are counting: Granny Smith vs. Red Delicious, for example, since Granny Smith are small green apples and Red Delicious are quite large red apples.

OK, given that all numbers are just relative measurements between two points in a conceptual context of the mind, we can begin to argue for set theory being the first axiom:

- All real numbers are representational measurements of similar sets (i.e. apple = set of apple fruit cells) with a contextually regular periodicity (i.e. each set is defined by the apple skin around it or a complex series of functions that eventually lead back there in a well-understood way).

- Any set can be defined as a vector (a starting point but no limit) - comes from ZFC

- Any set can be defined in terms of its periodicity function (an algorithm, typically a mathematical function, that defines the measurement of members of the set) - from ZFC

- Any set defined in terms of its periodicity function is effectively infinite unless bounded by logical rules imposed by the algebraic functions of the definition. (also from ZFC)
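A loose programming analogy for the last two points, not a formal statement of ZFC: a set described only by its membership rule is open-ended, and a finite set appears once the rule is applied within a bound. This roughly mirrors ZFC's Axiom Schema of Separation, which forms {x ∈ A : φ(x)} only from a set A that is already given. The names `members` and `is_multiple_of_3` are hypothetical.

```python
from itertools import count, islice

def members(rule, start=0):
    """Lazily yield every integer n >= start that satisfies `rule`.

    With no bound this generator never terminates on its own: the rule
    describes an unbounded collection.
    """
    for n in count(start):
        if rule(n):
            yield n

def is_multiple_of_3(n: int) -> bool:
    return n % 3 == 0

# Any finite prefix of the unbounded description can be inspected...
print(list(islice(members(is_multiple_of_3), 5)))  # [0, 3, 6, 9, 12]

# ...but a finite set only appears when the rule is applied within a bound,
# here the already-given set range(20).
bounded = {n for n in range(20) if is_multiple_of_3(n)}
print(sorted(bounded))  # [0, 3, 6, 9, 12, 15, 18]
```

Calling `list(members(is_multiple_of_3))` without a bound would never return, which is the programming counterpart of "effectively infinite unless bounded".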

I know that's a lot to absorb but it's my best attempt at simplifying the entire argument. -

Infinites outside of math?

You didn't ask, and even if you did, I wouldn't, for the reasons I already stated.

To remind you, in case you forgot: it's a waste of my time. I don't care what you infer; you've already demonstrated that it isn't going to be affected by anything I say or do. -

Infinites outside of math?

Well, sure. That was why I pointed out the demographic spectrum and how things are changing, but slowly. The previous generation asserts too much direct control over too much of each generation's career, which retards the normal evolution of thought and culture in academia.

In addition, the economic and sociopolitical spectrum tends to limit entry into academia as part of its statistical weighting towards wealth and privilege.

Finally, the social authority encourages ego which, like the other factors mentioned, retards change and adoption of new ideas. -

Infinites outside of math?

Not really. That's just the first traditional axiom (a self-evident truism in the philosophy of mathematics).

Then again, as I said, there is a very strong and growing debate about that, since the argument for sets being the first self-evident truth has a lot to offer and, of course, can't be (or at least hasn't been) invalidated.

The essential argument is that numbers are simply a form of measurement and mathematics is a precise language for making falsifiable linear statements about those measurements. Numbers are, after all, assigned to representations of sets. Apples/fruit/kilometers/distance/seconds/time... you get the idea. -

Infinites outside of math?

♾? If I understand you, the only thing the infinity symbol means in mathematics is that the limit isn't known (thus it can't be summed).

-

Infinites outside of math?

Ooh, you're right, I forgot about that one. And yes, it's a problem with rotating space instead of rotating the object in space. You can actually have the problem with either implementation, depending on how you're treating your overall scene, because quaternions still operate on the three axes and, depending on what you're doing in the scene, can become mathematically ambiguous due to loss of precision (i.e. the square root problem and other things).

I am an engineer, but a software engineer, not mechanical/chemical/structural/etc.
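One reading of the precision problem mentioned above, sketched in Python under the assumption that orientation is built up by repeatedly multiplying unit quaternions in floating point. The helper names are hypothetical. Rounding error nudges the quaternion's norm away from 1, so it gradually stops being a pure rotation; the usual remedy is renormalization, which costs a square root per correction.

```python
import math

def q_mul(a, b):
    """Hamilton product of quaternions given as (w, x, y, z) tuples."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )

def q_norm(q):
    return math.sqrt(sum(c * c for c in q))

def q_normalize(q):
    n = q_norm(q)  # the square root: extra work, and itself subject to rounding
    return tuple(c / n for c in q)

def axis_angle(axis, angle):
    """Unit quaternion for a rotation of `angle` radians about a unit `axis`."""
    s = math.sin(angle / 2)
    return (math.cos(angle / 2), axis[0] * s, axis[1] * s, axis[2] * s)

step = axis_angle((0.0, 0.0, 1.0), 0.001)  # small rotation about z
drifting = (1.0, 0.0, 0.0, 0.0)
renormed = (1.0, 0.0, 0.0, 0.0)
for _ in range(100_000):
    drifting = q_mul(drifting, step)               # rounding error accumulates
    renormed = q_normalize(q_mul(renormed, step))  # norm reset to 1 each step

print(abs(q_norm(drifting) - 1.0))  # small, but can grow with the step count
print(abs(q_norm(renormed) - 1.0))  # stays at rounding level
```

Renormalizing on every step is the simplest policy; renormalizing every N steps trades a little drift for fewer square roots.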


As for:

I was joking about the behavior of fellow mathematicians. In all my days I never saw a rant. And your description of a math person's personality is valid sometimes, but more often they are social animals - the practice of mathematics is a very social activity. I recall being at an autumn meeting at the Luminy campus of the University of Marseilles in 1989 at which there was communal dining and quite a jovial atmosphere. And a summer meeting at the University of Trondheim in 1997 where a member of the royal family attended a convivial banquet overlooking a ski jump where their Olympic team put on a performance. Other international meetings displayed similar atmospheres. — jgill

Please allow me to clarify using my own quote:

...gifted in mathematics tend to be (very much a generalization) very judge... — SkyLeach

I am very aware of the problems of bias in generalization, and that's why I pointed out it was a generalization. Any time you combine multiple data points in a demographic, you wind up with a much flatter distribution curve: "... tend to be ..." drops to 12% instead of the 50:50 median split of a single data point. Most people conceptualize bias generalizations at 50%+ during conversation because... well, I'll avoid going into why right now...

As soon as you add any other variables, you can completely invalidate the metric. I was using my knowledge of Jungian typology and the MMPI, which are the most widely used and thus the easiest to draw inferences from. They have their limits, however, so I try to make it clear that I'm generalizing and try never to use them to evaluate any individual (or prejudice my behavior). The operative word is try; I'm human too.

EDIT: One further thing to add. When it comes to a discipline, generalizations become much more appropriate, because we are very much social animals, and when we make choices about our own bias (which is essential to cognitive function) we must choose between social consensus and personal time investment for validation (hopefully those, not the bad ones like bigotry, faith or inanity). Academics is, at its core, an appeal to authority. -

Infinites outside of math?

It's the only one you're getting. You're a limbrain ISFJ with an ego the size of a mountain who has repeatedly demonstrated he's here to stroke his ego, not discuss philosophy.

And no, I'm not going to ignore you but I'm also not going to argue (as in fight) with your ego instead of your rational mind. It's a waste of my time.

EDIT: I mixed up two replies in one. Glad I caught it on the editorial re-read. That's what I get for typing in the middle of a family in full Saturday boogie. -

Infinites outside of math?

Instead of just assuming, you should learn a bit more about the humanities and what kinds of research are regularly done.

SkyLeach


- © 2026 The Philosophy Forum