Comments

-

A Pascalian/Pragmatic Argument for Philosophy of ReligionOne can raise the stakes of any proposition, simply by appending to it a stake-raising clause. For any proposition A there is a proposition A* = A & C, where C = (costly consequences for not believing A). So the consequence of your position is that you have to take seriously infinitely many propositions, not just those concerning the existence of god and his proper worship.

You could object that A* is spurious, but your argument does not say anything about the content and the quality of the propositions that you say should be taken seriously; the only reason you give for taking them seriously is C. -

What is logic? Simple explanationTry to mount an argument that we ought to use logic if we wish to arrive at true beliefs, without using logic. — andrewk

Isn't that what the normative principle amounts to (or something similar)? It's precisely because mounting an argument without the use of logic is impossible by definition that such guiding principles cannot be anything other than normative. -

How I Learned to Stop Worrying and Love Climate ChangeIt's mind-boggling that despite a consensus opinion of the expert community, no remotely credible scientific and engineering justification, no independently verified demonstrations, and no apparent interest from the industry, which would stand to profit enormously if LENR was viable, there are still people eager to uncritically swallow this bullshit year after year after year. "Coming to market soon!" (And we've been hearing this song for how long? From Rossi alone - since 2011 at least.)

-

How I Learned to Stop Worrying and Love Climate ChangeFascinating factoid: In a single hour, the amount of power from the sun that strikes the Earth is more than the entire world consumes in a year — Hanover

But in order to make use of all that energy you would have to cover the entire surface of the earth with perfectly efficient solar panels, which then perfectly efficiently deliver that energy to end consumers. So, all things considered, that doesn't actually seem like that much usable energy. -

Causality conundrum: did it fall or was it pushed?In The Norton Dome and the Nineteenth Century Foundations of Determinism, 2014 (PDF) Marij van Strien takes a look at how 19th century mathematicians and physicists confronted instances of indeterminism in classical mechanics*. She notes that the fact that differential equations of motion sometimes lacked unique solutions was known pretty much for as long as differential equations were studied, since the beginning of the 19th century. Notably, in the 1870s Joseph Boussinesq studied a parameterized dome setup, of which what is now known as Norton's Dome is a special case. Although Boussinesq did not solve the equations of motion for the dome, he studied their properties, and noting the emergence of singularities for some combinations of parameters he concluded that the equations must lack a unique solution in those instances (this can also be seen by noting that the apex of the dome does not satisfy the Lipschitz condition in those configurations - see above.)

* A less technical work by the same author is Vital Instability: Life and Free Will in Physics and Physiology, 1860–1880, 2015 (PDF).

Van Strien writes that "nineteenth century determinism was primarily taken to be a presupposition of theories in physics." Boussinesq was something of an exception in that he took the nonunique solutions that he and others discovered seriously. (He acknowledged that his dome was not a realistic example, taking it more as a proof-of-concept; rather, he thought that actual indeterminism would be found in some hypothetical force fields produced by interactions between atoms, which he showed to be mathematically similar to the dome equations.) Boussinesq believed that such branching solutions of mechanical equations provided a way out of Laplacian determinism, giving the opportunity for life forces and individual free will to do their own thing.

But by and large, Van Strien says, such mathematical anomalies were not taken as indications of something real: in cases where solutions to equations of motion were nonunique, one just needed to pick the physical solution and discard the unphysical ones. This is probably why these earlier discoveries did not make much of an impression at the time and have since been partly forgotten, so that Norton's paper, when it came out, caused a bit of a scandal.

From my own modest experience, such attitudes towards mathematical models still prevail, at least in traditional scientific education and practice. It is not uncommon for a model or a mathematical technique to produce an unphysical artefact, such as multiple solutions where a single solution is expected, a negative quantity where only positive quantities make sense, forces in an empty space in addition to forces in a medium, etc. Scientists and engineers mostly treat their models pragmatically, as useful tools; they don't necessarily think of them as a perfect match to the structure of the Universe. It is only when a model is regarded as fundamental that some begin to take the math really seriously - all of it, not just the pragmatically relevant parts. So that if the model turns out to be mathematically indeterministic, even in an infinitesimal and empirically inaccessible portion of its domain, this is thought to have important metaphysical implications.

Interpretations of quantum mechanics are another example of such mathematical "fundamentalism". Proponents of the Everett "many worlds" interpretation, such as cosmologist Sean Carroll, say (approvingly) that all their preferred interpretation does is "take the math seriously." Indeed, the "worlds" of the MWI are a straightforward interpretation of the branching of the quantum wavefunction. (Full disclosure: I myself am sympathetic to the MWI, to the extent to which I can understand it.)

Are "fundamentalists" right? Can a really good theory give us warrant to take all of its implications seriously? -

How I Learned to Stop Worrying and Love Climate Change"Nuclear fusion is always 30 years away," as they say. This indeed has been the case for the last half-century if not more.

LENR is just a rebranding of cold fusion. It is largely the province of kooks and scammers. -

Causality conundrum: did it fall or was it pushed?And then, of course, as SophistiCat astutely concluded (and I didn't conclude at the time) the ideal case might be indeterminate because of the different ways in which the limiting case of a perfectly rigid body could be approached. — Pierre-Normand

Thanks, but I cannot take credit for what I didn't actually say :) The rigid beam case is indeterminate in the sense that multiple solutions are consistent with the given conditions. I did think that there may be a way to approach a different solution (with non-zero lateral forces) by an alternative path of idealization, perhaps by varying something other than elasticity. My intuition was primed by the 0/0 analogy, in which a parallel strategy of approaching the limit from some unproblematic starting point would clearly be fallacious. But I couldn't think of anything at the moment, so I didn't mention it.

I think I have such an example now though.

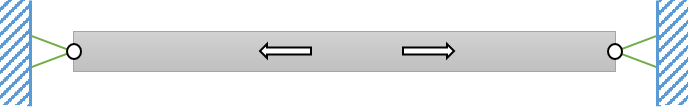

Suppose that a pair of lateral forces of equal magnitude but opposite directions were applied to the beam. This would make no difference to the original rigid beam/wall system: the forces would balance each other, thus ensuring equilibrium, and everything else would be the same, since the forces wouldn't produce any strains in the beam; the forces would thus be merely imaginary, since they wouldn't make any physical difference. However, if we were to approach the rigid limit by starting from a finite elasticity coefficient and then taking it to infinity, there would be a finite lateral force acting on the walls all the way to the limit. -

Causality conundrum: did it fall or was it pushed?Korolev actually does a similar limiting analysis of the dome itself, showing that for any finite elasticity (assuming a perfectly elastic material of the dome) there is a unique solution for a mass at the apex: the mass remains stationary; but when the elasticity coefficient is taken to infinity, i.e. the dome becomes perfectly rigid, the situation changes catastrophically and all of a sudden there is no longer a unique solution.

In the case of the beam in Norton's paper, in order to definitely answer the question we need to find the state of stress inside the beam, which for normal bodies is fixed by boundary conditions. The problem with infinite stiffness is that constitutive equations are singular, and therefore, formally at least, the same boundary conditions are consistent with any number of stress distributions in the beam. This happens for the same reason that division by zero is undefined: any solution fits. In the simple uniaxial case, the strain is related to the stress by the equation $\varepsilon = \sigma / E$, where $\varepsilon$ is the strain, $\sigma$ is the stress, and $E$ is the elastic modulus. When E is infinite and 1/E is zero, the strain is zero and any stress is a valid solution to the equation. Boundary conditions in an extended rigid body will fix (some of the) stresses at the boundary, but not anywhere else. Or so it would seem.
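To spell out the singular limit in symbols, here is a minimal sketch for the uniaxial case only. The notation is an assumption for illustration: $\varepsilon$ for strain, $\sigma$ for stress, $E$ for the elastic modulus.

```latex
\[
\varepsilon = \frac{\sigma}{E}
\quad\Longleftrightarrow\quad
\sigma = E\,\varepsilon
\qquad (0 < E < \infty),
\]
\[
\frac{1}{E} \to 0
\;\Longrightarrow\;
\varepsilon = 0 \cdot \sigma = 0
\quad \text{for every finite } \sigma .
\]
```

For finite $E$ a given strain picks out exactly one stress. In the rigid limit the strain is zero whatever the stress, so the equation no longer constrains $\sigma$, in the same way that $0 \cdot x = 0$ does not determine $x$.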

However, what meaning do stresses have in an infinitely stiff body? Because there can be no displacements, there is no action. Stresses are meaningless. Suppose that instead of perfectly rigid and stationary supports, the rigid beam in Norton's paper was suspended between elastic walls. Whereas in the original problem the entire system, including the supports, was infinitely stiff and admitted no displacements, now elastic walls would experience the action of the forces exerted by the ends of the beam. Displacements would occur, energy would be expended, work would be done. The problem becomes physical, and physics requires energy conservation, which immediately yields the solution to the problem.

So I wouldn't worry too much about these singular limits; just as in the case of the division by zero, no solution makes more sense than any other, they are all meaningless. -

Causality conundrum: did it fall or was it pushed?In Determinism: what we have learned and what we still don't know (2005) John Earman "survey[s] the implications of the theories of modern physics for the doctrine of determinism" (see also Earman 2007 and 2008 for a more technical analysis). When it comes to Newtonian mechanics he gives several indeterministic scenarios that are permitted under the theory, including Norton's dome from the OP. Another well-known example is that of the "space invaders":

Certain configurations of as few as 5 gravitating, non-colliding point particles can lead to one particle accelerating without bound, acquiring an infinite speed in finite time. The time-reverse of this scenario implies that a particle can just appear out of nowhere, its appearance not entailed by a preceding state of the world, thus violating determinism.

A number of such determinism-violating scenarios for Newtonian particles have been discovered, though most of them involve infinite speeds, infinitely deep gravitational wells of point masses, contrived force fields, and other physically contentious premises.

Norton's scenario is interesting in that it presents an intuitively plausible setup that does not involve the sort of singularities, infinities or supertasks that would be relatively easy to dismiss as unphysical. has already homed in on one suspect feature of the setup, which is the non-smooth, non-analytic shape of the surface and the displacement path of the ball. Alexandre Korolev in Indeterminism, Asymptotic Reasoning, and Time Irreversibility in Classical Physics (2006) identifies a weaker geometric constraint than that of smoothness or analyticity, which is Lipschitz continuity:

A function $f$ is called Lipschitz continuous if there exists a positive real constant $K$ such that, for all real $x_1$ and $x_2$, $|f(x_1) - f(x_2)| \le K\,|x_1 - x_2|$. A Lipschitz-continuous function is continuous, but not necessarily smooth. Intuitively, the Lipschitz condition puts a finite limit on the function's rate of change.

Korolev shows that violations of Lipschitz continuity lead to branching solutions not only in the case of Norton's dome, but in other scenarios as well, and in the same spirit as Andrew above, he proposes that the Lipschitz condition should be considered a constitutive feature of classical mechanics in order to avoid, as he puts it, "physically impossible solutions that have no serious metaphysical import."
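For anyone who wants to see the branching concretely, here is a small numerical sketch. It assumes the dome's radial equation of motion in the rescaled units of Norton's standard presentation, r'' = sqrt(r); that equation is brought in for illustration and is not stated in this thread, and all function names are made up. The code checks that a mass resting at the apex until an arbitrary time T and then sliding off along r = (t - T)^4 / 144 satisfies the same equation as the mass that rests forever, and that the difference quotient of sqrt(r) at r = 0 grows without bound, which is the Lipschitz violation.

```python
import math

def r_branch(t, T):
    """Branching solution: at rest until time T, then r = (t - T)^4 / 144."""
    return 0.0 if t <= T else (t - T) ** 4 / 144.0

def r_branch_accel(t, T):
    """Second time derivative of r_branch, worked out by hand."""
    return 0.0 if t <= T else (t - T) ** 2 / 12.0

def residual(t, T):
    """How far r_branch is from satisfying r'' = sqrt(r) at time t."""
    return abs(r_branch_accel(t, T) - math.sqrt(r_branch(t, T)))

def sqrt_difference_quotient(r):
    """|sqrt(r) - sqrt(0)| / |r - 0|; a Lipschitz constant K would cap this."""
    return math.sqrt(r) / r

T = 2.0  # arbitrary departure time (any T >= 0 works equally well)
times = [0.0, 1.0, 2.0, 2.5, 3.0, 5.0]
max_residual = max(residual(t, T) for t in times)

# Evaluate the quotient ever closer to the apex r = 0.
quotients = [sqrt_difference_quotient(10.0 ** -k) for k in (2, 4, 6, 8)]

print("max residual of r'' - sqrt(r):", max_residual)
print("difference quotients near r = 0:", quotients)
```

The trivial solution r(t) = 0 satisfies the same equation with the same initial conditions, so every choice of T gives a distinct valid trajectory. Since the quotient equals 1/sqrt(r), no finite K can bound it near the apex.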

Ironically, as Samuel Fletcher notes in What Counts as a Newtonian System? The View from Norton’s Dome (2011), Korolev's own example of non-Lipschitz velocities in fluid dynamics is instrumental to productive research in turbulence modeling, "one whose practitioners would be loathe to abandon on account of Norton’s dome."

It seems to me that Earman oversells his point when he writes that "the fault modes of determinism in classical physics are so numerous and various as to make determinism in this context seem rather surprising." I like Fletcher's philosophical analysis, whose major point is that there is no uniquely correct formulation of classical mechanics, and that different formulations are "appropriate and useful for different purposes:"

As soon as one specifies which class of mathematical models one refers to by “classical mechanics,” one can unambiguously formulate and perhaps answer the question of determinism as a precise mathematical statement. But, I emphasize, there is no a priori reason to choose a sole one among these. In practice, the choice of a particular formulation of classical mechanics will depend largely on pragmatic factors like what one is trying to do with the theory. — Fletcher -

The Torquemada problemYes indeed. They are abrogating their moral duty to the letter of the law. Which makes them less human, allegedly. — unenlightened

Not exercising a quintessentially human faculty in the context of performing a specific task does not make you "less than human," in and of itself - it all depends on context. Following printed instructions while assembling an IKEA table won't land you in Nuremberg. What is morally suspect is abrogating moral duty when exercising moral duty is called for (and yes, that can happen in a legal context as well). -

The Torquemada problemDoes this apply to judges who refer to statute, convention, constitution, case law, etc? — unenlightened

When judges defer to law, they are not exercising their human ethical judgment (at least in theory, which I take to be the context of your hypothetical question). -

Do you believe there can be an Actual InfiniteNo, it's not just a semi-infinite number line, because that omits the temporal context. Time does not exist all at once, as does an abstract number line.

Consider the future: it doesn't exist. — Relativist

Neither does the past, whether finite or infinite, according to the A theory of time, which you brought up for no apparent reason. The A theory of time is a red herring; this metaphysical position is irrelevant to the argument that you are trying to make, which is:

The present is the END of a journey of all prior days. That would be the mirror image of reaching a day infinitely far into the future, which cannot happen. A temporal process cannot reach TO infinity, and neither can a temporal process reach FROM an infinity. — Relativist

We've been over this already: this is the same question-begging argument that you made at the beginning of the discussion. The reason a temporal process will never reach infinitely far into the future is that there is nothing for it to reach: a process can start at point A and reach point B, but if there is no point B, then talk about reaching something doesn't make sense. Turn this around, and you get the same thing: you can talk about reaching the present from some point in the past, but if there is no starting point (ex hypothesi), the talk about reaching from somewhere doesn't make sense, unless you implicitly assume your conclusion (that time has a starting point in the past).

Look, you don't have an argument here; you are just stating and restating your conclusion in slightly different ways. You aren't the first to fight this hopeless fight, of course: the a priori denial of actual infinities is as old as Aristotle; Kant tried to make an argument very similar to yours, and others have followed in his footsteps, including most recently theologian W. L. Craig, who employs a raft of such arguments as part of his Kalam cosmological argument for the existence of God. But nowadays these arguments do not enjoy much support among philosophers (see for instance Popper's critique, if you can get it, or any number of more recent articles).

As for physicists and cosmologists, to whom you have appealed as well, most don't even take such a priori arguments seriously, though a few have condescended to offer a critique (such as the late great John Bell, back in 1979, responding to the same article as Popper above). As far as cosmologists are concerned, the question is undeniably empirical, and at this point entirely open-ended; see, for example, this brief survey and the following comments from Luke Barnes (who is somewhat sympathetic to your conclusion). -

Do you believe there can be an Actual InfiniteNo, I outlined a mapping of a possible finite past, and pointed out there are cosmological models based on a finite past (Hawking, Carroll, and Vilenkin to name 3). I am aware of no such conceptual mapping for an infinite past. — Relativist

Your "conceptual mapping" of a finite past was a semi-infinite number line. You say you cannot think of a corresponding "conceptual mapping" for an infinite past? Really?

I am sorry, but this isn't worth my time. -

Do you believe there can be an Actual InfiniteYes, conceivability is subjective, but conceptions can be intersubjectively shared, analyzed, and discussed. — Relativist

Conceivability, the way you are using the word, is nothing more than an attitude, an intuition, a gut feeling. While different individuals can hold such attitudes in common, it is not the sort of concept that can be described and transmitted by a rational argument. I, for instance, do not find the beginning of time to be any easier to conceive than an infinite past, and I doubt that you could do much to change my attitude.

But then I do not make much of such attitudes. If one holds time to be an objective feature of the physical world, rather than a subjective attitude, then what does it matter if an infinite or a finite past does not sit well with one's intuitions? We are animals with a lifespan of a few tens of years; we can hardly get to grips with timespans of thousands, let alone billions of years. If we were to trust our intuitions on this, most of us would have had to be Young-Earth creationists, right? But then what are we to do with the powerful intuition that at any moment there must always be a before? Or, for those having trouble conceiving of an infinite space, what are they to do with Lucretius and his spear? Intuitions just aren't a good guide to the truth in this case. -

Are we doomed to discuss "free will" and "determinism" forever?Yes, we are. On one message board that I once frequented (now defunct), which wasn't even specifically for philosophy, a subsection within its only philosophy section was created just for free will discussions.

Unlike, say, Kierkegaard's esthetics or structural realism, "free will" is the sort of subject where most people feel they can jump in without any learning or reflection. Most free will discussions are therefore trivial and confused, with people talking past each other, without even stopping to think about what free will is, or why they think of it the way they do. And I am speaking as someone whose attitude towards this subject has changed - an all too rare occurrence - from learning more about it.

It would go a long way towards making such discussions more worthwhile if participants were at least somewhat aware of the history of the subject; its relation to freedom, voluntary action, agency, autonomy, responsibility, control, determination; the role it plays in law, ethics, psychology, sociology. There is, of course, massive literature on free will in philosophy, including experimental philosophy (yes, that's a thing). -

Do you believe there can be an Actual InfiniteMy mistake, your point is well taken. It should be said (somewhat contradicting what I said before) that even in something as seemingly dry and precise and abstract as mathematics, the actual process of coming up with a mathematical theory may start with a somewhat vague, intuitive idea of what it is that you want to see, and then you evaluate your formal construction against that idea. Thus, we have intuitive, pretheoretical, or just pragmatic ideas of what a set should be, what an arithmetic should be, and then we axiomatize those ideas, giving them definite, precise form (and there can be more than one way to do that, some better, some worse, some just offering different possibilities).

-

Do you believe there can be an Actual InfiniteBut an infinite past still entails an infinite series that has been completed; that is the dilemma. Consider how we conceive an infinite future: it is an unending process of one day moving to the next: it is the incomplete process that is the potential infinity. The past entails a completed process, and it's inconceivable how an infinity can be completed. — Relativist

Well, inconceivable is a subjective assessment, it's a far cry from being provably impossible. If you just want to say that you don't believe the past can be infinite because an infinity of elapsed time seems inconceivable to you, you are welcome to it. Does an absolute beginning of time, such that right at the beginning there is no before, seem more conceivable to you?

Mathematical entities are abstractions, they have only hypothetical existence. — Relativist

That's neither here nor there, because this is true for all our thoughts, concepts, imaginings. When you think of a dog, even when the thought is prompted by looking at one, your thought is not the dog - it's an idea in your head, an abstraction of a dog.

How is this different from the infinity of mathematical operation of dividing 3 into 1? Just because it equates to an infinity of 3's after the decimal doesn't imply infinity exists in the world. — Relativist

You mean dividing 1 into 3, right? Exactly, very good example. You don't say that for there to be thirds we need to be able to write out all the decimal digits of 1/3, right? That would be an arbitrary, unjustified requirement. So why do you maintain that for there to be a "completed" infinite sequence we need to be able to count out each individual element of the sequence? Does its existence somehow depend on us speaking or thinking it into existence, one element at a time? Bottom line, you can't just throw out such arbitrary requirements, you need to justify them. -

Do you believe there can be an Actual InfiniteIs there a theory of Absolute infinity? Please tell me if there is!!! — ssu

OK, so you make a distinction between something you call "Absolute" infinity and any other sort of infinity. I don't know what that difference is, and it doesn't look like you have a very definite idea either. When you want to find out whether something exists, you don't start by giving it a name, you start by giving it an operational definition, laying down requirements that need to be satisfied for anything to be recognized as that thing. It's no use just saying: "Well, it's Absolute, you know..." -

Do you believe there can be an Actual InfiniteI don't see how an instantiated infinity could ever be established empirically since we can't count to infinity. — Relativist

The same way we can empirically establish anything at all. We don't necessarily need to count to infinity for that, just as we don't need to write out all the digits of pi in order to empirically establish the harmonic oscillator solution. If a model that makes use of infinities provides a good fit for many observations, is parsimonious, productive, fits in with other successful models, etc. then we consider it to be empirically established, infinities and all.

On the other hand, I think in some cases, infinity can be ruled out. For example: the past cannot be infinite. Here's my argument:

1. It is not possible for a series formed by successive addition to be both infinite and completed.

2. The temporal series of (past) events is formed by successive addition.

3. The temporal series of past events is completed (by the present).

4. (Hence) It is not possible for the temporal series of past events to be infinite.

5. (Hence) The temporal series of past events is finite. — Relativist

"Successive addition" implies a starting point, which obviously precludes an infinite past. Your argument simply begs the question. An infinite past is a past that does not have a starting point.

I myself believe Absolute Infinity as a mathematical entity exists. It's just a personal hunch that it is so. — ssu

You don't need any hunches in order to believe that a mathematical entity exists: all you need is a mathematical theory that says that such and such entity is infinite - and such mathematics exists, there is no question about that. -

Do you believe there can be an Actual InfiniteIt's discrete and not a continuum at all. — LD Saunders

What is? -

Do you believe there can be an Actual InfiniteThere's no "constructing" here, space is just infinitely divisible. There's no such thing as a smallest possible distance. — MindForged

Well, actually in physics, space does not seem to be infinitely divisible. — LD Saunders

In today's physics space and time are usually modeled as a continuum. This is true for classical mechanics and quantum mechanics and for many other theories. This does not mean that we can say something definitively about the ultimate nature of space and time, or that it even makes sense to talk about such ultimate nature, as if it were uniquely defined. Conservatively, the most we can say is that current physical theories are very effective, and that gives us a good reason for thinking of space and time as a continuum and no good reason for thinking otherwise.

This doesn't mean that future physical theories will not quantize space and time. Some think that quantum physics points in that direction, although to repeat, current theory makes space and time a continuum. And an unbounded (infinite) one at that in all but some cosmological models. Speaking of which, those cosmological models with a finite or semi-infinite spacetime are so violently counterintuitive that I very much doubt that most "infinity skeptics" would be more satisfied with them than with the traditional Euclidean infinite space and time. -

Self-explanatory factsDennis, if you really believe that philosophical theories are uniquely derived from experience with unassailable reasoning, and that this can be done for Aristotelian philosophy in just a couple of paragraphs, then you are very naive. Anyway, I do not wish to derail this discussion any further.

-

Do you believe there can be an Actual InfiniteThat's paradoxical. — frank

Not paradoxical, just undefined. Let's tweak the story:

- Imagine Donald Trump

- You notice he’s counting (you can tell because he is muttering and holding up his fingers). You ask how long and he says ‘I’ve been counting for ten minutes’

- What number is he on?

So put this way, this is a pretty dumb counterexample, but there are actually many puzzles involving infinities where you might think there ought to be a definite answer, but there isn't, such as Thomson's Lamp for example. There are also genuine paradoxes, where an imaginary setup that seems like it ought to be possible, in principle, leads to contradictions. But in each of these cases you have an option to reconsider your starting assumptions: Are you sure that there must be a unique answer? How do you know? Are you sure the setup itself is coherent? How do you know? -

Self-explanatory factsIt is amazing how taste can trump analysis. — Dfpolis

Well, when it comes to philosophy, at the end of the day it does come down to "taste;" there's no getting around it, unless you believe that you can derive an entire philosophy completely a priori, without any extrarational commitments (which would be an exceptionally crankish thing to believe).

But that's not really why I don't accept your argumentation in this instance. When making an argument one must start from some common ground, and Aristotelian or Scholastic metaphysics isn't such a common ground between us. If you absolutely have to use that framework, then you would have to start by justifying that entire framework to me, or at least its relevant parts. And that is just too unwieldy a task for a forum discussion on an unrelated topic. -

Do you believe there can be an Actual InfiniteThe resolution of singularities is in part due to the precedence of them turning out to be the result of mistakes in our models. — MindForged

Singularities are nasty beasts, and there's a better reason for eschewing them than past experience: singularities blow up your model in the same way that division by zero does (division by zero is one instance of singularity); they produce logical contradictions.

Of course, singularities are not the only sort of infinities that we deal with. As you said, if we use modern mathematical apparatus, then it is exceptionally hard to get rid of all infinities. A few have tried and keep trying, but it's a quixotic battle.

As for the objection "it's just math, it's not real," then my next question is: what is real? Where and why do you draw the boundary between your conceptual mapping of the world and what you think the world really is? Is there even any sense in drawing such a distinction? Are three apples really three, or just mathematically three? If they are not really three, then what are they really? -

Self-explanatory factsOK, I see now that your position is deeply embedded in Aristotelian metaphysics, which holds no attraction for me. Thanks for taking the trouble to explain it though.

-

The Death of LiteratureNobody expects Quentin Tarantino or Ryan Gosling to have anything particularly interesting to say about the world, but they do expect that of JM Coetzee and Hilary Mantel. — andrewk

The age of the serious writer as a public intellectual carrying wisdom and moral authority is even shorter than the age of print - that started roughly in the mid-19th century in the Western world, and is on the wane now. I think you are wrong about Tarantino and Gosling, given our celebrity culture. -

The Death of LiteratureThe 19th century was the golden age of print (or more precisely, from the late 18th century to the early 20th), and, coincidentally or not, that is also when the novel became "serious literature." By print I mean not so much the physical medium, but what has come to be associated with it: the relatively long, sequential read, which includes "literature," as well as non-fiction books and magazine and newspaper articles of nontrivial size. It is contrasted with audio-visual and multimedia entertainment, reference, social media, Internet browsing, forums like this, etc. (The latter two are on the way out, by the way.)

So literature, or print, as we conceive of it now, is actually a relatively recent and brief phase in the history of human civilization. Already, if we group together all the new forms that came to prominence in the 20th-21st centuries, this new age is comparable in length to the age of print. -

Self-explanatory factsHow can something essentially inadequate to a task perform the task? — Dfpolis

Explain, please. -

Self-explanatory factsNow, my question is the following : how would you attack this argument, in a way other than denying (P2), i.e. that there exists a series of all grounded facts ? — Philarete

Would you consider just dropping the PSR? It's difficult for me to see what the attraction of an unrestricted PSR is, Della Rocca's arguments notwithstanding. -

Why do athiests have Morals and Ethics?Why do athiests have Morals and Ethics? — AwonderingSoul

Have you tried Google? I just highlighted "Why do athiests have Morals" in your title, right-clicked, and selected the option to search Google. (You misspelled "atheists," of course, but Google is clever enough to correct the misspelling.) The very first page of results contains several responses from card-carrying (literally!) Atheists. -

Possible Worlds TalkMy understanding is along the lines of what @Snakes Alive said (I think). For a modal realist like Lewis possible worlds serve as a reductive explanation of (one type of) modality, but that is a minority view. For the rest, possible worlds talk is just that - talk. It's a metaphorical interpretation of (some) modalities. It neither explains (in the way Lewis's realism does) nor replaces modality - it's just an informal and intuitive language. Whenever possible worlds language is used, you can replace it with the appropriate formalism.

-

Knowledge without JTBWell, that is excellent news. Tell me, do you believe JTB is the best description for knowledge in a non-general sense? I know you can justify it, but I'm curious as to whether you believe it. — Cheshire

I am ambivalent about it. The advice that I gave you about seeing how it works in a philosophical context is the advice I would take myself. I haven't read enough, haven't burrowed deep enough into surrounding issues (partly because I didn't find them interesting) to make a competent judgement.

-

Knowledge without JTBI'm not so much interested as how its used 'in language', but rather how it's used in reality. — Cheshire

Knowledge is a word; language use is its reality. It's not like there is some celestial dictionary in which the "real" meanings of words are inscribed once and for all. Knowledge is what we say it is. So one way to approach the question is to do as linguists do when they compile a dictionary: see how the word is used "in the wild." Philosophers and other specialists extend the natural language in coining their own terms, which they can do in ways that narrow the colloquial meaning or diverge from it. However, it is considered to be a bad and misleading practice to diverge too far, in effect creating homonyms.

While @javra attempted a conceptual justification of the JTB knowledge, I'll stick to natural language for a moment. How much does the JTB knowledge differ from common sense knowledge? One thing you can say about the JTB definition is that, at first glance, it does not appear to be an operational definition (this parallels both your critique and @javra's notes above). If you wanted to sort various propositions into knowledge and not knowledge, you could plausibly use the first two of the JTB criteria (setting aside for a moment legitimate concerns about those two), but you cannot apply the criterion of Truth, over and above the criterion of Justification. For how do you decide whether a proposition is true, if not by coming up with a good justification for holding it true?

But think about what happens when we evaluate beliefs that we held in the past, or beliefs that are held by other people. They are Beliefs, and they could be Justified as well as they possibly could be, given the agent's circumstances at the time. And yet, when you consider those beliefs from your present perspective, you could judge the Truth of those beliefs differently. And since it would not be in keeping with the common sense to call false beliefs "knowledge," it seems that there is, after all, a place for the Truth criterion.

And before you object, I mean to say especially philosophers, when I say people. My primary reason for making JTB a target is just because it's so well guarded from criticism and taught as if were a law of thought; when as Gettier showed in nearly satirical fashion the emperor has no cloths. — Cheshire

Well, how familiar are you with contemporary epistemology? Even from a very superficial look, it is hard to see where you got this idea - see, for instance, the SEP article "The Analysis of Knowledge." -

Knowledge without JTBI greatly appreciate the charitable read and I agree. So long as JTB isn't meant to actually describe the real world and is only maintained for the purpose of an exercise I suppose I no longer object. Thank you for the reference to Gettier; I'm aware my arguments or causal assertions must appear quite naive.

Do you think you could produce an example of these two different types of knowledge? The general and the technical?

I suppose I'm agreeing with Gettier in a sense, but avoiding his objection. He's saying hey your system doesn't work because it can produce mistaken knowledge. I'm saying some knowledge is mistaken. — Cheshire

Yes, Gettier's counterexamples are where all three of the JTB criteria seem to be satisfied, and yet the result doesn't meet our intuitive, pre-analytical notion of knowledge. Your examples are where our intuitive notion of knowledge does not meet the JTB criteria. How damaging are such attacks? That totally depends on the context.

Like I said, if the goal was to just give an accurate account of how the word "knowledge" is used in the language, you probably can't do better than a good dictionary, together with an acknowledgement that such informal usage is imprecise and will almost inevitably run into difficulties with edge cases like Gettier's.

But philosophers define their terms in order to put them to use in their investigations, so I think the best way to approach the issue is not to latch onto one bit taken out of context, but see what work that JTB idea does in actual philosophical works. Maybe the JTB scheme is flawed because it doesn't capture something essential about knowledge, or maybe the examples that you give just aren't relevant to what philosophers are trying to do. I haven't done much reading in this area myself - I am just giving what I hope is sensible general advice on how to proceed. -

Knowledge without JTBThe theory of knowledge that serves as the foundation of philosophy is flawed. — Cheshire

This reminds me of Russell's famous conundrum: "The present king of France is bald."

Anyway, the most charitable reading of your post suggests that you are dissatisfied with the JTB theory of knowledge because it does not fully reflect the way the word "knowledge" is used in the natural language (English language, at least). This would have been a valid objection if an English language dictionary gave "justified true belief" as the only definition of the word "knowledge." Like many words, the meaning of "knowledge" as exemplified by actual use is heterogeneous and will not be captured by a single, compact definition. But JTB was not meant to serve as a general definition - it was to be a technical definition for use in analytical epistemology. So we can talk about whether it is a useful definition (and many have challenged it before you, most famously, Gettier). -

Deities and Objective TruthsGod states that killing is wrong, Gob states that it is not. — Joe Salem

I think that this controversy should be resolved in the traditional way: single combat. -

Law of IdentityYou are confusing terms of language, or written symbols, with entities that are designated by them. You have essentially reproduced the confused argument of the OP.

-

In defence of Aquinas’ Argument From Degree for the existence of GodI was specifically addressing OP's understanding and presentation of the argument, and contrasting it with Aquinas's. I agree with you and others that Scholastic philosophy carries with it a load of metaphysical baggage that makes it a non-starter for many. And that, if we want to address that philosophy - whether to uphold it or to dispute it - we need to take it on its own terms (as best as we can make out those terms).

-

The argument of scientific progressThe explanandum of a cosmological argument is not the sum of the physical features of the first cause. For that, cosmological arguments are usually content to defer to science. If anything, some of these arguments present an overly confident view of science. For example, proponents of the Kalam cosmological argument, such as W. L. Craig, insist that cosmologists have already settled the scientific question of whether the universe has a beginning in time (which he identifies with the "Big Bang"), whereas in reality the question remains open.

Nothing that future science could add to its picture of the early universe could address the problem that cosmological arguments claim to raise and resolve. The only resolution that could satisfy proponents of a cosmological argument is one that proves the first cause to be necessary in the appropriate sense (depending on the type of the argument). But such a resolution could hardly be expected from science. Science tells us what is (the brute fact), not what must be. Only logic or metaphysics can claim to do the latter.

At this point I recommend that you actually take a closer look at these arguments, because I get the impression that you have a very vague idea of what they are saying. The SEP has an extensive introduction: Cosmological Argument.

SophistiCat

- © 2026 The Philosophy Forum